Testing Overview

CS 4311

Frank Tsui, Orlando Karam, and Barbara Bernal, Essentials of Software Engineering, 3rd edition, Jones & Bartlett Learning. Sections 10.1-10.2.

Hans van Vliet, Software Engineering: Principles and Practice, 3rd edition, John Wiley & Sons, 2008. Chapter 13.

Outline

V&V

Definitions of V&V terms

V&V and software lifecycle

Sample techniques

Testing

Basics of testing

Levels of software testing

Sample testing techniques

Verification and Validation (V&V)

Textbook use of term “Testing”

General/wider sense to mean V&V

Q: What is V&V in software testing?

Groups of 2

What? Why? Who? Against what? When? How?

5 minutes

What is V&V?

Different use by different people, e.g.,

Formal vs. informal and static vs. dynamic

Verification

Evaluation of an object to demonstrate that it meets its specification. (Did we build the system right?)

Evaluation of the work product of a development phase to determine whether the product satisfies the conditions imposed at the start of the phase.

Validation

Evaluation of an object to demonstrate that it meets the customer’s expectations. (Did we build the right system?)

V&V and Software Lifecycle

Applied throughout the software lifecycle, e.g., the V-model

Requirements Engineering

Determine general test strategy/plan (techniques, criteria, team)

Test requirements specification

Completeness

Consistency

Feasibility (functional, performance requirements)

Testability (specific; unambiguous; quantitative; traceable)

Generate acceptance/validation testing data

Design

Determine system and integration test strategy

Assess/test the design

Completeness

Consistency

Handling scenarios

Traceability (to and from)

Design walkthrough, inspection

Implementation and Maintenance

Implementation

Determine unit test strategy

Techniques (static vs. dynamic)

Tools, with bells and whistles (driver/harness, stub)

Maintenance

Determine regression test strategy

Documentation maintenance (vital!)
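The driver/harness and stub named under Implementation can be sketched as follows. This is a minimal illustration rather than anything taken from the slides: `total_price`, `tax_rate_stub`, and `run_driver` are hypothetical names. The stub stands in for a component the unit depends on, and the driver calls the unit with chosen inputs and checks the results.

```python
# Hypothetical unit under test: it depends on a tax-rate lookup
# that may not be implemented yet.
def total_price(amount, region, tax_rate_lookup):
    """Return amount plus tax, using the supplied lookup function."""
    return round(amount * (1 + tax_rate_lookup(region)), 2)


# Stub: replaces the real lookup with a fixed, predictable answer.
def tax_rate_stub(region):
    return 0.10


# Driver (test harness): calls the unit with chosen inputs and
# compares the actual output against the expected output.
def run_driver():
    cases = [(100.0, "TX", 110.0), (0.0, "TX", 0.0), (19.99, "NM", 21.99)]
    results = []
    for amount, region, expected in cases:
        actual = total_price(amount, region, tax_rate_stub)
        results.append((amount, region, actual == expected))
    return results


if __name__ == "__main__":
    for amount, region, passed in run_driver():
        print(amount, region, "pass" if passed else "FAIL")
```

Keeping the same driver and cases around after a change is one simple way to start a regression test set during maintenance.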

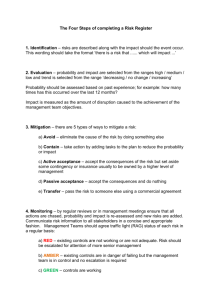

Hierarchy of V&V Techniques

V&V

Dynamic Technique

Testing in narrow sense

Symbolic Execution

Model Checking

Complementary

Static Technique

Formal Analysis

Static Analysis

Proof

Informal Analysis

Walkthrough

Inspection

Reading

Definitions of V&V Terms

“Correct” program and specification

Program matches its specification

Specification matches the client’s intent

Error (a.k.a. mistake)

A human activity that leads to the creation of a fault

A human error results in a fault which may, at runtime, result in a failure

Fault (a.k.a. bug)

The physical manifestation of an error that may result in a failure

A discrepancy between what something should contain (in order for failure to be impossible) and what it does contain

Failure (a.k.a. symptom, problem, incident)

Observable misbehavior

Actual output does not match the expected output

Can only happen when a thing is being used
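The chain from error to fault to failure can be made concrete with a small sketch. The function `largest` and its specification ("return the largest value in a non-empty list") are hypothetical, chosen only for illustration. The error is the programmer's assumption that all values are positive; the fault is the resulting line of code; the failure appears only for certain inputs at run time.

```python
# Hypothetical specification: return the largest value in a non-empty list.
def largest(values):
    # Fault: the search starts from 0 instead of values[0], so the
    # code is wrong for lists that contain only negative numbers.
    best = 0
    for v in values:
        if v > best:
            best = v
    return best


def observe(values, expected):
    """Run the function and report whether a failure was observed."""
    actual = largest(values)
    return "ok" if actual == expected else "failure"


if __name__ == "__main__":
    print(observe([3, 9, 4], 9))      # fault is present, no failure observed
    print(observe([-3, -9, -4], -3))  # same fault, now a failure is observed
```

The first call shows why a passing test does not imply the absence of a fault: the faulty line executes, but the output still matches the expected output.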

Definitions

Fault identification and correction

Process of determining what fault caused a failure

Process of changing a system to remove a fault

Debugging

The act of finding and fixing program errors

Testing

The act of designing, debugging, and executing tests

Test case and test set

A particular set of inputs and the expected output

A finite set of test cases working together with the same purpose

Test oracle

Any means used to predict the outcome of a test
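The terms test case, test set, and test oracle can be sketched in code. Everything named here (`Case`, `oracle`, `square`, `run`, `TEST_SET`) is hypothetical. The oracle is assumed to be trustworthy and is deliberately computed in a different way from the program under test.

```python
from typing import Callable, List, NamedTuple


class Case(NamedTuple):
    """One test case: a particular input plus its expected output."""
    value: int
    expected: int


def oracle(x: int) -> int:
    """Test oracle: predicts the square of x by repeated addition."""
    return sum(abs(x) for _ in range(abs(x)))


def square(x: int) -> int:
    """Hypothetical program under test."""
    return x * x


def run(test_set: List[Case], program: Callable[[int], int]) -> List[bool]:
    """Execute every test case and record whether actual equals expected."""
    return [program(tc.value) == tc.expected for tc in test_set]


# Test set: a finite collection of test cases with one shared purpose.
TEST_SET = [Case(x, oracle(x)) for x in (-3, 0, 1, 12)]

if __name__ == "__main__":
    print(run(TEST_SET, square))
```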

Where Do the Errors Come From?

Q: What kinds of errors? Who?

Groups of 2

3 minutes

Where Do the Errors Come From?

Kinds of errors

Missing information

Wrong information/design/implementation

Extra information

Facts about errors

To err is human (but different people have different error rates).

Different studies indicate 30 to 85 errors per 1,000 lines of code. After extensive testing, 0.5 to 3 errors per 1,000 lines remain.

The longer an error goes undetected, the more costly it is to correct.

Types of Faults

List all the types and causes of faults: what can go wrong in the development process?

Groups of 2

3 minutes

Sample Types of Faults

Algorithmic: algorithm or logic does not produce the proper output for the given input

Syntax: improper use of language constructs

Computation (precision): formula’s implementation is wrong, or the result is not computed to the correct degree of accuracy

Documentation: documentation does not match what the program does

Stress (overload): data structures filled past capacity

Capacity: system’s performance is unacceptable as activity reaches its specified limit

Timing: code coordinating events is inadequate

Throughput: system does not perform at the speed required

Recovery: system does not behave correctly when a failure is encountered
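As one illustration of the computation (precision) category, the sketch below compares floating-point totals. The function names are hypothetical; `math.isclose` is the Python standard-library comparison with a tolerance. The first version contains a plausible precision fault: it expects exact equality from binary floating-point arithmetic.

```python
import math


def same_total_faulty(a, b, expected):
    # Fault: exact equality on binary floating-point values.
    return a + b == expected


def same_total(a, b, expected, rel_tol=1e-9):
    # Compares within a relative tolerance instead of exactly.
    return math.isclose(a + b, expected, rel_tol=rel_tol)


if __name__ == "__main__":
    print(same_total_faulty(0.1, 0.2, 0.3))  # False: 0.1 + 0.2 is not exactly 0.3
    print(same_total(0.1, 0.2, 0.3))         # True: equal within tolerance
```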

Sample Causes of Faults

Development phases: Requirements, System Design, Program Design, Program Implementation, Unit Testing, System Testing

Causes: incorrect or missing requirements; incorrect translation; incorrect design specification; incorrect design interpretation; incorrect documentation; incorrect semantics; incomplete testing; new faults introduced while correcting others

Sample V&V Techniques

Lifecycle phases: Requirements, Design, Implementation, Operation, Maintenance

Techniques: Reviews (walkthroughs/inspections), Testing, Model checking, Correctness proofs, Synthesis, Runtime monitoring

Question

How do you know your software works correctly?

Answer: Try it.

Example: I have a function, say f, of one integer input. I tried f(6). It returned 35.

Is my program correct?

Groups of 2

1 minute

Question

How do you know your software works correctly?

Answer: Try it.

Example: I have a function, say f, of one integer input. I tried f(6). It returned 35.

My function is supposed to compute x*6-1. Is it correct?

Is my program correct?

Groups of 2

1 minute
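The f(6) = 35 question can be explored with a short sketch. The three candidate implementations below are hypothetical; only the intended behavior, x*6-1, comes from the slide. All three return 35 for the input 6, so that single test cannot tell them apart.

```python
def spec(x):
    """Intended behavior from the slide: x*6 - 1."""
    return x * 6 - 1


def f_candidate_a(x):
    return x * 6 - 1      # matches the specification


def f_candidate_b(x):
    return x * x - 1      # hypothetical faulty version


def f_candidate_c(x):
    return 35             # hypothetical faulty version: a constant


def failing_inputs(f, inputs):
    """Return the inputs on which f disagrees with the specification."""
    return [x for x in inputs if f(x) != spec(x)]


if __name__ == "__main__":
    for f in (f_candidate_a, f_candidate_b, f_candidate_c):
        print(f.__name__, f(6), failing_inputs(f, range(8)))
```

Without the specification, the observation f(6) = 35 says nothing about correctness; with it, one passing test still leaves the faulty candidates undetected.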

Goals of Testing

I want to show that my program is correct; i.e., it produces the right answer for every input.

Q: Can we write tests to show this?

Groups of 2

1 minute

Goals of Testing

Can we prove a program is correct by testing?

Yes, if we can test it exhaustively: every combination of inputs in every environment.

How Long Will It Take?

Consider X+Y for 32-bit integers.

How many test cases are required?

How long will it take?

1 test per second:

1,000 tests per second:

1,000,000 per second:

Groups of 2

1 minute

How Long?

Consider X+Y for 32-bit integers.

How many test cases are required?

2^32 * 2^32 = 2^64 ≈ 1.8 * 10^19

(The universe is about 4 * 10^17 seconds old.)

How long will it take?

1 test per second: 580,000,000,000 years

1,000 tests per second: 580,000,000 years

1,000,000 tests per second: 580,000 years
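The arithmetic behind these figures can be checked with a few lines of code. This is a sketch under one stated assumption: a year is taken as 365.25 days. The function names are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumption: Julian year


def exhaustive_test_count(bits_per_input, num_inputs):
    """Number of input combinations for num_inputs values of the given width."""
    return (2 ** bits_per_input) ** num_inputs


def years_needed(test_count, tests_per_second):
    """Time to run test_count tests at a constant rate, in years."""
    return test_count / tests_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    count = exhaustive_test_count(32, 2)
    for rate in (1, 1_000, 1_000_000):
        print(f"{rate:>9,} tests/s: {years_needed(count, rate):.2e} years")
```

At one test per second the result is about 5.8 * 10^11 years, which matches the 580,000,000,000 years on the slide.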

Another Example

(Flow graph with nodes A, B, and C.)

A loop returns to A.

We want to count the number of paths.

The maximum number of iterations of the loop is 20.

How many?

Suppose the loop does not repeat:

Only one pass executes

5 distinct paths

Suppose the loop repeats exactly once (two passes):

5*5 = 25 distinct paths

If it repeats at most once:

5 + 5*5 = 30 distinct paths

What if the loop makes exactly n passes?

5^n paths

What if it makes at most n passes?

5^n + 5^(n-1) + … + 5 paths

For n = 20, the sum is about 1.2 * 10^14

About 3.8 years at 1,000,000 tests per second
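The path count can be evaluated directly. The sketch below assumes 5 distinct paths through the loop body on each pass, as in the example; the function names are hypothetical. Evaluating the sum for at most 20 passes gives 119,209,289,550,780 paths, roughly 1.2 * 10^14, or a little under four years at 1,000,000 tests per second.

```python
def paths_exactly(passes, branches=5):
    """Paths when the loop body executes exactly `passes` times."""
    return branches ** passes


def paths_at_most(max_passes, branches=5):
    """Paths when the loop body executes between 1 and max_passes times."""
    return sum(branches ** k for k in range(1, max_passes + 1))


if __name__ == "__main__":
    total = paths_at_most(20)
    seconds = total / 1_000_000            # at 1,000,000 tests per second
    years = seconds / (365.25 * 24 * 3600)  # assumption: 365.25-day year
    print(total, f"{years:.1f} years")
```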

Yet Another Example

Consider testing a Java compiler.

How many test cases are needed to cover every possible input?

Limits of Testing

You can’t test it completely.

You can’t test all valid inputs.

You can’t test all invalid inputs.

You really can’t test all edited inputs.

You can’t test in every environment.

You can’t test all variations on timing.

You can’t even test every path. (A path is the sequence of lines executed from start to finish.)

Why Bother?

Testing cannot show the absence of faults.

But it can show their presence!

Goals of Testing

Identify errors

Make errors repeatable (when do they occur?)

Localize errors (where are they?)

The purpose of testing is to find problems in programs so they can be fixed.

Cost of Testing

Testing accounts for between 30% and 90% of the total cost of software.

Microsoft employs one tester for each developer.

We want to reduce the cost

Increase test efficiency: number of defects found per test

Reduce the number of tests

Find more defects

How?

Organize!

A Good Test:

Has a reasonable probability of catching an error

Is not redundant

Is neither too simple nor too complex

Reveals a problem

Is a failure if it doesn’t reveal a problem

Levels of Software Testing

Unit/Component testing

Verify implementation of each software element

Trace each test to detailed design

Integration testing

Combine software units and test until the entire system has been integrated

Trace each test to high-level design

System testing

Test integration of hardware and software

Ensure software as a complete entity complies with operational requirements

Trace each test to system requirements

Acceptance testing

Determine if test results satisfy acceptance criteria of project stakeholders

Trace each test to stakeholder requirements

Installation testing

Perform testing with application installed on its target platform
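The difference between the first two levels can be sketched with Python's standard `unittest` module. The two units, `parse_amount` and `add_amounts`, are hypothetical. The unit-level tests exercise each element on its own; the integration-level test exercises them combined.

```python
import unittest


# Two hypothetical units.
def parse_amount(text):
    """Unit 1: convert text such as '12.50' to integer cents."""
    dollars, _, cents = text.strip().partition(".")
    return int(dollars) * 100 + int((cents + "00")[:2])


def add_amounts(cents_a, cents_b):
    """Unit 2: add two amounts expressed in cents."""
    return cents_a + cents_b


class UnitLevel(unittest.TestCase):
    """Each test verifies one software element in isolation."""

    def test_parse_amount(self):
        self.assertEqual(parse_amount("12.50"), 1250)
        self.assertEqual(parse_amount("3"), 300)

    def test_add_amounts(self):
        self.assertEqual(add_amounts(1250, 300), 1550)


class IntegrationLevel(unittest.TestCase):
    """The test verifies that the combined units work together."""

    def test_parse_then_add(self):
        total = add_amounts(parse_amount("12.50"), parse_amount("0.5"))
        self.assertEqual(total, 1300)
```

If the block is saved as a module, the tests can be run with `python -m unittest` followed by the module name.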

Testing Phases: V-Model

Development phases: Requirements Specification, System Specification, System Design, Detailed Design, Unit Code and Test

Test plans: Acceptance Test Plan, System Integration Test Plan, Sub-system Integration Test Plan

Test phases through to service: Sub-system Integration Test, System Integration Test, Acceptance Test, Service

Hierarchy of Testing

Testing: Ad Hoc, Program Testing, System Testing, Acceptance Testing

Program Testing: Unit Testing, Integration Testing

Unit Testing, Black Box: Equivalence, Boundary, Decision Table, State Transition, Use Case, Domain Analysis

Unit Testing, White Box: Control Flow, Data Flow

Integration Testing: Top Down, Bottom Up, Big Bang, Sandwich

System Testing: Function, Properties

Properties: Performance, Reliability, Availability, Security, Usability, Documentation, Portability, Capacity

Acceptance Testing: Benchmark, Pilot, Alpha, Beta