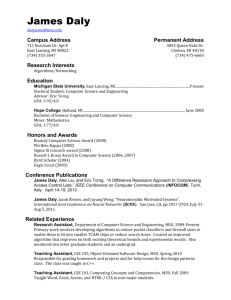

CSE841PerceptionF08

Computer Vision and Human Perception

A brief intro from an AI perspective

Stockman MSU/CSE Fall 2008

Computer Vision and Human Perception

What are the goals of CV?

What are the applications?

How do humans perceive the 3D world via images?

Some methods of processing images.

Goal of computer vision

Make useful decisions about real physical objects and scenes based on sensed images.

Alternative (Aloimonos and Rosenfeld): goal is the construction of scene descriptions from images.

How do you find the door to leave?

How do you determine if a person is friendly or hostile? .. an elder? .. a possible mate?

Critical Issues

Sensing: how do sensors obtain images of the world?

Information: how do we obtain color, texture, shape, motion, etc.?

Representations: what representations should/does a computer [or brain] use?

Algorithms: what algorithms process image information and construct scene descriptions?

Images: 2D projections of 3D

3D world has color, texture, surfaces, volumes, light sources, objects, motion, betweenness, adjacency, connections, etc.

2D image is a projection of a scene from a specific viewpoint; many 3D features are captured, some are not.

Brightness or color = g(x,y) or f(row, column) for a certain instant of time

Images indicate familiar people, moving objects or animals, health of people or machines

Image receives reflections

Light reaches surfaces in 3D

Surfaces reflect

Sensor element receives light energy

Intensity matters

Angles matter

Material matters

CCD Camera has discrete elts

Lens collects light rays

CCD elts replace chemicals of film

Number of elts less than with film (so far)

Intensities near center of eye

Camera + Programs = Display

Camera inputs

to frame

buffer

Program can

interpret data

Program can

add graphics

Program can

add imagery

Some image format issues

Spatial resolution; intensity

resolution; image file format

Resolution is “pixels per unit

of length”

Resolution decreases by one half in cases at left

Human faces can be recognized at 64 x 64 pixels per face

Features detected depend on

the resolution

Can tell hearts from diamonds

Can tell face value

Generally need 2 pixels across line or small region (such as eye)

Human eye as a spherical

camera

100M sensing elts in retina

Rods sense intensity

Cones sense color

Fovea has tightly packed elts, more cones

Periphery has more rods

Focal length is about 20mm

Pupil/iris controls

• Eye scans, or saccades to image details on fovea

• 100M sensing cells funnel to 1M optic nerve connections to the brain

Image processing operations

Thresholding;

Edge detection;

Motion field computation

Find regions via thresholding

Region has brighter or darker or redder

color, etc.

If pixel > threshold

then pixel = 1 else pixel = 0
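
The rule above can be sketched in a few lines of Python. This is only an illustration: the function name `threshold`, the tiny sample image, and the cutoff of 128 are assumptions made for the example, not part of the lecture.

```python
def threshold(image, t):
    """Return a binary image: 1 where pixel > t, else 0."""
    return [[1 if pixel > t else 0 for pixel in row] for row in image]

# Tiny made-up 3x4 grayscale image (values 0..255): a bright blob on a dark background.
image = [
    [10, 200, 210, 12],
    [15, 220, 205, 11],
    [9, 14, 13, 10],
]
binary = threshold(image, 128)  # the bright blob becomes a region of 1s
```

Choosing `t` is the hard part in practice; a fixed value only works when object and background intensities are well separated.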

Example red blood cell image

Many blood cells are

separate objects

Many touch – bad!

Salt and pepper

noise from

thresholding

How useable is this

data?

Robot vehicle must see stop sign

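% Read the RGB stop sign image (variable renamed: "sign" shadows a MATLAB built-in).
img = imread('Images/stopSign.jpg');
% Keep strongly red pixels: high red, low green, low blue.
red = (img(:,:,1) > 120) & (img(:,:,2) < 100) & (img(:,:,3) < 80);
% Convert the logical mask to 8-bit so red pixels are stored as gray level 200.
out = uint8(red) * 200;
imwrite(out, 'Images/stopRed120.jpg');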

Thresholding is usually not trivial

Can cluster pixels by color

similarity and by adjacency

Original RGB Image

Color Clusters by K-Means
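
A minimal sketch of K-means on pixel colors, in plain Python, may help make the idea concrete. It clusters by color similarity only (it ignores adjacency), and the function name `kmeans_colors`, the sample pixels, and the choice of initial centers are all assumptions for illustration.

```python
def kmeans_colors(pixels, centers, iterations=10):
    """Cluster RGB tuples around the given initial centers (plain k-means)."""
    centers = [tuple(float(v) for v in c) for c in centers]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest center (squared distance).
        for i, p in enumerate(pixels):
            labels[i] = min(
                range(len(centers)),
                key=lambda k: sum((a - b) ** 2 for a, b in zip(p, centers[k])),
            )
        # Update step: each center moves to the mean color of its members.
        for k in range(len(centers)):
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            if members:  # keep the old center if a cluster is empty
                centers[k] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return labels, centers

# Made-up pixels: three reddish, two bluish.
pixels = [(250, 10, 10), (240, 20, 15), (10, 10, 245), (20, 5, 250), (245, 15, 5)]
labels, centers = kmeans_colors(pixels, centers=[pixels[0], pixels[2]])
```

Replacing each pixel by its cluster center gives the kind of flattened color image the slide shows; adding adjacency would require a further connected-components pass.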

Some image processing ops

Finding contrast in an image;

using neighborhoods of pixels;

detecting motion across 2 images

Differentiate to find object

edges

For each pixel,

compute its

contrast

Can use max

difference of its

8 neighbors

Detects

intensity

change across

boundary of

adjacent

regions
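
One possible reading of "max difference of its 8 neighbors" is the largest absolute difference between a pixel and any of its neighbors. The sketch below implements that reading; the names `NEIGHBORS_8` and `contrast` and the sample image are assumptions for illustration.

```python
# (row, col) offsets of the 8 neighbors; the first four are the 4-neighbors.
NEIGHBORS_8 = [(-1, 0), (1, 0), (0, -1), (0, 1), (-1, -1), (-1, 1), (1, -1), (1, 1)]

def contrast(image, r, c):
    """Largest absolute intensity difference between pixel (r, c) and its neighbors."""
    rows, cols = len(image), len(image[0])
    best = 0
    for dr, dc in NEIGHBORS_8:
        rr, cc = r + dr, c + dc
        if 0 <= rr < rows and 0 <= cc < cols:  # skip neighbors outside the image
            best = max(best, abs(image[r][c] - image[rr][cc]))
    return best

# Dark region (10) next to a bright region (90).
image = [
    [10, 10, 90, 90],
    [10, 10, 90, 90],
    [10, 10, 90, 90],
]
edge_map = [[contrast(image, r, c) for c in range(4)] for r in range(3)]
```

Only the two columns on either side of the region boundary get a nonzero contrast, which is the edge.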

LOG filter later on

4 and 8 neighbors of a pixel

4 neighbors are at

multiples of 90

degrees

8 neighbors are at

every multiple of 45

degrees

. N .
W * E
. S .

NW N NE
W * E
SW S SE

Detect Motion via Subtraction

Differences computed over

time rather than over space

Constant

background

Moving object

Produces

pixel

differences at

boundary

Reveals

moving object

and its shape
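
Frame subtraction can be sketched as follows. The function name `motion_mask`, the change threshold of 20, and the one-row frames are assumptions chosen to keep the example small.

```python
def motion_mask(frame_a, frame_b, threshold=20):
    """Mark pixels whose intensity changed by more than `threshold` between frames."""
    return [
        [1 if abs(a - b) > threshold else 0 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]

# Bright 2-pixel object on a dark background, shifted one pixel to the right.
frame_n = [[0, 200, 200, 0, 0]]
frame_n1 = [[0, 0, 200, 200, 0]]
mask = motion_mask(frame_n, frame_n1)
```

The differences appear at the trailing and leading boundaries of the object; the interior, where the object overlaps itself, shows no change.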

Two frames of aerial imagery

Video frame N and N+1 shows slight movement:

most pixels are same, just in different locations.

Best matching blocks between video

frames N+1 to N (motion vectors)

The bulk of the vectors

show the true motion of

the airplane taking the

pictures. The long vectors

are incorrect motion

vectors, but they do work

well for compression of

image I2!

Best matches from 2nd to first image shown as vectors

overlaid on the 2nd image. (Work by Dina Eldin.)
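
A hedged sketch of block matching: for a block in the second frame, search a small window in the first frame for the block with the lowest sum of absolute differences (SAD). The SAD cost, the function names, the block size, and the search radius are assumptions; the slide does not say which matching criterion was used.

```python
def sad(frame_a, frame_b, ra, ca, rb, cb, size):
    """Sum of absolute differences between two size x size blocks."""
    return sum(
        abs(frame_a[ra + i][ca + j] - frame_b[rb + i][cb + j])
        for i in range(size)
        for j in range(size)
    )

def best_match(cur, prev, r, c, size=2, search=1):
    """Motion vector (dr, dc) from the block at (r, c) in `cur` to its best match in `prev`."""
    rows, cols = len(prev), len(prev[0])
    best_cost, best_vec = None, (0, 0)
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr <= rows - size and 0 <= cc <= cols - size:
                cost = sad(cur, prev, r, c, rr, cc, size)
                if best_cost is None or cost < best_cost:
                    best_cost, best_vec = cost, (dr, dc)
    return best_vec, best_cost

# A textured 2x2 patch moves one pixel to the right between the frames.
prev = [[0, 0, 0, 0, 0], [0, 9, 8, 0, 0], [0, 7, 6, 0, 0], [0, 0, 0, 0, 0]]
cur = [[0, 0, 0, 0, 0], [0, 0, 9, 8, 0], [0, 0, 7, 6, 0], [0, 0, 0, 0, 0]]
vector, cost = best_match(cur, prev, 1, 2)
```

The lowest-cost match is good for compression even when it is not the true motion, which is the point the slide makes about the long vectors.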

Gradient from 3x3 neighborhood

Estimate both magnitude and direction of the edge.

Prewitt versus Sobel masks

Sobel mask uses weights of 1,2,1 and -1,-2,-1 in

order to give more weight to center estimate.

The scaling factor is thus 1/8 and not 1/6.
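
As a rough illustration of the Sobel weights and the 1/8 scaling, the sketch below estimates the gradient magnitude and direction at one interior pixel. The mask orientation (x along columns, y along rows), the function names, and the sample image are assumptions for the example. The direction returned is that of the gradient, which is perpendicular to the edge.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # derivative along columns (x)
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # derivative along rows (y)

def apply_mask(image, mask, r, c):
    """Sum of mask weights times the 3x3 neighborhood centered at (r, c)."""
    return sum(
        mask[i][j] * image[r - 1 + i][c - 1 + j]
        for i in range(3)
        for j in range(3)
    )

def sobel_gradient(image, r, c):
    """Return (magnitude, direction in degrees) of the gradient at an interior pixel."""
    gx = apply_mask(image, SOBEL_X, r, c) / 8.0  # 1/8 scaling for the Sobel weights
    gy = apply_mask(image, SOBEL_Y, r, c) / 8.0
    return math.hypot(gx, gy), math.degrees(math.atan2(gy, gx))

# Intensity rises from left to right; there is no change from top to bottom.
image = [
    [10, 10, 50],
    [10, 10, 50],
    [10, 10, 50],
]
magnitude, direction = sobel_gradient(image, 1, 1)
```

With the Prewitt weights (1,1,1 and -1,-1,-1) the same code would divide by 6 instead of 8.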

Computational short cuts

2 rows of intensity vs difference

“Masks” show how to combine

neighborhood values

Multiply the mask by the image

neighborhood to get first derivatives

of intensity versus x and versus y

Curves of contrasting pixels

Boundaries not always found well

Canny boundary operator

LOG filter creates zero crossing at

step edges (2nd der. of Gaussian)

3x3 mask applied at

each image position

Detects spots

Detects step edges

Marr-Hildreth

theory of edge

detection

G(x,y): Mexican hat filter

Positive center, negative surround

Properties of LOG filter

Has zero response on constant region

Has zero response on intensity ramp

Exaggerates a step edge by making it

larger

Responds to a step in any direction

across the “receptive field”.

Responds to a spot about the size of

the center
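
The first three properties can be checked numerically with a small positive-center, negative-surround mask. The 3x3 mask below is a common discrete Laplacian used here as a stand-in; it is an assumption, not necessarily the mask from the lecture, and it omits the Gaussian smoothing of a true LOG filter.

```python
LAPLACIAN = [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]  # positive center, negative surround

def response(image, r, c):
    """Mask response at interior pixel (r, c)."""
    return sum(
        LAPLACIAN[i][j] * image[r - 1 + i][c - 1 + j]
        for i in range(3)
        for j in range(3)
    )

def middle_row_responses(row):
    """Stack one intensity row three times and filter the interior of the middle row."""
    image = [row, row, row]
    return [response(image, 1, c) for c in range(1, len(row) - 1)]

constant = middle_row_responses([5, 5, 5, 5, 5, 5])  # flat region
ramp = middle_row_responses([0, 2, 4, 6, 8, 10])     # steady intensity ramp
step = middle_row_responses([0, 0, 0, 8, 8, 8])      # step edge
```

The flat region and the ramp give all zeros, while the step gives a negative value on the dark side and a positive value on the bright side. The sign change between them is the zero crossing that marks the edge.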

Human receptive field is

analogous to a mask

X[j] are the

image

intensities.

W[j] are

gains

(weights) in

the mask
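
In code, the analogy amounts to a weighted sum of the inputs, the sum over j of W[j] * X[j]. The gains below are hypothetical values chosen to give an excitatory center and an inhibitory surround; they are not measurements.

```python
def receptive_field_response(weights, intensities):
    """Weighted sum of inputs: sum over j of W[j] * X[j]."""
    return sum(w * x for w, x in zip(weights, intensities))

W = [-1, -1, 4, -1, -1]  # hypothetical gains: excitatory center, inhibitory surround
uniform = receptive_field_response(W, [7, 7, 7, 7, 7])  # evenly lit field
spot = receptive_field_response(W, [0, 0, 9, 0, 0])     # bright spot on the center
```

Because the gains sum to zero, uniform illumination produces no response, while a spot on the center produces a strong one.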

Human receptive fields amplify

contrast

3D neural network in brain

Level j

Level j+1

Mach band effect shows human bias

Human bias and illusions support receptive field theory of edge detection

Human brain as a network

100B neurons, or nodes

Half are involved in vision

10 trillion connections

Neuron can have “fanout” of 10,000

Visual cortex highly structured to

process 2D signals in multiple ways

Color and shading

Used heavily in human vision

Color is a pixel property,

making some recognition

problems easy

Visible spectrum for humans

is 400nm (blue) to 700 nm

(red)

Machines can “see” much

more; ex. X-rays, infrared,

radio waves

Imaging Process (review)

Factors that Affect Perception

• Light:

the spectrum of energy that

illuminates the object surface

• Reflectance: ratio of reflected light to incoming light

• Specularity:

highly specular (shiny) vs. matte surface

• Distance:

distance to the light source

• Angle:

angle between surface normal and light

source

• Sensitivity: how sensitive the sensor is

Some physics of color

White light is composed of all visible wavelengths (400-700 nm)

Ultraviolet and X-rays are of much shorter wavelength

Infrared and radio waves are of much longer wavelength

Models of Reflectance

We need to look at models for the physics

of illumination and reflection that will

1. help computer vision algorithms

extract information about the 3D world,

and

2. help computer graphics algorithms

render realistic images of model scenes.

Physics-based vision is the subarea of computer vision

that uses physical models to understand image formation

in order to better analyze real-world images.

The Lambertian Model:

Diffuse Surface Reflection

A diffuse reflecting

surface reflects light

uniformly in all directions

Uniform brightness for

all viewpoints of a planar

surface.
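
A common way to write the Lambertian model is brightness = albedo × light × max(0, n · l), where n is the unit surface normal and l the unit direction toward the light. The sketch below assumes that form; the function names and default values are illustrative. Note that the viewing direction does not appear, which is why brightness is the same from all viewpoints.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def lambertian(normal, to_light, albedo=1.0, light=1.0):
    """Diffuse brightness: albedo * light * max(0, n . l); independent of the viewer."""
    n, l = normalize(normal), normalize(to_light)
    cos_theta = sum(a * b for a, b in zip(n, l))
    return albedo * light * max(0.0, cos_theta)

facing = lambertian((0, 0, 1), (0, 0, 1))   # light along the normal
tilted = lambertian((0, 0, 1), (0, 1, 1))   # light at 45 degrees to the normal
behind = lambertian((0, 0, 1), (0, 0, -1))  # light behind the surface
```

Brightness falls off with the cosine of the angle between the normal and the light, and is zero when the light is behind the surface.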

Real matte objects

Light

from

ring

around

camera

lens

Specular reflection is highly

directional and mirrorlike.

R is the ray of

reflection

V is direction from the

surface toward the

viewpoint

is the shininess

parameter
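
The symbols R and V and a shininess parameter suggest a Phong-style specular term, (R · V) raised to the shininess power. The sketch below assumes that form; it is an interpretation of the slide rather than a formula taken from it. The function names and sample directions are made up for illustration.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def specular(normal, to_light, to_viewer, shininess):
    """Phong-style specular term: max(0, R . V) ** shininess (assumed model)."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    # R: mirror reflection of the light direction about the surface normal.
    r = tuple(2 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    return max(0.0, dot(r, v)) ** shininess

normal, to_light = (0, 0, 1), (1, 0, 1)
on_axis = specular(normal, to_light, (-1, 0, 1), shininess=50)  # viewer along R
off_axis = specular(normal, to_light, (0, 0, 1), shininess=50)  # viewer 45 degrees off R
dull = specular(normal, to_light, (0, 0, 1), shininess=1)       # low shininess
```

A large shininess value confines the highlight to viewpoints very close to R (mirrorlike), while a small value spreads it out.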

CV: Perceiving 3D from 2D

Many cues from 2D images

enable interpretation of the

structure of the 3D world

producing them

Many 3D cues

How can humans and other

machines reconstruct the

3D nature of a scene from

2D images?

What other world

knowledge needs to be

added in the process?

Labeling image contours

interprets the 3D scene structure

“shadow” relates to

illumination, not material

Logo on cup is a

“mark” on the material

An egg and a thin cup on a table top lighted from the top right

“Intrinsic Image” stores 3D info

in “pixels” and not intensity.

For each point of

the image, we

want depth to the

3D surface point,

surface normal at

that point, albedo

of the surface

material, and

illumination of

that surface point.

3D scene versus 2D image

Creases

Corners

Faces

Occlusions (for some

viewpoint)

Edges

Junctions

Regions

Blades, limbs, T’s

Labeling of simple polyhedra

Labeling of a block

floating in space. BJ and

KI are convex creases.

Blades AB, BC, CD, etc

model the occlusion of

the background. Junction

K is a convex trihedral

corner. Junction D is a T-junction modeling the

occlusion of blade CD by

blade JE.

Trihedral Blocks World Image

Junctions: only 16 cases!

Only 16 possible junctions in 2D formed by viewing 3D corners

formed by 3 planes and viewed from a general viewpoint! From

top to bottom: L-junctions, arrows, forks, and T-junctions.

How do we obtain the catalog?

think about solid/empty assignments to

the 8 octants about the X-Y-Z-origin

think about non-accidental viewpoints

account for all possible topologies of

junctions and edges

then handle T-junction occlusions

Blocks world labeling

Left: block floating in

space

Right: block glued

to a wall at the back

Try labeling these: interpret the

3D structure, then label parts

What does it

mean if we

can’t label

them? If we

can label

them?

1975 researchers very excited

very strong constraints on

interpretations

several hundred in catalogue when

cracks and shadows allowed (Waltz):

algorithm works very well with them

but, world is not made of blocks!

later on, curved blocks world work

done but not as interesting

Backtracking or interpretation tree

“Necker cube” has multiple

interpretations

Label the different interpretations

A human staring at one of these cubes typically

experiences changing interpretations. The interpretation

of the two forks (G and H) flip-flops between “front

corner” and “back corner”. What is the explanation?

Depth cues in 2D images

“Interposition” cue

Def: Interposition occurs when one object occludes

another object, thus indicating that the occluding object

is closer to the viewer than the occluded object.

interposition

• T-junctions indicate

occlusion: top is occluding

edge while bar is the

occluded edge

• Bench occludes lamp post

• leg occludes bench

• lamp post occludes fence

• railing occludes trees

• trees occlude steeple

• Perspective scaling:

railing looks smaller at the

left; bench looks smaller at

the right; 2 steeples are far

away

• Foreshortening: the bench

is sharply angled relative to

the viewpoint; image length

is affected accordingly
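
Both cues can be approximated with a pinhole camera model: image length shrinks in proportion to distance (perspective scaling) and with the cosine of the tilt away from the image plane (foreshortening). The sketch below is a simplification that ignores depth variation along the object; the function name and all numbers are assumptions.

```python
import math

def image_length(length, distance, focal_length=1.0, tilt_degrees=0.0):
    """Approximate image length of a segment under a pinhole model.

    The segment sits at `distance` along the optical axis and is tilted by
    `tilt_degrees` away from the image plane; depth variation along the
    segment is ignored (a weak-perspective simplification).
    """
    visible = length * math.cos(math.radians(tilt_degrees))  # foreshortening
    return focal_length * visible / distance                 # perspective scaling

near = image_length(2.0, 4.0)                      # object close to the camera
far = image_length(2.0, 8.0)                       # same object twice as far away
tilted = image_length(2.0, 4.0, tilt_degrees=60)   # close, but sharply angled
```

Doubling the distance halves the image length, and so does a 60 degree tilt, so image size alone cannot distinguish the two cases without other cues.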

Texture gradient reveals surface

orientation

(In East Lansing, we call it “corn”, not “maize”.)

Note also that the

rows appear to

converge in 2D

Texture Gradient: change of image texture along some direction, often corresponding to a change in distance or orientation in the 3D world containing the objects creating the texture.

3D Cues from Perspective

3D Cues from perspective

More 3D cues

Virtual lines

Falsely perceived interposition

Irving Rock: The Logic of

Perception; 1982

Summarized an entire career in visual

psychology

Concluded that the human visual

system acts as a problem-solver

Triangle unlikely to be accidental; must

be object in front of background; must

be brighter since it’s closer

More 3D cues

2D alignment usually

means 3D alignment

2D image curves create

perception of 3D surface

“structured light” can enhance

surfaces in industrial vision

Sculpted object

Potatoes with light stripes

Shape (normals) from shading

Clearly intensity encodes

shape in this case

Cylinder with white paper

and pen stripes

Intensities plotted as a

surface

Shape (normals) from shading

Plot of intensity of one image row reveals the 3D shape of

these diffusely reflecting objects.

What about models for

recognition

“recognition” = to know again;

How does memory store models

of faces, rooms, chairs, etc.?

Human capability extensive

Child age 6 might recognize 3000 words

And 30,000 objects

Junkyard robot must recognize nearly

all objects

Hundreds of styles of lamps, chairs,

tools, …

Some methods: recognize

Via geometric alignment: CAD

Via trained neural net

Via parts of objects and how they join

Via the function/behavior of an object

Side view classes of Ford Taurus

(Chen and Stockman)

These were made in

the PRIP Lab from a

scale model.

Viewpoints in between

can be generated from

x and y curvature

stored on boundary.

Viewpoints matched to

real image boundaries

via optimization.

Matching image edges to model limbs

Could recognize car model at stoplight or

gate.

Object as parts + relations

Parts have size, color, shape

Connect together at concavities

Relations are: connect, above, right of, inside of, …

Functional models

Inspired by JJ Gibson’s Theory of

Affordances.

An object is what an object does

* container: holds stuff

* club: hits stuff

* chair: supports humans

Louise Stark: chair model

Dozens of CAD models of chairs

Program analyzed for

* stable pose

* seat of right size

* height off ground right size

* no obstruction to body on seat

* program would accept a trash can

(which could also pass as a container)

Minsky’s theory of frames

(Schank’s theory of scripts)

Frames are learned expectations –

frame for a room, a car, a party, an

argument, …

Frame is evoked by current situation –

how? (hard)

Human “fills in” the details of the

current frame (easier)

summary

Images have many low level features

Can detect uniform regions and contrast

Can organize regions and boundaries

Human vision uses several simultaneous

channels: color, edge, motion

Use of models/knowledge diverse and

difficult

Last 2 issues difficult in computer vision
