thesis defense - People.csail.mit.edu


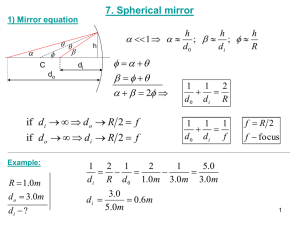

Models for Multi-View Object Class Detection
Han-Pang Chiu

Multi-View Object Class Detection
[Figure labels: Multi-View, Single-View, Same Object, Object Class, Multi-View Object Class, Training Set, Test Set]

The Roadblock
• All existing methods for multi-view object class detection require many real training images of objects for many viewpoints.
- The learning processes for each viewpoint of the same object class should be related.

The Potemkin Model
The Potemkin¹ model can be viewed as a collection of parts, which are oriented 3D primitives.
- a 3D class skeleton: the arrangement of part centroids in 3D.
- 2D projective transforms: the shape change of each part from one view to another.
¹ So-called “Potemkin villages” were artificial villages, constructed only of facades. Our models, too, are constructed of facades.

Related Approaches
[Figure labels: 2D, 3D, Data-Efficiency, Compatibility, The Potemkin Model]
- multiple 2D models [Crandall07, Torralba04, Leibe07]
- cross-view constraints [Thomas06, Savarese07, Kushal07]
- explicit 3D model [Hoiem07, Yan07]

Two Uses of the Potemkin Model
1. Generate virtual training data
2. Reconstruct 3D shapes of detected objects
[Figure labels: 2D Test Image, Multi-View Object Class Detection System, Detection Result, 3D Understanding]

Outline
Potemkin Model: Basic, Generalized, 3D
- Estimation: Class Skeleton
- Real Training Data: Supervised Part Labeling
- Use: Virtual Training Data Generation

Definition of the Basic Potemkin Model
• A basic Potemkin model for an object class with N parts consists of:
- K view bins
- K projection matrices
- NK² transformation matrices
- a class skeleton (S1, S2, …, SN): class-dependent
[Figure labels: 3D Space, 2D Transforms, K view bins]

Estimating the Basic Potemkin Model
Phase 1
- Learn 2D projective transforms from a 3D oriented primitive.
- Each view-to-view transform T has 8 degrees of freedom.
[Figure: transforms T1, T2, T3, … between views of the primitive]

Estimating the Basic Potemkin Model
Phase 2
- We compute the 3D class skeleton for the target object class.
- Each part needs to be visible in at least two views from the view bins we are interested in.
- We need to label the view bins and the parts of objects in real training images.

Using the Basic Potemkin Model

The Basic Potemkin Model
[Diagram labels: Estimating, Using; Synthetic, Class-Independent, 3D Model; Real, Class-Specific, Few Labeled Images; Virtual, View-Specific, All Labeled Images; 2D Synthetic Views, Generic Transforms, Shape Primitives, Part Transforms, Skeleton, Target Object Class, Combine Parts, Virtual Images]

Problem of the Basic Potemkin Model
[Figure: 3D and 2D plots; axes x, y, z]

Outline
Potemkin Model: Basic, Generalized, 3D
- Estimation: Class Skeleton, Multiple Primitives
- Real Training Data: Supervised Part Labeling
- Use: Virtual Training Data Generation

Multiple Oriented Primitives
• An oriented primitive is determined by the 3D shape and the starting view bin.
[Figure labels: View 1, View 2, …, View K; K views; azimuth, elevation; Multiple Primitives; 3D Shapes; 2D Transform T between views; 2D views; K view bins]

The Potemkin Model
[Diagram labels: Estimating, Using; Synthetic, Class-Independent, 3D Model; Real, Class-Specific, Few Labeled Images; Virtual, View-Specific, All Labeled Images; 2D Synthetic Views, Primitive Selection, Generic Transforms, Shape Primitives, Part Transforms, Skeleton, Infer Part Indicator, Target Object Class, Combine Parts, Virtual Images]

Greedy Primitive Selection
- Find a best set of primitives to model all parts.
- Four primitives are enough for modeling four object classes (21 object parts).
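The greedy selection described on this slide can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the thesis: it assumes a hypothetical score table `quality[m][p]` (how well primitive `m` predicts the view-to-view shape change of part `p`, higher is better), and at each step it adds the primitive that most improves the summed best-available score over all parts. The names `greedy_select` and `coverage`, and the toy scores, are made up for illustration.

```python
def coverage(best_so_far, scores):
    """Total quality over all parts if a primitive with `scores` were added."""
    return sum(max(b, s) for b, s in zip(best_so_far, scores))


def greedy_select(quality, k):
    """Greedily pick up to k primitives.

    quality[m][p] is an assumed score for modeling part p with primitive m.
    Returns the chosen primitive indices (in selection order) and the mean
    per-part quality achieved by the chosen set.
    """
    n_parts = len(quality[0])
    chosen = []
    best = [float("-inf")] * n_parts  # best score per part so far

    for _ in range(min(k, len(quality))):
        candidates = [m for m in range(len(quality)) if m not in chosen]
        # Pick the primitive whose addition gives the highest total quality.
        m_star = max(candidates, key=lambda m: coverage(best, quality[m]))
        chosen.append(m_star)
        best = [max(b, s) for b, s in zip(best, quality[m_star])]

    return chosen, sum(best) / n_parts


if __name__ == "__main__":
    # Toy table: 3 candidate primitives, 3 parts (values are invented).
    toy_quality = [
        [0.9, 0.2, 0.3],
        [0.5, 0.6, 0.5],
        [0.1, 0.8, 0.9],
    ]
    print(greedy_select(toy_quality, 2))
```

Tracking the per-part best score makes each greedy step a single pass over the candidates, and plotting the returned mean quality for k = 1, 2, … gives a curve of the same kind as the "Quality of Transformation" plot on the slide.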
[Figure: Greedy Selection. Candidate transforms T^m between view A and view B, m = 1, 2, ..., M. Plot of Quality of Transformation (y-axis) against Number of Greedily Selected Primitives (x-axis, 1 to 8), with curves for chair, bicycle, car, aircraft, and all classes]

Primitive-Based Representation

The Influence of Multiple Primitives
• Better predict what objects look like in novel views
[Figure panels: Single Primitive, Multiple Primitives]

Virtual Training Images

The Potemkin Model
[Diagram labels: Estimating, Using; Synthetic, Class-Independent, 3D Model; Real, Class-Specific, Few Labeled Images; Virtual, View-Specific, All Labeled Images; 2D Synthetic Views, Primitive Selection, Generic Transforms, Shape Primitives, Part Transforms, Skeleton, Infer Part Indicator, Target Object Class, Combine Parts, Virtual Images]

Outline
Potemkin Model: Basic, Generalized, 3D
- Estimation: Class Skeleton, Multiple Primitives
- Real Training Data: Supervised Part Labeling, Self-Supervised Part Labeling
- Use: Virtual Training Data Generation

Self-Supervised Part Labeling
• For the target view, choose one model object and label its parts.
• The model object is then deformed to other objects in the target view for part labeling.
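The idea of transferring part labels from one labeled model object to other objects can be illustrated with a deliberately simplified sketch. It does not reproduce the deformation used in the slides; as a stand-in, it only removes translation and scale from both point sets and then gives each target point the label of its nearest model point. The function names and the part labels ("seat", "back") are hypothetical.

```python
import math


def normalize(points):
    """Move the centroid to the origin and scale to unit RMS radius."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n) or 1.0
    return [(x / rms, y / rms) for x, y in centered]


def transfer_labels(model_pts, model_labels, target_pts):
    """Label each target point with the label of its nearest model point.

    Both point sets are normalized first, so the transfer ignores
    translation and uniform scale (a crude substitute for a real
    non-rigid deformation of the model object).
    """
    model_n = normalize(model_pts)
    target_n = normalize(target_pts)
    labels = []
    for tx, ty in target_n:
        nearest = min(
            range(len(model_n)),
            key=lambda i: (model_n[i][0] - tx) ** 2 + (model_n[i][1] - ty) ** 2,
        )
        labels.append(model_labels[nearest])
    return labels


if __name__ == "__main__":
    model = [(0, 0), (2, 0), (2, 2), (0, 2)]            # labeled model outline
    parts = ["seat", "seat", "back", "back"]            # hypothetical labels
    target = [(10, 10), (14, 10), (14, 14), (10, 14)]   # shifted, scaled copy
    print(transfer_labels(model, parts, target))
```

Only the model object needs manual labels in this scheme; every other object in the same view bin inherits its part labels through the correspondence, which is what keeps the labeling effort low.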
[Figure: point correspondences between sampled shapes. Panel titles: "100 samples"; "93 correspondences (unwarped X)"; "75 correspondences (unwarped X)"; "k=6, o=1, If=0.06657, aff.cost=0.10301, SC cost=0.07626"; "k=6, o=1, If=0.055368, aff.cost=0.084792, SC cost=0.14406"]

Multi-View Class Detection Experiment
• Detector: Crandall’s system (CVPR05, CVPR07)
• Dataset: cars (partial PASCAL), chairs (collected by LIS)
• Each view (Real/Virtual Training): 20/100 (chairs), 15/50 (cars)
• Task: Object/No Object, No viewpoint identification
[Figure: ROC curves (True Positive Rate against False Positive Rate) for Object Class: Chair and Object Class: Car. Legend: Real + Virtual (self-supervised); Real + Virtual (multiple primitives); Real + Virtual (single primitive); Real images from all views; Real images]

Outline
Potemkin Model: Basic, Generalized, 3D
- Estimation: Class Skeleton, Multiple Primitives, Class Planes
- Real Training Data: Supervised Part Labeling, Self-Supervised Part Labeling
- Use: Virtual Training Data Generation

Definition of the 3D Potemkin Model
• A 3D Potemkin model for an object class with N parts.
- K view bins
- K projection matrices, K rotation matrices, $T \in \mathbb{R}^{3 \times 3}$
- a class skeleton (S1, S2, …, SN)
- K part-labeled images
- N 3D planes, $Q_i$ ($i \in 1, \dots, N$): $a_i X + b_i Y + c_i Z + d_i = 0$
[Figure labels: 3D Space, K view bins]

3D Representation
• Efficiently capture prior knowledge of 3D shapes of the target object class.
• The object class is represented as a collection of parts, which are oriented 3D primitive shapes.
• This representation is only approximately correct.

Estimating 3D Planes
[Figure: plots of part outlines and fitted planes; axes x, y]

Self-Occlusion Handling
[Figure panels: No Occlusion Handling, Occlusion Handling; axes x, y]

3D Potemkin Model: Car
Minimum requirement: four views of one instance
Number of Parts: 8 (right-side, grille, hood, windshield, roof, back-windshield, back-grille, left-side)
[Figure: plots of the car model]

Outline
Potemkin Model: Basic, Generalized, 3D
- Estimation: Class Skeleton, Multiple Primitives, Class Planes
- Real Training Data: Supervised Part Labeling, Self-Supervised Part Labeling
- Use: Virtual Training Data Generation, Single-View 3D Reconstruction

Single-View Reconstruction
• 3D Reconstruction (X, Y, Z) from a Single 2D Image ($x_{im}$, $y_{im}$)
- a camera matrix (M), a 3D plane

$$M = \begin{bmatrix} m_{11} & m_{12} & m_{13} & m_{14} \\ m_{21} & m_{22} & m_{23} & m_{24} \\ m_{31} & m_{32} & m_{33} & m_{34} \end{bmatrix}$$

$$x_{im} = \frac{m_{11} X + m_{12} Y + m_{13} Z + m_{14}}{m_{31} X + m_{32} Y + m_{33} Z + m_{34}}, \qquad y_{im} = \frac{m_{21} X + m_{22} Y + m_{23} Z + m_{24}}{m_{31} X + m_{32} Y + m_{33} Z + m_{34}}$$

$$aX + bY + cZ + d = 0$$

Automatic 3D Reconstruction
• 3D Class-Specific Reconstruction from a Single 2D Image
- a camera matrix (M), a 3D ground plane ($a_g X + b_g Y + c_g Z + d_g = 0$)
- part planes $P_i$: $a_i X + b_i Y + c_i Z + d_i = 0$ (figure label: offset)
[Figure: reconstruction pipeline. Labels: 2D Input; Detection (Leibe et al. 07); Segmentation (Li et al. 05); Self-Supervised Part Registration; Geometric Context (Hoiem et al. 05); 3D Potemkin Model; planes P1, P2; Occluded Part Prediction; 3D Output]

Application: Photo Pop-up
• Hoiem et al. classify image regions into three geometric classes (ground, vertical surfaces, and sky).
• They treat detected objects as vertical planar surfaces in 3D.
• They set a default camera matrix and a default 3D ground plane.

Object Pop-up
Demo videos: http://people.csail.mit.edu/chiu/demos.htm

Depth Map Prediction
• Match a predicted depth map against available 2.5D data
• Improve performance of existing 2D detection systems
[Figure: example images and depth maps]

Application: Object Detection
• 109 test images and stereo depth maps, 127 annotated cars
• 15 candidates/image (each candidate $c_i$: bounding box $b_i$, likelihood $l_i$ from 2D detector, predicted depth map $z_i$)
[Figure: stereo depth map $z_s$ (label: Videre Designs) and predicted depth map $z_i$]

$$D_i = \min_{a_1, a_2} \sum \left( Z_s - (a_1 Z_i + a_2) \right)^2$$

where $a_1$ is a scale and $a_2$ is an offset. Each candidate is scored by

$$\exp\left( \log(l_i) \cdot w + \log(1 - D_i) \cdot (1 - w) \right)$$

where the first term is the likelihood from the detector and the second is the depth consistency.

Experimental Results
• Number of car training/test images: 155/109
• Murphy-Torralba-Freeman detector (w = 0.5)
• Dalal-Triggs detector (w = 0.6)
[Figure: Detection Rate against FP per image (0 to 5) for the Murphy-Torralba-Freeman Detector and the Dalal-Triggs Detector. Legend: 2D Detector; 2D Detector (With Depth)]

Quality of Reconstruction
• Calibration: Camera, 3D ground plane (1 m by 1.2 m table)
• 20 diecast model cars
• Average:
- Potemkin: overlap 77.5%, centroid error 8.75 mm, orientation error 2.34°
- Single Plane: centroid error 73.95 mm, orientation error 16.26°
• Ferrari F1: 26.56%, 24.89 mm, 3.37°

Application: Robot Manipulation
• 20 diecast model cars, 60 trials
• Successful grasp: 57/60 (Potemkin), 6/60 (Single Plane)
Demo videos: http://people.csail.mit.edu/chiu/demos.htm

Occluded Part Prediction
• A basket instance
Demo videos: http://people.csail.mit.edu/chiu/demos.htm
[Figure: 3D plots of the basket model, axes x, y, z; two panels titled "Extrinsic parameters (object-centered)", axes Xobject, Yobject, Zobject]

Contributions
• The Potemkin Model:
- Provide a middle ground between 2D and 3D
- Construct a relatively weak 3D model
- Generate virtual training data
- Reconstruct 3D objects from a single image
• Applications
- Multi-view object class detection
- Object pop-up
- Object detection using 2.5D data
- Robot manipulation

Acknowledgements
• Thesis committee members
- Tomás Lozano-Pérez, Leslie Kaelbling, Bill Freeman
• Experimental Help
- LabelMe and detection system: Sam Davies
- Robot system: Kaijen Hsiao and Huan Liu
- Data collection: Meg A. Lippow and Sarah Finney
- Stereo vision: Tom Yeh and Sybor Wang
- Others: David Huynh, Yushi Xu, and Hung-An Chang
• All LIS people
• My parents and my wife, Ju-Hui

Thank you!