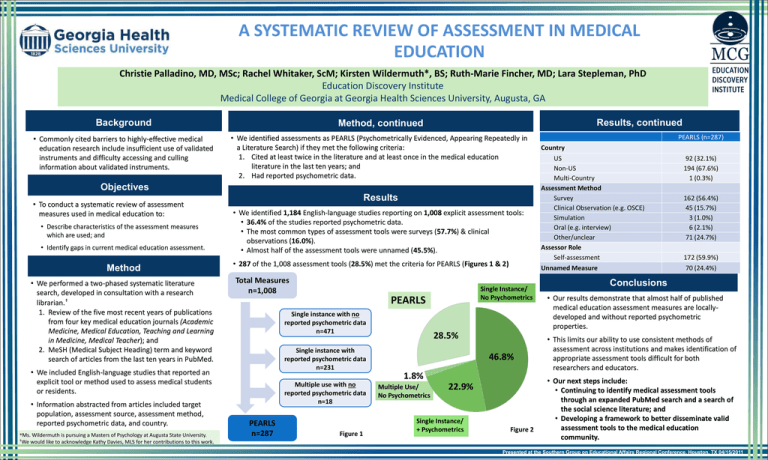

Systematic Review of Assessment in Medical Education

A SYSTEMATIC REVIEW OF ASSESSMENT IN MEDICAL EDUCATION

Christie Palladino, MD, MSc; Rachel Whitaker, ScM; Kirsten Wildermuth*, BS; Ruth-Marie Fincher, MD; Lara Stepleman, PhD

Education Discovery Institute, Medical College of Georgia at Georgia Health Sciences University, Augusta, GA

Background

• Commonly cited barriers to highly effective medical education research include insufficient use of validated instruments and difficulty accessing and culling information about validated instruments.

Objectives

• To conduct a systematic review of assessment measures used in medical education to:
  • Describe characteristics of the assessment measures that are used; and
  • Identify gaps in current medical education assessment.

Method

• We performed a two-phased systematic literature search, developed in consultation with a research librarian:†
  1. Review of the five most recent years of publications from four key medical education journals (Academic Medicine, Medical Education, Teaching and Learning in Medicine, Medical Teacher); and
  2. MeSH (Medical Subject Heading) term and keyword search of articles from the last ten years in PubMed.
• We included English-language studies that reported an explicit tool or method used to assess medical students or residents.
• Information abstracted from articles included target population, assessment source, assessment method, reported psychometric data, and country.
• We identified assessments as PEARLS (Psychometrically Evidenced, Appearing Repeatedly in a Literature Search) if they met the following criteria:
  1. Cited at least twice in the literature and at least once in the medical education literature in the last ten years; and
  2. Had reported psychometric data.

Results

• We identified 1,184 English-language studies reporting on 1,008 explicit assessment tools:
  • 36.4% of the studies reported psychometric data.
  • The most common types of assessment tools were surveys (57.7%) and clinical observations (16.0%).
  • Almost half of the assessment tools were unnamed (45.5%).
• 287 of the 1,008 assessment tools (28.5%) met the criteria for PEARLS (Figures 1 & 2).

Figure 1. Total measures (n=1,008)

| Category | n | % |
|---|---|---|
| PEARLS | 287 | 28.5% |
| Single instance with reported psychometric data | 231 | 22.9% |
| Single instance with no reported psychometric data | 471 | 46.8% |
| Multiple use with no reported psychometric data | 18 | 1.8% |

Figure 2. PEARLS (n=287)

| Characteristic | n (%) |
|---|---|
| Country: US | 92 (32.1%) |
| Country: Non-US | 194 (67.6%) |
| Country: Multi-Country | 1 (0.3%) |
| Assessment Method: Survey | 162 (56.4%) |
| Assessment Method: Clinical Observation (e.g. OSCE) | 45 (15.7%) |
| Assessment Method: Simulation | 3 (1.0%) |
| Assessment Method: Oral (e.g. interview) | 6 (2.1%) |
| Assessment Method: Other/unclear | 71 (24.7%) |
| Assessor Role: Self-assessment | 172 (59.9%) |
| Unnamed Measure | 70 (24.4%) |

Conclusions

• Our results demonstrate that almost half of published medical education assessment measures are locally developed and lack reported psychometric properties.
• This limits our ability to use consistent methods of assessment across institutions and makes identification of appropriate assessment tools difficult for both researchers and educators.
• Our next steps include:
  • Continuing to identify medical assessment tools through an expanded PubMed search and a search of the social science literature; and
  • Developing a framework to better disseminate valid assessment tools to the medical education community.

*Ms. Wildermuth is pursuing a Masters of Psychology at Augusta State University.
†We would like to acknowledge Kathy Davies, MLS for her contributions to this work.

Presented at the Southern Group on Educational Affairs Regional Conference, Houston, TX 04/15/2011
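
The PEARLS criteria and the Figure 1 categories can be read as a simple decision rule. The following is a minimal sketch of that rule, not the authors' actual procedure: the `Assessment` record, its field names, and the `figure1_category` helper are hypothetical, and the sketch assumes "multiple use" means at least two citations found in the search.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """Hypothetical record for one assessment tool found in the search."""
    name: str
    total_citations: int         # times the tool was cited in the literature
    med_ed_citations_10y: int    # citations in medical education literature, last ten years
    has_psychometric_data: bool  # whether any psychometric data were reported


def is_pearl(a: Assessment) -> bool:
    """Apply the two PEARLS criteria stated in the Method section."""
    cited_repeatedly = a.total_citations >= 2 and a.med_ed_citations_10y >= 1
    return cited_repeatedly and a.has_psychometric_data


def figure1_category(a: Assessment) -> str:
    """Assign one of the four Figure 1 categories (assumed mapping)."""
    if is_pearl(a):
        return "PEARLS"
    multiple_use = a.total_citations >= 2  # assumption: "multiple use" = cited twice or more
    if multiple_use and not a.has_psychometric_data:
        return "Multiple use with no reported psychometric data"
    if not multiple_use and a.has_psychometric_data:
        return "Single instance with reported psychometric data"
    if not multiple_use:
        return "Single instance with no reported psychometric data"
    # Cited repeatedly with psychometric data but outside the ten-year
    # medical education window; the poster does not describe this case.
    return "Unclassified"
```

For example, a tool cited three times, at least once in recent medical education literature, with reported psychometric data would be labeled `"PEARLS"`, while a tool cited once with no psychometric data would fall into the largest Figure 1 category.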