P6 and NetBurst Microarchitecture - ECE Users Pages


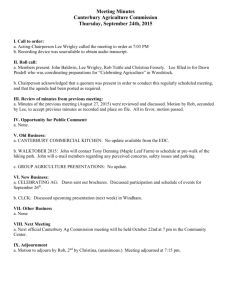

ECE4100/6100 H-H. S. Lee
ECE4100/6100 Guest Lecture: P6 & NetBurst Microarchitecture
Prof. Hsien-Hsin Sean Lee, School of ECE, Georgia Institute of Technology, February 11, 2003

Why study P6 from the last millennium?
- A paradigm shift from Pentium: a RISC core disguised as a CISC
- Huge market success: the microarchitecture, and the stock price
- Architected by former VLIW and RISC folks
  - Multiflow (pioneer of VLIW architecture for superminicomputers)
  - Intel i960 (Intel's RISC for graphics and embedded controllers)
- NetBurst (P4's microarchitecture) is based on P6

P6 Basics
- One implementation of the IA-32 architecture
- Super-pipelined processor
- 3-way superscalar
- In-order front end and back end
- Dynamic execution engine (restricted dataflow)
- Speculative execution
- P6 microarchitecture family processors include
  - Pentium Pro
  - Pentium II (PPro + MMX + 2x caches: 16KB I / 16KB D)
  - Pentium III (P-II + SSE + enhanced MMX, e.g. PSAD)
  - Celeron (without MP support)
  - Later P-II/P-III/Celeron all have an on-die L2 cache

x86 Platform Architecture
[Figure: the host processor (P6 core with an SRAM L1 cache, plus a back-side SRAM L2 cache, on-die or on-package) connects over the front-side bus to the chipset. The MCH links system memory (DRAM) and, over AGP, the graphics processor (GPU) with its local frame buffer; the ICH links PCI, USB, and other I/O.]

Pentium III Die Map
[Figure: Pentium III die map, with these block labels:]
- EBL/BBL - External/Backside Bus Logic
- MOB - Memory Order Buffer
- Packed FPU - Floating Point Unit for SSE
- IEU - Integer Execution Unit
- FAU - Floating Point Arithmetic Unit
- MIU - Memory Interface Unit
- DCU - Data Cache Unit (L1)
- PMH - Page Miss Handler
- DTLB - Data TLB
- BAC - Branch Address Calculator
- RAT - Register Alias Table
- SIMD - Packed Floating Point unit
- RS - Reservation Station
- BTB - Branch Target Buffer
- TAP - Test Access Port
- IFU - Instruction Fetch Unit and L1 I-Cache
- ID - Instruction Decode
- ROB - Reorder Buffer
- MS - Micro-instruction Sequencer
ISA Enhancements (on top of Pentium)
- CMOVcc / FCMOVcc r, r/m
  - Conditional move (predicated move) instructions
  - Based on the condition code (cc)
- FCOMI/P: compare FP stack and set integer flags
- RDPMC/RDTSC instructions
- Uncacheable Speculative Write-Combining (USWC): a weakly ordered memory type for graphics memory
- MMX in Pentium II
  - SIMD integer operations
- SSE in Pentium III
  - Prefetches (non-temporal nta + temporal t0, t1, t2), sfence
  - SIMD single-precision FP operations

P6 Pipelining
[Figure: P6 pipeline. In-order front end at stages 11-17 (Next IP, I-Cache, ILD, Rotate, Dec1, Dec2, Br Dec; IFU1-IFU3, DEC1, DEC2) up to the front-end in-order boundary; RAT, IDQ, and RS write at stages 20-22; after an RS scheduling delay, RS schedule, dispatch, and execute at stages 31-33 for the single-cycle pipeline, with a multi-cycle pipeline (Exec2 ... Exec n), a non-blocking memory pipeline (AGU, DCache1, DCache2), and a blocking memory pipeline (MOB block, MOB write, MOB wakeup after a MOB scheduling delay) branching off; writeback at 81 (Mem/FP WB), 82 (Int WB), and 83 (Data WB); after a ROB scheduling delay, in-order retirement at 91-93 (ROB read, RRF write, retirement pointer write).]

P6 Microarchitecture
[Figure: P6 block diagram grouped into clusters. Instruction Fetch Cluster: instruction fetch unit, BTB/BAC (control flow). Issue Cluster: instruction decoder, microcode sequencer, register alias table, allocator. Out-of-order Cluster, a (restricted) data flow engine: reservation station, ROB & retire RF, two IEU/JEUs, FEU, MMX, AGU, MIU. Memory Cluster: memory order buffer, data cache unit (L1). Bus Cluster: bus interface unit to the external bus at the chip boundary.]
Instruction Fetching Unit
[Figure: IFU block diagram: next-PC mux, instruction TLB, instruction cache with a streaming buffer and a victim cache, instruction length decoder (ILD) producing length marks, branch target buffer producing prediction marks, and an instruction rotator feeding the instruction buffer, with the number of bytes consumed by ID fed back.]
- IFU1: initiate fetch, requesting 16 bytes at a time
- IFU2: the instruction length decoder marks instruction boundaries; the BTB makes its prediction
- IFU3: align instructions to the 3 decoders in 4-1-1 format

Dynamic Branch Prediction
[Figure: 512-entry BTB; each entry has a 4-bit branch history register (BHR), updated speculatively, that indexes a pattern history table (PHT, entries 0000-1111; tables W0-W3) of 2-bit saturating counters trained with the branch result.]
- Similar to a 2-level PAs design
- History associated with each BTB entry
- With a 16-entry Return Stack Buffer
- 4 branch predictions per cycle (due to the 16-byte fetch per cycle)
- Static prediction is provided by the Branch Address Calculator (BAC) when the BTB misses (see Static Branch Prediction)

Static Branch Prediction
- BTB hit: use the BTB's decision
- BTB miss, PC-relative branch:
  - Unconditional: taken
  - Conditional, backward: taken
  - Conditional, forward: not taken
- BTB miss, not PC-relative:
  - Return: taken
  - Indirect jump: taken

x86 Instruction Decode
[Figure: IFU3 feeds a 4-1-1 decoder: one complex decoder (1-4 μops) and two simple decoders (1 μop each), plus the microinstruction sequencer (MS), all writing into an instruction decoder queue of 6 μops.]
- Decode rate depends on instruction alignment
- DEC1: translate x86 instructions into micro-operations (μops)
- DEC2: move decoded μops to the ID queue
- The MS performs translations either by
  - generating the entire μop sequence from the microcode ROM, or
  - receiving 4 μops from the complex decoder and the rest from the microcode ROM

  Next 3 inst.   # inst. decoded
  S,S,S          3
  S,S,C          first 2
  S,C,S          first 1
  S,C,C          first 1
  C,S,S          3
  C,S,C          first 2
  C,C,S          first 1
  C,C,C          first 1
  (S: simple, C: complex)
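The dynamic predictor described above can be sketched as a per-address two-level scheme. This is a toy model, not Intel's design. It makes four assumptions. First, each branch PC gets its own 4-bit history, standing in for the history kept with a BTB entry. Second, that history indexes one of four 16-entry tables of 2-bit saturating counters, chosen by the low PC bits; this is a hypothetical stand-in for W0-W3. Third, history and counters are updated only when the branch resolves, whereas P6 updates history speculatively. Fourth, BTB capacity (512 entries) and the return stack buffer are not modeled. The names `TwoLevelPredictor` and `count_mispredictions` are illustrative.

```python
# Toy per-address two-level branch predictor (PAs-style). Assumptions (not
# Intel's exact design): 4-bit per-branch history, 4 PHTs picked by low PC
# bits, non-speculative update when the branch resolves.

HISTORY_BITS = 4
NUM_PHTS = 4  # hypothetical stand-in for the W0-W3 tables


class TwoLevelPredictor:
    def __init__(self, history_bits: int = HISTORY_BITS, num_phts: int = NUM_PHTS):
        self.mask = (1 << history_bits) - 1
        self.num_phts = num_phts
        self.bhr = {}  # branch PC -> history register, newest outcome in bit 0
        # 2-bit saturating counters start weakly not-taken (1); 2 and 3 mean taken.
        self.phts = [[1] * (1 << history_bits) for _ in range(num_phts)]

    def _lookup(self, pc: int):
        history = self.bhr.get(pc, 0)
        return self.phts[pc % self.num_phts], history

    def predict(self, pc: int) -> bool:
        pht, history = self._lookup(pc)
        return pht[history] >= 2

    def update(self, pc: int, taken: bool) -> None:
        pht, history = self._lookup(pc)
        # Move the counter toward 3 on taken, toward 0 on not-taken (saturating).
        if taken:
            pht[history] = min(3, pht[history] + 1)
        else:
            pht[history] = max(0, pht[history] - 1)
        # Shift the resolved outcome into this branch's history register.
        self.bhr[pc] = ((history << 1) | int(taken)) & self.mask


def count_mispredictions(pred: TwoLevelPredictor, pc: int, outcomes) -> int:
    misses = 0
    for taken in outcomes:
        if pred.predict(pc) != taken:
            misses += 1
        pred.update(pc, taken)
    return misses


loop_branch = [True, True, True, False] * 25  # inner loop with 4 iterations
p = TwoLevelPredictor()
print(count_mispredictions(p, 0x401000, loop_branch))  # warm-up pass: 6
print(count_mispredictions(p, 0x401000, loop_branch))  # trained pass: 0
```

On this loop branch, the predictor mispredicts only while warming up. After that, each of the four recurring 4-bit histories has its own trained counter, so the loop exit is predicted correctly. A single 2-bit counter without history would keep missing once per loop exit.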
Allocator
- The interface between the in-order and out-of-order pipelines
- Allocates μops "3-or-none" per cycle into the RS and ROB
- Allocates "all-or-none" in the MOB (LB and SB)
- Generates the physical destination (Pdst) from the ROB and passes it to the Register Alias Table (RAT)
- Stalls upon a shortage of resources

Register Alias Table (RAT)
[Figure: the integer RAT array and the FP RAT array (with FP TOS adjust) take logical sources from the in-order queue and produce physical sources (PSrc), with int and FP overrides and physical ROB pointers supplied by the allocator. Renaming example: each RAT entry holds an RRF bit and a PSrc; e.g. EAX, with RRF bit 0, maps to ROB entry 25.]
- Register renaming for the 8 integer registers, the 8 floating-point (stack) registers, and flags: 3 μops per cycle
- 40 80-bit physical registers embedded in the ROB (hence 6 bits to specify a PSrc, since 2^6 = 64 >= 40)
- The RAT looks up physical ROB locations for renamed sources based on the RRF bit

Partial Register Width Renaming
[Figure: the integer RAT array is split into an INT low bank (32b/16b/L) of 8 entries and an INT high bank (H) of 4 entries; each entry holds PSrc (6 bits), RRF (1 bit), and Size (2 bits). Example: op0: MOV AL = (a); op1: MOV AH = (b); op2: ADD AL = (c); op3: ADD AH = (d).]
- 32/16-bit accesses: read from the low bank, write to both banks
- 8-bit RAT accesses: depend on which bank is being written

Partial Stalls due to RAT
- Partial register stall:
    MOVB AL, m8
    ADD  EAX, m32   ; stall
- Idiom fix (1):
    XOR  EAX, EAX
    MOVB AL, m8
    ADD  EAX, m32   ; no stall
- Idiom fix (2):
    SUB  EAX, EAX
    MOVB AL, m8
    ADD  EAX, m32   ; no stall
- Partial flag stall (1): JBE reads both ZF and CF, while INC writes ZF, OF, SF, AF, and PF (but not CF)
    CMP  EAX, EBX
    INC  ECX
    JBE  XX         ; stall
- Partial flag stall (2): LAHF loads the low byte of EFLAGS
    TEST EBX, EBX
    LAHF            ; stall
- Partial register stalls occur when a write to a smaller (e.g. 8/16-bit) register is followed by a read of a larger (e.g. 32-bit) one
- Partial flag stalls occur when an instruction reads more flags than the prior unretired flag-writing instruction wrote

Reservation Stations
[Figure: the RS dispatches through 5 ports. Port 0: IEU0, Fadd, Fmul, Imul, Div, Pfmul (writeback bus 0). Port 1: IEU1, JEU, Pfadd, Pfshuf (writeback bus 1). Port 2: AGU0, load address (LDA) to the MOB, which returns loaded data. Port 3: AGU1, store address (STA). Port 4: store data (STD) to the DCU. The ROB writes retired data to the RRF.]
- Gateway to execution: binds at most 5 μops per cycle, one to each port
- A 20-entry μop buffer bridging the in-order and out-of-order engines
- RS fields include the μop opcode, data valid bits, Pdst, Psrc, source data, BrPred, etc.
- Oldest-first FIFO scheduling when multiple μops are ready in the same cycle

ReOrder Buffer
[Figure: ALLOC, RAT, RS, ROB, and RRF, with exceptions routed to the MS as code assists.]
- A 40-entry circular buffer
  - Similar to that described in [SmithPleszkun85]
  - 157 bits wide
  - Provides 40 alias physical registers
- Out-of-order completion
- Exceptions are deposited in each entry
- Retirement (or de-allocation)
  - After resolving prior speculation
  - Handles exceptions through the MS
  - Clears OOO state when a mispredicted branch or an exception is detected
  - 3 μops per cycle, in program order
  - For multi-μop x86 instructions: none or all (atomic)

Memory Execution Cluster
[Figure: the RS/ROB issue LD, STA, and STD μops to the memory cluster blocks: load buffer, store buffer, DTLB, fill buffers (FB), DCU, and external bus logic (EBL).]
- Manages data memory accesses
- Address translation
- Detects violations of access ordering
- Fill buffers in the DCU (similar to MSHRs [Kroft'81]) handle cache misses (non-blocking)
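The RAT/ROB interplay described above can be sketched in Python. This sketch rests on three assumptions. First, only four integer registers are modeled. Second, one μop is renamed or retired per call, whereas P6 handles 3 per cycle. Third, a RAT entry of `None` plays the role of the RRF bit, meaning "the value lives in the RRF". The class and method names (`RenameUnit`, `rename`, `writeback`, `retire`) are hypothetical.

```python
# Minimal sketch of P6-style renaming: a RAT entry points either at the
# retirement register file (None) or at the ROB entry producing the value.
from dataclasses import dataclass
from typing import List, Optional, Tuple

ROB_SIZE = 40  # P6 ROB entries, allocated sequentially in a circular buffer


@dataclass
class RobEntry:
    logical_dst: str
    value: Optional[int] = None  # filled in at writeback (out of order)


class RenameUnit:
    def __init__(self, regs=("EAX", "EBX", "ECX", "EDX")):
        self.rrf = {r: 0 for r in regs}     # committed architectural values
        self.rat = {r: None for r in regs}  # None: latest value is in the RRF
        self.rob: List[Optional[RobEntry]] = [None] * ROB_SIZE
        self.head = 0   # oldest in-flight uop (next to retire)
        self.tail = 0   # next free ROB entry
        self.count = 0

    def rename(self, dst: str, srcs: List[str]) -> Tuple[int, List[Tuple[str, object]]]:
        if self.count == ROB_SIZE:
            raise RuntimeError("ROB full: allocator stalls")
        # Look up sources BEFORE remapping the destination (e.g. ADD EAX, EAX).
        psrcs = [("ROB", self.rat[s]) if self.rat[s] is not None else ("RRF", s)
                 for s in srcs]
        pdst = self.tail
        self.rob[pdst] = RobEntry(dst)
        self.tail = (self.tail + 1) % ROB_SIZE
        self.count += 1
        self.rat[dst] = pdst
        return pdst, psrcs

    def writeback(self, pdst: int, value: int) -> None:
        self.rob[pdst].value = value

    def retire(self) -> bool:
        entry = self.rob[self.head]
        if entry is None or entry.value is None:
            return False  # oldest uop not finished: retirement stays in order
        self.rrf[entry.logical_dst] = entry.value
        # Point the RAT back at the RRF only if no younger uop renamed this register.
        if self.rat[entry.logical_dst] == self.head:
            self.rat[entry.logical_dst] = None
        self.rob[self.head] = None
        self.head = (self.head + 1) % ROB_SIZE
        self.count -= 1
        return True


ru = RenameUnit()
print(ru.rename("EAX", []))              # MOV EAX, imm -> (0, [])
print(ru.rename("EBX", ["EAX"]))         # MOV EBX, EAX -> (1, [('ROB', 0)])
print(ru.rename("EAX", ["EAX", "EBX"]))  # ADD EAX, EBX -> (2, [('ROB', 0), ('ROB', 1)])
```

Retiring ROB entry 0 copies its value into the RRF. However, it leaves EAX mapped to entry 2, because a younger μop has renamed EAX in the meantime. That check lets the RAT point back at the RRF only when the retiring value is the latest one.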
Memory Order Buffer (MOB)
- Allocated by ALLOC
- A second-order RS for memory operations
- 1 μop for a load; 2 μops for a store: Store Address (STA) and Store Data (STD)
- MOB
  - 16-entry load buffer (LB)
  - 12-entry store address buffer (SAB)
  - The SAB works in unison with
    - the Store Data Buffer (SDB) in the MIU
    - the Physical Address Buffer (PAB) in the DCU
  - Store Buffer (SB): SAB + SDB + PAB
- Senior stores
  - Once the STD/STA have retired from the ROB, the SB marks the store "senior"
  - Senior stores are committed to memory in program order when the bus is idle or the SB is full
- Prefetch instructions in P-III
  - Senior-load behavior
  - Because they have no explicit architectural destination

Store Coloring
  x86 instruction       μops       store color
  mov (0x1220), ebx     std, sta   2, 2
  mov (0x1110), eax     std, sta   3, 3
  mov ecx, (0x1220)     ld         3
  mov edx, (0x1280)     ld         3
  mov (0x1400), edx     std, sta   4, 4
  mov edx, (0x1380)     ld         4
- ALLOC assigns Store Buffer IDs (SBIDs) in program order
- ALLOC tags each load with the most recent SBID
- Loads are checked against stores with equal or older (lower) SBIDs for potential address conflicts
- The SDB forwards data if a conflict is detected (e.g. the load from 0x1220 receives ebx)

Memory Type Range Registers (MTRR)
- Control registers written by the system (OS)
- Supported memory types
  - UnCacheable (UC)
  - Uncacheable Speculative Write-Combining (USWC or WC): uses a fill buffer entry as the WC buffer
  - WriteBack (WB)
  - Write-Through (WT)
  - Write-Protected (WP), e.g. to support copy-on-write in UNIX, which saves memory by letting child processes share pages with their parents and creates new pages only when a child attempts to write
- Page Miss Handler (PMH)
  - Looks up the MTRRs while supplying physical addresses
  - Returns memory types and physical addresses to the DTLB
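Store coloring can be sketched by replaying the store-coloring instruction sequence. This sketch makes three assumptions. First, addresses match only when they are exactly equal; real hardware must also detect partially overlapping accesses. Second, the starting SBID of 1 is hypothetical and chosen so the first store gets color 2, as in the table. Third, the names `ColoredStoreBuffer`, `allocate_store`, `allocate_load`, and `forward` are illustrative, not Intel's.

```python
# Sketch of P6-style store coloring with exact-address matching only.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoreEntry:
    sbid: int
    addr: int
    data: str


class ColoredStoreBuffer:
    def __init__(self, last_sbid: int = 1):  # hypothetical starting SBID
        self.last_sbid = last_sbid  # SBID of the most recently allocated store
        self.entries: List[StoreEntry] = []

    def allocate_store(self, addr: int, data: str) -> int:
        # ALLOC hands out SBIDs in program order.
        self.last_sbid += 1
        self.entries.append(StoreEntry(self.last_sbid, addr, data))
        return self.last_sbid

    def allocate_load(self) -> int:
        # A load takes the most recent SBID as its color: every store with
        # SBID <= color is older than the load in program order.
        return self.last_sbid

    def forward(self, addr: int, color: int) -> Optional[str]:
        older = [e for e in self.entries if e.sbid <= color and e.addr == addr]
        if not older:
            return None  # no conflict: the load reads the data cache
        return max(older, key=lambda e: e.sbid).data  # youngest older store wins


sb = ColoredStoreBuffer()
sb.allocate_store(0x1220, "ebx")  # mov (0x1220), ebx -> color 2
sb.allocate_store(0x1110, "eax")  # mov (0x1110), eax -> color 3
c = sb.allocate_load()            # mov ecx, (0x1220) -> color 3
print(sb.forward(0x1220, c))      # ebx (forwarded from the SDB)
```

A load colored 3 never forwards from a store colored 4, even to the same address, because that store is younger in program order.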
Intel NetBurst Microarchitecture
- Pentium 4's microarchitecture, a post-P6 new generation
- Original target market: graphics workstations, but... the major competitor screwed itself up...
- Design goals: performance, performance, performance, ...
  - Unprecedented multimedia/floating-point performance
    - Streaming SIMD Extensions 2 (SSE2)
  - Reduced CPI
    - Low-latency instructions
    - High-bandwidth instruction fetching
    - Rapid execution of arithmetic & logic operations
  - Reduced clock period
    - New pipeline designed for scalability

Innovations Beyond P6
- Hyperpipelined technology
- Streaming SIMD Extensions 2
- Enhanced branch predictor
- Execution trace cache
- Rapid execution engine
- Advanced Transfer Cache
- Hyper-Threading Technology (in Xeon and Xeon MP)

Pentium 4 Fact Sheet
- Fully backward compatible with IA-32
- Available at speeds ranging from 1.3 to ~3 GHz
- Hyperpipelined (20+ stages)
- 42+ million transistors
- 0.18 μm for 1.7 to 1.9 GHz; 0.13 μm for 1.8 to 2.8 GHz
- Die size of 217 mm²
- Consumes 55 W at 1.5 GHz
- 400 MHz (850 chipset) and 533 MHz (850E chipset) system bus
- 512KB or 256KB 8-way full-speed on-die L2 Advanced Transfer Cache (up to 89.6 GB/s to L1 at 2.8 GHz)
- 1MB or 512KB L3 cache (in Xeon MP)
- 144 new 128-bit SIMD instructions (SSE2)
- Hyper-Threading Technology (only enabled in Xeon and Xeon MP)

Recent Intel IA-32 Processors
[Figure: recent Intel IA-32 processors]

Building Blocks of NetBurst
[Figure: front end (fetch/decode, execution trace cache (ETC), μROM, BTB/branch prediction); out-of-order engine (OOO logic, retire, branch history update); execution units (INT and FP); memory subsystem (L1 data cache, level 2 cache, bus unit to the system bus).]
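The bandwidth figures quoted for the Pentium 4 follow from width × transfers per clock × clock rate. The small check below makes two assumptions. The L2-to-L1 path is taken as 256 bits (32 bytes) per core clock, matching the 48 GB/s at 1.5 GHz figure in the block diagram. The system bus is taken as 64 bits (8 bytes) wide, quad-pumped from a 100 MHz or 133.33 MHz base clock, which is the usual reading of "400 MHz" and "533 MHz". The function name is hypothetical.

```python
def peak_bandwidth_gbs(bytes_per_transfer: int, transfers_per_clock: int,
                       clock_hz: float) -> float:
    """Peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return bytes_per_transfer * transfers_per_clock * clock_hz / 1e9


# L2 -> L1: assumed 32 bytes (256 bits) per core clock.
print(peak_bandwidth_gbs(32, 1, 2.8e9))              # 89.6
print(peak_bandwidth_gbs(32, 1, 1.5e9))              # 48.0
# System bus: 8 bytes wide, 4 transfers per base clock.
print(round(peak_bandwidth_gbs(8, 4, 100e6), 2))     # 3.2  ("400 MHz" bus)
print(round(peak_bandwidth_gbs(8, 4, 133.33e6), 2))  # 4.27 ("533 MHz" bus)
```

The 4.27 GB/s result is the "4.3 GB/sec" quoted for the 533 MHz bus, rounded to one decimal place.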
Pentium 4 Microarchitecture
[Figure: Pentium 4 block diagram.
Front end: BTB (4K entries) and I-TLB/prefetcher feeding the IA-32 decoder (64 bits wide) with its code ROM; execution trace cache with its own trace cache BTB (512 entries); μop queue.
Out-of-order engine: allocator/register renamer; memory μop queue and INT/FP μop queue; memory scheduler, fast scheduler, slow/general FP scheduler, and simple FP scheduler; integer register file/bypass network and FP register file/bypass network.
Execution units: two AGUs (load address, store address); two 2x (double-speed) ALUs for simple instructions; a slow ALU for complex instructions; FP/MMX/SSE/SSE2 unit; FP move unit.
Memory: L1 data cache (8KB 4-way, 64-byte lines, write-through, 1 read + 1 write port); unified L2 cache (256KB 8-way, 128-byte lines, write-back) linked to L1 by a 256-bit path delivering 48 GB/s at 1.5 GHz; bus interface unit on a 64-bit, quad-pumped 400/533 MHz system bus (3.2/4.3 GB/s).]

Pipeline Depth Evolution
- P5 microarchitecture: PREF, DEC, DEC, EXEC, WB
- P6 microarchitecture: IFU1, IFU2, IFU3, DEC1, DEC2, RAT, ROB, DIS, EX, RET1, RET2
- NetBurst microarchitecture: TC NextIP, TC Fetch, Drive, Alloc, Rename, Queue, Schedule, Dispatch, Reg File, Exec, Flags, Br Ck, Drive (20 stages in all; several of these steps occupy more than one stage)

Execution Trace Cache
- Primary first-level I-cache, replacing the conventional L1 I-cache
  - Decoding several x86 instructions per cycle at high frequency is difficult and takes several pipeline stages
  - The branch misprediction penalty is severe: 20 pipeline stages lost vs. 10 stages in P6
- Advantages
  - Caches post-decode μops
  - High-bandwidth instruction fetching
  - Eliminates x86 decoding overheads
  - Reduces branch recovery time on a TC hit
- Holds up to 12,000 μops
- 6 μops per trace line
- Many (?) trace lines in a single trace

Execution Trace Cache
- Delivers 3 μops per cycle to the OOO engine
- x86 instructions are read from L2 when the TC misses (7+ cycle latency)
- TC hit rate is comparable to that of an 8KB to 16KB conventional I-cache
- Simplified x86 decoder
  - Only one complex instruction per cycle
  - Instructions of more than 4 μops are executed by the microcode ROM (P6's MS)
- Branch prediction in the TC
  - 512-entry BTB + 16-entry RAS
  - Together with branch prediction in the x86 IFU, 1/3 fewer mispredictions than P6
  - Intel did not disclose the details of the BP algorithms used in the TC and the x86 IFU (dynamic + static)
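The trade-off between a deeper pipeline and a better predictor can be made concrete with a back-of-the-envelope model: cycles lost per instruction ≈ branch fraction × misprediction rate × penalty. The inputs below are hypothetical: 20% of instructions are branches, and P6 mispredicts 6% of them. The NetBurst rate applies the "1/3 fewer mispredictions" figure. The penalty is assumed to equal the pipeline depth quoted above (10 vs. 20 stages). The function name is illustrative.

```python
def mispredict_cpi(branch_fraction: float, mispredict_rate: float,
                   penalty_cycles: int) -> float:
    """Average cycles per instruction lost to branch mispredictions."""
    return branch_fraction * mispredict_rate * penalty_cycles


BRANCH_FRACTION = 0.20  # hypothetical: 1 in 5 instructions is a branch
P6_MISS_RATE = 0.06     # hypothetical P6 misprediction rate

p6 = mispredict_cpi(BRANCH_FRACTION, P6_MISS_RATE, penalty_cycles=10)
# NetBurst: 1/3 fewer mispredictions than P6, but a ~20-stage penalty.
netburst = mispredict_cpi(BRANCH_FRACTION, P6_MISS_RATE * 2 / 3, penalty_cycles=20)
print(f"P6: {p6:.3f}  NetBurst: {netburst:.3f} cycles lost per instruction")
```

Under these assumptions, NetBurst loses 0.16 cycles per instruction to mispredictions versus 0.12 for P6, despite its better predictor. Its cycles are also shorter, so the wall-clock comparison depends on the clock-rate ratio.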
Out-of-Order Engine
- Similar design philosophy to P6; uses
  - Allocator
  - Register Alias Table
  - 128 physical registers
  - 126-entry ReOrder Buffer
  - 48-entry load buffer
  - 24-entry store buffer

Register Renaming Schemes
- P6 register renaming: the RAT maps EAX, EBX, ECX, EDX, ESI, EDI, ESP, and EBP either to the RRF or to an entry of the 40-entry ROB, which holds both data and status and is allocated sequentially
- NetBurst register renaming: a front-end RAT and a retirement RAT map the same eight registers into a 128-entry register file (RF) that holds the data; the 126-entry ROB holds only status and is allocated sequentially

Micro-op Scheduling
- μop FIFO queues
  - Memory queue for loads and stores
  - Non-memory queue
- μop schedulers
  - Several schedulers fire instructions to execution (like P6's RS)
  - 4 distinct dispatch ports
  - Maximum dispatch: 6 μops per cycle (2 from each of the double-pumped fast ALUs on ports 0 and 1, plus 1 each from the load and store ports)
- Exec port 0
  - Fast ALU (2x pumped): add/sub, logic, store data, branches
  - FP move: FP/SSE move, FP/SSE store, FXCH
- Exec port 1
  - Fast ALU (2x pumped): add/sub
  - INT exec: shift, rotate
  - FP exec: FP/SSE add, FP/SSE mul, FP/SSE div, MMX
- Load port
  - Memory load: loads, LEA, prefetch
- Store port
  - Memory store: stores

Data Memory Accesses
- 8KB 4-way L1 + 256KB 8-way L2 (with a hardware prefetcher)
- Load-to-use speculation
  - Dependent instructions are dispatched before the load finishes
  - Needed because of the high frequency and deep pipeline
  - The scheduler assumes loads always hit in L1
  - On an L1 miss, dependent instructions that have already left the scheduler temporarily receive incorrect data (mis-speculation)
  - Replay logic re-executes the load when mis-speculated
  - Independent instructions are allowed to proceed
- Up to 4 outstanding load misses (= 4 fill buffers in the original P6)
- Store-to-load forwarding buffer
  - 24 entries
  - The store and the load must have the same starting physical address
  - Load data size <= store data size
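The store-to-load forwarding conditions listed under Data Memory Accesses can be written as a predicate. This sketch assumes little-endian byte order (as on x86) and models only the two stated conditions. What the hardware does when forwarding fails is not described here, so the function simply returns `None`. The function name is hypothetical.

```python
from typing import Optional


def forward_store_to_load(store_addr: int, store_size: int, store_data: int,
                          load_addr: int, load_size: int) -> Optional[int]:
    """Return the value a load receives from an older store, or None when the
    forwarding conditions are not met. Sizes are in bytes."""
    # Condition 1: same starting (physical) address.
    # Condition 2: the load reads no more bytes than the store wrote.
    if load_addr != store_addr or load_size > store_size:
        return None
    # Little-endian: the bytes at the starting address are the low-order bytes.
    return store_data & ((1 << (8 * load_size)) - 1)


print(hex(forward_store_to_load(0x1000, 4, 0x11223344, 0x1000, 2)))  # 0x3344
print(forward_store_to_load(0x1000, 4, 0x11223344, 0x1002, 2))       # None
print(forward_store_to_load(0x1000, 2, 0x3344, 0x1000, 4))           # None
```

The second call fails even though the 2-byte load lies entirely inside the 4-byte store. This shows how strict the "same starting address" condition is.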
Streaming SIMD Extension 2
- P-III SSE (Katmai New Instructions: KNI)
  - Eight 128-bit wide xmm registers (new architectural state)
  - Single-precision 128-bit SIMD FP
    - Four 32-bit FP operations in one instruction
    - Broken down into 2 μops for execution (ROB entries hold only 80-bit data)
  - 64-bit SIMD MMX (uses the 8 mm registers, which map onto the FP stack)
  - Prefetch (nta, t0, t1, t2) and sfence
- P4 SSE2 (Willamette New Instructions: WNI)
  - Double-precision 128-bit SIMD FP
    - Two 64-bit FP operations in one instruction
    - Throughput: 2 cycles for most SSE2 operations (exceptions: DIVPD and SQRTPD, 69 cycles, non-pipelined)
  - Enhanced 128-bit SIMD MMX using the xmm registers

Examples of Using SSE and SSE2
(Lanes are listed highest first; xmm1 holds X, xmm2 holds Y, and the result is written to xmm1.)
- SSE (xmm1 = X3 X2 X1 X0, xmm2 = Y3 Y2 Y1 Y0)
  - Packed SP FP operation (e.g. ADDPS xmm1, xmm2): X3 op Y3 | X2 op Y2 | X1 op Y1 | X0 op Y0
  - Scalar SP FP operation (e.g. ADDSS xmm1, xmm2): X3 | X2 | X1 | X0 op Y0
  - Shuffle FP operation with an 8-bit immediate (e.g. SHUFPS xmm1, xmm2, 0xf1): Y3 | Y3 | X0 | X1 (the two low lanes are selected from xmm1, the two high lanes from xmm2)
- SSE2 (xmm1 = X1 X0, xmm2 = Y1 Y0)
  - Packed DP FP operation (e.g. ADDPD xmm1, xmm2): X1 op Y1 | X0 op Y0
  - Scalar DP FP operation (e.g. ADDSD xmm1, xmm2): X1 | X0 op Y0
  - Shuffle DP FP operation (e.g. SHUFPD xmm1, xmm2, imm8, which uses only 2 immediate bits): (Y1 or Y0) | (X1 or X0)
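The lane semantics in the SSE/SSE2 examples can be modeled with Python lists, where index 0 is the lowest lane (X0/Y0). This sketch covers data movement only. Python floats are 64-bit, so single-precision rounding and FP exceptions are not modeled, and addition stands in for the generic "op". The helper names mirror the instruction mnemonics but are illustrative Python functions, not a real API. The `SHUFPS xmm1, xmm2, 0xf1` case reproduces the Y3 Y3 X0 X1 result from the examples.

```python
from typing import List, Sequence

# Lane 0 is the lowest-order element, matching X0/Y0 in the text.


def addps(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Packed: every lane is combined."""
    return [a + b for a, b in zip(x, y)]


def addss(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Scalar: only lane 0 is computed; the upper lanes of x pass through."""
    return [x[0] + y[0]] + list(x[1:])


def shufps(x: Sequence, y: Sequence, imm8: int) -> List:
    """Two 2-bit fields pick the low result lanes from x, two pick the high lanes from y."""
    return [x[imm8 & 3], x[(imm8 >> 2) & 3], y[(imm8 >> 4) & 3], y[(imm8 >> 6) & 3]]


def shufpd(x: Sequence, y: Sequence, imm8: int) -> List:
    """Bit 0 picks result lane 0 from x; bit 1 picks result lane 1 from y."""
    return [x[imm8 & 1], y[(imm8 >> 1) & 1]]


X = ["X0", "X1", "X2", "X3"]
Y = ["Y0", "Y1", "Y2", "Y3"]
print(shufps(X, Y, 0xF1)[::-1])  # highest lane first: ['Y3', 'Y3', 'X0', 'X1']
```

Decoding 0xf1 (binary 11 11 00 01) field by field gives lanes X1, X0, Y3, Y3 from lowest to highest. This is why only the low two result lanes can ever come from xmm1.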
HyperThreading
- In the Intel Xeon processor and Intel Xeon MP processor
- Enables Simultaneous Multi-Threading (SMT)
  - Exploits ILP through TLP (Thread-Level Parallelism)
  - Issues and executes multiple threads in the same snapshot
- A single P4 Xeon appears as 2 logical processors
- The logical processors share the same execution resources
- Architectural state is duplicated in hardware

Multithreading (MT) Paradigms
[Figure: issue slots (FU1-FU4) over execution time for threads 1-5 and unused slots, comparing a conventional single-threaded superscalar, fine-grained multithreading (cycle-by-cycle interleaving), coarse-grained multithreading (block interleaving), a chip multiprocessor (CMP), and simultaneous multithreading.]

More SMT Commercial Processors
- Intel Xeon Hyperthreading
  - Supports 2 replicated hardware contexts: PC (or IP) and architecture registers
  - New directions of usage
    - Helper (or assisted) threads (e.g. speculative precomputation)
    - Speculative multithreading
- Clearwater (once called Xtream logic): an 8-context SMT "network processor" designed by the DISC architect (company no longer exists)
- SUN: a 4-SMT-processor CMP?

Speculative Multithreading
- SMT can justify a wider-than-ILP datapath
- But the datapath is only fully utilized by multiple threads
- How can a single-thread program be sped up using multiple threads?
- What to do with spare resources?
  - Execute both sides of hard-to-predict branches
    - Eager execution or polypath execution
    - Dynamic predication
  - Send another thread to scout ahead and warm up caches & BTB
    - Speculative precomputation
    - Early branch resolution
  - Speculatively execute future work
    - Multiscalar or dynamic multithreading
    - e.g. start several loop iterations concurrently as different threads; if a data dependence is detected, redo the work
  - Run a dynamic compiler/optimizer on the side
  - Dynamic verification
    - DIVA or Slipstream Processor
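The issue-slot picture in the MT paradigms figure can be approximated with a toy count. All of the following assumptions are hypothetical. The machine is 4 wide. Each of two threads has a per-cycle count of ready independent instructions, and those counts do not change with issue decisions. Fine-grained MT selects threads round-robin, and SMT fills slots in fixed thread priority. Coarse-grained MT and CMP are not modeled, and the function name is illustrative.

```python
from typing import List


def issue_slots_used(ready: List[List[int]], width: int, policy: str) -> int:
    """ready[t][c]: independent instructions thread t could issue in cycle c.
    Toy model: ready counts do not depend on earlier issue decisions."""
    n_threads, n_cycles = len(ready), len(ready[0])
    used = 0
    for c in range(n_cycles):
        if policy == "single":    # conventional superscalar, thread 0 only
            used += min(width, ready[0][c])
        elif policy == "fine":    # one thread per cycle, round-robin
            used += min(width, ready[c % n_threads][c])
        elif policy == "smt":     # fill the cycle's slots from all threads
            free = width
            for t in range(n_threads):
                take = min(free, ready[t][c])
                used += take
                free -= take
        else:
            raise ValueError(f"unknown policy: {policy}")
    return used


ready = [[2, 0, 3, 1],   # thread 1
         [1, 2, 2, 4]]   # thread 2
for policy in ("single", "fine", "smt"):
    print(policy, issue_slots_used(ready, width=4, policy=policy), "of", 4 * 4)
```

With these inputs, the single thread fills 6 of 16 slots, cycle-by-cycle interleaving fills 11, and SMT fills 13. Interleaving removes fully idle cycles (vertical waste), while SMT also fills leftover slots within a cycle (horizontal waste).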