Hands-On Lab: Drilling Down into Domino Statistics

Andy Pedisich

Technotics

© 2013 Wellesley Information Services. All rights reserved.

What We’ll Cover …

• Using console commands to get statistics
• Calculating the space saved by using DAOS
• Working with a problem server’s surging CPU
• Setting up for spreadsheet based data drilling
• Comparing server load with average server transactions per hour
• Creating other charts using custom Statrep and spreadsheets
• Wrap-up

Perpetual Statistics

Domino servers constantly generate statistics

They track data at a surprising level of detail

On almost every aspect of server operations:

Agent manager

Mail and calendaring

The server’s platform

SMTP and Notes mail

LDAP

HTTP

Network

And lots more, too

Server Statistics Are Organized Hierarchically

Stats are gathered into major categories like these:

ADMINP, Agent, Calendar, Database, Disk, Domino, EVENT, HTTP, LDAP, Mail, Mem, Monitor, NET, Platform, POP3, Replica, Server, SMTP, Stats, Update

And then each one has a multitude of subcategories

Subcategories of Statistics

Here’s a snapshot from the Administrator client showing some of the statistical hierarchy

This gives you a snapshot of the stats on your server

Use Refresh to get another snapshot

Statistics Come in Basic Types

The basic types of statistics are:

Static stats that never change once the server is started

Snapshot stats that reflect what’s going on right now

Cumulative stats that grow from the moment the server is started

These stats are available to you for:

Your Domino servers

The platform your server is running on

Your network environment

Static Statistics

Statistics that don’t change usually represent the operating environment of the server

Server.Version.Notes = Release 8.5.3FP3

Server.Version.OS = Windows NT 5.0

Server.CPU.Type = Intel Pentium

Disk.D.Size = 71,847,784,448

Mem.PhysicalRAM = 527,433,728

Amazing Detail, Yours Free!

This includes OS platform, Domino version, RAM

Lots of information about disks in use

Platform.LogicalDisk.TotalNumofDisks = 3

Platform.LogicalDisk.2.AssignedName = E

Disk.C.Size = 80,023,715,840

And even Network Interface Card (NIC) information

Platform.Network.1.AdapterName = Intel[R] PRO_1000 MT Server Adapter

Platform.Network.2.AdapterName = Broadcom NetXtreme Gigabit Ethernet _2

Platform.Network.3.AdapterName = Broadcom NetXtreme Gigabit Ethernet

What Good Are These Static Stats?

Think these static stats aren’t helpful?

Guess again

They are extremely valuable

If you are collecting stats correctly from all your servers, you can take a pretty detailed server inventory

Without leaving your desk

From servers all around the world, just by looking at the data we’re going to collect in the Monitoring Results database

This database is also known by its filename: STATREP.NSF

Snapshot Statistics

Snapshot stats show what’s happening at the moment you ask for them

They are changing all the time

Disk.E.Free = 18,679,414,784

Server.Users = 280

Mem.Free = 433,614,848

MAIL.Waiting = 250

The best part about this is that you get lots of Domino-related stats you wouldn’t get by looking at the operating system’s performance tools

Cumulative Stats

Some stats are cumulative

They start counting from zero when you start the server

Server.Trans.Total = 31915

SMTP.MessagesProcessed = 966

Other stats, like averages and maximums, are calculated from the cumulative ones

Server.Users.Peak.Time = 02/21/2006 07:50:33 MST

Platform.Memory.PagesPerSec.Peak = 1,364.1

Resetting Statistics

Some of these cumulative stats can be reset using the following console command:

Set Statistics statisticname

You can’t use wildcards (*) with this argument!

Here’s an example of resetting a stat:

Set Stat Server.Trans.Total

Resets the Server.Trans.Total statistic to 0

You might want to reset this stat if:

You are starting to benchmark a new application

You are debugging an agent and want to see if it is more efficient after changes to its design

Platform Stats, Too

Platform stats vary widely from OS to OS

Getting platform stats from within Notes has great value

Track Domino server performance on an OS level even if your servers run on a variety of operating systems

For example, it’s very common to have a mix of AIX and Wintel servers

In a few minutes we’ll be discussing threshold tracking

You’ll be able to set notification thresholds universally from within Notes to track these platform stats

Getting to Platform Statistics

Domino releases 6, 7, and 8 track platform stats automatically

In earlier versions they had to be explicitly enabled and many times were disabled due to problems with servers crashing

These problems are gone

To see all platform stats, enter this console command:

Show stat platform

See Server Statistics

The quickest way to see all server stats is to enter this console command:

Show stat

Any place you can get to a console, you can access stats that can tell you a lot about the current state of the server

A SHOW STAT command gives you every statistic the Domino server has

Several hundred of them!

That’s really too many to deal with at once

Can I See That in a Smaller Size?

Get a better view of the stats showing just what you’re looking for using the asterisk wildcard

You can ask directly for the top level of the hierarchy

Show stat server

That shows all of the stat hierarchy under “server”

You Might Want Only Part of the Data

Getting a select list of just some of the stats under the top level requires the use of wildcards in your console commands

If you only want the Server.Users hierarchy, use the “*” wildcard

Show stat server.users.*
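Once console output has been copied out of the server console, the same kind of wildcard selection can be done offline. The following is a minimal Python sketch, assuming the output has the `Name = value` layout shown on the earlier slides. The function names `parse_show_stat` and `select_stats` are hypothetical, and the glob matching is Python's, which may not behave exactly like the Domino console's wildcard.

```python
from fnmatch import fnmatchcase


def parse_show_stat(console_text):
    """Parse 'Name = value' lines from captured console output into a dict."""
    stats = {}
    for line in console_text.splitlines():
        name, sep, value = line.partition(" = ")
        if not sep:
            continue  # skip blank lines and anything that is not a statistic
        stats[name.strip()] = value.strip()
    return stats


def select_stats(stats, pattern):
    """Return statistics whose names match a wildcard pattern, ignoring case."""
    wanted = pattern.lower()
    return {
        name: value
        for name, value in stats.items()
        if fnmatchcase(name.lower(), wanted)
    }


# Sample lines reuse values shown on the earlier slides
sample = """\
  Server.Users = 280
  Server.Users.Peak.Time = 02/21/2006 07:50:33 MST
  Server.Trans.Total = 31915
  MAIL.Waiting = 250
"""

stats = parse_show_stat(sample)
users = select_stats(stats, "server.users.*")
print(users)
```

Values are kept as strings here because the console mixes numbers, dates, and text.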


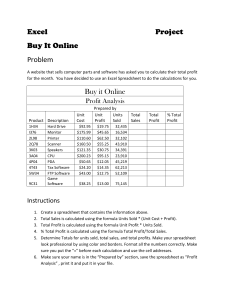

Let’s Have Some Fun With a Spreadsheet and DAOS

Domino Attachment and Object Service can save you disk space

When using DAOS, Domino saves attachments in an internal repository

If any other document in any other database has that same attachment, Domino saves a reference to the file

Attachments are only stored once on the server even though they might be used in hundreds of databases

That’s why DAOS saves disk space

But now that you’ve implemented DAOS, how much disk space have you saved?

Wouldn’t you like to know how much precious disk space you’ve saved?

How to Calculate DAOS Disk Space Savings

Your DAOS data must be on a separate drive outside of the data directory of the server to use this formula

Here’s the formula to determine DAOS disk space savings

DAOS Savings = Logical size of all databases – (Physical size of data directory + Physical size of DAOS directory)

You can get all three of these statistics using the Notes Administrator client

You have to dig a little to get them, but they are all there
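To make the arithmetic concrete, here is the same formula as a small Python function. It is only a sketch: the function name `daos_savings` is hypothetical and the byte counts are made-up numbers, not measurements from any server.

```python
def daos_savings(logical_total, physical_data_dir, physical_daos_dir):
    """Bytes saved: logical size minus what is physically stored in both places."""
    return logical_total - (physical_data_dir + physical_daos_dir)


# Made-up sizes in bytes, for illustration only
saved = daos_savings(
    logical_total=500_000_000_000,
    physical_data_dir=200_000_000_000,
    physical_daos_dir=120_000_000_000,
)
print(f"{saved:,} bytes saved")
```

All three inputs need to be in the same unit before they are combined.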

Using the Notes Administrator Client to Get These Stats

We need three things:

Logical size of all databases

Physical size of data directory

Physical size of DAOS directory

Go to the Notes Administrator client and select the Files tab

The file columns will list the logical and physical size on a per-file basis

But how can you get the totals for all the files?

Getting a List of All the Files

In the Notes Administrator Client using the Files tab, click on the All button on the top right of the screen

This will change the way the files on the server are displayed

You’ll no longer see folders

The Tree View Changes into a List of Every File

You’ll see a complete list of all files on the server

But you won’t see the sum of each column

This data needs to be taken into a spreadsheet for further analysis

Note in the screen shot that every directory is listed

Select All

Use the Edit menu to select all records being displayed on the Notes Administrator Client

Or you can select one of the records and press Ctrl+A

All the records will be selected

Copy the Records to the Clipboard

Use the Edit Copy menu or press Ctrl+C on the keyboard to copy all the records

Then open your spreadsheet

Paste into Your Spreadsheet

Use the Edit Paste menu sequence or press Ctrl+V to paste the contents of your clipboard

The contents of the Files tab, which listed the disk stats for all of the files on your server, will be pasted into the spreadsheet

Now we can use formulas to do some calculations

You Might Want to Widen a Few Columns

Widen the columns for Logical Size and Physical Size just to see what you are working with

Remember, you need the total Logical Size, which is how big the databases would be if all the attachments were contained in the databases

And you need the total Physical Size, which is how big the databases actually are

Add @Sum Formulas to Logical and Physical Columns

Use an @sum formula to total the disk size at the bottom of the logical size and physical size columns

You can also do this by going to the bottom of each of the columns and clicking the AutoSum option

This is an Office 2007 screen shot

You now have 2 of the 3 stats you need
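If a spreadsheet is not handy, the two column totals can also be computed directly from the copied text. This is a rough Python sketch, assuming the clipboard text is tab-separated with a header row, that the columns are titled `Logical Size` and `Physical Size`, and that sizes are whole numbers with optional thousands separators. The function name `column_totals` and the sample rows are hypothetical.

```python
import csv
import io


def column_totals(pasted_text, columns=("Logical Size", "Physical Size")):
    """Sum the named columns of tab-separated text that has a header row."""
    totals = {name: 0 for name in columns}
    reader = csv.DictReader(io.StringIO(pasted_text), delimiter="\t")
    for row in reader:
        for name in columns:
            # Strip thousands separators; empty cells are skipped
            cell = (row.get(name) or "").replace(",", "").strip()
            if cell:
                totals[name] += int(cell)
    return totals


# Invented rows standing in for the pasted Files tab listing
pasted = (
    "Title\tFilename\tLogical Size\tPhysical Size\n"
    "Mail A\tmail/a.nsf\t1,500,000\t900,000\n"
    "Mail B\tmail/b.nsf\t2,000,000\t1,100,000\n"
)

totals = column_totals(pasted)
print(totals)
```

The column titles in a real export may differ, so check the header row before relying on the defaults.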

You Need the Size of the DAOS Storage

To get the size of the DAOS storage, you have to query the statistics on the server

Do this by entering the following at the server console in the Notes Administrator client

Show stat disk.*

Among the disk statistics will be the free space and size of the disk that houses the DAOS store

Copy them to the clipboard

Using the Excel Import Wizard

If you are using Excel 2007 or later you can use Paste Special, and use the text import wizard to allow the disk size values to be pasted into cells

Otherwise you will have to copy the numbers out of the line of statistics you pasted in

Paste into the Spreadsheet

Paste them into the bottom of the spreadsheet

Use a formula to subtract the DAOS free space from the total DAOS space allocated

That will give you the space used by DAOS
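The subtraction itself is simple once the comma-formatted console values are turned into numbers. Here is a minimal Python sketch of that step. The helper names `to_bytes` and `space_used` are hypothetical, and the two values are borrowed from the earlier disk examples purely to show the arithmetic, as if they described one drive. The result only equals DAOS usage when the DAOS store is the only thing on that drive.

```python
def to_bytes(stat_value):
    """Convert a console value such as '71,847,784,448' to an integer."""
    return int(stat_value.replace(",", ""))


def space_used(size_value, free_value):
    """Used space on a drive: total size minus free space."""
    return to_bytes(size_value) - to_bytes(free_value)


# Illustrative values only, treated here as the size and free space of one drive
used = space_used("71,847,784,448", "18,679,414,784")
print(f"{used:,} bytes used")
```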

Create the Final Formula

Create a formula

DAOS Savings = Logical size of all databases – (Physical size of data directory + Physical size of DAOS directory)

It’s something great to show management


Dealing with Problematic Servers

Sometimes there are servers with issues that crop up

We would like to collect statistics for analysis from these systems more frequently than the standard statistics collection interval allows

If you try to add a second collection interval on a server you’ll get this:

Each Server Is Allowed to Collect Stats with Only One Interval

A server can only have one collection interval

You must create a 2nd collection document for another server

Don’t forget to add the “collect” task to servertasks= in NOTES.INI

Let’s look at a server that has CPU spikes

First we create a statistics collection document for a second server to take statistics from our problem server

Set the Collection Interval for Five Minutes

Set the collection interval to 5 minutes

Do not check any filters!!!

They tell the collector to ignore the statistics you checked

Note that stats are being logged to a database called ProblemServer.NSF

Used exclusively to track CPU util of Traveler task

Note that the data in this example has been fictionalized for effect

Create a Special View That Tracks CPU Utilization for Traveler

In this case it’s the Traveler CPU we want to track

We create a custom view for the collecting database that only has the server name, the time of collection, and the statistic called Platform.Process.Traveler.1.PctCpuUtil

This will be used to easily create a graph of the CPU activity

Collect the Data, Copy It as a Table from the Custom View

After collecting a week’s worth of data, we examine the CPU utilization

All the data in the view is selected using Ctrl+A

It is copied as a table

Copying views as a table is my favorite feature in Notes

A Monitoring Results template is posted on my web site

A URL to this template is included at the end of the presentation

Data Has Been Copied to a Spreadsheet

A simple paste of the data puts it into a spreadsheet where we are ready to turn it into a chart

Use the Tools in Your Spreadsheet to Create a Graph

Select the columns Collection Time and Traveler CPU

Create a graph from the data

In this example, a scatter chart type with smooth lines is being used

The Resulting Graph

This produces an excellent graph of the CPU utilization over a ten-day period with samples being taken at intervals of 5 minutes

And it took less than 5 minutes to make this chart

One adjustment was made to the x-axis formatting and the legend was removed
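A chart makes the spikes visible, but the same samples can also be scanned programmatically. The following Python sketch assumes the view data has already been loaded as pairs of collection time and percent CPU. The function name `find_spikes`, the 80 percent threshold, and the sample values are all hypothetical.

```python
from datetime import datetime


def find_spikes(samples, threshold_pct):
    """Return the (timestamp, pct) samples at or above the threshold."""
    return [(when, pct) for when, pct in samples if pct >= threshold_pct]


# Invented 5-minute samples of Platform.Process.Traveler.1.PctCpuUtil
samples = [
    (datetime(2013, 3, 4, 9, 0), 12.5),
    (datetime(2013, 3, 4, 9, 5), 14.0),
    (datetime(2013, 3, 4, 9, 10), 91.3),
    (datetime(2013, 3, 4, 9, 15), 88.7),
    (datetime(2013, 3, 4, 9, 20), 15.2),
]

spikes = find_spikes(samples, threshold_pct=80.0)
peak_time, peak_pct = max(samples, key=lambda sample: sample[1])

for when, pct in spikes:
    print(f"{when:%Y-%m-%d %H:%M}  {pct:.1f}%")
print(f"Peak: {peak_pct:.1f}% at {peak_time:%H:%M}")
```

A list of spike times like this can be lined up against agent schedules or log entries from the same period.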


The Statrep Template’s Only Export View

The default Lotus Statrep template’s Spreadsheet Export view just doesn’t seem to give us enough power

Pulling the data into Excel, then analyzing and graphing the data can often give you amazing insight into usage patterns

This information will be invaluable when:

Trying to consolidate servers

Troubleshooting performance issues

Everything Is Everywhere

Keep in mind that every single statistic that is generated is contained in every document in the Monitoring Results database

You just can’t see it all because it’s not in views or documents

And views are the most important place to have it because that’s where it gives you the ability to compare samples

And analyze trends

The Stats Are There, Now You See Them

There is a new, customized version of the Monitoring Results database

TechnoticsR85Statrep.ntf

It has all the views that are on the original Statrep

Plus over a dozen additional views to help you analyze the stats your servers generate

You can download this from my blog

www.andypedisich.com

Look for the link to Admin2013 resources

Analysis Tools

Let’s cover the basics of the Statrep views used in the data export process

And the special Excel spreadsheet that contains custom formulas

You Need a Better View of the Situation

The data export views are designed to be exported as CSV files

Each has key fields that are important to the export

Hour and Day generate an integer that represents the hour of the day and the day of the week

Hour 15 = 3:00 PM

Day 1 = Sunday, Day 7 = Saturday

These are used in hourly and daily calculations in pivot tables
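The Hour and Day numbering is easy to reproduce outside of Notes if you ever need to add those columns yourself. Here is a small Python sketch of the same convention (Hour 0 to 23, Day 1 = Sunday through 7 = Saturday). The function name `export_hour_and_day` is hypothetical, and this is not the formula used in the Statrep views.

```python
from datetime import datetime


def export_hour_and_day(collected):
    """Return (hour, day) where day runs from 1 = Sunday to 7 = Saturday."""
    hour = collected.hour
    # isoweekday() gives Monday = 1 ... Sunday = 7; shift so Sunday becomes 1
    day = collected.isoweekday() % 7 + 1
    return hour, day


sunday_afternoon = export_hour_and_day(datetime(2013, 3, 3, 15, 20))
saturday_midnight = export_hour_and_day(datetime(2013, 3, 9, 0, 0))
print(sunday_afternoon, saturday_midnight)
```

With this numbering, Monday through Friday are days 2 through 6.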

Export Views Are All Flat Views

Any view that is used for exporting data is flat, not categorized

This makes it easier to manipulate in pivot tables in Excel

There are columns in the export views that appear to have no data

They will be filled with a formula when brought into Excel

Formulas Are Already Available

There is a spreadsheet containing my formulas to help you develop charts for all of this data

It’s available on SocialBizUG.org

Master Formula XLS Stat Exports- Technotics -V 2-4.xls

The views and spreadsheet will all fit together in a few moments


Transactions Per Hour

This can be a very important statistic if you are thinking about consolidation

Use the same time span to sample all servers for the best results

It will allow you to compare apples to apples

And because all the export data contains a reference to the day of the week, you could select the data for Monday through Friday to get the significant averages

Examining Transactions

If a few servers are performing badly, you might want to know how many transactions they are processing

Especially if the servers have the same hardware

And if they have a similar number of mail users assigned

I want to compare these servers statistically

What I want to know is:

How many users are hitting these systems?

How many transactions are these servers being forced to make?

And I want to know these things on a PER HOUR basis

Start by Going to the Export Transactions/Users View

Analysis starts with Export Transactions/Users view

I don’t hesitate to add new views to Statrep

I don’t change the old ones, I just add new ones

Note that Trans/Total is a cumulative stat

And the Trans/Hour column is blank

We have a custom formula to apply to this column after the data is exported into MS Excel

Next, Export View to CSV File

I export the contents of the view to a CSV file

The file is always called DELME.CSV so I can find it

It’s overwritten each time I do an export

It’s a good idea to grab the View titles

The import is fast

Next, Open the Special Spreadsheet

Start Excel and open the spreadsheet containing the formulas to help you develop charts for all of this data

Master Formulas for Stat Exports- Technotics -V 2-4.xls

This is also a download from my blog

www.andypedisich.com

What’s in the Spreadsheet?

The spreadsheet contains the formulas that will help to break down server activity into per hour averages

Don’t worry about the #VALUE! errors

Then open the DELME.CSV file

We’re into Microsoft Excel for the Analysis

Next, we open the DELME.CSV in Excel

Excel knows we want to import it because it’s a CSV file

It opens quickly with no further prompts

The Data Is Now in Excel

The view brought it in sorted by Server and Collection Time

Remember, we’d like to see the number of transactions per hour

With the way this spreadsheet is set up, it’s pretty easy to construct a formula where we simply:

Subtract the last hour’s number of transactions from this hour’s transactions to get the number per hour

Transactions per hour = Current hour’s transactions – Last hour’s transactions

Tricky Calculations – Server Restarts and Stuff

Except sometimes when servers are restarted

Then the cumulative stats start over

Or when the next server starts being listed in the statistics

You have to be careful not to subtract without paying attention to these things

Special Formulas to the Rescue

To cope with the anomalies in the way the data is listed, I built a few fairly straightforward formulas you can use on your spreadsheets

They are in the master formula spreadsheet

Just copy the formula from its cell
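The formulas themselves live in the master spreadsheet and are not reproduced here. As a rough illustration of the same guard logic, here is a Python sketch that assumes rows are sorted by server and then collection time, and that samples are one hour apart. The function name `transactions_per_hour` and the sample numbers are hypothetical. It leaves a gap whenever a new server begins or the cumulative counter goes backwards after a restart.

```python
def transactions_per_hour(rows):
    """rows: (server, collection_time, cumulative_total), sorted by server then time.

    Returns one value per row; None where no valid difference exists.
    """
    results = []
    previous_server = None
    previous_total = None
    for server, _when, total in rows:
        if server != previous_server:
            results.append(None)   # first sample listed for this server
        elif total < previous_total:
            results.append(None)   # counter went backwards: server was restarted
        else:
            results.append(total - previous_total)
        previous_server, previous_total = server, total
    return results


# Invented samples of Server.Trans.Total
rows = [
    ("ServerA", "09:00", 31000),
    ("ServerA", "10:00", 31915),
    ("ServerA", "11:00", 400),     # restart: cumulative stat started over
    ("ServerA", "12:00", 1250),
    ("ServerB", "09:00", 50000),
    ("ServerB", "10:00", 52500),
]

per_hour = transactions_per_hour(rows)
print(per_hour)
```

Leaving gaps rather than zeros keeps restarts from dragging down the hourly averages later on.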

Insert the Copied Cells

Move to the delme.csv spreadsheet

Then use the Insert menu to insert the copied cells into your spreadsheet

Move the cells to the right or down to get them out of the way

You’ll be copying the proper formula into your spreadsheet

Copy that formula down your entire column of data

Save your spreadsheet as an XLS

Copy That Cell Down

We’re going to make a Pivot Table with our data

The Pivot Table will take our data and let us easily manipulate it and graph it

Select all the data, including the column titles, and use the menu to select PivotTable and PivotChart Report

Take Defaults

If you’re new at this, just take the default answers for the questions Excel asks

The End of the World as You Know It

It drops you into the Pivot Table function where you have a field list to drag and drop into the table

Drag Server to the Column Top

Drag Server to the column top and Hour to the row names column

Drag the Data to the Center of the Table

Drag the data you want to the table itself

It defaults to the “Count of Trans/Hour”

But you’ll want to change it to Average, and format it to look nice, too

There You Have It

You now have a nice breakdown of the average number of transactions per hour, per server
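For readers who want to check the pivot table's numbers, the same grouping and averaging can be sketched in a few lines of Python. This assumes each exported row has been reduced to a server name, an Hour value, and a Trans/Hour value, with `None` where the value could not be calculated. The function name `average_by_hour_and_server` and the records are hypothetical.

```python
from collections import defaultdict


def average_by_hour_and_server(records):
    """records: (server, hour, trans_per_hour); None values are skipped."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for server, hour, value in records:
        if value is None:
            continue
        sums[(hour, server)] += value
        counts[(hour, server)] += 1
    return {key: sums[key] / counts[key] for key in sums}


# Invented Trans/Hour values for two servers
records = [
    ("ServerA", 9, 900),
    ("ServerA", 9, 1100),
    ("ServerA", 10, None),
    ("ServerB", 9, 2500),
]

averages = average_by_hour_and_server(records)
for (hour, server), value in sorted(averages.items()):
    print(f"Hour {hour:2d}  {server}: {value:,.0f}")
```

Keying on hour first and server second mirrors the layout with Hour down the rows and Server across the columns.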

Easy to Manipulate

It’s easy to remove servers and add them back again

And it’s easy to pick the hours that you are interested in, too

Graphing Your Results

This is where it really gets cool

Just click on the Chart Wizard

And …

Bingo, You Have an Instant Chart

Stacked bar isn’t what we want, but that was quick!

Line Graph Coming

Use the icon on the right to change graph types

A line graph is quite effective, most of the time

Here’s the Line Graph You Ordered

Simple, fast, and straightforward

This is an average of transactions per hour

Dress It Up for a Presentation

You can fix it up and format it if you need to make a presentation from the data


Five Export Views

There are five different export views on the Admin 2008 Statrep template from Technotics

Messaging Mail Routed

SMTP Mail Routed

Export Transactions/Users

Export CPU Util

Export Agent Stats

Along with the other custom views mentioned earlier

Messaging Mail Routed and SMTP Mail Routed

The Messaging Mail Routed and SMTP Mail Routed export views use a spreadsheet technique similar to the one used for analyzing transactions per hour

They offer opportunities for analyzing:

Average SMTP Messages processed per hour

Average SMTP Message Size processed per hour

Average Message Recipients processed per hour

Average Mail Total Processed per hour

Spreadsheet Concepts Similar

You will need to copy a group of formula cells instead of just one

Insert the copied cells the same way as described earlier in this presentation

Messaging Mail Routed

The Messaging Mail Routed export process will allow you to produce a chart like this:

SMTP Mail Routed

The SMTP Mail Routed export will allow you to easily make a chart that looks like this:

Export CPU Utilization

The Export CPU Utilization view will give you a lot of different charts, like this nice one averaging transactions per minute:


Where to Find More Information

How can the actual disk savings from DAOS be computed?

www-304.ibm.com/support/docview.wss?uid=swg21418283

Description of HTTP statistics for a Lotus Domino server

www-1.ibm.com/support/docview.wss?uid=swg21207314

Download presentations from my blog – Look for the link to Admin2013 resources

www.andypedisich.com

How does the notes.ini file parameter 'server_session_timeout' affect server performance?

www-304.ibm.com/support/docview.wss?uid=swg21293213

7 Key Points to Take Home

Static statistics are valuable because they will let you take a hardware inventory from STATREP.NSF of your entire domain without leaving your desk

The space saved by DAOS should be calculated, charted, and presented to management

If you want to collect statistics at a different interval than your primary statistic collection document you must collect from a different server

7 Key Points to Take Home (cont.)

Average transactions per hour is a valuable statistic, but it is not directly available from Domino and must be calculated on a spreadsheet

You can specify whatever database you want for collecting stats, but it should be designed using the Monitoring Results template

The best way to export Domino statistic data from STATREP.NSF is to use comma separated value files (CSV)

The Statrep database has issues, so don’t be bashful about creating your own views

Your Turn!

How to contact me:

AndyP@Technotics.com

www.andypedisich.com

www.technotics.com
