Download: Glossary

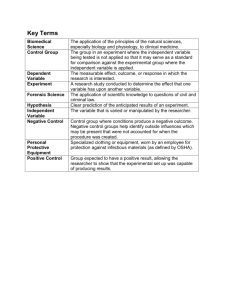

GLOSSARY

A priori assumption

– a statement, written in the form of a rule or guideline, about what the data must reveal for the researcher to confirm his or her hypothesis (p. 37).

A priori codes

– in qualitative analysis, predefined concepts established at the outset of a research project (p. 377).

Abstract

– a commonly used section of a research report, usually about 150 words or less, wherein the researcher summarizes the research question, methods, data and findings (p. 40).

Accretion measure

– a non-reactive measure of behavior based on the evaluation of the physical things people possess and/or discard (p. 271).

Action research

– similar to applied research, a type of research that focuses on problem solving and involves practitioners in the research design, implementation and evaluation stages (p. 10).

Alternative hypothesis

– a hypothesis that alleges a difference or association between two variables. The opposite of a null hypothesis (p. 149).

Analysis of variance (ANOVA)

– an inferential statistical technique used to determine whether or not two or more groups are different with respect to a single variable (p. 364).

Anonymity

– a form of privacy wherein the researcher does not have sufficient knowledge to connect specific research information to a specific research subject (p. 53).

Applied research

– in contrast to pure research, a type of research wherein the researcher’s initial intention is to apply the knowledge gained to a specific problem or issue. Applied research can later be used to expand the body of knowledge (p. 10).

Archival data

– data made available by an individual, group or organization for the purpose of analysis (p. 271).

Attributes

– the variations within a variable. A variable’s attributes are the different characteristics or values within the variable. Male and female are the attributes of the variable gender (p. 117).

Baselines

– measurement standards established over time by other researchers. Also referred to as a benchmark or concurrent reliability (p. 125).

Benchmarks

– measurement standards established over time by other researchers. Also referred to as a baseline or concurrent reliability (p. 125).

Beta coefficient

– one of two statistics used in a multiple regression model to measure the individual effect of an independent variable on a dependent variable. Also called a standardized coefficient (p. 370).

Bias

– a condition that causes a sample to be unrepresentative of the population from which it came (p. 164).

Bibliography

– a commonly used section of a research report that lists, by author’s last name, the sources that were used by a researcher. This section may also be referred to as references (p. 40).

Case(s)

– the individual components of a population or sample. These may also be referred to as members or elements (p. 164).

Case study

– often a qualitative research method involving a detailed analysis of a single event, group or person for the purpose of understanding how a particular context gives rise to this event, group or person. Case studies can focus on either typical or extreme cases (p. 298).

Causality

– also may be referred to as a causal relationship. Used to describe a condition between two or more variables wherein a change in one variable results in a change to another variable (p. 99).

Causal rules – a set of conditions that, when confirmed, establish a causal relationship between two or more variables. There are three causal rules – temporal order, correlation and a lack of plausible alternative explanations (p. 99).

Census

– a study of an entire population (p. 163).

Central limit theorem

– the theoretical basis of the science of sampling. Proposes that repeated samples from the same population are likely to be equivalent (p. 162).
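The idea can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the population (the integers 1 through 100), the sample size of 30 and the 1,000 repetitions are all hypothetical choices, not values from the text.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: the integers 1 through 100.
population = list(range(1, 101))
population_mean = statistics.mean(population)

# Draw many random samples of the same size and record each sample's mean.
sample_means = [
    statistics.mean(random.sample(population, 30))
    for _ in range(1000)
]

# The sample means cluster around the population mean, and they vary
# far less than the individual cases in the population do.
mean_of_sample_means = statistics.mean(sample_means)
spread_of_sample_means = statistics.pstdev(sample_means)

print("population mean:", population_mean)
print("mean of sample means:", round(mean_of_sample_means, 2))
print("spread of sample means:", round(spread_of_sample_means, 2))
```

Repeated samples are "equivalent" here in the sense that their means land close to one another and close to the population mean.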

Chi square

– an inferential statistical technique used to determine whether there is a statistically significant difference between what we expected to happen and what actually happened. The operative statistic is called the chi square statistic. If the statistical significance of the chi square statistic is .05 or less, we can conclude that the difference between what actually happened and what was supposed to happen was not due to chance (p. 365).

Chi square statistic

– the operative indicator in a chi square statistical analysis that is tested for statistical significance to determine if differences exist between what happened and what was supposed to happen (p. 365).
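As a rough sketch of how the chi square statistic is computed, the example below sums the squared differences between observed and expected counts, each divided by the expected count. The counts are hypothetical, and the critical value of 3.841 (one degree of freedom, .05 level) is taken from a standard chi square table rather than from the text.

```python
def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected across all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts: 100 cases expected to split evenly between two categories.
observed = [60, 40]
expected = [50, 50]

chi_square = chi_square_statistic(observed, expected)

# Critical value for 1 degree of freedom at the .05 level (standard table value).
CRITICAL_VALUE_DF1 = 3.841
significant = chi_square > CRITICAL_VALUE_DF1

print(chi_square, significant)  # 4.0 True
```

Because 4.0 exceeds the critical value, the difference between what happened and what was expected would be treated as statistically significant at the .05 level in this hypothetical case.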

Closed choice response set

– a type of response set that requires a respondent to choose from a list of predeveloped responses to a question or statement. For example: Check the box that best describes your age. Sometimes referred to as a forced choice response set (p. 252).

Cluster sampling

– a probability sampling technique wherein the sample is collected randomly in a series of stages or from randomly selected natural clusters wherein the cases are more readily accessible (p. 170).
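A two-stage version of this technique might look like the sketch below. The cluster names, case labels and sample sizes are hypothetical and serve only to show the two random selection steps.

```python
import random

random.seed(7)  # fixed seed so the sketch is repeatable

# Hypothetical population organized into natural clusters (e.g. precincts).
clusters = {
    "precinct_a": ["a1", "a2", "a3", "a4"],
    "precinct_b": ["b1", "b2", "b3", "b4"],
    "precinct_c": ["c1", "c2", "c3", "c4"],
    "precinct_d": ["d1", "d2", "d3", "d4"],
}

# Stage 1: randomly select two clusters.
selected_clusters = random.sample(sorted(clusters), 2)

# Stage 2: randomly select two cases within each selected cluster.
sample = []
for name in selected_clusters:
    sample.extend(random.sample(clusters[name], 2))

print(selected_clusters, sample)
```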

Codes of ethics

– statements of principles that guide professional behavior or practice. Most codes of ethics are created by industry organizations or professional associations (p. 62).

Coding

– a system developed by a researcher that describes the precise process by which information from a survey instrument is captured into a database in preparation for its analysis. In qualitative research, coding refers to a process by which patterns (themes, phrases or ideas) are identified within field notes or written transcripts (pp. 257, 311).

Coding rules – a set of procedures developed by researchers to ensure that the individuals responsible for recording data during a research project are consistent (p. 38).

Coding sheets – pre-formatted tables used to record information during a field observation or other type of non-reactive research method (p. 285).

Cohort study

– a type of longitudinal research wherein the researcher collects data from individuals who share a common characteristic or experience within a defined period of time (p. 86).

Composite index number – similar to an index or scale wherein the numerical values of the responses to several questions or statements on a survey are summed in order to describe a broader concept (p. 252).
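A minimal sketch of the summing step follows. The numeric coding of the five agreement levels and the respondent's answers are assumptions made for illustration; a real instrument would define its own values.

```python
# Assumed numeric coding for a five-level agreement scale (hypothetical values).
LIKERT_VALUES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def composite_index(responses):
    """Sum the numeric values of a respondent's answers to related items."""
    return sum(LIKERT_VALUES[r] for r in responses)


# One respondent's answers to four hypothetical statements on the same concept.
answers = ["agree", "strongly agree", "neutral", "agree"]
score = composite_index(answers)

print(score)  # 16
```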

Conceptualization

– the process by which researchers define the concepts that are used in a research project (p. 32).

Conclusion – a commonly used section of a research report that includes a summary of the research project, an evaluation of the strengths and weaknesses of the research, and/or a set of recommendations for policy changes or questions for future research (p. 40).

Concurrent reliability (method)

– a measure for determining the capacity of a measure to be consistent. This requires a researcher to compare the results from a newly developed measure to benchmarks or baselines, developed over time by other researchers, that measure the same concept or construct. The new measure is considered reliable if the results are similar to the existing benchmarks or baselines (p. 126).

Confidentiality – a form of privacy wherein the researcher has sufficient knowledge to connect specific research information to a specific research subject, but the researcher agrees not to divulge the subject’s identity to outsiders (p. 53).

Construct validity

– the extent to which a measure corresponds to other variables that are related to the underlying variable we are interested in studying (p. 123).

Content analysis – a type of non-reactive research wherein the researcher analyzes existing textual information to study human behavior or conditions (p. 274).

Content validity

– the degree to which a measure includes every dimension of the concept (p. 123).

Contingency plan

– an alternative research strategy developed by a researcher to be used in situations that do not allow for the use of the originally planned methodology (p. 36).

Continuous variables

– similar to interval or ratio variables. These variables have an almost unlimited number of values (p. 121).

Control group – one of the two optional features of an experimental design. A group of research subjects that is not exposed to the independent variable that the researcher believes will cause change to a dependent variable (p. 190).

Convenience sampling

– a non-probability sampling technique wherein the sample consists of individuals that are either convenient or readily available to the researcher. This is also known as availability sampling (p. 174).

Correlation

– one of the three causal rules. A change in the cause (independent variable) must result in a change in the effect (dependent variable). In other words, there must be a relationship between the two variables. May also describe a relationship or association between two variables wherein a change in one variable causes a predictable change to another variable (p. 101).

Cost/benefit analysis

– an evaluation research technique wherein a researcher systematically compares costs and benefits of an existing or future program, process or policy (p. 323).

Criterion validity – the extent to which one measure relates to another, more direct measure of the same concept. Sometimes this is referred to as predictive validity (p. 123).

Critical – a paradigm of research that assumes that social science research is not value free and that every researcher brings his or her own particular biases into the research process. Critical researchers do not attempt to be unbiased and instead believe that they should use their research skills to effect social change. Critical criminologists tend to focus on the structure of race, class and gender inequalities and the relationships between these inequalities and crime and punishment (p. 81).

Cross-sectional – a time dimension of research wherein data are collected at a single point in time (p. 82).

Cross-sectional survey – a survey taken at one point in time (p. 83).

Data mining

– a statistical technique or procedure involving the analysis of large data sets for the purpose of finding patterns within them (p. 379).

Deception

– occurs when a researcher inappropriately lends his or her name and reputation to an unscrupulously produced research project (p. 60).

Deductive reasoning

– also referred to as deduction. A method of reasoning wherein a researcher starts with a theory, develops hypotheses based on that theory and then collects information (or data) that either supports or falsifies the theory (p. 87).

Dependent variables

– effect variables. These are the variables that a researcher alleges will change as a result of a change in an independent variable (pp. 104, 139).

Descriptive research

– in contrast to exploratory and explanatory research, a type of research conducted to document or describe social conditions or trends. The objective of descriptive research is to find out what is happening now or what happened in the past. Descriptive research does not attempt to explain the causes of social phenomena (p. 81).

Descriptive statistics

– one of two general categories of statistics. Descriptive statistics inform researchers of the current state of things and describe what is usual or typical (p. 350).

Diagramming – a qualitative analysis technique wherein a researcher explores relationships between various themes by developing a flow chart or hierarchical diagram (p. 378).

Direct response set – a type of response set that encourages a respondent to enter a specific response to a question or statement. For example: What is your age? (p. 252)

Discrete variables

– similar to nominal or ordinal variables. These variables have a fixed number of attributes (p. 121).

Discussion

– a commonly used section of a research report that includes an interpretation of the research findings and a consideration of alternative explanations (p. 40).

Do no harm

– an ethical principle that merely means that during the research process, the researcher should never do anything that will hurt another person or put anyone in danger, including the researcher. This ethical principle includes physical, psychological and legal harm (p. 49).

Double-barreled question

– in survey research a poorly designed question that asks more than one question simultaneously. For example, “Indicate whether you agree with the following statement: In order to reduce crime, we should spend more money on education and social services.” In this case a respondent, following the directions, would not be able to agree with one crime reduction strategy and disagree with another (p. 250).

Ecological fallacy – a logical thinking error that occurs when a researcher reaches a conclusion or makes a prediction about individual behavior based solely on observations that were gathered at the group level (p. 90).

Element(s)

– the individual components of a population or sample. These may also be referred to as members or cases (p. 164).

Erosion measure

– a non-reactive measure of behavior based on the evaluation of how physical objects or spaces are used by people (p. 271).

Ethics

– a set of principles, code of conduct or values that researchers adhere to in order to ensure their research is not harmful (p. 48).

Ethnocentrism

– the belief in the superiority of one’s own culture. This adversely affects the quality of ethnographic research (p. 309).

Ethnographic research – attempts to understand social phenomena within the context or from the perspective of a particular culture or group. Ethnographic research is very similar to field research, which relies on observations of people and places in their natural setting. But ethnographic researchers are more than merely covert observers (p. 308).

Ethnography

– a qualitative research method involving an in-depth study of a culture for the purpose of understanding that culture and its inner workings (p. 299).

Evaluation research

– a collection of research methods designed to determine whether a program, process or policy is achieving its intended outcome (p. 321).

Exhaustiveness

– the completeness of a list of attributes. All of the possible attributes for each variable must be included (p. 142).

Expedited review

– an abbreviated or cursory review of a research plan by an Institutional Review Board. An expedited review is used when the proposed research will likely have a minimal effect on the research subjects (p. 66).

Experimental design (experiment)

– a research method that allows a researcher to measure how much, if any, an independent variable causes a change to a dependent variable. All experiments have, at a minimum, three features – an experimental group, a treatment and a posttest. More sophisticated experimental design models also include a pretest and a control group (p. 187).

Experimental group

– one of the three essential features of an experimental design. A group of research subjects that is exposed to the independent variable that the researcher believes will cause change to a dependent variable (p. 189).

Explanatory research

– in contrast to exploratory and descriptive research, a type of research conducted to find a cause or explanation for social trends and phenomena. Explanatory research goes beyond merely describing and attempts to establish a cause and effect relationship between social phenomena (p. 81).

Exploratory research

– in contrast to descriptive and explanatory research, a type of research conducted on new or emerging social trends. The objective of exploratory research is to find out what is happening (p. 81).

External validity

– the capacity of a research finding from an experimental design model to be applicable to similar research settings. This is also known as generalizability (p. 202).

Extreme case sampling

– a non-probability sampling technique where individuals or situations are selected based on the researcher’s judgment that they are not representative of the population (p. 178).

Extreme case study

– a type of case study, usually a qualitative method, in which a researcher conducts a detailed analysis of a single event, group, or person that is known to be generally unrepresentative of similar events, groups, or persons (p. 178).

F-ratio – the actual statistic produced by an ANOVA and tested for statistical significance (p. 364).
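For readers who want to see where the F-ratio comes from, the sketch below computes it for a one-way ANOVA as the between-group mean square divided by the within-group mean square. This is the standard textbook formula rather than anything specific to the source, and the three groups of scores are hypothetical.

```python
import statistics


def f_ratio(groups):
    """One-way ANOVA F-ratio: between-group mean square / within-group mean square."""
    all_values = [v for g in groups for v in g]
    grand_mean = statistics.mean(all_values)
    k = len(groups)      # number of groups
    n = len(all_values)  # total number of cases

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the cases around their own group mean.
    ss_within = sum((v - statistics.mean(g)) ** 2 for g in groups for v in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within


# Hypothetical scores for three groups measured on a single variable.
groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
print(f_ratio(groups))  # 3.0
```

The resulting value would then be compared against a table of critical F values, or tested by statistical software, to decide whether the groups differ significantly.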

Face validity

– whether or not a measure appears to be valid, or whether it makes sense (p. 122).

Field research

– a type of non-reactive research wherein the researcher observes behavior in a natural setting (p. 272).

Findings

– a commonly used section of a research report that includes a summary of what the analysis revealed, including the descriptive statistics (p. 40).

Forced choice response set

– a type of response set that requires a respondent to choose from a list of predeveloped responses to a question or statement. For example: Check the box that best describes your age. Sometimes referred to as a closed choice response set (p. 252).

Full review

– a detailed or in-depth review of a research plan by an Institutional Review Board. A full review is used when the proposed research will likely have a substantial effect on the research subjects (p. 66).

Gatekeepers

– influential people within a group, culture, or organization who facilitate a researcher’s access to research subjects or information within groups, cultures, or organizations (p. 310).

Generalizability

– in broad terms the capacity for a research finding to be applicable to other research settings or contexts (p. 202).

Grounded codes

– concepts discovered during qualitative research, primarily during grounded theory research (p. 377).

Grounded theory research

– a qualitative research method wherein a researcher uses the inductive reasoning process to develop a theory that explains observed behaviors or social processes. Grounded theory is more of an approach to qualitative research than a specific method (p. 309).

History

– one of several potential threats to the internal validity of an experimental design model. Potentially occurs when major events happen during an experiment that affect the research subjects and thus the dependent variable (p. 199).

Hypothesis

– a predictive statement that alleges a specific causal relationship between two or more variables (p. 138).

Hypothesis of association

– a statement that alleges a constant and predictable correlation, or relationship, between independent and dependent variables (p. 152).

Hypothesis of difference

– a statement alleging that the independent variable(s) make(s) two or more groups different in some respect (p. 152).

Independent variables

– causal variables. These are the variables that a researcher hypothesizes will cause a change in another variable (p. 139).

Index – a single number that represents a compilation of related measures designed to provide a comprehensive indication of a status or change (p. 127).

Inductive reasoning – also referred to as induction. A method of reasoning wherein a researcher makes a single or series of observation(s) and then develops an explanation (theory) for the behavior (p. 88).

Inferential statistics

– one of two general categories of statistics. Inferential statistics enable researchers to pinpoint the causes of social phenomena or to predict such phenomena (p. 362).

Institutional Review Board (IRB) – a group of experienced researchers who are tasked with the responsibility to review research proposals to ensure they comply with ethical principles, good practice and applicable law (p. 66).

Instrument

– the actual questionnaire or document containing the pre-established questions or statements on a survey or interview (p. 235).

Instrumentation

– one of several potential threats to the internal validity of an experimental design model. Potentially occurs when differences between the pretest and posttest instruments cause a change in the dependent variable (p. 201).

Internal validity

– the ability of an experimental design model to document the causal relationship between an independent and dependent variable (p. 199).

Internet survey

– a written and self-administered survey instrument that is delivered to a respondent via an electronic medium (such as the internet) and returned electronically upon completion to the researcher by the respondent (p. 236).

Interpretive

– a paradigm of research that assumes social science inquiry is based on the notion that social science research is fundamentally different than research in the natural sciences. Rather than simply measure human behavior from the ‘outside,’ interpretive social scientists attempt to get ‘inside’ to understand the meaning behind human behavior. This involves interpretation rather than simple observation (p. 81).

Inter-rater reliability (method)

– a method for determining the capacity of a measure to be consistent. Using this method a researcher would ask two or more other researchers to observe and measure the same thing. Then the researcher would compare the results to see if they generally have the same impression of what they observed (p. 125).

Interval – the second highest level of measurement. Interval variables are also categories like nominal level variables and can also be arranged in a logical order like ordinal level variables. But interval variables have one additional feature that makes them more precise: they have equal differences between their attributes (p. 118).

Intervening variables

– may occur between independent and dependent variables and change the nature of the causal relationship. In other words, they may intervene in the relationship between an independent and dependent variable (p. 139).

Interview

– a survey instrument wherein the respondents are contacted face-to-face and asked to respond to questions and/or statements by a researcher (i.e. interviewer) (p. 237).

Interviewer bias

– potentially caused when an interviewer’s presence or reaction affects the respondent’s responses to the questions and/or statements (p. 235).

Introduction

– a commonly used section of a research report that includes an overview of the research, describes the purpose of the research, the research question and the hypothesis, and includes a statement on how the article is organized (p. 40).

Lack of plausible alternative explanations

– one of the three causal rules. All other reasonable explanations (other independent variables) must be considered and eliminated. In other words, we have to rule out other potential causes (p. 104).

Leading question

– in survey research a poorly designed question that influences a particular response. For example: “Indicate whether you agree with the following statement: Police officers generally make you feel comfortable when you ask them for help, don’t they?” The phrasing of this question might lead the respondent to answer “yes.” A better way to ask this question would be, “Do police officers make you feel comfortable when you ask them for help?” (p. 250)

Level of measurement

– how variables are measured. Variables that are measured at higher levels of measurement are more precise and analytically flexible (p. 117).

Level of precision

– a measure of a sample’s representativeness of the population from which it came (p. 166).

Likert scale

– a rating scale that allows a respondent to more precisely indicate the intensity of agreement to a question or statement. Likert scales often contain five levels – strongly agree, agree, neutral, disagree or strongly disagree (p. 128).

Literature review – an important part of the research process wherein researchers (1) learn what is known and/or not known about a research subject, (2) identify gaps in the body of knowledge, and (3) develop understandings of how other researchers approached similar research challenges.

Also, a commonly used section of a research report that includes a summary of what is known about a particular topic and a description of areas of agreement and disagreement among researchers on the topic (p. 40).

Longitudinal – a time dimension of research wherein data are collected at two or more points in time. There are three types of longitudinal studies – trend studies, panel studies and cohort studies (p. 84).

Mail survey

– a written and self-administered survey instrument that is either mailed or delivered to a respondent and returned via the mail upon completion to the researcher by the respondent (p. 235).

Matching

– a process by which research subjects are assigned to either an experimental or control group by a researcher during an experiment. Typically, researchers identify matching pairs of identical (based on their relevant similarities) research subjects and assign one of the pair to the experimental group and the other to the control group so as to ensure that these groups are equivalent with respect to other variables that could affect the outcome of the experiment (p. 215).

Matrices

– tables used during qualitative analysis to illustrate relationships between variables (p. 378).

Maturation

– one of several potential threats to the internal validity of an experimental design model. Potentially caused by natural developmental changes in the research subjects that affect the outcome of the experiment (p. 199).

Mean

– a measure of central tendency. The arithmetic average. Calculated by summing the value of all cases and dividing this sum by the total number of cases in a sample or population. Requires data measured at the interval or ratio levels (p. 351).

Measurement

– the process by which social science researchers determine how to assess the social phenomena they study (p. 114).

Measures of central tendency

– descriptive statistics that describe what is normal or usual about a sample or population. The most commonly used measures of central tendency are the mean, median and mode (p. 350).
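The three measures can be compared on one small data set. The values below are hypothetical and include one extreme value to show how an outlier pulls the mean away from the median and mode; the functions come from Python's standard `statistics` module.

```python
import statistics

# Hypothetical values for six cases; 40 is an outlier.
values = [2, 3, 3, 5, 7, 40]

mean_value = statistics.mean(values)      # sum of values / number of cases
median_value = statistics.median(values)  # middlemost value of the ordered cases
mode_value = statistics.mode(values)      # most frequently occurring value

print(mean_value, median_value, mode_value)  # 10 4.0 3
```

With an even number of cases, `statistics.median` averages the two middle values, which is why the median here falls between 3 and 5.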

Measures of variability

– descriptive statistics that describe how much variation or change exists within a population or sample. The most commonly used measures of variability include the range, standard deviation, percentage, percentile, percentile rank and percent change (p. 356).
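A few of these measures can be sketched as follows. The data are hypothetical, the standard deviation shown is the population form (`statistics.pstdev`), and `percent_change` is an illustrative helper name rather than a library function.

```python
import statistics

# Hypothetical values for eight cases.
values = [2, 4, 4, 4, 5, 5, 7, 9]

# Range: the distance between the highest and lowest values.
value_range = max(values) - min(values)

# Standard deviation (population form): typical distance of cases from the mean.
std_dev = statistics.pstdev(values)


def percent_change(old, new):
    """Change from old to new, expressed as a percentage of old."""
    return (new - old) / old * 100


# Hypothetical counts at two points in time.
change = percent_change(50, 60)

print(value_range, std_dev, change)  # 7 2.0 20.0
```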

Median

– a measure of central tendency. The middlemost value. Derived by arranging the cases in descending or ascending order and identifying the value that is greater than or equal to half of the cases and less than or equal to half of the cases in the sample or population. Requires data measured at the ordinal, interval or ratio levels (p. 352).

Member(s)

– the individual components of a population or sample. These may also be referred to as cases or elements (p. 164).

Memoing – often used in non-reactive research methods, particularly grounded theory. This research procedure involves writing memos or notes during the data collection process. These notes may be made in the margins of an open ended interview form but they are not necessarily part of the respondent’s answer to the questions. They are intended to remind the researcher of his or her thoughts at the time of the interview. These thoughts are often important to the researcher’s understanding of the social phenomenon under investigation. They are used during the analysis phase to remind the researcher what he or she was thinking at the time (p. 311).

Method of reasoning

– describes the method or direction of reasoning used by a researcher. There are two methods – deductive and inductive (p. 87).

Methodology

– a commonly used section of a research report that includes a description of the methods used by the researcher, including the sampling procedure, the measurement of variables, the data collection method and the analytic techniques (p. 40).

Misrepresentation

– a form of lying. It can involve lying about the data, the results and even authorship (p. 58).

Mode

– a measure of central tendency. The most frequently occurring value. Determined by the frequency of a value within a sample or population. Requires data measured at the nominal, ordinal, interval or ratio levels of measurement (p. 354).

Monograph

– a research report that is too long to appear in a scholarly journal but too short or narrowly focused to justify its publication as a book (p. 13).

Mortality

– one of several potential threats to the internal validity of an experimental design model. Potentially occurs when the loss (for any reason) of a research subject affects the outcome of the experiment (p. 200).

Multiple methods approach

– an evaluation technique that involves the use of multiple data gathering techniques in order to evaluate a program, process or policy (p. 323).

Multiple regression

– an inferential statistical technique used to measure the individual and combined effects of various independent variables on a dependent variable. The multiple regression model requires data collected at the interval or ratio levels and a hypothesis alleging an association between the variables. In addition, this model enables the researcher to predict the outcome of the dependent variable when given the values for the independent variables (p. 370).

Multi-stage sampling

– a broad category of sampling technique wherein the sample of research subjects ultimately used by the researcher is the result of two or more sampling steps (p. 170).

Mutually exclusive

– the capacity for a list of a variable’s attributes to provide a respondent with one and only one response (p. 143).
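For a closed choice response set built from numeric brackets, this property can be checked mechanically. The sketch below assumes each attribute is an inclusive (low, high) age bracket; the function name and the brackets themselves are hypothetical.

```python
def is_mutually_exclusive(brackets):
    """Return True when no two inclusive (low, high) brackets overlap."""
    ordered = sorted(brackets)
    return all(
        prev_high < next_low
        for (_, prev_high), (next_low, _) in zip(ordered, ordered[1:])
    )


# A 25-year-old could check two boxes here, so the list is not mutually exclusive.
overlapping = [(18, 25), (25, 35), (35, 50)]

# Each age fits one and only one bracket.
clean = [(18, 24), (25, 34), (35, 50)]

print(is_mutually_exclusive(overlapping))  # False
print(is_mutually_exclusive(clean))        # True
```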

National Crime Victimization Survey

– a survey of households in the United States conducted periodically by the Department of Justice to determine, among other things, the amount of criminal victimization (p. 243).

Nature of data

– the type of data collected, and in some cases the type of research method used. There are two types of data – quantitative and qualitative (p. 86).

Negative correlation

– a type of association between two variables wherein an increase in one variable results in a decrease in another variable, or a decrease in one variable results in an increase in another variable (p. 101).

Nominal

– the lowest level of measurement. Nominal variables are merely names or labels. They are simply things we can categorize, such as ethnicity, religion and gender. The attributes (i.e. the categories) of nominally measured variables cannot be arranged in any logical order or sequence (p. 118).

Non-probability sampling

– one of the two major categories of sampling techniques. These techniques do not rely on the random selection of research subjects or cases. As a result, researchers cannot infer that what they learn from a non-probability sample is true of the larger population from which the sample came (p. 173).

Non-reactive research methods – any research method that allows a researcher to gather information from or about research subjects unobtrusively, or without their knowledge that they are being measured or observed (p. 269).

Normal distribution

– a description of a data set wherein about 68.3 percent of all cases fall within one standard deviation of the mean, about 95.4 percent of all cases fall within two standard deviations of the mean, and about 99.7 percent of all cases fall within three standard deviations of the mean. Also, in a normal distribution the values for mean, median, and mode are equal (p. 162).
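The share of cases within a given number of standard deviations can be computed directly from the error function in Python's standard `math` module. This is a sketch of the standard relationship for a normal distribution, not a procedure from the text, and `share_within` is an illustrative name.

```python
import math


def share_within(k):
    """Proportion of a normal distribution within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


# Print the percentage of cases within one, two and three standard deviations.
for k in (1, 2, 3):
    print(k, round(100 * share_within(k), 1))
```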

Normally distributed

– a condition describing data that exist in a normal distribution (p. 162).

Null hypothesis – the hypothesis that alleges no difference or association between two or more variables. The opposite of an alternative hypothesis (p. 150).

One group no pretest experimental design model

– the most basic experimental design model. This type of design only has the basic elements of the experimental design model – an experimental group, a treatment and a posttest (p. 191).

One group pretest/posttest experimental design model

– an experimental design model that includes an experimental group, a treatment, a posttest and a pretest. This model does not include a control group (p. 193).

Open response set

– a type of response set that allows respondents to write their own responses to questions or statements in a less structured way than a direct response set. For example: In your opinion what is the most pressing problem facing our country today? (p. 252)

Operationalization – the process by which researchers develop procedures for measuring the concepts that are used in a research project (p. 33).

Ordinal – the second lowest level of measurement. Ordinal variables, like nominal variables, are categories, but their attributes can be rank ordered (p. 118).

Outliers

– extreme values within a sample or population (p. 352).

Panel study

– a type of longitudinal research wherein the researcher collects data from the same individuals – the panel – over multiple points in time. The difference between a panel study and a trend study, then, is that a panel study follows the same sample of individuals (p. 86).

Paradigm

– a general organizing framework for social theory and empirical research. More succinctly, a paradigm is a lens through which a person views social phenomena. It reflects a researcher’s assumptions about reality (p. 80).

Paradigm(s) of research

– a general organizing framework for social theory and empirical research. There are three common paradigms of research – positivist, interpretive and critical (p. 80).

Pearson r – an inferential statistical technique used to determine whether two variables are associated or correlated. It measures both the degree and nature of the correlation. The Pearson r coefficient ranges from -1 to +1. The closer it is to -1 or +1, the higher the level of correlation between the two variables. The closer it is to 0, the lower the level of correlation between the two variables. Positive Pearson r coefficients indicate a positive correlation between the two variables. Negative Pearson r coefficients indicate a negative correlation between the two variables (p. 367).
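
As an illustration of how the coefficient behaves, here is a minimal sketch of the usual product-moment calculation using only Python's standard library. The function name `pearson_r` and the sample values are hypothetical. It returns the coefficient only and does not test it for statistical significance.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences of numbers."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of the products of paired deviations from each mean.
    co_deviation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the summed squared deviations for each variable.
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("r is undefined when a variable does not vary")
    return co_deviation / (spread_x * spread_y)


hours_studied = [1, 2, 3, 4, 5]       # hypothetical data
exam_scores = [52, 58, 65, 70, 79]    # hypothetical data
print(round(pearson_r(hours_studied, exam_scores), 3))
```

A result near +1 or -1 indicates a strong positive or negative correlation; a result near 0 indicates a weak one.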

Peer review

– a process wherein well informed researchers rigorously review the research of another scholar to determine the quality of the research prior to its publication in a scholarly journal (p. 12).

Percentage

– a measure of variability. A descriptive statistic representing a portion of a sample or population based on a denominator of one hundred percent (p. 359).

Percent change

– a measure of variability. A descriptive statistic that indicates how much something changed from one time to the next (p. 360).
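
A short sketch of the common percent change calculation follows, assuming the usual formula (new value minus old value, divided by old value, times 100). The function name `percent_change` and the figures are hypothetical.

```python
def percent_change(old, new):
    """Percent change from an earlier value (old) to a later value (new)."""
    if old == 0:
        raise ValueError("percent change is undefined when the earlier value is 0")
    return 100 * (new - old) / old


# Hypothetical example: reported burglaries rise from 200 to 250.
print(percent_change(200, 250))  # a 25 percent increase
```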

Percentile

– a measure of variability. A descriptive statistic representing where a particular value ranks within a distribution. By convention a percentile rank represents the percent of cases that equal or are less than that value (p. 359).

Percentile rank

– represents the percent of cases that are equal to or less than a particular value within a distribution (p. 359).
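
The convention described here (the percent of cases equal to or less than a value) can be sketched as follows. The function name `percentile_rank` and the scores are hypothetical.

```python
def percentile_rank(values, score):
    """Percent of cases in values that are equal to or less than score."""
    if not values:
        raise ValueError("values must not be empty")
    at_or_below = sum(1 for v in values if v <= score)
    return 100 * at_or_below / len(values)


exam_scores = [50, 60, 70, 80, 90]  # hypothetical data
# Three of the five scores are 70 or lower, so 70 has a percentile rank of 60.
print(percentile_rank(exam_scores, 70))
```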

Physical traces

– a non-reactive research methodology that observes human behavior indirectly by studying physical objects or residue that is left behind (p. 271).

Placebo

– an inert or ineffectual version of a treatment designed to ensure control group members are unaware that they are not receiving an actual treatment (p. 191).

Plagiarism

– a form of research misrepresentation wherein an author presents the work of another as his or her own intellectual property or creative work (p. 59).

Population

– the entire set of individuals or groups that is relevant to a research project (p. 163).

Positive correlation – a type of association between two variables wherein an increase in one variable results in an increase in another variable or a decrease in one variable results in a decrease in another variable (p. 101).

Positivist

– a paradigm of research that assumes social science inquiry is most like research in the natural sciences. A positivist relies on empirical observations and may attempt to establish a causal relationship between variables (p. 80).

Post hoc tests

– statistical tests that provide additional or detailed insight into a statistical finding (p. 364).

Posttest

– one of the three essential features of an experimental design. An observation or measurement conducted by the researcher of the dependent variable after the treatment is administered (p. 189).

Predictive validity

– refers to the extent to which one measure relates to another, more direct measure of the same concept. Sometimes this is referred to as criterion validity (p. 123).

Prestige bias

– in survey research a poorly designed question that includes an authoritative reference that may influence a respondent’s answer. For example, “Indicate whether you agree with the following statement: Experts are right that routine police patrol is not an effective crime control strategy.” A respondent is more likely to agree with this statement because it includes the phrase “experts are right.” (p. 250)

Pretest

– one of the two optional features of an experimental design. An observation or measurement conducted by the researcher of the dependent variable before the treatment is administered (p. 190).

Pretesting

– a process by which a survey researcher or interviewer evaluates the ability of an instrument to produce the data or information it was intended to produce using a small subset of typical respondents (p. 254).

Privacy

– the right to be left alone. Closely associated with this right is the right to prohibit others from knowing things about you that you do not want them to know. Researchers have an obligation to protect the privacy of their research subjects. This means that the researcher must not disclose private information about a research subject without that person’s permission. Disclosure of information by a researcher that causes a research subject to be unduly harmed, or even inconvenienced, is a serious breach of ethical standards (p. 53).

Probability sampling – one of the two major categories of sampling techniques. These techniques rely on the random selection of research subjects or cases. As a result, researchers can infer that what they learn from a probability sample is true of the larger population from which the sample came (p. 168).

Pure research

– in contrast to applied research, a type of research wherein the researcher’s initial intention is to expand the body of knowledge. Pure research can later be applied to address a specific problem or issue (p. 10).

Purpose of research

– describes what the research hopes to achieve. There are three common purposes of research – exploratory, descriptive and explanatory (p. 81).

Pyramiding

– a technique often used during the literature review process whereby a researcher accesses the sources used by previous researchers to (1) gain more insight into the research subject, and (2) identify the most authoritative research on a particular topic (p. 29).

Qualitative data analysis

– a type of analysis wherein the subjective and interpretive data produced by qualitative research methods is evaluated within the context of a research question (p. 373).

Qualitative research

– a broad category of research methods that attempt to produce a more detailed understanding of human behavior and its motivations without relying exclusively on numerical measures. Qualitative research is a tradition in scientific inquiry that does not rely principally on numerical data and quantitative measures. Instead, it attempts to develop a deeper understanding of human behavior. It is more concerned about how and why humans behave as they do (p. 298).

Quantitative data analysis – a type of analysis wherein the objective values assigned to variables are evaluated (p. 349).

Quantitative research

– a broad category of research methods that attempt to produce a more detailed understanding of human behavior and its motivations relying on numerical measures. Quantitative research is a tradition in scientific inquiry that relies principally on numerical data and quantitative measures (p. 86).

Random assignment – a process by which research subjects are assigned to either an experimental or control group by a researcher during an experiment. Typically, researchers use random assignment to place research subjects into the experimental and control groups to ensure these groups are equivalent with respect to other variables that could affect the outcome of the experiment (p. 215).

Random sampling error

– the difference between the results the researcher gets from the sample and the results the researcher might have gotten had he or she actually polled the entire population (p. 164).

Random selection – distinguishes probability sampling from non-probability sampling. When we say that the sample has been randomly selected, we mean that all the members or elements of the population have an equal and non-zero chance of being selected into the sample (p. 168).

Range – a measure of variability. Represented by the difference between the highest and lowest value in a sample or population. The range is computed by subtracting the smallest value from the largest value in a sample or population (p. 356).

Rate

– a measure of variability. A descriptive statistic used to compare the frequency of events between samples of different sizes (p. 360).
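
To show why a rate allows comparison between groups of different sizes, here is a minimal sketch. The function name `rate`, the per-100,000 base and the town figures are assumptions made for illustration.

```python
def rate(events, population, per=100_000):
    """Number of events per `per` members of a population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return events * per / population


# Hypothetical towns: B reports more crimes, but A has the higher crime rate.
town_a = rate(events=450, population=90_000)     # 500 per 100,000
town_b = rate(events=1_200, population=300_000)  # 400 per 100,000
print(town_a, town_b)
```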

Ratio

– the highest and most precise level of measurement. Ratio level variables have all of the features of nominal, ordinal and interval variables plus the added benefit of an absolute zero (p. 118).

Reactivity

– one of several potential threats to the external validity of an experimental design model. Potentially occurs when the research subjects change their behavior because they become aware that they are being observed or measured by a researcher (p. 202).

Reductionism

– a logical thinking error that occurs when a researcher reaches a conclusion or makes a prediction about group behavior based solely on observations gathered at the individual level (p. 90).

References

– a commonly used section of a research report that lists, by author’s last name, the sources that were used by a researcher. This section may also be referred to as a bibliography (p. 40).

Regression – one of several potential threats to the internal validity of an experimental design model. Occurs when an initial treatment effect diminishes over time, indicating that the independent variable has no long-term effect (p. 201).

Reliability

– refers to the ability of a measure to consistently measure the concept it claims to measure. A measure is reliable if it produces relatively similar results every time we measure our concept (p. 124).

Research

– as a verb, to research means to follow a logical process that uses specific concepts, principles, tools and techniques to produce knowledge. As a noun, research refers to a collection of information that represents what we know about a particular topic (p. 3).

Research fraud

– an extreme form of misrepresentation. It involves lying about or fabricating research findings (p. 58).

Research methods

– the tools, techniques and procedures that researchers use to ask and answer research questions, and in turn, expand the body of knowledge (p. 3).

Research process

– a set of specific steps that when done correctly produce data upon which researchers produce findings and draw conclusions. The research process is linear in that the steps should be conducted in a certain order. The research process is also cyclical in that researchers often find it necessary to revisit previous steps and make revisions (p. 25).

Research question – an interrogative statement that describes what a researcher wants the research to reveal. Research questions should be measurable, unanswered, doable and disinteresting (p. 145).

Respondent

– the person, group or entity that responds to a survey (p. 235).

Response rate

– the percentage of survey instruments that are completed and returned to the researcher by the respondents (p. 256).

Response sets

– in survey research, the options provided to the respondent by the researcher which are the ways in which the respondent is allowed to answer a question or respond to a statement (p. 251).

Reversals – repeated and alternatively formatted questions or statements on surveys and interviews designed to evaluate respondent attentiveness or truthfulness (p. 253).

Sample

– a subset of a population that is measured or studied to provide insight into the overall population (p. 163).

Sampling

– a scientific technique that allows a researcher to learn something about a population by studying a few members, or a sample of that population (p. 160).

Sampling frame

– a list of all the members or elements of a population from which a sample is directly selected (p. 164).

Sampling plan

– the specific process or procedure a researcher uses to select a sample from a population (p. 164).

Scale

– a method for quantitatively measuring a single phenomenon or variable using some type of rating system. Scales are typically found on surveys (p. 128).

Scholarly journal

– sometimes referred to as an academic journal, some researchers publish their research in these printed and electronic periodical publications. Most journals are published from two to four times per year. The research appearing in a journal is normally submitted to a peer review process prior to its publication (p. 12).

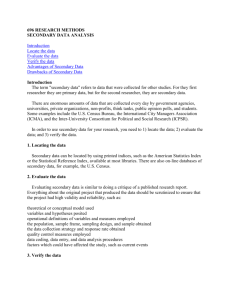

Secondary analysis

– a type of non-reactive research wherein the researcher analyzes previously collected or archival data (p. 273).

Segmenting – a process by which qualitative researchers organize or categorize written data with respect to the concepts their research intends to measure (p. 376).

Selection bias – in sampling any process that systematically increases or decreases the chances that certain members of a population will be selected into the sample. Ideally, all members or elements of a population should have an equal chance of being selected into the sample. If not, there will be selection bias and the sample will not be representative of the population. In experimental design research this is one of several potential threats to the external validity of an experimental design model. Potentially occurs when the researcher fails to establish equivalency between the research subjects in the experimental and control groups (pp. 165, 203).

Simple random sampling

– a probability sampling technique wherein cases are randomly selected from a complete list of the entire population. Also referred to as systematic random sampling (p. 169).

Snowball sampling

– a non-probability sampling technique wherein the sample consists of individuals who are referred to the researcher individually by previous research subjects (p. 176).

Solomon Four Group experimental design model

– an experimental design model that includes: an experimental group that is pretested, treated and posttested; a control group that is pretested and posttested, but not treated; an experimental group that is treated and posttested, but not pretested; and a control group that is posttested but neither pretested nor treated (p. 196).

Spearman rho

– a statistical technique designed to evaluate the level and nature of a correlation between two variables measured at the ordinal level (p. 370).

Split half reliability (method)

– a method for determining the capacity of a measure to be consistent. This method requires a researcher to split a measure in half and administer each half to two similar groups. The measure is considered reliable if the results from each half are similar (p. 125).

Sponsorship bias

– occurs when the sponsor of a research project attempts to influence the study design and/or interpretation of the data (p. 56).

Spurious

– a logical thinking error that occurs when a researcher falsely proposes a causal relationship between two or more variables (p. 107).

Standard deviation

– a measure of variability. This descriptive statistic represents how much each value varies from the mean. Higher standard deviations represent higher levels of variability (p. 356).
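
A minimal sketch of the standard calculation follows, assuming the common formula (the square root of the average squared deviation from the mean). The function name `standard_deviation` and the data are hypothetical. The `sample` flag switches between dividing by n (population) and n - 1 (sample).

```python
import math


def standard_deviation(values, sample=False):
    """Standard deviation of values; set sample=True to divide by n - 1."""
    n = len(values)
    if n < (2 if sample else 1):
        raise ValueError("not enough values")
    mean = sum(values) / n
    # Sum of squared deviations of each value from the mean.
    squared_deviations = sum((v - mean) ** 2 for v in values)
    return math.sqrt(squared_deviations / (n - 1 if sample else n))


ages = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data with a mean of 5
print(standard_deviation(ages))               # population standard deviation
print(standard_deviation(ages, sample=True))  # sample standard deviation
```

Python's built-in `statistics.pstdev` and `statistics.stdev` perform the same two calculations.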

Standardized coefficient

– one of two statistics used in a multiple regression model to measure the individual effect of an independent variable on a dependent variable. Also called a beta coefficient (p. 370).

Statistical significance

– a measure of the probability that the statistic is due to chance. As a general rule, if the statistical significance of a statistic is .05 or less, we conclude that the results are not due to chance. The .05 level of statistical significance means that there is a 5 in 100 chance that the results are due to pure chance (p. 363).

Stratified random sampling

– a probability sampling technique wherein cases are selected from well defined strata within the overall population to further enhance the representativeness of the overall sample (p. 172).

Suppression

– occurs when a research sponsor fails to disclose findings that do not benefit the organization (p. 56).

Survey

– a type of data collection method or research methodology wherein individuals or groups are asked to respond to questions and/or statements (p. 234).

Survey research method

– a data collection procedure or process that involves the use of a survey or interview instrument wherein individuals or groups are asked to respond to questions and/or statements (p. 234).

Systematic random sampling

– a probability sampling technique wherein cases are randomly selected from a complete list of the entire population. Also referred to as simple random sampling (p. 170).

Telephone survey

– a written survey instrument wherein the respondents are contacted by telephone and asked to respond to questions and/or statements by a researcher (p. 237).

Temporal order

– one of the three causal rules. The cause (independent variable) must happen prior to the effect (dependent variable). In other words, the cause must happen first, then the effect (p. 99).

Testing

– one of several potential threats to the internal validity of an experimental design model. Exposing research subjects to a pretest prior to the treatment can change the outcome of the posttest (p. 200).

Test–retest reliability (method)

– a method for determining the capacity of a measure to be consistent. This method requires the researcher to administer a measure twice to the same group to see if the results are similar. The measure is considered reliable if the results from each test administration are similar (p. 124).

Theory – a statement or set of statements that attempts to explain the social world and/or social behavior (p. 31).

Thick description

– a detailed account of a field site or an in-depth description of a field experience. Often used by field researchers (p. 313).

Time dimension of research

– describes the time frame in which the data collection takes place. There are two broad categories of the time dimension of research – cross-sectional and longitudinal (p. 82).

Transcription – the process of producing a written transcript of video and audio recordings collected during research, normally qualitative research (p. 374).

Treatment

– one of the three essential features of an experimental design. The independent variable that the researcher alleges will be the cause of a change in the dependent variable (p. 189).

Trend studies – a type of longitudinal research wherein the researcher collects the same data at different times from different samples of the same population. Trend studies are also known as repeated cross-sectional studies, because researchers are basically conducting the same cross-sectional study at different points in time (p. 86).

t-score

– the actual statistic produced by a t-test and tested for statistical significance (p. 363).

t-test – an inferential statistical technique used to determine whether or not two groups are different with respect to a single variable (p. 363).
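
To make the relationship between a t-test and its t-score concrete, here is a minimal sketch of one common variant, the independent two-sample t-test with pooled variance. It is an assumption that this variant matches the one the text has in mind. The function name `t_score` and the data are hypothetical, and the sketch stops at the t-score and degrees of freedom; judging statistical significance would still require a t table or a statistics library such as SciPy's `scipy.stats.ttest_ind`.

```python
import math
from statistics import mean, variance


def t_score(group_a, group_b):
    """Pooled-variance t-score and degrees of freedom for two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two cases")
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("t is undefined when neither group varies")
    return (mean(group_a) - mean(group_b)) / standard_error, df


treated = [1, 2, 3, 4, 5]    # hypothetical posttest scores
untreated = [2, 3, 4, 5, 6]  # hypothetical posttest scores
t, df = t_score(treated, untreated)
print(t, df)
```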

Two group no pretest experimental design model – an experimental design model that includes an experimental group, a treatment, a posttest and a control group. This model does not include a pretest (p. 194).

Two group pretest/posttest experimental design model

– an experimental design model that includes an experimental group, a treatment, a posttest, a pretest and a control group. This experimental design model is sometimes referred to as the classic experimental design model (p. 195).

Typical case sampling

– a non-probability sampling technique where individuals or situations are selected based on the researcher’s judgment that they are representative of the population (p. 178).

Typical case study

– a type of case study, usually a qualitative method, in which a researcher conducts detailed analysis of a single event, group, or person that is known to be generally representative of similar events, groups, or persons (p. 308).

Typology

– a method for classifying observations, people or situations into nominal categories. Typologies are often used in qualitative research (p. 128).

Uniform Crime Reports

– a survey of law enforcement agencies from throughout the United States conducted annually by the Federal Bureau of Investigation to determine, among other things, the amount of reported crime (p. 242).

Unit of analysis

– the ‘whom’ or ‘what’ about which the researcher collects information. There are numerous units of analysis, including individuals, groups, communities, nations and so on (p. 89).

Unobtrusive observation – an observation of individual or group behavior that is made without being noticed (p. 271).

Unstandardized coefficient

– one of two statistics used in a multiple regression model to measure the individual effect of an independent variable on a dependent variable (p. 370).

Validity

– refers to the ability of a measure to accurately measure the concept it claims to measure (p. 122).

Variability

– a descriptive statistic that indicates how much change exists within a distribution (p. 356).

Variable

– any characteristic of an individual, group, organization or social phenomenon that changes. Age, gender, income and education are all variables (p. 117).

Voluntary and informed consent

– a research subject’s agreement to participate in a research project. To be voluntary the consent must be given without influence, pressure or force. This means that the research subject is free to decide not to participate without fear of reprisal. The research subject must be given the option of revoking his or her consent at any time during the research process. To be informed the research subject must be fully aware of what he or she is expected to do and must know of any potential dangers that are associated with his or her participation in the research (p. 54).

Vulnerable population

– a group of research subjects who, by virtue of their age, physical or mental condition, legal status or life situation, have a diminished capacity to negotiate their affairs or protect themselves from harm. Vulnerable populations include children, the mentally ill, the infirm, the elderly and even prisoners (p. 55).