AD - RWTH Aachen University

advertisement

Automatische Differentiation zur

Empfindlichkeitsanalyse und

Gradientenberechnung

Christian Bischof

Institute for Scientific Computing

RWTH Aachen University

bischof@sc.rwth-aachen.de

www.sc.rwth-aachen.de

AD Sommerschule

Bommerholz, 13. – 18. August 2006

Motivation

Science needs Derivatives

Numerical Methods: e.g. solutions of ODE, PDE

Sensitivity Analysis: assess parameter impact,

model validity, uncertainty, and robustness.

Design Optimization: improve design parameter.

Inverse Problems: adjust model parameters to fit

observed data.

Parameter Identification: e.g., reaction coeff’s

Data Assimilation: e.g., initial states of PDE

Local Model

based on

Taylor Series

f ( x h) f ( x )

f

2

*

h

O

(

)

h

x

Uncertainty Due to Experimental Variables

Multi-Element High-Lift Airfoil: Y30P30N

CL

CL =

M

M

(

M = M 0 M

)

Uncertainty in Lift Due to

Uncertainty in Mach Number

= 0

CL =

(

CL

)

Uncertainty in Lift Due to

Uncertainty in Angle of Attack

Courtesy of Larry Green

NASA Langley, MDOB

Planform and Thickness Optimization

M = 0.84, = 3.06 degrees

129x65x33 grid (32 zones, 21 wing sections), 9 optimization cycles

coloring proportional to the

surface pressure coefficient

for 33x9x9 grid analysis of

129x65x33 grid design

Baseline wing

Courtesy of Larry Green

Multidisciplinary Optimization Branch

NASA Langley Research Center

“Optimized” wing

10% - 1% move limits

xle, crd, and thk active

What is f ?

A formula: e.g. y(1) = x(2); y(2) = -c/m*x(1) (right-hand side

of the differential equation for a horizontal oscillator)

A “higher-level” mathematical object:

e.g. y = xT A x + b, y = g(u,t) dt

A computer program: e.g. SEPRAN, a 3-D CFD solver,

400,000 lines of Fortran, or FCAP3, a chip simulator of

Hewlett-Packard, 15,000 lines of ANSI-C, or a module of

the TURBOMOLE system for molecular simulation.

The problem: Fast and accurate computation of derivatives of

computer programs with little effort

Computing Derivatives of

Computer Codes

Work

Oldfashioned

Way:

Accuracy and

Speed required

human effort

Handcoding

Divided

Differences

SymbolicAssisted

Accuracy Speed

???

Automatic

Automatic

Differentiation: Divided

Differentiation f

f ( x h) f ( x ) / h

x

Fast & accurate Differences

AD+Brains

derivatives, fast

Automatic Differentiation (AD)

- Theoretical Underpinnings -

Automatic Differentiaton (AD)

Given a computer program, AD generates a new program,

which applies the chain rule of differential calculus, e.g.,

d f ( w, v ) f d w f d v

*

*

dx

w dx v dx

f f

to elementary operations (i.e.,

are known).

,

w v

• AD does not generate truncation- or cancellation errors.

• AD generates a program for the computation of derivative

values, not derivative formulae.

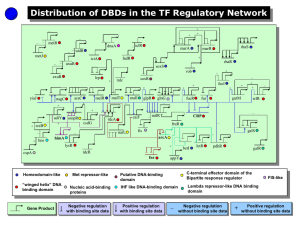

Program ~ Computational Graph

• A computer program can be viewed as the “generator” of a “computational

graph.”

• Each node in the graph corresponds to a value computed by the program

executing with a specific set of data.

subroutine f(x,y)

real x(2), y(2)

x(1)S0

do i = 1, 2

if ((x(i)) .ge. 0) then x(2)P0

y(i) = sqrt(x(i))

else

y(i) = 2.0 * x(i)

endif

enddo

y(2) = y(1) * y(2) * x(2)

return

end

The code

The computational graph

(v(1), v(2)) == inputs (x(1), x(2)), and (v(3), v(6)) == outputs (y(1), y(2)).

Linearized Computational Graph

• The “linearized computational graph” extends the “computational graph” with

partial derivatives for each arc (so-called elementary partial derivatives).

• Partial derivatives may depend on input function values for nonlinear

operations, but are constant for linear operations.

subroutine f(x,y)

real x(2), y(2)

do i = 1, 2

if ((x(i)) .ge. 0) then

y(i) = sqrt(x(i))

else

y(i) = 2.0 * x(i)

endif

enddo

y(2) = y(1) * y(2) * x(2)

return

end

The code

The linearized computational graph

AD ~ Path Accumulation

Let Pm,n be the set of all

paths from node m to node

n in the graph, and let i

j indicate that node i is a

direct predecessor of node

j in the graph. Then...

dv(n)

v ( j )

dv(m) pPm,n i j v (i )

i,j p

The derivative of v(n) with respect to v(m) is the sum over

all paths of the product of the partials along that path.

dy ( 2) dv( 6 ) v (6) v (3)

*

dx (1) dv( 1 ) v (3) v (1)

dy ( 2) dv( 6 ) v (6) v (5) v ( 4) v (6) v (5)

*

*

*

dx ( 2) dv( 2 ) v (5) v ( 4) v ( 2) v (5) v ( 2)

AD ~ Path Accumulation

The derivative of v(n) with respect to v(m) is the sum over

all paths of the product of the partials along a path from

v(m) to v(n).

The various AD techniques can be interpreted as exploiting

different strategies for computing this sum over all paths.

(possible because of associativity of chain rule).

There is no known algorithm for computing derivatives of a

program/computational graph with minimal complexity

(conjectured to be NP-hard).

The Forward Mode

Associate a “gradient” with every program variable and

apply derivative rules for elementary operations.

t:=1.0

do i=1 to n step 1

if (t>0) then

t := t*x(i);

endif

endif

t:=1.0; t:=0.0;

do i=1 to n step 1

if (t>0) then

t:= x(i)*t+t*x(i);

t := t*x(i);

endif

endif

The computation of p (directional) derivatives requires

gradients of length p many vector linear combinations,

e.g., x(i) = (0,.,0,1,0,.,0) results in t == dt/dx(1:n).

The Reverse Mode

Associate an “adjoint” with every program variable and

apply the following rules to the “inverted” program:

s = f(v,w) _v += ds/dv* _s; _w += ds/dw* _s;

tval(0):=1.0; tctr:=0;

do i=1 to n step 1

if (tval(tctr)>0) then

jump(i):=‘true’; tctr=tctr+1;

tval(tctr):=tval(tctr-1)*x(i);

2. Step:

Adjoint computation

_tval(tctr)=1.0,

all other _*=0.

Upon exit,

_x(i)==dt/dx(i)

1. Step:

Reversible program

(Single Assignment Form)

do i=n downto 1 step -1

if (jump(i) == ‘true’) then

_tval(tctr-1)+=x(i)*_tval(tctr);

_x(i)+=tval(tctr-1)*_tval(tctr);

_tval(tctr)=0; tctr=tctr-1;

Remarks on AD (inputs x, outputs y)

FM = Forward Mode

computes dy/dx*S (S is called “seed matrix”) by propagating

sensitivities of intermediate values with respect to input values.

For p input values of interest, runtime and memory scale at

most with p. May be much less e.g. for sparse Jacobians.

RM = Reverse Mode

computes WT *dy/dx by propagating sensitivities of output

values with respect to intermediate values.

For q output values of interest, RM runtime scales with q.

Memory requirements are harder to predict, and greatly depend

on structure of program. Great for computing „long gradients“.

AD Tools

ADIFOR 2.0 and ADIC

n

F77 or C code for f

ADIFOR

x(n) independent,

y(m) dependent var’s ADIC

w.r.t. differentiation

Runtime “seed matrix” S

allows computation of

• whole Jacobian

• chaining of derivatives

• exploitation of sparsity

• Jacobian-vector products

• column selection

F77 or C

m

code for

y

x

p

S

ADIFOR 2.0 (Fortran 77):

w/ Alan Carle and Mike

Fagan, Rice University

ADIC (ANSI-C):

w/ Paul Hovland and

Boyana Norris, MCS/ANL

Recent versions of

ADIFOR/ADIC also compute

2nd order derivatives.

Sample ADIFOR 2.0 Scriptfile

# subroutine to be differentiated

AD_TOP

= deba

# number of derivatives to be computed

AD_PMAX

=5

# independent variables

AD_IVARS

= b,c,d,nu100,nu210

# dependent variables

AD_OVARS

= hmin,pmaxe,cfhp

# Name of file listing names of files

# containing deba and all subroutines called by it.

AD_PROG

= deba.cmp

ADIFOR/ADIC Processing

ANSI-C or

Fortran Code

Control

Files

After initial setup,

usually only input

code changes.

ADIC or

ADIFOR

Code with

Derivatives

Libraries, e.g.,

• SparsLinC

• ADIntrinsics

Compile

& Link

User’s

Derivative

Driver

Derivative

Program

ADIFOR 2.0-generated Code

w = z(1)*z(2)*z(3)*z(4)

r1_v=z(1)*z(2)

r2_v=r1_v*z(3)

r1_b=z(4)*z(3)

r2_b=z(4)*r1_v

r3_b=r1_b*z(2)

r4_b=r1_b*z(1)

do g_i = 1,g_p

g_w(g_i) =

+

+

+

enddo

w = r2_v * z(4)

dw/dz(4)

dw/dz(3)

dw/dz(1)

dw/dz(2)

r3_b

r4_b

r2_b

r2_v

*

*

*

*

is transformed into

reverse (or

adjoint) mode

of AD

g_z(g_i,1)

g_z(g_i,2)

g_z(g_i,3)

g_z(g_i,4)

original value

g_w(:), g_z(:,i) contain derivatives

dw/dz(i) * S and d z(i)/dx * S.

g_p is the no. of columns of S.

forward mode of AD

Typical Code Expansion ~ Factor 3

ADIC-generated Code

w = z[1]*z[2]*z[3]*z[4]

ad_loc_0

ad_loc_1

ad_loc_2

ad_adj_0

ad_adj_1

ad_adj_2

ad_adj_3

=

=

=

=

=

=

=

is transformed into

DERIV_VAL(z[1])*DERIV_VAL(z[2]);

ad_loc_0 * DERIV_VAL(z[3]);

ad_loc_1 * DERIV_VAL(z[4]);

ad_loc_0 * DERIV_VAL(z[4]);

DERIV_VAL(z[3])*DERIV_VAL(z[4]);

DERIV_VAL(z[1])*ad_adj_1;

DERIV_VAL(z[2])*ad_adj_1;

dw/dz[4]

w

dw/dz[3]

dw/dz[2]

dw/dz[1]

reverse (or adjoint)

mode of AD

ad_grad_axpy_4(DERIV_GRAD(w), ad_adj_3,DERIV_GRAD(z[1]),

ad_adj_2,DERIV_GRAD(z[2]),ad_adj_0,DERIV_GRAD(z[3]),

ad_loc_1,DERIV_GRAD(z[4]))

DERIV_VAL(w) = ad_loc_2;

original value

DERIV_GRAD(x): Value of program variable x.

DERIV_GRAD(x): Deriv. Object assoc. with x.

forward mode

of AD

typedef struct {

double value;

double grad[ad_GRAD_MAX];

} DERIV_TYPE

ADIFOR Example – ILTIS (1)

Dynamic and kinetic behavior of an all-terrain

vehicle.

Mass matrix m, coriolis forces k, external forces

qe, generalized position coordinates y,

generalized velocity koordinates z.

Integration with explicit Euler for simplicity, would

like to obtain dm/dy, dm/dz, dk/dy, dk/dz, dqe/dy

und dqe/dz.

Program comprises 11,172 lines of code in 7

subroutines (some of them automatically

generated by NEWEUL).

ADIFOR Example – ILTIS (2)

parameter (ly=10, lz=10, lm=55 ,lk=10)

double precision y(ly),z(lz)

double precision m(lm),k(lk), qe(lk)

...

call integr(y,z,m,k,qe)

...

Code is in files

iltissub.f

iltisuser.f

cholesky.f

variab.f

subne6.f

subne8.f

subne10.f

Call-Tree:

Integr

variab

blatt

shock

rad

tire

ne6

ne8

ne10

cholesky

lesolv

ADIFOR Example – ILTIS (3)

iltis.comp:

iltis.adf:

AD_TOP = integr

top-level subroutine

AD_PMAX = 20

number of derivatives

AD_IVARS = y,z

independent variables

AD_OVARS = m,k,qe dependent variables

AD_SEP = _

for naming variables

AD_OUTPUT_DIR = ILTIS.AD output directory

AD_EXCEPTION_FLAVOR = performance

fastest code

AD_PROG = iltis.comp where is source located

Adifor AD_SCRIPT=iltis.adf

iltissub.f

iltisuser.f

cholesky.f

variab.f

subne6.f

subne8.f

subne10.f

ADIFOR

Example

– ILTIS (4)

ADIFOR generates

71,887 lines of code,

a factor 6,4 times the

length of the source

code.

This is due to nature

of NEWEUL code (no

loops, long righthand sides).

parameter (ly=10, lz=10 ,lp=6, lm=55 ,lk=10)

double precision y(ly),z(lz),m(lm),k(lk), qe(lk)

parameter (g_pmax_ = 20)

integer g_p_, ldg_y, ldg_z, ldg_k, ldg_qe, ldg_m

parameter (ldg_y = 20, ldg_z = 20, ldg_k = 20)

Parameter (ldg_qe = 20, ldg_m = 20)

double precision g_y(ldg_y, 10), g_z(ldg_z, 10),

*g_k(ldg_k, 10), g_qe(ldg_qe, 10), g_m(ldg_m, 55)

g_p_ = g_pmax_

c initialize seed matrices

do 20 i=1,ldg_y

do 10 j=1,ly

g_y(i,j) = 0.

if (i.eq.j) g_y(i,j) = 1.

10

continue

20 continue

do 40 i=1,ldg_z

do 30 j=1,lz

g_z(i,j) = 0.

if (i.eq.j+ly) g_z(i,j) = 1.

30

continue

40

continue

call g_integr(g_p_, y, g_y, ldg_y, z, g_z, ldg_z,

*m, g_m, ldg_m, k, g_k, ldg_k, qe, g_qe, ldg_qe)

Driver

For

ADIFORgenerated

code

Some Other AD Tools

(http://www.autodiff.org)

Fortran 77, some Fortran 90:

ADIFOR 3.0 (FM + RM): Mike Fagan & Alan Carle (Rice University)

TAF (FM + RM, d f + d2f): Ralf Giering & Thomas Kaminski (Fastopt GmbH)

TAPENADE (FM + RM): Laurent Hascoet (INRIA)

ANSI-C/C++:

ADOL-C: (FM/RM, dkf): Andreas Griewank & Andrea Walther

(TU Dresden)

TAC under development (see TAF above)

Matlab:

ADiMAT (Matlab, FM): C.B. and Andre Vehreschild (RWTH Aachen)

MAD (Matlab, FM): S. Forth and R. Kharche (U Hertfordshire)

ADMAT (Matlab, FM/RM+ d2f): Arun Verma (Cornell University)

Programming Issues

FLUENT.AD

(joint work w/ M. Bücker, A. Rasch, J. Risch, E. Slusanschi)

Collaborative research project SFB 540:

“Model-based experimental analysis of

kinetic phenomena in multiphase fluid

systems”

Simulation of wavy film running down a vertical wall

using FLUENT ® V4.5.2

Inst. for Heat Transfer and Air Conditioning, Aachen University

(Prof. Ulrich Renz)

Two-phase flow (wavy film and gas or steam)

k,eturbulence model with parameters ce1 , ce2 , cm , sk , se

Microscopic Particle Image Velocimetry (MPIV) measurements of velocity profile

in direction of wall at the bottom of the strip

Goal: Adjust model parameters to match simulation and experiment

Type Mismatches (Example A)

Original code

subroutine foo1(x)

double x(...)

subroutine foo2(x)

complex x(...)

double x(100000)

if (x is double)

call foo1(x)

elseif (x is complex)

call foo2(x)

endif

Type Mismatches (Example B)

Original code

subroutine foo1(x)

real x(*)

call foo2(x)

Otherwise x is not used in foo1

subroutine foo2(x)

integer x(*)

Different Lengths in COMMON

Original code

main prog

double x(10), y

common /data/ x, y

subroutine foo(...)

double u(10)

common /data/ u

Different # of Parameters

Original code

subroutine foo(x,y,z)

call foo(x,y)

Fluent versus Fluent.AD

Fluent

Fluent.AD

Ratio

AD/Original

Lines of Code

(w/ comments)

Lines of Code

(w/o

comments)

Number of

Files

Number of

subroutines

and functions

1,592,188

1,620,430

1.02

673,774 ADIFOR´s

706,463interprocedural

1.05

dependence analysis

realizes that many files

are not on the path from

1474

1025

0.69

„independent“ to

„dependent“ variables.

2411

1249

0.52

Overflows in Fluent.AD

Dynamic range of derivative code often is larger than

that of original code.

This may lead to overflows in the derivative code, in

particular in 32-bit arithmetic.

Original Code:

if (cendiv.eq.0.0)

cendiv = zero

endif

axp = axp+ap/cendiv

Note: The value of „zero“

is a small number, not 0.0

AD-generated code:

r4_v = ap / cendiv

r4_b = 1.0 / cendiv

r5_b = (-r4_v) / cendiv

do g_i_ = 1, g_pmax_

g_axp(g_i_) = g_axp(g_i_)

+ r4_b * g_ap(g_i_)

+ r5_b * g_cendiv(g_i_)

enddo

axp = axp + r4_v

Intrinsics and Discontinuities

Handling Intrinsics

(see also ADIFOR exception handling, joint work w/ G. Corliss & A. Griewank)

Some Language Intrinsics are not globally differentiable, e.g.

max(x,y).

An AD tool needs some value for d max(x,y)/ dx and

d max(x,y)/ dy even when x == y!

For example, rain = max(0.0,rain) suggests d max(x,y)/ dx = 0, d

max(x,y)/ dy = 1

There is no choice that is always “right”.

AD knows little about analysis

AD of lapy2 of the LAPACK

min(| x |, | y |)

x y max(| x |, | y |) 1

max(| x |, | y |)

2

2

to prevent overflow.

In AD-code of

x y

2

2

an exception occurs, if

| x || y |

But only necessary, if

x y0

2

Pitfalls

AD of Iterative Solvers

Iterative Evaluation of Implicit Function

Application of AD to iterative Solver

Results on Iterative Derivative Convergence

(joint work w/ A. Griewank, A. Carle, G. Corliss, K. Williamson)

TAMRF Transonic Flow Code

Delayed Derivative Convergence

TFS

CFD package developed at the Aerodynamics

Institute of Aachen University

2D/3D simulation of compressible / incompressible

flows – Navier-Stokes

~ 25,000 lines of (mostly) Fortran 77

~ 227 subroutines

TFS applications within SFB 401:

simulation of the wake flow field (e.g. vortex / jet

interaction)

as part of an optimization framework for airfoil design

shape optimization

Convergence of Derivatives

(joint work w/ M. Bücker, A. Rasch, E. Slusanschi)

cL

iterations

- Derivative usually converges

later than the original function

(also with DD).

cL

AD

AD start at 1001 iter.

AD start at 2001 iter.

- Converges faster, if AD starts

later.

Speeding up derivative

computation by delaying the

start of AD.

iterations

Delayed Velocity and

Pressure Derivatives

pressure

cD

pressure

x1

cD

x1

Performance of the Delayed Propagation

k = TFS iterations

l = TFS.AD

iterations

fact = 16.5 =

Exec time TFS.AD

Exec time TFS

Performance = k + fact*l

Impact of Strategic

Use of Mathematics

AD in Neutron Scattering

(M. Bücker and J. Ette)

Nonlinear least-squares formulation to determine

value of one (!) parameter from 12,237 data sets.

Theoretical scattering function consists of about

3,000 lines of Fortran code.

Originally used: NAG E04FDF, quasi-Newton

method with divided-difference gradient:

586 sec. on HP V-class, 13 evals of f(.),

warning about dubious quality of result!

AD enables use of modified Gauss-Newton

Method NAG E04GEF:

498 sec., 6 evals of (f, f), no warnings.

Eval. of f takes 38.7 sec., (f, f) takes 69.2 sec.

A Closer Look at g_voigt(...)

The subroutine „voigt“ is a leaf in the call graph and

accounts for almost 40% of the runtime of the code.

subroutine voigt(u,v,x,y)

Adifor 2.0

subroutine g_voigt(n,u,g_u,ldg_u,v,x,y,g_y,ldg_y)

From parameter list:

(x,y) input (complex number). (u,v) output.

v and x are passive (no gradients g_v, g_x)

u and y are active (gradients g_u, g_y)

ADIFOR‘s dependence analysis tells us that

only du/dy is needed!

Original Function voigt(..)

Let i = sqrt(-1) and x, y, u, v real.

Then for given z = x + i*y

subroutine voigt(u,v,x,y)

computes w = u + i*v defined by

where

This was not apparent from the 53 lines of code, which

was rather convoluted (8 goto‘s!). The author told us.

„Handcoded“ Derivative

After some tedious, but elementary mathematics:

Thus, derivatives can be computed by executing

original function voigt(..) first, followed by

Impact of Strategic Use of

Mathematics

Out of roughly 3000 lines of code, one subroutine comprising 53

lines of code was treated „special“, ADIFOR took care of the rest.

Handcoded derivative for subroutine voigt() is 6.9 times faster due

to exploitation of mathematical properties.

Overall time to compute (f, f) is reduced to 41.5 seconds from 69.2

seconds.

Overall execution time of parameter identification is reduced to 333

seconds from 498 seconds.

Inspection and optimization is possible due to transparency of

ADIFOR-generated output.

An AD-enhanced library routine would have been even better!

Going beyond Forward and

Reverse Mode

AD recap

FM = Forward Mode computes dy/dx*S.

Complexity ~ no. of columns of S.

RM = Reverse Mode computes WT *dy/dx.

Flop complexity ~ no. of rows of W, memory

depends. Great for computing „long gradients“.

The FM was discovered by Beda et al. In 1959, the

RM 1970 by Linnainma. Thereafter, several

rediscoveries and spirited discussions about

superiority of either approach.

AD & chain rule can be applied at arbitrary levels

of abstraction.

Chain rule is associative.

Neither FM nor RM is optimal

AD path accumulation can be

effected by a node elimination

game on the linearized graph.

FM

Minimal complexity AD

schemes requires elimination

of s as first node.

RM

Chaining vs. Preaccumulation

Given: f:x(n) -> y(k) & g:y(k) -> z(m) as well as a FM tool.

Would like to compute dz/dx.

Implicit chaining: (Default für FM tools)

Compute dy/dx with f.AD and use it as seed matrix for

g.AD Derivative vectors of length n are used

throughout.

Preaccumulation:

1) Compute dy/dx with f.AD 2) Compute dz/dy with

g.AD 3) Chain derivatives with matrix-matrix

multiply. Derivative vectors of length n in f.AD, of

length k in g.AD.

Hierarchical AD Approaches

View a program unit as

elementary unit for AD (“tile”)

and compute “its” derivatives

with appropriate method.

Apply chain rule.

In ADIFOR/ADIC 2-level

scheme:

Stmt derivs with RM

Overall chaining with FM

Potential for much greater

savings with larger tiles.

Stencil Tiling

Want to compute

d fval(:,:) / dx(1: p)

for i = 1 to nx do

for j = 1 to ny do

fval(i,j) = fct(13 x’s)

Compute d fct / d (13 ivar) independently, then

multiply with d (13 ivar)/d x(1:p).

d fct / d (13 ivar)

computed how?

ADIFOR

approach

Speedup for 60 Factor 2.2

indep. variables

Full Reverse

Mode

Factor 4.1

Level 1: Statement

Level 2: Loop Body

(Stencil)

Level 3: FM overall

Good choice of strategy depends on data- and

control- flow information.

AD at a matrix level

dg/dx = 4(Mb)T*y*xT

dg/dy = 2(Mb)T*A

This derivative is free at

the matrix level. Would

cost O(n3) with FM, O(n2 +

?) with RM at scalar level.

AD & Parallelism

Collaborative Research Center SFB 401

Flow Modulation and FluidStructure Interaction at

Airplane Wings

TFS (“The Flow Solver”) CFD package developed at

Aerodynamics Institute (AIA) of RWTH Aachen (Prof. Schröder)

• 2D/3D simulation of compressible and incompressible flows

• ~ 24,000 lines of (mostly) Fortran 77

• ~ 220 subroutines

Joint work w/ M. Bücker, A. Rasch, E. Slusanschi,

M. Meinke & F. Zurheide (AIA), A. Spiegel & C. Terboven (CCC)

TFS.AD

Need sensitivities of lift and drag coefficients w.r.t.

and y-angle (2 derivatives)

TFS

24,000 loc

230 subroutines

TFS.AD

ADIFOR 2

27,500 loc

102 subroutines

+ 128 routines from

original code

in total:

~ 38,000 loc

Domain Decomposition

P1

P2

Shared Memory

Parallelization

P1

Overlap (ghost cells)

Solution of Helmholtz equation on a

regular mesh, using an iterative Jacobi

method with over-relaxation

P2

Distributed Memory

Parallelization

Need to replicate and exchange

data at domain boundaries

AD of parallel TFS

Existing parallel version of TFS

Different processors operate on different blocks

Exchange of values at boundaries via MPI (MessagePassing Interface - distr. mem. Progr. Standard)

MPI-specific calls encapsulated in one single file

Easy to insert additional MPI calls for TFS.AD

(send/receive

like

write/read)

call

mpi_isend(a,isn,

mpi_double_precision,

(ipr - 1), 57,

+mpi_comm_world, request, ierror)

call mpi_isend(g_a, n*ldg_a, mpi_double_precision,

+(ipr - 1), 57, mpi_comm_world, request, ierror)

call mpi_recv(a, n, mpi_double_precision, (ipr - 1), 57,

+mpi_comm_world, request, ierror)

call mpi_recv(g_a, n*ldg_a, mpi_double_precision,

+(ipr - 1), 57, mpi_comm_world, request, ierror)

Performance of parallel TFS

3D Computation for BAC 3-11/RES/30/21

TFS (min:sec)

TFS.AD (min:sec)

(2 derivatives)

factor

serial

5:38

36:37

~6.5

parallel

(3 MPI tasks)

2:37

16:09

~6.2

speedup

2.15

2.27

CPU time for computing 100 iterations on Sun E2900 with 1200MHz US-IV

Block 0: 35640 points

Block 1: 17091 points

Block 2: 24687 points

Block-only

parallelization has

inherent work

imbalance!

TFS: Timeline 0:00 – 2:18

Block 0: 35640 points Process 0

Block 1: 17091 points Process 1

Block 2: 24687 points Process 2

TFS: Activity Charts 0:00 – 2:18

Process 0

Block 0: 35640 points

Block 1: 17091 points

Block 2: 24687 points

23.7 % of execution

time is spent for MPI

communication

Process 1

Process 2

even with perfect load

balance, possible speedup is

limited by # blocks

OpenMP

De facto standard for shared memory programming

language extensions for Fortran, C and C++ (compiler directives)

Each parallel region generates a new team of threads

private and shared variables

Worksharing constructs ( omp do ) indicate parallel execution on

different data

c$omp parallel

c$omp do private(k,tmp) shared(x,y,z)

do k=1,L

Different loop iterations

tmp=sin(z(k))

can be executed

x(k)= y(k)*tmp

independently by different

enddo

threads

c$omp end do

c$omp end parallel

OpenMP Parallelization of TFS

Select most time- consuming routines in TFS for

parallelization with OpenMP

routine

% cpu time

(TFS)

% cpu time

(TFS.AD)

Max. Speedup

(Amdahl‘s Law)

ausm

33.3

55.3

visc

12.5

12.1

viscsrc

9.9

8.3

funcq

5.7

1.7

vort

5.2

3.7

strain

3.6

3.7

70.2

84.8

TFS

TFS.AD

3.36

6.58

AD code may benefit

even more from OpenMPparallelizaion

AD of OpenMP code

c$omp parallel do private(k,tmp)

c$omp+shared(x,y,z)

OpenMP directives are

treated as comments by

do k=1,L

ADIFOR (leaves them

do i=1,n

where they are)

g_tmp(i)=cos(z(k))*g_z(i,k)

enddo

tmp=sin(z(k))

do i=1,n

g_x(i,k)= g_y(i,k)*tmp + y(k)*g_tmp(i)

enddo

x(k)= y(k)*tmp

enddo

c$omp end parallel do

AD of OpenMP code

c$omp parallel do private(k,tmp,g_tmp,i)

c$omp+shared(x,y,z,g_x,g_y,g_z)

OpenMP directives are

treated as comments by

do k=1,L

ADIFOR (leaves them

do i=1,n

where they are)

g_tmp(i)=cos(z(k))*g_z(i,k)

enddo

tmp=sin(z(k))

• Classification of derivative

do i=1,n

variables into shared and private can

g_x(i,k)= g_y(i,k)*tmp + y(k)*g_tmp(i)

be done automatically by AD tools

enddo

• New potential for parallelism

x(k)= y(k)*tmp

through AD-generated derivative

enddo

loops.

c$omp end parallel do

Performance of OpenMPparallelized TFS and TFS.AD

Speedup of TFS and TFS.AD for varying # threads

8

7

Speedup (TFS)

Speedup (TFS.AD)

6

5

4

3

2

1

0

1

2

4

8

12

16

20

E2900 1200MHz Ultra Sparc IV , 12 dual core CPU (24 PE), 48 GB Memory

SUN Fortran 95 v 7.1 compiler with flags: –fast –dalign –openmp

Speedup of hybrid MPI-OpenMP

parallelized TFS and TFS.AD

3 MPI tasks and varying # OpenMP threads

8

7

Speedup(TFS)

Speedup(TFS.AD)

6

5

4

3

2

1

0

3x1

3x2

3x3

3x4

3x5

3x6

3x7

E2900 1200MHz Ultra Sparc IV , 12 dual core CPU (24 PE), 48 GB Memory

SUN Fortran 95 v 7.1 compiler with flags: –fast –dalign –openmp

You cannot escape parallel

programming in the future

The Impact of Moore‘s Law

The number of transistors

on a chip is still doubling

every 18 months

Intel-Processors:

… but the clock speed is

no longer growing that fast.

Instead we’ll see many more

cores per chip!

Clock Speed (MHz)

Transistors (x1000)

Source: Herb Sutter

www.gotw.ca/publications/concurrencyddj.htm

Design Considerations

Power consumption (and heat generated)

Scales linearly with #transistors but quadratically with clock

rate

There is no Moore‘s Law for memory latency

Memory latency halves only every six years.

Conclusion for commodity chips: Instead of striving for

faster clock speed, tightly integrate multiple instances of

slower processor cores on a chip.

IBM Blue Gene is most radical example – 1024 CPUs/rack.

Sun Fire T2000 (chip size 378 mm2, 72 W)

Memory

Memory

Memory

Memory

25.6 GB/sec.

4 DDR2 memory controllers on chip

.75 MB L2

.75 MB L2

.75 MB L2

.75 MB L2

Internal Crossbar 134 GB/s

FPU

core

core

core

core

core

core

core

core

8 KB

L1

8 KB

L1

8 KB

L1

8 KB

L1

8 KB

L1

8 KB

L1

8 KB

L1

8 KB

L1

One Floating-Point unit (FPU) shared among all processors.

1GHz

Terminology

Chip(-level) multiprocessing (CMP) :

multiple processor "cores" are included in the same integrated

circuit, executing independent instruction streams.

Chip(-level) multithreading (CMT)

Multiple threads are executed within one processor core at the

same

time. Only one is active at a given time (time-slicing).

Chip Level Parallelism

Chip-Level Multiprocessing

= 1.11 ns

UltraSPARC IV+, CMP

superscalar, dual core

2 x 4 sparc v9 instr/cycle

1 active thread per core

= 0.66 ns

Opteron 875, CMP

superscalar, dual core

2 x 3 x86Multithreading

instr/cycle

Chip-Level

1 active thread per core

= 0.45 ns

UltraSPARC T1, CMP+CMT

Single issue, 8 cores

8 x 1 sparc v9 instr/cycle

4 active threads per core

context switch comes for free

= 1.0 ns

context switch

time

Sparse Matrix Vector Multiplication

What, if the Niagara could really compute with

floating point numbers …

900

800

700

MFLOPS / MOPS

SF T2000

SF T2000

(1x Niagara 1 GHz)

SF T2000

long long

SF 2900

long long

SF T2000

double

SF 2900

double

600

500

SF 2900

(12x US IV

1,2 GHz)

400

19,6 Mio Enonzeros

300

233,334 matrix dimension

200

225 MB memory footprint

100

0

0

5

10

15

20

# threads

25

30

35

Assessment of AD

Automatic Differentiation (AD) Tools: Efficient derivative

programs with very little human effort. Tools are improving,

both w.r.t. language coverage and performance.

AD + Brains: Methodologies for exploiting high-level user

knowledge about program structure and algorithmic

underpinnings.

Program-based sensitivity analysis with AD is a valuable

tool for accelerating the program development cycle

Sensitivities provide clues to inadequacies of the

model.

Sensitivities are inherently consistent with evaluation of

the model.

www.autodiff.org

Other AD Projects at Aachen

SHEMAT groundwater transport code

Inst. for Applied Geoscience, RWTH Aachen

SFB540: AD of DROPS (RWTH Aachen) incl. Grid

sensitivities

AD of Matlab and Java

AD of CapeML, a domain-specific languages based on

XML for chemical process control

an example of a „little language“

Sensitivity of optimal solutions

Exploitation of shared-memory parallelism in AD

www.sc.rwth-aachen.de

• Collaborators:

• Martin Bücker, Monika Petera, Arno Rasch, Emil Slusanschi, Andre

Vehreschild, Andreas Wolf, Inst. for Scientific Computing, RWTH Aachen

• Dieter an Mey, Samuel Sarholz, Christian Terboven, Center for Computing

and Communications, RWTH Aachen

• ICCS06 Conference, www.iccs.org:

• May 30, 2006, Redding,

Minisymposium on Automatic Differentiation and Applications

•Ressources:

• Bücker, M.; Corliss, G.; Hovland, P.; Naumann, U.; Norris, B. (Eds.),

Automatic Differentiation: Applications, Theory, and Implementations,

Springer 2006.

•G. Corliss, A. Griewank, C. Faure, L. Hascoet, and U. Naumann, editors,

Automatic Differentiation: From Simulation to Optimization, Springer, 2001.

• A. Griewank, Evaluating Derivatives: Principles and Techniques of

Algorithmic Differentiation, SIAM, Philadelphia, 2000.

• M. Berz, C. Bischof, G. Corliss, and A. Griewank, editors, Computational

Differentiation: Techniques, Applications, and Tools, SIAM, Philadelphia,

1996.