Evaluation, Measurement and Assessment Cluster 14


Basic Terminology

• Evaluation: judgment and decision making about performance

• Measurement: a number representing an evaluation

• Assessment: any of a variety of procedures used to gather information

• Norm-referenced testing: testing in which scores are compared with the average performance of others

• Criterion-referenced testing: testing in which scores are compared to a fixed performance standard; measures mastery of very specific objectives

• Example: Driver’s License Exam

Norm-Referenced Tests

• Performance of others serves as the basis for interpreting a person’s raw score (the actual number of correct test items)

• Three types: 1) Class 2) School District 3) National

• Score reflects general knowledge rather than mastery of specific skills and information

• Uses: measuring overall achievement and selecting a few top candidates

• Limitations:

– no indication of whether prerequisite knowledge for more advanced material has been mastered

– less appropriate for measuring affective and psychomotor objectives

– encourages competition and comparison of scores

Criterion-Referenced Tests

• Comparison with a fixed standard

• Example: Driver’s License

• Use: Measure mastery of a very specific objective when goal is to achieve set standard

• Limitations

– absolute standards difficult to set in some areas

– standards tend to be arbitrary

– not appropriate when comparison with others is valuable

Comparing Norm- and Criterion-Referenced Tests

• Norm-referenced

– General ability

– Range of ability

– Large groups

– Compares people to people (comparison groups)

– Selecting top candidates

• Criterion-referenced

– Mastery

– Basic skills

– Prerequisites

– Affective

– Psychomotor

– Grouping for instruction

What do Test Scores Mean?

Basic Concepts

• Standardized test: a test given under uniform conditions and scored and reported according to uniform procedures. Items and instructions have been tried out and administered to a norming sample.

• Norming sample: large sample of students serving as a comparison group for scoring standardized tests

• Frequency distribution: record showing how many scores fall into set groups, listing the number of people who obtained particular scores

• Central tendency: typical score for a group of scores. Three measures:

– Mean: average

– Median: middle score

– Mode: most frequent score (a bimodal distribution has two modes)

• Variability: degree of difference or deviation from the mean

– Range: difference between the highest and lowest scores

– Standard deviation: measure of how widely the scores vary from the mean; the farther scores fall from the mean, the greater the SD

– Normal distribution: the bell-shaped curve is an example (Figure 39.2, p. 509)

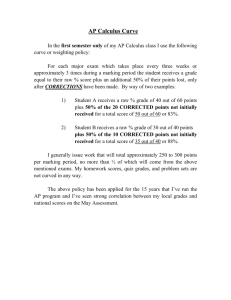

Frequency Distribution

Histogram (bar graph of a frequency distribution). Vertical axis: Students (0 to 3). Horizontal axis: Scores on Test (40 to 100, in steps of 5).
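The frequency distribution, the three measures of central tendency, and the range can be sketched in a few lines of Python. This is a minimal illustration only: the `scores` list is invented for the example and does not come from the figure or the text.

```python
from collections import Counter
from statistics import mean, median, multimode

# Hypothetical test scores, invented for illustration only.
scores = [55, 60, 60, 70, 75, 75, 75, 80, 85, 95]

# Frequency distribution: how many students obtained each score.
frequency = Counter(scores)

# Rough text histogram: one asterisk per student at each score.
for score in sorted(frequency):
    print(f"{score:>3} | {'*' * frequency[score]}")

average = mean(scores)                    # mean: arithmetic average
middle = median(scores)                   # median: middle score
most_frequent = multimode(scores)         # mode(s): most frequent score(s)
score_range = max(scores) - min(scores)   # range: highest minus lowest

print("mean:", average)
print("median:", middle)
print("mode(s):", most_frequent)
print("range:", score_range)
```

`multimode` returns a list, so a bimodal set of scores would simply yield two values.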

Calculating the Standard Deviation

• Calculate the mean: $\bar{X}$

• Subtract the mean from each score: $(X - \bar{X})$

• Square each difference: $(X - \bar{X})^2$

• Add all the squared differences: $\sum (X - \bar{X})^2$

• Divide by the number of scores: $\dfrac{\sum (X - \bar{X})^2}{N}$

• Find the square root: $\sqrt{\dfrac{\sum (X - \bar{X})^2}{N}}$
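The steps above translate directly into code. The following is a minimal sketch that divides by the number of scores N, as the slide does (the population form); the function name and the sample scores are invented for illustration.

```python
import math

def standard_deviation(scores):
    """Standard deviation following the listed steps (divides by N)."""
    n = len(scores)
    mean = sum(scores) / n                      # calculate the mean
    differences = [x - mean for x in scores]    # subtract the mean from each score
    squared = [d ** 2 for d in differences]     # square each difference
    total = sum(squared)                        # add all the squared differences
    variance = total / n                        # divide by the number of scores
    return math.sqrt(variance)                  # find the square root

# Hypothetical scores, invented for illustration only.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
sd = standard_deviation(scores)
print(sd)  # 2.0
```

For these scores the mean is 5, the squared differences sum to 32, and 32 / 8 = 4, whose square root is 2. Python's `statistics.pstdev` computes the same quantity.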

Normal Distributions

• The “bell curve”

• Mean, median, mode all at the center of the curve

• 50% of scores above the mean

• 50% of scores below the mean

• 68% of scores within one standard deviation from the mean
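The percentages above can be checked numerically. This sketch uses Python's `statistics.NormalDist` with a mean of 0 and a standard deviation of 1; the helper name `share_within` is invented for the example.

```python
from statistics import NormalDist

# Standard normal curve: mean 0, standard deviation 1.
standard_normal = NormalDist(mu=0, sigma=1)

def share_within(k):
    """Proportion of scores within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)

above_mean = 1 - standard_normal.cdf(0)   # proportion of scores above the mean
within_one_sd = share_within(1)           # proportion within one SD of the mean

print(f"above the mean: {above_mean:.0%}")
print(f"within one SD:  {within_one_sd:.0%}")
```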

Types of Scores

• Percentile rank: percentage of those in the norming sample who scored at or below a raw score

• Grade-equivalent score: tells whether students are performing at levels equivalent to those of other students at their own age/grade level

• averages obtained from different norming samples for each grade

• different forms of test often used for different grades

• high score indicates superior mastery of material at that grade level rather than the capacity/ability for doing advanced work

• often misleading

• Standard scores: scores based on the standard deviation

– z scores: standard score indicating the number of standard deviations a person is above or below the mean; scores below the mean are negative

– T scores: standard score with a mean of 50 and a standard deviation of 10; no negative numbers

– Stanine scores: whole-number scores from 1 to 9, each representing a wide range of raw scores
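The score types above can be sketched as small functions. The z score and T score follow from their definitions. The stanine function is an assumption: it uses the common approximation of a scale with mean 5 and standard deviation 2, rounded and limited to 1 through 9, whereas published tests assign stanines from percentile bands. All names and numbers are invented for illustration.

```python
def z_score(raw, mean, sd):
    """Number of standard deviations a raw score lies above or below the mean."""
    return (raw - mean) / sd

def t_score(z):
    """Rescale a z score to a mean of 50 and a standard deviation of 10."""
    return 50 + 10 * z

def stanine(z):
    """Approximate stanine (assumed formula: mean 5, SD 2, limited to 1..9)."""
    return max(1, min(9, round(2 * z + 5)))

def percentile_rank(raw, norming_sample):
    """Percentage of the norming sample scoring at or below the raw score."""
    at_or_below = sum(1 for score in norming_sample if score <= raw)
    return 100 * at_or_below / len(norming_sample)

# Hypothetical test with a mean of 70 and a standard deviation of 10.
z = z_score(85, mean=70, sd=10)
print(z, t_score(z), stanine(z))

# Hypothetical (tiny) norming sample.
print(percentile_rank(70, [50, 60, 70, 80, 90]))
```

A raw score of 85 on this hypothetical test is 1.5 standard deviations above the mean, so its T score is 65.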

Interpreting Test Scores

• No test provides a perfect picture of one’s abilities

• Reliability: consistency of test results

• Test-retest reliability: consistency of scores on two separate administrations of the same test

• Alternate-form reliability: consistency of scores on two equivalent versions of a test

• Split-half reliability: degree to which all the test items measure the same abilities

• True score: hypothetical mean of all of an individual’s scores if testing were repeated under ideal conditions

• Standard error of measurement: standard deviation of scores around the hypothetical true score; the smaller the standard error, the more reliable the test

• Confidence interval: range of scores within which an individual’s “true” score is likely to fall

• Validity

– Content-related: do test items reflect the content addressed in class and texts?

– Criterion-related (e.g., PSAT and SAT): predictor of performance based on a prior measure

– Construct-related (e.g., IQ, motivation): evidence gathered over years

See Guidelines, p. 514: Increasing Reliability and Validity
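The link between reliability, the standard error of measurement, and confidence intervals can be illustrated with a short sketch. The slides do not give a formula, so the one used here is an assumption taken from classical test theory: SEM = SD × √(1 − reliability). The test statistics below are invented for illustration.

```python
import math

def standard_error_of_measurement(sd, reliability):
    """Assumed classical-test-theory formula: SEM = SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1 - reliability)

def confidence_interval(observed, sem, width=1.0):
    """Band of observed score plus or minus `width` standard errors."""
    return observed - width * sem, observed + width * sem

# Hypothetical test: standard deviation 15, reliability coefficient 0.91.
sem = standard_error_of_measurement(sd=15, reliability=0.91)
low, high = confidence_interval(observed=100, sem=sem)

print(f"SEM: {sem:.1f}")
print(f"true score likely between {low:.1f} and {high:.1f}")
```

A band of one standard error on either side covers roughly 68% of the cases under a normal model; a higher reliability shrinks the standard error and narrows the band.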

Achievement Tests

• Measure how much student has learned in specific content areas

• Frequently used achievement tests

• Group tests for identifying students who need more testing or for homogeneous ability grouping

• Individual tests for determining academic level or diagnosis of learning problems

• The standardized scores reported:

– NS: National Stanine Score

– NCE: Normal Curve Equivalent

– SS: Scale Score

– NCR: Raw score

– NP: National Percentile

– Range

• See Figure 40.1, p. 520-521

Diagnostic Tests

• Identify strengths and weaknesses

• Most often used by trained professionals

• Elementary teachers may use for reading, math

Aptitude Tests

• Measure abilities developed over years

• Used to predict future performance

• SAT/PSAT

• ACT/SCAT

• IQ and aptitude

• Discussing test scores with families

• Controversy continues over fairness, validity, and bias

Issues in Testing

• Widespread testing (see Table 14.3, p. 534)

• Accountability and high-stakes testing: misuses (Table 40.3, p. 526)

• Testing teachers: accountability for student performance as well as teacher knowledge measured by teacher tests

See Point/Counterpoint, p. 525

Desired Characteristics of a Testing Program

1) Match the content standards of the district

2) Be part of a larger assessment plan

3) Test complex thinking

4) Provide alternative assessment strategies for students with disabilities

5) Provide opportunities for retesting

6) Include all students

7) Provide appropriate remediation

8) Make sure all students have had adequate opportunity to learn the material

9) Take into account the student’s language

10) Use test results FOR children, not AGAINST them

New Directions in Standardized Testing

• Authentic assessments

– Problem of how to assess complex, important, real-life outcomes

– some states are developing/have developed authentic assessment procedures

– “Constructed-response formats” have students create, rather than select, responses; this demands more thoughtful scoring

• Changes in the SAT: it now has a writing component

• Accommodating diversity in testing

Formative Assessments

• 2 basic purposes: 1) guide teachers in planning 2) help to identify problem areas

• Pretests

– Aid the teacher in planning by showing what learners know and don’t know

– Identify weaknesses: diagnostic

– Are not graded

Summative Assessments

• Occurs at the end of instruction

• Provides a summary of accomplishments

• End of chapter, midterms, final exam

• Purpose is to determine final achievement

Planning for Testing

• Test frequently

• Test soon after learning

• Use cumulative questions

• Preview ready-made tests

Objective Testing

• Objective: not open to many interpretations

• Measures a broad range of material

• Multiple choice most versatile

• Lower and higher level items

• Difficult to write well

• Easy to score

Key Principles: Writing Multiple Choice Questions

• Clearly written stem

• Present a single problem

• Avoid unessential details

• State the problem in positive terms

• Use “not,” “no,” or “except” sparingly, or mark them clearly: NOT, NO, EXCEPT

• Do not test extremely fine discriminations

• Put most wording in the stem

• Check for grammatical match between stem and alternatives

• Avoid exclusive and inclusive words such as all, every, only, never, none

• Avoid two distracters with the same meaning

• Avoid exact textbook language

• Avoid overuse of all or none of the above

• Use plausible distracters

• Vary the position of the correct answer

• Vary the length of correct answers, because long answers are often correct

• Avoid obvious patterns in the position of your correct answer

Essay Testing

• Requires students to create an answer

• Most difficult part is judging quality of answers

• Writing good, clear questions can be challenging

• Essay tests focus on less material

• Require a clear and precise task

• Indicate the elements to be covered

• Allow ample time for students to answer

• Should be limited to complex learning objectives

• Should include only a few questions

Evaluating Essays: Dangers

• Problems with subjective testing

– Individual standards of the grader

– Unreliability of scoring procedures

– Bias: wordy, neatly written essays with few grammatical errors often get more points even though they may be completely off point

Evaluating Essays: Methods

• Construct a model answer

• Give points for each part of the answer

• Give points for organization

• Compare answers on papers that you gave comparable grades

• Grade all answers to one question before moving on to the next question/test

• Have another teacher grade tests as a cross-check

Effects of Grades and Grading

• Effects of Failure: can be a positive or negative motivator

• Effects of Feedback

– helpful if the reason for a mistake is clearly explained, in a positive, constructive format, so that the same mistake is not repeated

– encouraging, personalized written comments are appropriate

– oral feedback and brief written comments for younger students

• Grades and Motivation

– grades can motivate real learning but appropriate objectives are the key

– should reflect meaningful learning

– working for a grade and working for learning should be the same

• Grading and Reporting

– Criterion-Referenced vs. Norm-Referenced

Criterion-Referenced

• Mastery of objectives

• Criteria for grades set in advance

• Student determines what grade they want to receive

• All students could receive an “A”

Norm-Referenced Grading

• Grading on the curve

• Students compared to other students

• “Average” becomes the anchor for other grades

• Fairness issue

• “Adjusting” the curve

• Point system for combining grades from many assignments

• Points assigned according to assignment’s importance and student’s performance

• Grades are influenced by level of difficulty of the test and concerns of the teacher

• Percentage grading involves assigning grades based on how much knowledge each student has acquired

• Grading symbols A-F commonly used to represent percentage categories

• Grades are influenced by level of difficulty of tests/assignments and concerns of the individual teacher
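A point system combined with percentage grading can be sketched as follows. Everything specific here is an assumption made for illustration: the assignments, their point values, and the 90/80/70/60 cutoffs for the A-F symbols are invented, since individual teachers set their own.

```python
# Hypothetical assignments: (points earned, points possible).
# More important assignments are worth more points.
assignments = {
    "quiz": (18, 20),
    "essay": (40, 50),
    "final exam": (85, 100),
}

def percentage(assignments):
    """Combine many assignments into one percentage of total points."""
    earned = sum(e for e, _ in assignments.values())
    possible = sum(p for _, p in assignments.values())
    return 100 * earned / possible

def letter_grade(percent, cutoffs=((90, "A"), (80, "B"), (70, "C"), (60, "D"))):
    """Map a percentage to a grading symbol using assumed cutoffs."""
    for minimum, letter in cutoffs:
        if percent >= minimum:
            return letter
    return "F"

percent = percentage(assignments)
grade = letter_grade(percent)
print(f"{percent:.1f}% -> {grade}")
```

Changing the `cutoffs` argument shows how the same performance can earn a different grade under a different teacher's standards.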

Contract System and Rubrics

• Specific types, quantity, and quality of work required for each grade

• Students “contract” to work for a grade; a great “start over”

• Can overemphasize quantity of work at the expense of quality

• Revise option: revise and improve work

Effort and Improvement Grades?

• BIG question: Should grades be based on how much a student improves or on the final level of learning?

• Using improvement as a standard penalizes the best students who naturally improve the least

• Individual Learning Expectations (ILE) system allows everyone to earn improvement points based on personal averages

• Dual Marking system is a way to include effort in grades

Parent/Teacher Conferences

• Make plenty of deposits starting on week two!

• Plan ahead

• Start positive

• Use active listening and problem solving

• Establish a partnership

• Plan follow-up contacts

• Tell the truth!

• Be prepared with samples

• End positive