

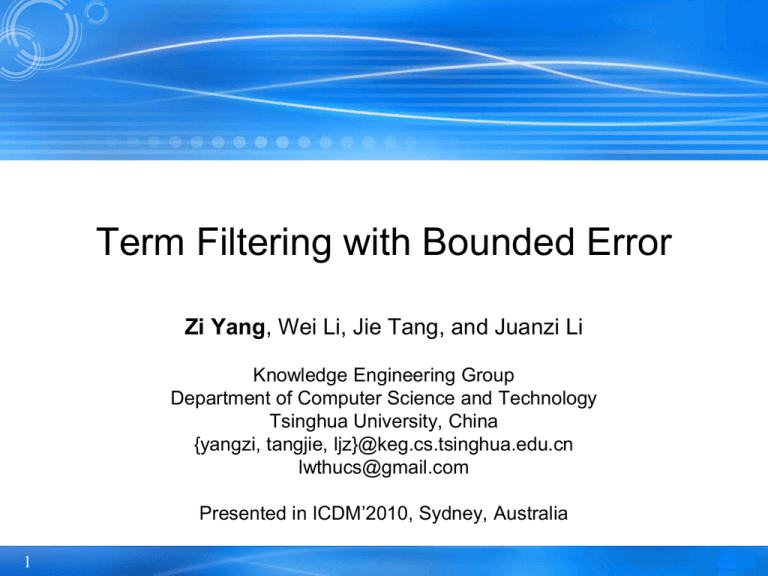

Term Filtering with Bounded Error

Zi Yang, Wei Li, Jie Tang, and Juanzi Li

Knowledge Engineering Group

Department of Computer Science and Technology

Tsinghua University, China

{yangzi, tangjie, ljz}@keg.cs.tsinghua.edu.cn

lwthucs@gmail.com

Presented at ICDM 2010, Sydney, Australia


Outline

• Introduction

• Problem Definition

• Lossless Term Filtering

• Lossy Term Filtering

• Experiments

• Conclusion

Introduction

• Term-document correlation matrix

– $M$: a $|V| \times |D|$ co-occurrence matrix; the entry for term $w_i$ and document $d_j$ is the number of times that $w_i$ occurs in $d_j$

– Applied in various text mining tasks

• text classification [Dasgupta et al., 2007]

• text clustering [Blei et al., 2003]

• information retrieval [Wei and Croft, 2006]

– Disadvantage

• high computational cost and intensive memory access

How can we overcome the disadvantage?
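The matrix $M$ can be made concrete with a short Python sketch (an illustration, not the authors' code). It assumes plain whitespace tokenization, and the helper name `build_term_document_matrix` is hypothetical. Rows are terms and columns are documents, matching the $|V| \times |D|$ layout above.

```python
from collections import Counter

def build_term_document_matrix(docs):
    """Return (vocab, M) where M[i][j] counts how often vocab[i] occurs in docs[j].

    Hypothetical helper: documents are tokenized by whitespace only.
    """
    counts = [Counter(doc.split()) for doc in docs]
    vocab = sorted(set().union(*counts))          # every term seen in any document
    M = [[c[term] for c in counts] for term in vocab]  # Counter returns 0 for absent terms
    return vocab, M

vocab, M = build_term_document_matrix(["A A B", "A A B"])
print(vocab)  # ['A', 'B']
print(M)      # [[2, 2], [1, 1]]
```

For a real corpus most entries of $M$ are zero, so a sparse format such as SciPy's `scipy.sparse.csr_matrix` would normally replace the nested lists.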


Introduction (cont'd)

• Three general methods:

– Improve the algorithm itself

– High-performance platforms

• multicore processors and distributed machines [Chu et al., 2006]

– Feature selection approaches [Yi, 2003] (we follow this line)


Introduction (cont'd)

• Basic assumption

– Each term captures more or less information.

• Our goal

– A subspace of features (terms)

– Minimal information loss

• Conventional solution:

– Step 1: Measure each individual term, or its dependency on a group of terms.

• Information gain or mutual information.

– Step 2: Remove features with low scores in importance or high scores in redundancy.

• Limitations

– A generic definition of information loss for a single term and for a set of terms, in terms of any metric?

– The information loss of each individual document, and of a set of documents?

Why should we consider the information loss on documents?
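Step 1 of the conventional solution can be sketched for the supervised case, assuming each document carries a class label: score every term by the mutual information between "the term occurs in the document" and the label, then keep the top-scoring terms. The helper names and the binary presence model are assumptions, and the redundancy part of Step 2 is not covered.

```python
import math
from collections import Counter

def mutual_information(docs, labels, term):
    """Mutual information (nats) between 'term occurs in the document' and the class label.

    docs is a list of sets of terms; labels holds one class label per document.
    """
    n = len(docs)
    present = [term in d for d in docs]
    joint = Counter(zip(present, labels))
    p_term, p_label = Counter(present), Counter(labels)
    mi = 0.0
    for (t, y), count in joint.items():
        # p(t, y) * log(p(t, y) / (p(t) p(y))), written with raw counts
        mi += (count / n) * math.log(count * n / (p_term[t] * p_label[y]))
    return mi

def select_terms(docs, labels, k):
    """Conventional filtering: keep the k terms with the highest MI score."""
    vocab = sorted(set().union(*docs))
    ranked = sorted(vocab, key=lambda w: mutual_information(docs, labels, w), reverse=True)
    return ranked[:k]

docs = [{"x", "z"}, {"x", "z"}, {"z"}, {"z"}]
labels = [1, 1, 0, 0]
print(select_terms(docs, labels, 1))  # ['x']
```

Each term is scored on its own here, which is the limitation raised on this slide: nothing in the score measures what an individual document loses when a term is removed.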


Introduction (cont'd)

• Consider a simple example: Doc 1 and Doc 2 both read "AA B", so the term vectors are $\mathbf{w}_A = (2, 2)$ and $\mathbf{w}_B = (1, 1)$.

• Question: can A and B be substituted by a single term S without any loss of information?

– Consider the information loss for terms only

• YES! Suppose the term error bounded by $\epsilon_V$ is measured with a state-of-the-art pseudometric such as cosine similarity: (2, 2) and (1, 1) point in the same direction.

– Consider both terms and documents

• NO! Both documents emphasize A more than B, so merging them loses information about the documents!

• What have we done?

– Information loss for both terms and documents

– The Term Filtering with Bounded Error (TFBE) problem

– Develop efficient algorithms



Problem Definition - Superterm

• Want

– less information loss

– a smaller term space

Group terms into superterms!

• Superterm: $s = \{n(s, d_j)\}_j$, where $n(s, d_j)$ is the number of occurrences of superterm $s$ in document $d_j$.

Problem Definition – Information loss

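• Information loss during term merging?

– User-specified distance measures $d(\cdot,\cdot)$

• Euclidean metric and cosine similarity.

– A superterm $s$ substitutes a set of terms $w_i$; $s$ can be chosen with different methods: winner-take-all, average-occurrence, etc.

• Information loss of terms: $\mathrm{error}_V(w) = d(\mathbf{w}, \mathbf{s})$.

• Information loss of documents: $\mathrm{error}_D(d) = d(\mathbf{d}, \mathbf{d}')$, where $\mathbf{d}'$ is a transformed representation for document $d$ after merging.

[Figure: Doc 1 and Doc 2 each contain "AA B", so A occurs (2, 2) times and B (1, 1) times. Merging A and B into superterm S by average occurrence gives S = (1.5, 1.5) in place of both rows; the figure annotates the resulting errorW and errorD.]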
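The example in the figure can be reproduced numerically. This Python sketch assumes average-occurrence merging and builds $\mathbf{d}'$ by giving every merged term the superterm's count; both choices, and all helper names, are hypothetical. It computes $\mathrm{error}_V$ and $\mathrm{error}_D$ for merging A and B under either cosine distance (1 minus cosine similarity) or the Euclidean metric.

```python
import math

def euclidean(u, v):
    return math.dist(u, v)

def cosine_distance(u, v):
    # 1 - cosine similarity: a pseudometric, so parallel vectors are at distance ~0
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 - dot / (math.hypot(*u) * math.hypot(*v))

def average_occurrence(rows):
    # Assumed merging rule: the superterm's count in each document is the members' mean count
    return [sum(col) / len(rows) for col in zip(*rows)]

def merge_errors(M, members, dist):
    """Return (error_V per merged term, error_D per document) after merging `members`.

    M maps each term to its row of counts; d' replaces every merged term's count
    by the superterm's count (an assumption about the transformed representation).
    """
    s = average_occurrence([M[w] for w in members])
    err_v = {w: dist(M[w], s) for w in members}
    err_d = []
    for j in range(len(s)):
        d = [M[w][j] for w in M]                                   # original document vector
        d_prime = [s[j] if w in members else M[w][j] for w in M]   # after merging
        err_d.append(dist(d, d_prime))
    return err_v, err_d

M = {"A": [2, 2], "B": [1, 1]}  # Doc 1 = Doc 2 = "AA B"
print(average_occurrence(list(M.values())))  # [1.5, 1.5]
err_v, err_d = merge_errors(M, ["A", "B"], cosine_distance)
```

Under cosine distance both term errors are numerically zero because (2, 2) and (1, 1) are parallel, while each document error is about 0.051 because (2, 1) and (1.5, 1.5) are not; this is the "YES for terms, NO for documents" situation from the simple example. With `euclidean`, every error is about 0.707.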

Problem Definition – TFBE

• Term Filtering with Bounded Error (TFBE)

– Minimize the number of superterms $|S|$ subject to the following constraints:

(1) $S = f(V) = \coprod_{s \in S} s$, where $f$ is a mapping function from terms to superterms;

(2) for all $w \in V$, $\mathrm{error}_V(w) \le \epsilon_V$;

(3) for all $d \in D$, $\mathrm{error}_D(d) \le \epsilon_D$.

Constraints (2) and (3) are bounded by user-specified errors.
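A candidate grouping can be checked against the TFBE constraints mechanically. The sketch assumes Euclidean $d(\cdot,\cdot)$, average-occurrence superterms, and a $\mathbf{d}'$ obtained by giving every term its superterm's counts; the function name `tfbe_feasible` is hypothetical. It returns whether constraints (2) and (3) hold, together with the objective $|S|$.

```python
import numpy as np

def tfbe_feasible(M, groups, eps_v, eps_d):
    """Check a partition of term indices against the TFBE constraints.

    Assumptions: Euclidean distance, average-occurrence superterms, and d' built by
    replacing each term's row of M with its superterm vector.
    Returns (constraints (2) and (3) both hold, |S|).
    """
    M = np.asarray(M, dtype=float)
    covered = sorted(i for g in groups for i in g)
    if covered != list(range(M.shape[0])):
        raise ValueError("groups must partition the term indices")  # constraint (1)
    M_prime = np.empty_like(M)
    for g in groups:
        idx = list(g)
        M_prime[idx] = M[idx].mean(axis=0)  # every member takes the superterm vector
    err_v = np.linalg.norm(M - M_prime, axis=1)  # error_V(w), one per term (row)
    err_d = np.linalg.norm(M - M_prime, axis=0)  # error_D(d), one per document (column)
    return bool(np.all(err_v <= eps_v) and np.all(err_d <= eps_d)), len(groups)

print(tfbe_feasible([[2, 2], [1, 1], [0, 3]], [[0, 1], [2]], 0.8, 0.8))  # (True, 2)
```

Tightening either bound below about 0.707 makes the same grouping infeasible, while the all-singleton grouping is always feasible with $|S| = |V|$.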


Lossless Term Filtering

• Special case

– NO information loss for any term or document: $\epsilon_V = 0$ and $\epsilon_D = 0$

• Theorem: The exact optimal solution of the problem is obtained by grouping terms that have the same vector representation.

• Algorithm

– Step 1: find "local" superterms for each document

• terms with the same number of occurrences within the document

– Step 2: add, split, or remove superterms in the global superterm set

• Is this case applicable? YES! On the Baidu Baike dataset (1,531,215 documents), the vocabulary shrinks from 1,522,576 to 714,392 terms, a reduction of more than 50%.
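Because the theorem reduces the lossless case to grouping terms whose rows of $M$ are identical, a simple hash-based grouping reaches the same partition. The sketch below is not the authors' two-step add/split/remove procedure. It assumes sparse input (one `{term: count}` dict per document) and uses a hypothetical function name; it keys every term by its list of (document, count) pairs, which is the per-document "same occurrences" information from Step 1 collected across all documents.

```python
from collections import defaultdict

def lossless_superterms(docs):
    """Group terms whose rows of M are identical (the eps_V = eps_D = 0 case).

    docs: one {term: count} dict per document (hypothetical sparse input).
    Returns the superterms as sorted lists of terms.
    """
    # Per-document view: record (document index, count) for every occurring term.
    signature = defaultdict(list)
    for j, counts in enumerate(docs):
        for term, n in counts.items():
            if n > 0:
                signature[term].append((j, n))
    # Documents are visited in order, so equal signatures mean equal rows of M.
    groups = defaultdict(list)
    for term, sig in signature.items():
        groups[tuple(sig)].append(term)
    return sorted(sorted(g) for g in groups.values())

docs = [{"A": 2, "B": 2, "C": 1}, {"A": 1, "B": 1, "C": 1}, {"D": 3}]
print(lossless_superterms(docs))  # [['A', 'B'], ['C'], ['D']]
```

A and B share the row (2, 1, 0) and merge with zero error, whereas C differs in the first document and stays separate.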


Lossy Term Filtering

• General case: $\epsilon_V > 0$ and $\epsilon_D > 0$, which is NP-hard!

• A greedy algorithm

– Step 1: Consider the constraint given by $\epsilon_V$

• Similar to the Greedy Cover Algorithm

– Step 2: Verify the validity of the candidates with $\epsilon_D$

• Computational complexity

– $O(|V|^2 + |V|T|D| + T'(|V|^2 + T|D|))$, with #iterations ≪ $|V|$

– Parallelize the computation of distances

• Further reduce the size of candidate superterms? Locality-sensitive hashing (LSH)
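The slides only outline the greedy algorithm. The following is a hypothetical simplification in Python, not the authors' algorithm. It assumes Euclidean distance and average-occurrence superterms. Candidates are the $\epsilon_V$-balls around each uncovered term, and the largest one is taken first, as in greedy set cover. A candidate is shrunk until every member lies within $\epsilon_V$ of its superterm vector, and it is then checked against $\epsilon_D$, falling back to a singleton if that check fails. Parallel distance computation and LSH are left out.

```python
import numpy as np

def greedy_term_filtering(M, eps_v, eps_d):
    """Hypothetical greedy sketch for lossy TFBE (Euclidean distance, average occurrence).

    Returns the superterms as sorted lists of term (row) indices of M.
    """
    M = np.asarray(M, dtype=float)
    uncovered = set(range(M.shape[0]))
    doc_sq_err = np.zeros(M.shape[1])  # running squared error_D of every document
    groups = []
    while uncovered:
        order = sorted(uncovered)
        # Step 1: one candidate per uncovered term (its uncovered eps_V-neighbours);
        # like greedy set cover, take the candidate that covers the most terms.
        center, members = None, []
        for c in order:
            cand = [w for w in order if np.linalg.norm(M[w] - M[c]) <= eps_v]
            if len(cand) > len(members):
                center, members = c, cand
        # Shrink until every member is within eps_V of the superterm vector.
        while True:
            s = M[members].mean(axis=0)
            dists = np.linalg.norm(M[members] - s, axis=1)
            if dists.max() <= eps_v:
                break
            members.pop(int(dists.argmax()))
        # Step 2: verify against eps_D; if violated, keep the center term on its own.
        new_sq_err = doc_sq_err + ((M[members] - s) ** 2).sum(axis=0)
        if np.sqrt(new_sq_err).max() > eps_d:
            members, new_sq_err = [center], doc_sq_err
        groups.append(sorted(members))
        doc_sq_err = new_sq_err
        uncovered -= set(members)
    return groups

M = [[2, 2], [2.1, 2], [0, 5], [0, 5.1], [9, 0]]
print(greedy_term_filtering(M, 0.2, 1.0))  # [[0, 1], [2, 3], [4]]
```

The document error can be updated incrementally because each merge only changes rows that still hold their original counts. With $\epsilon_V = \epsilon_D = 0$ the sketch merges only identical rows, which matches the lossless special case.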

Efficient Candidate Superterm Generation

• LSH

– Basic idea: terms with the same hash value (under several randomized hash projections) are close to each other in the original space.

– Hash function for the Euclidean distance [Datar et al., 2004]:

$h_{\mathbf{r},b}(\mathbf{w}) = \left\lfloor \frac{\mathbf{r} \cdot \mathbf{w} + b}{w(\epsilon_V)} \right\rfloor$

where $\mathbf{r}$ is a projection vector drawn from the Gaussian distribution, $b$ is a random offset drawn uniformly from $[0, w(\epsilon_V)]$, and $w(\epsilon_V)$ is the width of the buckets (assigned empirically).

– Now search for $w(\epsilon_V)$-near neighbors in all the buckets!

(Figure modified based on http://cybertron.cg.tu-berlin.de/pdci08/imageflight/nn_search.html)
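The hash function above can be sketched with NumPy. This is a minimal illustration under stated assumptions: a single hash table whose key concatenates `n_projections` hash values, and hypothetical names `lsh_buckets`, `width` (standing in for $w(\epsilon_V)$), and `seed`. Only the form of $h_{\mathbf{r},b}$ comes from Datar et al. (2004).

```python
import numpy as np
from collections import defaultdict

def lsh_buckets(M, width, n_projections=4, seed=0):
    """Bucket term vectors (rows of M) with p-stable LSH for Euclidean distance.

    h_{r,b}(w) = floor((r . w + b) / width), with r ~ N(0, I) and b ~ U[0, width).
    Terms whose n_projections hash values all agree share a bucket.
    """
    M = np.asarray(M, dtype=float)
    rng = np.random.default_rng(seed)
    R = rng.standard_normal((n_projections, M.shape[1]))  # Gaussian projection vectors r
    b = rng.uniform(0.0, width, size=n_projections)       # random offsets b
    H = np.floor((M @ R.T + b) / width).astype(int)       # |V| x n_projections hash values
    buckets = defaultdict(list)
    for i, key in enumerate(map(tuple, H)):
        buckets[key].append(i)
    return list(buckets.values())

buckets = lsh_buckets([[1, 2, 3], [1, 2, 3], [100, -50, 7]], width=0.5)
```

Only terms that share a bucket then need an exact $\epsilon_V$ check, which shrinks the candidate sets of the greedy step. Using several independent hash tables, as is common with LSH, would lower the chance that true neighbours are split across bucket boundaries.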


Experiments

• Settings

– Datasets

• Academic: ArnetMiner (10,768 papers and 8,212 terms)

• 20-Newsgroups (18,774 postings and 61,188 terms)

– Baselines

• Task-irrelevant feature selection: document frequency criterion (DF), term strength (TS), sparse principal component analysis (SPCA)

• Supervised method (classification only): Chi-statistic (CHIMAX)
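Two of the baselines have short textbook definitions. They are sketched below as assumptions, not as the configurations used in these experiments. DF counts how many documents contain each term, and CHIMAX scores a term by its largest χ² statistic over the classes, computed from the usual 2×2 term/class contingency table. TS and SPCA are not sketched.

```python
from collections import Counter

def document_frequency(docs):
    """DF criterion: how many documents contain each term (docs are sets of terms)."""
    return Counter(t for d in docs for t in d)

def chi_max(docs, labels, term):
    """CHIMAX: the largest chi-square statistic between `term` and any single class."""
    n = len(docs)
    best = 0.0
    for cls in set(labels):
        # 2x2 contingency table: term present/absent vs. in class/not in class
        present_in = sum(1 for d, y in zip(docs, labels) if term in d and y == cls)
        present_out = sum(1 for d, y in zip(docs, labels) if term in d and y != cls)
        absent_in = sum(1 for d, y in zip(docs, labels) if term not in d and y == cls)
        absent_out = n - present_in - present_out - absent_in
        denom = ((present_in + absent_in) * (present_out + absent_out)
                 * (present_in + present_out) * (absent_in + absent_out))
        if denom:
            stat = n * (present_in * absent_out - absent_in * present_out) ** 2 / denom
            best = max(best, stat)
    return best

docs = [{"x", "z"}, {"x", "z"}, {"z"}, {"z"}]
labels = [1, 1, 0, 0]
print(chi_max(docs, labels, "x"))  # 4.0
```

A term that occurs in every document, such as "z" here, has the highest DF but a CHIMAX score of 0, which shows how differently the two criteria rank terms.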

Experiments (cont'd)

• Filtering results on the Euclidean metric

– Findings

• Errors ↑, term ratio ↓

• Same bound for terms, bound for documents ↑: term ratio ↓

• Same bound for documents, bound for terms ↑: term ratio ↓

Experiments (cont'd)

• The information loss in terms of error

[Figure panels: Avg. errors; Max errors]

Experiments (cont'd)

• Efficiency performance of the parallel algorithm and LSH*

* Optimal result among different tuned choices of $w(\epsilon_V)$

Experiments (cont'd)

• Applications

– Classification (20NG)

– Clustering (Arnet)

– Document retrieval (Arnet)



Conclusion

• Formally define the problem and perform a theoretical investigation

• Develop efficient algorithms for the problems

• Validate the approach through an extensive set of experiments with multiple real-world text mining applications

Thank you!


References

• Blei, D. M., Ng, A. Y. and Jordan, M. I. (2003). Latent Dirichlet Allocation. Journal of Machine Learning Research 3, 993-1022.

• Chu, C.-T., Kim, S. K., Lin, Y.-A., Yu, Y., Bradski, G., Ng, A. Y. and Olukotun, K. (2006). Map-Reduce for Machine Learning on Multicore. In NIPS '06, pp. 281-288.

• Dasgupta, A., Drineas, P., Harb, B., Josifovski, V. and Mahoney, M. W. (2007). Feature selection methods for text classification. In KDD '07, pp. 230-239.

• Datar, M., Immorlica, N., Indyk, P. and Mirrokni, V. S. (2004). Locality-sensitive hashing scheme based on p-stable distributions. In SCG '04, pp. 253-262.

• Wei, X. and Croft, W. B. (2006). LDA-Based Document Models for Ad-hoc Retrieval. In SIGIR '06, pp. 178-185.

• Yi, L. (2003). Web page cleaning for web mining through feature weighting. In IJCAI '03, pp. 43-50.