Exploring Parallelism with

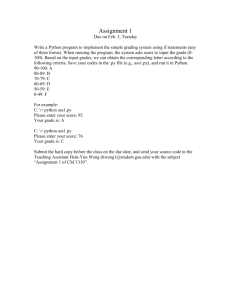

Joseph Pantoga, Jon Simington

Issues Between Python and C

- Python is inherently slower than C
  - Especially when using libraries that take advantage of Python’s relationship with C / C++ code
  - Thanks to the interpreter and the dynamic typing scheme
- Python 3 can be comparable to C in some respects, but is still slower in the average case (we use Python 2.7.10)
- Python too popular?
  - So many devs with so many ideas leads to many incomplete projects, but plenty of room for contribution

The Global Interpreter Lock

- A lock enforced by the Python interpreter that lets only one thread execute Python code at a time, so that non-thread-safe code never shares memory concurrently
- Limits the amount of parallelism achievable through concurrency when using multiple threads
- Very little, if any, speedup on a multiprocessor machine

Benchmark setup:

    from threading import Thread

    def countdown(n):
        # Purely CPU-bound loop: no I/O, so threads gain nothing from waiting
        while n > 0:
            n -= 1

    count = 100000000

Sequential: 7.8s

    countdown(count)

2 threads: 15.4s

    # Each thread counts down half of the total
    t1 = Thread(target=countdown, args=(count//2,))
    t2 = Thread(target=countdown, args=(count//2,))
    t1.start(); t2.start()
    t1.join(); t2.join()

4 threads: 15.7s

    # Each thread counts down a quarter of the total
    t1 = Thread(target=countdown, args=(count//4,))
    t2 = Thread(target=countdown, args=(count//4,))
    t3 = Thread(target=countdown, args=(count//4,))
    t4 = Thread(target=countdown, args=(count//4,))
    t1.start(); t2.start(); t3.start(); t4.start()
    t1.join(); t2.join(); t3.join(); t4.join()

(Test completed on a 3.1GHz x4 machine with Python 2.7.10.)

- The GIL ruins everything!
- Thread-based parallelism is often not worth it with Python

Getting around the GIL

- Make calls to outside libraries and circumvent the interpreter’s rules entirely
- Python modules that call external C libraries have inherent latency
- BUT! In certain cases, Python + C MPI performance can be comparable to the native C libraries

How does Python + C compare to C?

- The following was tested on the Beowulf-class cluster `Geronimo` at CIMEC with ten Intel P4 2.4GHz processors, each equipped with 1GB DDR 333MHz RAM, connected together on a 100Mbps ethernet switch.
The mpi4py library was compiled with MPICH 1.2.6.

Benchmark code (Python 2, using the mpi4py and numarray interfaces as they appear on the slide):

    from mpi4py import mpi
    import numarray as na

    # Send buffer of 2**20 double-precision values
    sbuff = na.array(shape=2**20, type=na.Float64)

    wt = mpi.Wtime()
    if mpi.even:
        # Even ranks send first, then receive from the next rank.
        # The slide passes `buffer` here; `sbuff` is assumed to be intended.
        mpi.WORLD.Send(sbuff, mpi.rank + 1)
        rbuff = mpi.WORLD.Recv(mpi.rank + 1)
    else:
        # Odd ranks receive first, then send back to the previous rank
        rbuff = mpi.WORLD.Recv(mpi.rank - 1)
        mpi.WORLD.Send(sbuff, mpi.rank - 1)
    wt = mpi.Wtime() - wt

    # Collect every rank's elapsed time on rank 0 and print it there
    tp = mpi.WORLD.Gather(wt, root=0)
    if mpi.zero:
        print tp

Source: http://www.cimec.org.ar/ojs/index.php/cmm/article/viewFile/8/11

The rest of the graphs display time analysis from similar programs, with only the MPI instruction differing (graphs from http://www.cimec.org.ar/ojs/index.php/cmm/article/viewFile/8/11).

- For large data sets, Python performs very similarly to C
- Python has less bandwidth available, as mpi4py uses an MPI library from C to perform general networking calls
- But, in general, Python is slower than C

Python’s Parallel Programming Libraries

- Message Passing Interface (MPI)
  - pyMPI
  - mpi4py: uses the C MPI library directly
  - Pypar
  - Scientific Python (MPIlib)
  - MYMPI
- Bulk Synchronous Parallel (BSP)
  - Scientific Python (BSPlib)

pyMPI

- Almost-full MPI instruction set
- Requires a modified Python interpreter, which allows for ‘interactive’ parallelism
- Not maintained since 2013
- The modified interpreter is the parallel application, so you have to recompile the interpreter whenever you want to do different tasks

Pydusa (formerly MYMPI)

- 33KB Python module, with no custom Python interpreter to maintain
- While the MPI Standard contains 120+ routines, MYMPI contains 35 “important” MPI routines
- Syntax is very similar to the Fortran and C MPI libraries
- Your Python code is the parallel application

pypar

- No modified interpreter needed!
- Still maintained on GitHub
- Few MPI interfaces are implemented
- Can’t handle topologies well and prefers simple data structures in parallel calculations

mpi4py

- Still being maintained on Bitbucket (updated 11/23/2015)
- Makes calls to external C MPI functions to avoid the GIL
- Attempts to borrow ideas from other popular modules and integrate them together

Scientific Python

- GREAT documentation, which makes it easy to use with their examples
- Supports both MPI and BSP
- Requires installation of both an MPI and a BSP library

Is Parallelism Fully Implemented?

- From our research so far, we have not found a publicly available Python package that implements the full MPI instruction set
- Not all popular languages have complete and extensive libraries for every task or use case!

Conclusion

- You CAN create parallel programs and applications with Python
- Doing so efficiently can require the compilation of a large custom Python interpreter
  - Should they try to keep it in future versions or even maintain the current implementations?
- From our research, it seems the community has done just about all it could to bring parallelism to Python, but some sacrifices have to be made, mainly a restriction on which data types can and can’t be supported

Conclusion Cont.
- Maybe Python isn’t the best language to implement parallel algorithms in, but there are many other languages besides C and Fortran which have interesting approaches to solving parallel problems

Julia

- Really good documentation for parallel tasks, with examples
- Able to send a task to n connected computers and asynchronously receive the results back, both upon request and automatically when the task completes
- Has pre-defined topology configurations for networks, like all-to-all and master-slave
- Allows for custom worker configurations to fit your specific topology

Go

- Fairly good documentation, along with an interactive interpreter on the site to learn the basics without installing anything
- Initial installation comes with all required libraries for parallel coding, so there are no extra libraries to search for or install
- Lightweight and easy to learn
- Can write several parallel programs using simple functions in Go

Questions?

Sources

- http://www.researchgate.net/profile/Mario_Storti/publication/220380647_MPI_for_Python/links/00b495242ba3b30eb3000000.pdf
- http://www.researchgate.net/profile/Leesa_Brieger/publication/221134069_MYMPI__MPI_programming_in_Python/links/0c960521cd051bc649000000.pdf
- http://uni.getrik.com/wp-content/uploads/2010/04/pyMPI.pdf
- http://www.researchgate.net/profile/Konrad_Hinsen/publication/220439974_HighLevel_Parallel_Software_Development_with_Python_and_BSP/links/09e4150c048e4e7cd8000000.pdf
- http://www.researchgate.net/profile/Ola_Skavhaug/publication/222545480_Using_B_SP_and_Python_to_simplify_parallel_programming/links/0fcfd507e6cac3eb63000000.pdf
- http://downloads.hindawi.com/journals/sp/2005/619804.pdf
- http://geco.mines.edu/workshop/aug2010/slides/fri/mympi.pdf
- http://sourceforge.net/projects/pydusa/
- http://docs.julialang.org/en/latest/manual/parallel-computing/
- http://dirac.cnrs-orleans.fr/plone/software/scientificpython
- http://dirac.cnrs-orleans.fr/ScientificPython/ScientificPythonManual/
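
As a companion to the GIL countdown benchmark earlier in the deck, here is a minimal sketch of the same CPU-bound loop split across separate processes instead of threads. It assumes the standard-library `multiprocessing` module, which the slides do not discuss. The helper names `split_work` and `parallel_countdown` are hypothetical, and the iteration count is reduced from the slides' value to keep the demo short. Each process has its own interpreter and its own GIL, so the chunks can run on different cores. No timings are claimed here; results will vary by machine.

```python
import time
from multiprocessing import Pool


def countdown(n):
    """Same CPU-bound loop as the slides' benchmark; returns the final value."""
    while n > 0:
        n -= 1
    return n


def split_work(total, parts):
    """Divide `total` iterations into `parts` near-equal chunks (hypothetical helper)."""
    base, extra = divmod(total, parts)
    return [base + 1 if i < extra else base for i in range(parts)]


def parallel_countdown(total, workers):
    """Run the countdown in `workers` processes and return the elapsed seconds."""
    start = time.time()
    pool = Pool(processes=workers)
    try:
        # Each worker process counts down its own chunk independently
        pool.map(countdown, split_work(total, workers))
    finally:
        pool.close()
        pool.join()
    return time.time() - start


if __name__ == "__main__":
    count = 5000000  # assumption: far smaller than the slides' 100000000
    for workers in (1, 2, 4):
        elapsed = parallel_countdown(count, workers)
        print("%d process(es): %.2fs" % (workers, elapsed))
```

Processes do not share memory the way threads do, so arguments and results are pickled and sent between them. That cost is negligible for a loop like this, but it can dominate when large data structures are passed around.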