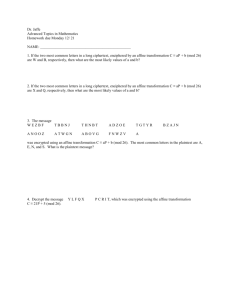

ppt - UCSD Computer Vision

Three Brown Mice: See How They Run

Kristin Branson, Vincent Rabaud, and Serge Belongie

Dept of Computer Science, UCSD http://vision.ucsd.edu

Problem

We wish to track three agouti mice from video of a side view of their cage.

Motivation

Mouse Vivarium Room

A vivarium houses thousands of cages of mice.

Manual, close monitoring of each mouse is impossible.

Motivation

[Diagram: Tracking Algorithm → Behavior Analysis Algorithm → Activity, Eating, Scratching, Reproduction, Rolling, …]

Automated behavior analysis will allow for

Improved animal care.

More detailed and exact data collection.

An algorithm that tracks individual mice is a necessity for automated behavior analysis.

A Unique Tracking Problem

Tracking multiple mice is difficult because

The mice are indistinguishable.

They are prone to occluding one another.

They have few (if any) trackable features.

Their motion is relatively erratic.

Simplifying Assumptions

We benefit from simplifying assumptions:

The number of objects does not change.

The illumination is relatively constant.

The camera is stationary.

Tracking Subproblems

We break the tracking problem into parts:

Track separated mice.

Detect occlusions.

Track occluded/occluding mice.

Tracking through Occlusion

Segmenting is more difficult when a frame is viewed out of context.

Using a depth ordering heuristic, we associate the front mouse at the start of an occlusion with the front mouse at the end of the occlusion.

We track mice sequentially through the occlusion, incorporating a hint of the future locations of the mice.

Outline

Background/Foreground classification.

Tracking separated mice.

Detecting occlusions.

Tracking through occlusion.

Experimental results.

Future work.

Background/Foreground

[Diagram: Image History → Modified Temporal Median → Estimated Background; Current Frame and Estimated Background → Thresholded Absolute Difference → Foreground/Background Classification]
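The slides do not describe how the temporal median is "modified". The sketch below therefore uses a plain per-pixel temporal median in its place, followed by a thresholded absolute difference. The function names and the `threshold` value are hypothetical.

```python
import numpy as np

def estimate_background(history):
    """Per-pixel temporal median over a stack of grayscale frames.

    history: array of shape (T, H, W). Stand-in for the slides'
    *modified* temporal median, whose modification is not specified here.
    """
    return np.median(np.asarray(history, dtype=float), axis=0)

def classify_foreground(frame, background, threshold):
    """Foreground mask: True where |frame - background| > threshold.

    threshold is a hypothetical tuning parameter (same units as intensity).
    """
    diff = np.abs(np.asarray(frame, dtype=float) - background)
    return diff > threshold
```

The median tolerates a mouse passing over a pixel in a minority of the history frames. This is the usual reason to prefer it over a running mean for background estimation.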

Tracking Separated Mice

We model the distribution of the pixel locations of each mouse as a bivariate Gaussian.

If the mice are separated, the foreground pixels can be modeled by a mixture of Gaussians (GMM), one component per mouse.

We fit the parameters using the EM algorithm.

[Figure: each mouse's Gaussian, showing its mean and covariance]
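The sketch below is a minimal NumPy EM loop for a 2-D Gaussian mixture fitted to foreground pixel coordinates. It makes three assumptions that the slides do not state: foreground pixels arrive as an (N, 2) array, the initial parameters come from the previous frame's estimates, and a small ridge `reg` is added to each covariance for numerical stability.

```python
import numpy as np

def gaussian_pdf(X, mean, cov):
    """Bivariate normal density evaluated at each row of X (shape N x 2)."""
    d = X - mean
    mahal = np.einsum("ni,ij,nj->n", d, np.linalg.inv(cov), d)
    return np.exp(-0.5 * mahal) / (2.0 * np.pi * np.sqrt(np.linalg.det(cov)))

def fit_gmm_em(X, means, covs, weights, n_iter=50, reg=1e-6):
    """Fit a K-component 2-D Gaussian mixture to pixel coordinates X with EM.

    means (K, 2), covs (K, 2, 2), weights (K,) are initial guesses.
    Returns the fitted means, covariances, weights and responsibilities.
    """
    X = np.asarray(X, dtype=float)
    means = np.array(means, dtype=float)
    covs = np.array(covs, dtype=float)
    weights = np.array(weights, dtype=float)
    K = len(weights)
    for _ in range(n_iter):
        # E-step: posterior probability that each pixel belongs to each mouse
        dens = np.stack([weights[k] * gaussian_pdf(X, means[k], covs[k])
                         for k in range(K)], axis=1)
        resp = dens / np.maximum(dens.sum(axis=1, keepdims=True), 1e-300)
        # M-step: re-estimate mixing weights, means and covariances
        nk = resp.sum(axis=0)
        weights = nk / len(X)
        means = (resp.T @ X) / nk[:, None]
        for k in range(K):
            d = X - means[k]
            covs[k] = (resp[:, k, None] * d).T @ d / nk[k] + reg * np.eye(2)
    return means, covs, weights, resp
```

Starting EM from the previous frame's parameters keeps the component labels attached to the same mice from frame to frame. This matters because the mice themselves are indistinguishable.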

Detecting Occlusions

Occlusion events are detected using the GMM parameters.

We threshold how “close” together the mouse distributions are.

The Fisher distance in the x-direction is the distance measure:

$$d_F\big((\mu_{x_1}, \sigma_{x_1}^2), (\mu_{x_2}, \sigma_{x_2}^2)\big) = \frac{(\mu_{x_1} - \mu_{x_2})^2}{(\sigma_{x_1}^2 + \sigma_{x_2}^2)/2}$$
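The sketch below checks every pair of fitted mouse Gaussians. It uses the x-direction Fisher distance d_F = (μ_x1 − μ_x2)² / ((σ²_x1 + σ²_x2)/2) on the x-marginals and flags a pair as occluding when d_F drops below a threshold. The slides do not give the threshold value, so `threshold` is a hypothetical parameter, and the function names are illustrative.

```python
import itertools

def fisher_distance_x(mu1, var1, mu2, var2):
    """x-direction Fisher distance: (mu1 - mu2)^2 / ((var1 + var2) / 2)."""
    return (mu1 - mu2) ** 2 / ((var1 + var2) / 2.0)

def detect_occlusions(means, covs, threshold):
    """Return index pairs (i, j) of mice whose distributions are 'close'.

    means: sequence of (x, y) means; covs: sequence of 2x2 covariances.
    Only the x-marginals (means[k][0], covs[k][0][0]) are used.
    """
    pairs = []
    for i, j in itertools.combinations(range(len(means)), 2):
        d = fisher_distance_x(means[i][0], covs[i][0][0],
                              means[j][0], covs[j][0][0])
        if d < threshold:
            pairs.append((i, j))
    return pairs
```

For example, two unit-variance mice whose x-means differ by 4 have d_F = 16. A third mouse 30 pixels away is far above any reasonable threshold.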

Tracking through Occlusion

The pixel memberships during occlusion events must be reassigned.

[Figure: Pixel Memberships, Frame t → “Best” Affine Transformation (a1, a2, a3, a4, a5, a6), Frame t to t+1 → Pixel Memberships, Frame t+1 → “Best” Affine Transformation, Frame t+1 to t+2 → …]

Best Affine Transformation

Affine Flow Assumptions

Affine flow estimation assumes

Brightness Constancy: image brightness of an object does not change from frame to frame.

$$I_x u + I_y v + I_t = 0$$

The per-frame motion of each mouse can be described by an affine transformation.

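$$\begin{bmatrix} u(x,y) \\ v(x,y) \end{bmatrix} = \begin{bmatrix} a_1 \\ a_4 \end{bmatrix} + \begin{bmatrix} a_2 \\ a_5 \end{bmatrix} x + \begin{bmatrix} a_3 \\ a_6 \end{bmatrix} y$$

[Figure: Frame t, Frame t+1]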

Standard Affine Flow

In general, these assumptions do not hold.

We therefore minimize $I_x u + I_y v + I_t$ in the least-squares sense.

The best $\mathbf{a}$ given only the affine flow cue minimizes

$$H_0[\mathbf{a}] = \sum_{(x,y) \in M} w(x,y)\,\big(\mathbf{z}^T \mathbf{a} + I_t\big)^2,$$

where $\mathbf{z} = [I_x,\ I_x x,\ I_x y,\ I_y,\ I_y x,\ I_y y]^T$.
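Substituting u = a1 + a2 x + a3 y and v = a4 + a5 x + a6 y into I_x u + I_y v gives exactly z^T a. Minimizing H0 is therefore a weighted linear least-squares problem with normal equations (Z^T W Z) a = −Z^T W I_t. The sketch below assumes the derivatives, coordinates and weights for the pixels in M are given as 1-D arrays. All function names are hypothetical.

```python
import numpy as np

def affine_design(Ix, Iy, x, y):
    """Stack rows z^T = [Ix, Ix*x, Ix*y, Iy, Iy*x, Iy*y], one per pixel in M."""
    return np.column_stack([Ix, Ix * x, Ix * y, Iy, Iy * x, Iy * y])

def affine_flow_lsq(Ix, Iy, It, x, y, w):
    """Minimize H0[a] = sum w (z^T a + It)^2 via the normal equations."""
    Z = affine_design(Ix, Iy, x, y)
    ZtW = Z.T * w                      # Z^T W with W = diag(w)
    return np.linalg.solve(ZtW @ Z, -ZtW @ It)

def affine_flow_field(a, x, y):
    """Flow at (x, y): u = a1 + a2 x + a3 y, v = a4 + a5 x + a6 y."""
    u = a[0] + a[1] * x + a[2] * y
    v = a[3] + a[4] * x + a[5] * y
    return u, v
```

Solving the 6×6 normal equations is cheap. However, the system becomes ill-conditioned when the mouse region has little texture. That weakness of the flow cue alone motivates adding a prior.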

Affine Flow Plus Prior

The affine flow cue alone does not give an accurate motion estimate.

Suppose we have a guess of the affine transformation, â, to bias our estimate.

The best $\mathbf{a}$ minimizes $H_0[\mathbf{a}]$ and is near $\hat{\mathbf{a}}$.

Affine Flow Plus Prior

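Our criterion is:

$$H[\mathbf{a}] = \sum_{(x,y) \in M} w(x,y)\,\big(\mathbf{z}^T \mathbf{a} + I_t\big)^2 + (\mathbf{a} - \hat{\mathbf{a}})^T\, \Sigma_a^{-1}\, (\mathbf{a} - \hat{\mathbf{a}}),$$

where the second term is a regularization term.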

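Taking the partial derivative of $H[\mathbf{a}]$ with respect to $\mathbf{a}$, setting it to 0, and solving for $\mathbf{a}$ gives:

$$\mathbf{a} = \big(Z^T W Z + \Sigma_a^{-1}\big)^{-1} \big(-Z^T W \mathbf{I}_t + \Sigma_a^{-1} \hat{\mathbf{a}}\big),$$

where the rows of $Z$ are the vectors $\mathbf{z}^T$ for the pixels in $M$, $W = \operatorname{diag}(w(x,y))$, and $\mathbf{I}_t$ stacks the temporal derivatives $I_t$.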
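The closed form follows from setting ∇H = 2 Z^T W (Z a + I_t) + 2 Σ_a^{-1}(a − â) = 0. The sketch below evaluates that formula and also computes H itself, so the result can be checked as a minimum. Function names are hypothetical, and Σ_a is assumed symmetric positive definite.

```python
import numpy as np

def prior_criterion(a, Z, w, It, a_hat, Sigma_a):
    """H[a] = sum w (z^T a + It)^2 + (a - a_hat)^T Sigma_a^{-1} (a - a_hat)."""
    r = Z @ a + It
    d = a - a_hat
    return float(np.sum(w * r ** 2) + d @ np.linalg.solve(Sigma_a, d))

def affine_flow_with_prior(Z, w, It, a_hat, Sigma_a):
    """a = (Z^T W Z + Sigma_a^{-1})^{-1} (-Z^T W It + Sigma_a^{-1} a_hat)."""
    ZtW = Z.T * w                    # Z^T W with W = diag(w)
    P = np.linalg.inv(Sigma_a)       # Sigma_a^{-1}
    return np.linalg.solve(ZtW @ Z + P, -ZtW @ It + P @ a_hat)
```

Σ_a sets the balance between the two cues. A very large Σ_a recovers the plain affine flow estimate. A very small Σ_a pins the answer to â.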

What is â?

We use the depth order cue to estimate â.

We assume that the front blob at the start of the occlusion and the front blob at the end of the occlusion are the same mouse.

[Figure: Start (frame 1); End (frame n)]

What is â?

The succession of frame-to-frame motions transforms the initial front mouse into the final front mouse.

We set the per-frame prior estimate â to reflect this.

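[Figure: mouse distributions (μ1, Σ1), (μ2, Σ2), (μ3, Σ3), (μ4, Σ4), …, (μn, Σn) at frames 1, 2, 3, 4, …, n; consecutive distributions are linked by the per-frame estimates â_{1:2}, â_{2:3}, â_{3:4}, …, and the first and last by the total transformation a_{1:n}]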

Affine Interpolation

We estimate the frame-to-frame motion, â, by linearly interpolating the total motion, a_{1:n}.

[Figure: mouse distributions (μ1, Σ1), (μ2, Σ2), (μ3, Σ3), (μ4, Σ4), …, (μn, Σn) at frames 1, 2, 3, 4, …, n, with the total motion a_{1:n} from frame 1 to frame n]

Affine Interpolation

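Given the initial and final mouse distributions, $(\mu_1, \Sigma_1)$ and $(\mu_n, \Sigma_n)$, we compute the total transformation $(\mathbf{t}_{1:n}, A_{1:n})$:

$$\mathbf{t}_{1:n} = \mu_n - \mu_1, \qquad A_{1:n} = \Sigma_n^{1/2}\, O^T\, \Sigma_1^{-1/2},$$

where $\mathbf{t}_{1:n}$ is the translation, $A_{1:n}$ captures rotation and skew, and $O$ is an orthogonal matrix.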
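Consider the map x ↦ μ_n + A_{1:n}(x − μ_1), that is, A_{1:n} applied about the mean. This about-the-mean reading is an assumption of this sketch. With A_{1:n} = Σ_n^{1/2} O^T Σ_1^{-1/2}, the map sends N(μ_1, Σ_1) to N(μ_n, Σ_n), because A Σ_1 A^T = Σ_n for any orthogonal O. The slides do not say how O is chosen, so the sketch takes it as an input and defaults to the identity. The function names are hypothetical.

```python
import numpy as np

def spd_power(S, power):
    """S**power for a symmetric positive-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs * vals ** power) @ vecs.T   # V diag(vals^power) V^T

def total_transform(mu1, Sigma1, mun, Sigman, O=None):
    """t_{1:n} = mu_n - mu_1,  A_{1:n} = Sigma_n^{1/2} O^T Sigma_1^{-1/2}.

    O: orthogonal 2x2 matrix; identity by default (an assumption).
    """
    if O is None:
        O = np.eye(2)
    t = np.asarray(mun, dtype=float) - np.asarray(mu1, dtype=float)
    A = spd_power(np.asarray(Sigman, float), 0.5) @ O.T @ spd_power(np.asarray(Sigma1, float), -0.5)
    return t, A
```

Any orthogonal O satisfies the covariance constraint. Choosing O therefore fixes the otherwise ambiguous rotation part of the total motion.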

Affine Interpolation

From the total transformation, we estimate the per-frame transformation:

$$\hat{\mathbf{t}} = \frac{\mathbf{t}_{1:n}}{n-1}, \qquad \hat{A} = A_{1:n}^{1/(n-1)}$$
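The sketch below splits the total transformation into n − 1 equal steps. The translation is divided evenly. The matrix gets the principal (n − 1)-th root, so that applying Â n − 1 times reproduces A_{1:n}. The helper names are hypothetical, and the eigendecomposition route assumes A_{1:n} is diagonalizable with no eigenvalues on the negative real axis.

```python
import numpy as np

def matrix_power_real(A, p):
    """Principal power A**p of a real 2x2 matrix via eigendecomposition.

    Assumes A is diagonalizable with no eigenvalues on the negative real axis,
    so the principal power is real; the tiny imaginary residue is dropped.
    """
    vals, vecs = np.linalg.eig(A)
    Ap = vecs @ np.diag(vals.astype(complex) ** p) @ np.linalg.inv(vecs)
    return Ap.real

def per_frame_transform(t_total, A_total, n):
    """t_hat = t_{1:n} / (n - 1),  A_hat = A_{1:n} ** (1 / (n - 1))."""
    steps = n - 1
    t_hat = np.asarray(t_total, dtype=float) / steps
    return t_hat, matrix_power_real(np.asarray(A_total, float), 1.0 / steps)
```

For a scaled rotation, the root splits the angle and the scale evenly across frames. For example, 2·R(0.9) over three steps becomes 2^(1/3)·R(0.3) per frame.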

Depth Order Heuristic

Estimating which mouse is in front relies on a simple heuristic: the front mouse is the one lowest in the image, that is, the one with the largest y-coordinate.
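The sketch below applies the heuristic at both ends of an occlusion and pairs the two front blobs. It assumes image coordinates with y increasing downward, so "lowest in the image" means largest y. The function names are hypothetical.

```python
def front_mouse(means):
    """Index of the blob whose mean is lowest in the image (largest y).

    means: sequence of (x, y) image coordinates, y increasing downward.
    """
    return max(range(len(means)), key=lambda k: means[k][1])

def match_front_mouse(start_means, end_means):
    """Pair the front blob at the occlusion start with the front blob at the end."""
    return front_mouse(start_means), front_mouse(end_means)
```

This pairing is the only identity link the occlusion tracker gets from the endpoints. The total transformation is then computed between these two blobs.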

Pixel Membership Estimation

We assign each pixel's membership based on a weighted sum of the proximity and motion similarity criteria.

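Proximity criterion:

$$J_l[p] = (p - \mu_{t-1})^T\, \Sigma_{t-1}^{-1}\, (p - \mu_{t-1})$$

Motion similarity criterion:

$$J_m[p] = \sum_{\text{local}} \Big[ (u_{\text{local}} - t_x)^2 + (v_{\text{local}} - t_y)^2 \Big],$$

where $(u_{\text{local}}, v_{\text{local}})$ is the local optical flow estimate.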
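The sketch below computes both criteria for every pixel and gives each pixel to the mouse with the smallest weighted sum J_l + λ J_m. It rests on several assumptions. The minimum-cost assignment rule is assumed. The weight `lam` is hypothetical. Local flow is taken as an (N, W) array of samples in a window around each pixel. Each mouse's (t_x, t_y) is taken to be the motion currently assigned to it.

```python
import numpy as np

def proximity_cost(P, mu_prev, Sigma_prev):
    """J_l[p] = (p - mu_{t-1})^T Sigma_{t-1}^{-1} (p - mu_{t-1}) for each row p of P."""
    d = P - mu_prev
    return np.einsum("ni,ij,nj->n", d, np.linalg.inv(Sigma_prev), d)

def motion_cost(u_local, v_local, tx, ty):
    """J_m[p] = sum over the local window of (u - t_x)^2 + (v - t_y)^2.

    u_local, v_local: (N, W) local optical-flow samples around each of N pixels.
    """
    return np.sum((u_local - tx) ** 2 + (v_local - ty) ** 2, axis=1)

def assign_memberships(P, u_local, v_local, mice, lam=1.0):
    """Label each pixel with the mouse minimizing J_l + lam * J_m.

    mice: list of (mu_prev, Sigma_prev, (tx, ty)); lam is a hypothetical weight.
    """
    costs = np.stack([
        proximity_cost(P, mu, S) + lam * motion_cost(u_local, v_local, t[0], t[1])
        for mu, S, t in mice
    ], axis=1)
    return np.argmin(costs, axis=1)
```

The motion term lets the estimate break ties that proximity alone cannot resolve. For example, a pixel midway between two mice is claimed by the mouse whose motion matches the local flow.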

Experimental Results

We report initial success of our algorithm in tracking three agouti mice in a cage.


Experimental Results

Viewed in another way:

Conclusions

[Diagram: Video → Simple Tracker → Mouse Positions → Detect Occlusions → Occlusion Starts & Ends → Occlusion Reasoning → Mouse Positions]

We presented three modules to track identical, non-rigid, featureless objects through severe occlusion.

The novel module is the occlusion reasoning module.

Conclusions

[Figure: Frame 1, Frame 2, Frame 3, Frame 4, …, Frame n, linked by per-frame transformations a_{1:2}, a_{2:3}, a_{3:4}, …]

While the occlusion tracker operates sequentially, it incorporates a hint of the future locations of the mice.

This is a step in the direction of an algorithm that reasons forward and backward in time.

Future Work

More robust depth estimation.

More robust separated mouse tracking (e.g., BraMBLe).

Different affine interpolation schemes.

References

[1] D. Comaniciu, V. Ramesh, and P. Meer. Kernel-based object tracking. In Pattern Analysis and Machine Intelligence, volume 25(5), 2003.

[2] J. Gårding. Shape from surface markings. PhD thesis, Royal Institute of Technology, Stockholm, 1991.

[3] T. Hastie, R. Tibshirani, and J. Friedman. The Elements of Statistical Learning. Springer Series in Statistics. Springer Verlag, Basel, 2001.

[4] M. Irani and P. Anandan. All about direct methods. In Vision Algorithms: Theory and Practice. Springer-Verlag, 1999.

[5] M. Isard and J. MacCormick. BraMBLe: A Bayesian multiple-blob tracker. In ICCV, 2001.

[6] B. Lucas and T. Kanade. An iterative image registration technique with an application to stereo vision. In DARPA Image Understanding Workshop, 1984.

[7] J. MacCormick and A. Blake. A probabilistic exclusion principle for tracking multiple objects. IJCV, 39(1):57–71, 2000.

[8] Measuring Behavior: Intl. Conference on Methods and Techniques in Behavioral Research, 1996–2002.

[9] S. Niyogi. Detecting kinetic occlusion. In ICCV, pages 1044–1049, 1995.

[10] J. Shi and C. Tomasi. Good features to track. In CVPR, Seattle, June 1994.

[11] H. Tao, H. Sawhney, and R. Kumar. A sampling algorithm for tracking multiple objects. In Workshop on Vision Algorithms, pages 53–68, 1999.

[12] C. Twining, C. Taylor, and P. Courtney. Robust tracking and posture description for laboratory rodents using active shape models. In Behavior

Research Methods, Instruments and Computers, Measuring Behavior Special