Simulated annealing for convex optimization

Adam Kalai: TTI-Chicago

Santosh Vempala: MIT

Bar Ilan University

2004

1

Advertisement

100-million dollar endowment

(thanks, Toyoda!)

12 tenure-track slots, 18 visitors

On University of Chicago campus

Optional teaching

Advising graduate students

2/28

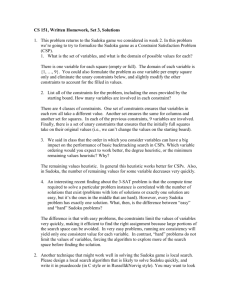

Outline

Simulated annealing

A method for blind search: $f: X \to \mathbb{R}$, $\min_{x \in X} f(x)$

Neighbor structure $N(x) \subseteq X$

Useful in practice

Difficult to analyze

Convex optimization

A generalization of linear programming

Minimize a linear function over a convex set $S \subset \mathbb{R}^n$

Example: $\min 2x_1 + 5x_2 - 11x_3$ with $x_1^2 + 5x_2^2 + 3x_3^2 \le 1$

Set $S$ specified by membership oracle $M: \mathbb{R}^n \to \{0,1\}$

$M(x) = 1 \Leftrightarrow x \in S$

In high dimensions

Difficult, cannot use most linear programming techniques

[GLS81,BV02]

Simulated annealing gives the best known run-time guarantees for this problem.

It is optimal among a class of random search techniques.
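The membership-oracle setting from the outline can be made concrete with the slide's own example. The following is a minimal sketch, not the authors' code: the names `ellipsoid_oracle`, `objective`, and `CountingOracle` are hypothetical, and the query counter is only there to illustrate that run-time in this model is measured in oracle calls.

```python
from typing import Callable, Sequence

Point = Sequence[float]


def ellipsoid_oracle(x: Point) -> int:
    """Membership oracle M for S = {x : x1^2 + 5*x2^2 + 3*x3^2 <= 1}."""
    x1, x2, x3 = x
    return 1 if x1 * x1 + 5 * x2 * x2 + 3 * x3 * x3 <= 1.0 else 0


def objective(x: Point) -> float:
    """Linear objective c . x with c = (2, 5, -11), as in the example."""
    c = (2.0, 5.0, -11.0)
    return sum(ci * xi for ci, xi in zip(c, x))


class CountingOracle:
    """Wraps an oracle and counts how many membership queries were made."""

    def __init__(self, oracle: Callable[[Point], int]) -> None:
        self.oracle = oracle
        self.queries = 0

    def __call__(self, x: Point) -> int:
        self.queries += 1
        return self.oracle(x)
```

An optimizer in this model only ever sees `S` through calls such as `M((0.0, 0.0, 0.5))`; it has no access to the inequality that defines the set.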

3/28

Steepest descent

4/28

Random Search

5/28

Simulated Annealing [KGV83]

Phase 1: Hot (Random)

Phase 2: Warm (Bias down)

Phase 3: Cold (Descend)

6/28

Simulated Annealing

$f: X \to \mathbb{R}$, $\min_{x \in X} f(x)$

Proceed in phases $i = 0, 1, 2, \ldots, m$

Temperature $T_i = T_0 (1 - \epsilon)^i$ (geometric temperature schedule)

In phase $i$, do a random walk with stationary distribution $\pi_i$:

$\pi_i(x) \propto e^{-f(x)/T_i}$ (Boltzmann distribution)

$i = 0$: near uniform $\to$ $i = m$: near optima

Metropolis filter for stationary dist. $\pi$:

From $x$, pick random neighbor $y$.

If $\pi(y) > \pi(x)$, move to $y$.

If $\pi(y) \le \pi(x)$, move to $y$ with prob. $\pi(y)/\pi(x)$.
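The phases and the Metropolis filter on this slide can be sketched in a few lines. This is an illustrative sketch only: the decay parameter `eps`, the fixed `steps_per_phase`, the best-so-far tracking, and the toy problem (`toy_objective`, `cycle_neighbors`) are assumptions of the example, not part of the talk. The filter uses the ratio $\pi(y)/\pi(x) = e^{-(f(y)-f(x))/T}$, and picking a uniformly random neighbor gives the Boltzmann stationary distribution only when the neighbor structure is symmetric with equal degrees, as on the cycle used here.

```python
import math
import random
from typing import Callable, Sequence, TypeVar

State = TypeVar("State")


def simulated_annealing(
    f: Callable[[State], float],
    neighbors: Callable[[State], Sequence[State]],
    x0: State,
    t0: float,
    eps: float,
    phases: int,
    steps_per_phase: int,
    rng: random.Random,
) -> State:
    """Run phases i = 0..phases with temperature T_i = t0 * (1 - eps)**i."""
    x, best = x0, x0
    for i in range(phases + 1):
        temp = t0 * (1.0 - eps) ** i
        for _ in range(steps_per_phase):
            y = rng.choice(neighbors(x))
            delta = f(y) - f(x)
            # Metropolis filter: always accept downhill moves, accept uphill
            # moves with probability pi(y)/pi(x) = exp(-delta / temp).
            if delta <= 0 or rng.random() < math.exp(-delta / temp):
                x = y
                if f(x) < f(best):
                    best = x
    return best


def toy_objective(x: int) -> float:
    """Hypothetical objective on the integers 0..20, minimized at x = 7."""
    return float((x - 7) ** 2)


def cycle_neighbors(x: int) -> Sequence[int]:
    """Neighbor structure N(x): the two adjacent points on a 21-cycle."""
    return [(x - 1) % 21, (x + 1) % 21]
```

A call such as `simulated_annealing(toy_objective, cycle_neighbors, 18, 50.0, 0.2, 30, 200, random.Random(0))` starts hot (nearly a plain random walk) and ends cold (nearly pure descent).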

7/28

Simulated Annealing

Great blind search technique

Works well in practice

Little theory

Exponential time

Planted graph bisection [JS93]

Fractal functions [S91]

8/28

Convex optimization

minimize $f(x) = c \cdot x$ = height

$x \in S$ = hill

Find the bottom of the hill

using few pokes

(membership queries)

9/28

Convex optimization

minimize $f(x) = c \cdot x$ = height

$x \in S \subset \mathbb{R}^n$ = hill

Find the bottom of the hill

using few pokes

(membership queries)

• Ellipsoid method: $O^*(n^{10})$ queries

• Random walks [BV02]: $O^*(n^5)$ queries

$n$ = number of dimensions

10/28

Walking in a convex set

Metropolis filter for stationary dist. $\pi$:

From $x$, pick random neighbor $y$.

If $\pi(y) > \pi(x)$, move to $y$.

If $\pi(y) \le \pi(x)$, move to $y$ with prob. $\pi(y)/\pi(x)$.

11/28

Walking in a high-dimensional convex set

12/28

Hit and run

To sample with stationary dist. $\pi$:

Pick a random direction through the point

$C = S \cap$ line in that direction

Take a random point from $\pi|_C$

(Figure: chord $C$ inside convex set $S$)
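One hit-and-run step can be sketched using only a membership oracle. This is a minimal sketch for the special case where $\pi$ is uniform on $S$; the function names, the bisection used to locate the ends of the chord, and the `radius` parameter (an assumed upper bound on the diameter of $S$) are all choices made for this example and are not taken from the talk.

```python
import math
import random
from typing import Callable, List

Oracle = Callable[[List[float]], bool]


def _move(x: List[float], d: List[float], t: float) -> List[float]:
    """Return the point x + t * d."""
    return [xi + t * di for xi, di in zip(x, d)]


def random_direction(n: int, rng: random.Random) -> List[float]:
    """Uniform random unit vector, obtained by normalizing a Gaussian vector."""
    d = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(v * v for v in d))
    return [v / norm for v in d]


def chord_end(inside: Oracle, x: List[float], d: List[float],
              radius: float, tol: float = 1e-9) -> float:
    """Approximate the largest t in [0, radius] with x + t*d in S.

    Bisection is valid because S is convex and x is assumed to lie in S.
    """
    lo, hi = 0.0, radius
    if inside(_move(x, d, hi)):
        return hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if inside(_move(x, d, mid)):
            lo = mid
        else:
            hi = mid
    return lo


def hit_and_run_step(inside: Oracle, x: List[float], radius: float,
                     rng: random.Random) -> List[float]:
    """One step: random direction, chord C through x, uniform point on C."""
    d = random_direction(len(x), rng)
    t_plus = chord_end(inside, x, d, radius)
    t_minus = chord_end(inside, x, [-v for v in d], radius)
    t = rng.uniform(-t_minus, t_plus)
    return _move(x, d, t)
```

For a non-uniform $\pi$, such as a Boltzmann distribution, the last line of the step would instead draw from $\pi$ restricted to the chord; that one-dimensional sampling is omitted here.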

13/28

Hit and run

Start from a point $x$, random from dist. $\pi$

After $O^*(n^3)$ steps, you have a new random point, “almost independent” from $x$ [LV03]

Difficult analysis

14/28

Random walks for optimization [BV02]

Each phase, volume decreases by $\approx 2/3$

In $n$ dimensions, $O(n)$ phases to halve distance to opt.
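A rough way to see where the $O(n)$ comes from, under two assumptions that the slide does not spell out: each phase leaves about a $2/3$ fraction of the previous volume, and halving the distance to the optimum corresponds to shrinking the volume by a factor of about $2^{-n}$ (scaling a convex body by $1/2$ toward the optimum in $n$ dimensions). The number of phases $k$ then satisfies:

```latex
\left(\tfrac{2}{3}\right)^{k} \le 2^{-n}
\quad\Longleftrightarrow\quad
k \ge \frac{n \ln 2}{\ln(3/2)} \approx 1.71\, n
```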

15/28

Annealing is slightly faster

$\min_{x \in S} c \cdot x$

Use distributions: $\pi_i(x) \propto e^{-c \cdot x / T_i}$ (Boltzmann distribution)

Geometric temperature schedule

After $O(\sqrt{n})$ phases, halve distance to opt.

That’s compared to O(n) phases [BV02]

16/28

Annealing Optimality

Assumptions:

Sequence of distributions $\pi_1, \pi_2, \ldots$

Each density $d_i$ is log-concave

Consecutive densities $d_i$, $d_{i+1}$ overlap

Requires at least $\Omega^*(\sqrt{n})$ phases

Simulated Annealing does it in $O^*(\sqrt{n})$ phases

17/28

Lower bound idea

mean $m_i = E_i[c \cdot x]$

variance $\sigma_i^2 = E_i[(c \cdot x - m_i)^2]$

Overlap lemma: $m_i - m_{i+1} \le (\sigma_i + \sigma_{i+1}) \ln(2P)$

follows from log-concavity of $\pi_i$

log-concave $\Rightarrow$ $P(t \text{ std dev's from mean}) < e^{-t}$

In worst case, e.g. cone, small std dev: $\sigma_i \le (m_i - \min c \cdot x)/\sqrt{n}$

18/28

Worst case: a cone

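$\min_{x \in S} x_0$ (a linear program)

$S = \{ x \in \mathbb{R}^n \mid -x_0 \le x_1, x_2, \ldots, x_{n-1} \le x_0 \le 10 \}$

Uniform dist. on $S|_{x_0 < \epsilon}$: mean $\approx \epsilon - \epsilon/n$, std dev $\approx \epsilon/n$

Boltzmann dist. $e^{-x_0/\lambda}$: mean $\approx n\lambda$, std dev $\approx \sqrt{n}\,\lambda$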
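The two mean and standard deviation estimates for the cone can be checked numerically. In the cone, the cross-section at height $x_0$ is an $(n-1)$-dimensional cube of side $2x_0$, so the marginal density of $x_0$ is proportional to $x_0^{n-1}$ under the uniform distribution and to $x_0^{n-1} e^{-x_0/\lambda}$ under the Boltzmann distribution (a Gamma distribution with shape $n$ and scale $\lambda$). The sketch below samples only this marginal. The symbols $\epsilon$ (cutoff on $x_0$) and $\lambda$ (Boltzmann scale) and all function names are assumptions of this example, and the cap $x_0 \le 10$ is ignored for the Boltzmann case, which is reasonable only when $n\lambda$ is far below 10.

```python
import math
import random
import statistics
from typing import Tuple


def sample_uniform_height(n: int, eps: float, rng: random.Random) -> float:
    """x0 of a uniform point in the cone cut at x0 < eps (density ~ x0**(n-1))."""
    # Inverse-CDF sampling: the CDF is (x0 / eps)**n on [0, eps].
    return eps * rng.random() ** (1.0 / n)


def sample_boltzmann_height(n: int, lam: float, rng: random.Random) -> float:
    """x0 under density ~ x0**(n-1) * exp(-x0 / lam): Gamma(shape=n, scale=lam)."""
    return rng.gammavariate(n, lam)


def cone_statistics(n: int, eps: float, lam: float, trials: int,
                    seed: int) -> Tuple[float, float, float, float]:
    """Return (uniform mean, uniform std, Boltzmann mean, Boltzmann std) of x0."""
    rng = random.Random(seed)
    uniform = [sample_uniform_height(n, eps, rng) for _ in range(trials)]
    boltzmann = [sample_boltzmann_height(n, lam, rng) for _ in range(trials)]
    return (
        statistics.fmean(uniform),
        statistics.pstdev(uniform),
        statistics.fmean(boltzmann),
        statistics.pstdev(boltzmann),
    )
```

The point of the comparison is the ratio of standard deviation to distance from the optimum: roughly $1/n$ for the truncated uniform distribution versus roughly $1/\sqrt{n}$ for the Boltzmann distribution, so consecutive Boltzmann distributions can be spaced further apart while still overlapping.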

19/28

20/28

Any convex shape

Fix convex set S and direction c.

Fix mean $m = E[c \cdot x]$

$d(x) = f(c \cdot x)$, log-concave

Conjecture:

The log-concave distribution over $S$ with largest variance $\sigma_i^2 = E_i[(c \cdot x - m_i)^2]$ is a Boltzmann dist. (exponential dist.)

21/28

Upper bound basics

Dist. $\pi_i \propto e^{-c \cdot x / T_i}$

Lemma: $E_i[c \cdot x] \le (\min_{x \in S} c \cdot x) + n|c|T_i$
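The lemma bounds the expected gap to the optimum by $n|c|T_i$, so the gap bound shrinks in step with the temperature. The sketch below counts phases under an assumed geometric schedule $T_i = T_0(1 - 1/\sqrt{n})^i$; the decay rate $1/\sqrt{n}$ is an assumption made for this example (the slides state only that the schedule is geometric), and the function names are hypothetical. Both functions require $n \ge 2$.

```python
import math


def phases_to_halve(n: int) -> int:
    """Phases needed to halve T (and the lemma's gap bound), assuming
    T_i = T_0 * (1 - 1/sqrt(n))**i. Requires n >= 2."""
    rate = 1.0 - 1.0 / math.sqrt(n)
    return math.ceil(math.log(0.5) / math.log(rate))


def phases_needed(n: int, c_norm: float, t0: float, target_gap: float) -> int:
    """Smallest i with n * |c| * T_i <= target_gap under the same schedule."""
    rate = 1.0 - 1.0 / math.sqrt(n)
    start = n * c_norm * t0
    if start <= target_gap:
        return 0
    return math.ceil(math.log(target_gap / start) / math.log(rate))
```

Under this assumed schedule, `phases_to_halve(n)` grows like $\sqrt{n} \ln 2$, which matches the $\sqrt{n}$ scaling of the phase count.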

22/28

Upper bound difficulties

Not sufficient that distributions overlap

An expected warm start:

23/28

Shape estimation

Estimate covariance with O*(n) samples

Similar issues with hit and run
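The shape estimate is a covariance matrix computed from samples. The following sketch computes the plain sample mean and sample covariance from a list of points. It is a generic estimator, not the procedure from the talk; the name `estimate_covariance` is hypothetical, and how the samples are produced (for example by hit and run) is left outside the sketch.

```python
from typing import List, Sequence, Tuple


def estimate_covariance(
    samples: Sequence[Sequence[float]],
) -> Tuple[List[float], List[List[float]]]:
    """Return (mean, covariance) of the samples, using the unbiased 1/(m-1) form.

    Requires at least two samples, all of the same dimension.
    """
    m = len(samples)
    n = len(samples[0])
    mean = [sum(s[j] for s in samples) / m for j in range(n)]
    cov = [[0.0] * n for _ in range(n)]
    for s in samples:
        dev = [s[j] - mean[j] for j in range(n)]
        # Accumulate the outer product of the deviation with itself.
        for a in range(n):
            for b in range(n):
                cov[a][b] += dev[a] * dev[b]
    return mean, [[v / (m - 1) for v in row] for row in cov]
```

A walk can then take steps in coordinates rescaled by this matrix, so that a long thin set looks roughly round to the walk.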

24/28

Shape re-estimation

Shape estimate is covariance matrix (normalized)

OK as long as relative estimates are accurate within

a constant factor

In most cases shape changes little

No need for re-estimation

Cube, ball, cone, …

In worst case, shape may change every phase

Increase run-time by factor of n

Differs from simulated annealing

25/28

Run-time guarantees

Annealing: $O^*(n^{0.5})$ phases

State-of-the-art walks [LV03]

Worst case: $O^*(n)$ samples per phase (for shape)

$O^*(n^3)$ steps per sample

Total: $O^*(n^{4.5})$

(compare to $O^*(n^{10})$ [GLS81], $O^*(n^5)$ [BV02])
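The total is the product of the three factors on this slide (phases, samples per phase, steps per sample):

```latex
O^*(n^{0.5}) \cdot O^*(n) \cdot O^*(n^{3}) = O^*(n^{4.5})
```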

26/28

Conclusions

Random search is useful for convex

optimization [BV02]

Simulated annealing can be analyzed for

convex optimization [KV04]

It’s opt among random search procedures

Annoying shape re-estimation

Difficult analyses of random walks [LV02]

Weird: no local minima!

Analyzed for other problems?

27/28

Reverse annealing [LV03]

Start near single point v

Idea

Sample from density $\propto e^{-|x-v|/T_i}$ in phase $i$

Temperature increases

Move from single point to uniform dist

Estimate volume increase each time

Able to do in $O^*(n^4)$ rather than $O(n^{4.5})$

Similar algorithm analysis

28/28