Codes


Probabilistic verification

Mario Szegedy, Rutgers

www/cs.rutgers.edu/~szegedy/07540

Lecture 4

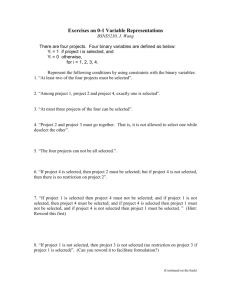

Codes I (outline)

Madhu’s notes:

http://people.csail.mit.edu/madhu/coding/ibm/lect1x4.pdf

• It all started with Shannon: binary symmetric (noisy) channels

• Malicious (rather than random) errors

• Error correcting codes and their parameters

Papadimitriou’s notes:

http://www.cs.berkeley.edu/~pbg/cs270/notes/lec13.pdf

• Reed-Solomon (RS) codes

• Berlekamp-Welch (BW) decoding algorithm for RS codes

Madhu’s notes:

http://people.csail.mit.edu/madhu/FT04/scribe/lect07.ps

• A generalization of the BW decoding algorithm by Sudan

My notes:

• Generalized Reed-Solomon codes and their parameters

• Self-correcting properties of the generalized RS codes (local decodability)

Claude Shannon (1916 – 2001)

In 1948 Shannon published the article "A Mathematical Theory of Communication" in two parts, in the July and October issues of the Bell System Technical Journal.

This work focuses on the problem of how best to encode the information a sender wants to transmit. In this fundamental work he used tools from probability theory, developed by Norbert Wiener, which were in their nascent stages of being applied to communication theory at that time.

Shannon developed information entropy as a measure of the uncertainty in a message, essentially inventing the field of information theory.

Some channel models

Input X → channel with transition probabilities P(y|x) → output Y

memoryless:

- output at time i depends only on input at time i

- input and output alphabet finite

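Example: binary symmetric channel (BSC)

[Figure: the error source emits E, which is added to the input X to give the output Y = X ⊕ E. Each input bit (0 or 1) is received unchanged with probability 1-p and flipped with probability p.]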

E is the binary error sequence s.t. P(1) = 1-P(0) = p

X is the binary information sequence

Y is the binary output sequence
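The BSC definition above can be simulated in a few lines. This is a minimal sketch, not part of the original slides: the function name `bsc`, the seed, and the sequence length are illustrative assumptions.

```python
import random

def bsc(bits, p, rng):
    """Pass `bits` through a binary symmetric channel with flip probability p.

    Returns (output, errors), where output[i] = bits[i] XOR errors[i].
    """
    # E: each error bit is 1 with probability p, independently (memoryless).
    errors = [1 if rng.random() < p else 0 for _ in bits]
    output = [x ^ e for x, e in zip(bits, errors)]
    return output, errors

rng = random.Random(0)                            # fixed seed, arbitrary choice
x = [rng.randint(0, 1) for _ in range(100_000)]   # information sequence X
y, e = bsc(x, 0.1, rng)                           # output Y and error sequence E
flip_rate = sum(e) / len(e)                       # empirical estimate of p
print(f"empirical flip rate: {flip_rate:.4f}")
```

For long sequences the empirical flip rate should be close to p, which is the behaviour the later counting arguments rely on.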

Other models

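[Figure: three further channel models with input X and output Y. For the Z-channel and the erasure channel the input distribution is P(X=0) = P0.]

Z-channel (optical): the outputs are 0 (light on) and 1 (light off). One input is always received correctly; the other is received correctly with probability 1-p and flipped with probability p.

Erasure channel (MAC): each input is received correctly with probability 1-e and replaced by the erasure symbol E with probability e.

Erasure with errors: each input is received correctly with probability 1-p-e, erased (E) with probability e, and flipped with probability p.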

General noisy channel is an arbitrary bipartite graph

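[Figure: a bipartite graph with the input symbols on one side and the output symbols on the other; sample edge labels are p11, p22, p35.]

pij is the probability that, upon sending i, the receiver receives j. (pij) is a stochastic matrix: every row is non-negative and sums to 1.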
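A channel given by a matrix (pij) can be written down directly as a list of rows. The sketch below is illustrative only: the helper names `is_stochastic` and `send`, the output ordering (0, E, 1), and the values of p and e are assumptions, not taken from the slides.

```python
import random

def is_stochastic(P, tol=1e-9):
    """True if every row of P is non-negative and sums to 1 (up to tol)."""
    return all(all(x >= 0 for x in row) and abs(sum(row) - 1.0) <= tol
               for row in P)

def send(i, P, rng):
    """Send input symbol i; return a random output index j drawn from row i."""
    return rng.choices(range(len(P[i])), weights=P[i])[0]

# Erasure channel with errors; output columns are ordered 0, E, 1 (assumed).
p, e = 0.1, 0.2
erasure_with_errors = [
    [1 - p - e, e, p],   # input 0
    [p, e, 1 - p - e],   # input 1
]

rng = random.Random(1)                       # fixed seed, arbitrary choice
received = send(0, erasure_with_errors, rng)
print(is_stochastic(erasure_with_errors), received)
```

The BSC, the Z-channel, and the erasure channel are all special cases obtained by choosing the rows appropriately.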

Encoding: original message + redundancy → encoded message

Decoding: received message → (decoding) → original message

Rate of encoding

For an original message m with encoded message E(m), the rate is

R = |m| / |E(m)|

For a fixed channel, is there a fixed rate that allows almost certain recovery as |m| → infinity?
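To make the rate R = |m| / |E(m)| concrete, here is a small sketch using a 3-fold repetition code with majority decoding. The repetition code is an illustrative choice that does not appear in the slides, and the function names are hypothetical.

```python
def encode_repetition(bits, r=3):
    """Repeat every message bit r times."""
    return [b for b in bits for _ in range(r)]

def decode_repetition(received, r=3):
    """Majority vote inside each block of r received bits."""
    return [int(sum(received[i:i + r]) > r // 2)
            for i in range(0, len(received), r)]

m = [1, 0, 1, 1]                 # original message
c = encode_repetition(m)         # encoded message E(m)
rate = len(m) / len(c)           # R = |m| / |E(m)|

corrupted = c[:]
corrupted[0] ^= 1                # one flipped bit in the first block
corrupted[7] ^= 1                # one flipped bit in the third block
decoded = decode_repetition(corrupted)
print(rate, decoded == m)
```

This code has a fixed rate of 1/3, but on a BSC each message bit is still decoded wrongly with a fixed positive probability, so long messages are almost never recovered in full. That is why the question of a fixed rate with almost certain recovery is not trivial.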

Madhu’s notes:

http://people.csail.mit.edu/madhu/coding/ibm/lect1x4.pdf

THEOREM:

For the binary symmetric channel with error probability p we can find a code with rate

$1 - H(p)$, where

$H(p) = -p \log_2 p - (1-p) \log_2 (1-p)$ (entropy function)
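The entropy function and the resulting rate 1 - H(p) are easy to tabulate. This is a minimal sketch; the function names `binary_entropy` and `bsc_rate` and the sample values of p are assumptions made for illustration.

```python
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1-p) log2 (1-p), with H(0) = H(1) = 0 by convention."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_rate(p):
    """The rate 1 - H(p) from the theorem for a BSC with error probability p."""
    return 1.0 - binary_entropy(p)

for p in (0.0, 0.01, 0.11, 0.5):
    print(f"p = {p:<5} H(p) = {binary_entropy(p):.4f}  1 - H(p) = {bsc_rate(p):.4f}")
```

Note that H is non-negative and symmetric around p = 1/2, where it reaches its maximum of 1 and the achievable rate drops to 0.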

DEFINITIONS:

Hamming distance of two binary strings is Δ(x,y)

Hamming ball with radius r around binary string x is B(x,r)

BASIC:

For r = pn (with p ≤ 1/2) we have $|B(x,r)| \approx 2^{H(p)n}$
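The estimate for the Hamming ball size can be checked numerically by counting exactly. The sketch below assumes p ≤ 1/2; the values n = 1000 and p = 0.1 and the helper names are illustrative choices.

```python
import math

def binary_entropy(p):
    """Binary entropy in bits, with H(0) = H(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def hamming_ball_size(n, r):
    """Exact number of binary strings of length n within distance r of a fixed x."""
    return sum(math.comb(n, i) for i in range(r + 1))

n, p = 1000, 0.1
r = round(p * n)
exponent_per_bit = math.log2(hamming_ball_size(n, r)) / n   # (1/n) log2 |B(x,r)|
print(f"(1/n) log2 |B| = {exponent_per_bit:.4f}, H(p) = {binary_entropy(p):.4f}")
```

The two printed numbers agree up to a correction that vanishes as n grows, which is the sense in which the approximation holds.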

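ENCODING: (R = 1-H(p))

E: $\{0,1\}^{Rn} \to \{0,1\}^n$, random

[Figure: the Hamming ball B(x,r) of radius r around x, and the ball of radius pn around the received word y containing the codeword E(m’).]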

DECODING:

Given y = E(m) + error, find the unique m’ such that Δ(y,E(m’)) ≤ pn, if such m’ exists; otherwise decode arbitrarily.

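ANALYSIS:

$\Pr[\text{decoding error}] \le \Pr[\Delta(y,E(m)) > pn] + \Pr[\text{another codeword lies in } B(y,pn)] \le \text{small} + 2^{H(p)n} \cdot 2^{Rn} \cdot 2^{-n}$

The second term uses the following fact: if A is a random subset of X and B is a fixed subset of X, then $\Pr[A \cap B \neq \emptyset] \le |A||B|/|X|$. Here A is the set of codewords, B = B(y,pn), and X = $\{0,1\}^n$.

[Figure: two sets A and B inside X.]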
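To see when the term $2^{H(p)n} 2^{Rn} 2^{-n}$ of the analysis is small, it helps to combine the exponents. The slack $\varepsilon > 0$ below is a symbol introduced here for illustration; it does not appear in the slides.

```latex
2^{H(p)n} \cdot 2^{Rn} \cdot 2^{-n} = 2^{(H(p) + R - 1)n},
\qquad\text{so for } R = 1 - H(p) - \varepsilon:\quad
2^{(H(p) + R - 1)n} = 2^{-\varepsilon n} \longrightarrow 0 \quad (n \to \infty).
```

At exactly R = 1 - H(p) the expression equals 1, so the bound is only useful for rates slightly below 1 - H(p).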

No better rate is possible

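Transmit random messages. The decoding error is large for any code with rate > 1-H(p).

PROOF:

The decoding procedure D partitions $\{0,1\}^n$ into $2^{Rn}$ parts, one for each message m (the strings that decode to m). An average part has size $2^n / 2^{Rn}$, whereas the distribution of E(m) + error is spread over about $2^{H(p)n}$ strings. Reliable decoding therefore needs $2^n / 2^{Rn} \ge 2^{H(p)n}$, that is, $R \le 1-H(p)$.

[Figure: $\{0,1\}^n$ partitioned into decoding regions; the region that decodes to m is compared with the distribution of E(m) + error.]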

$C = (n,k,d)_q$ codes

• n = Block length

• k = Information length

• d = Distance

• k/n = Information rate

• d/n = Distance rate

• q = Alphabet size

Linear Codes

• C = {x | x ∈ L} (L is a linear subspace of $F_q^n$)

• Δ(x,y) = Δ(x-z,y-z)

• $\min_{x \in L,\ x \neq 0} \Delta(0,x) = \min_{x,y \in L,\ x \neq y} \Delta(x,y)$

• k = dimension of the message space = dim L

• n = dimension of the whole space (in which the code words lie)

• Generator matrix: {xG | x ∈ $\Sigma^k$}

• “Parity” check matrix: {y ∈ $\Sigma^n$ | yH = 0}
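The properties listed above can be verified by brute force on a small code. The sketch below uses the binary [7,4,3] Hamming code purely as an illustrative example; that choice, the matrices G and H, and the helper names are assumptions and do not come from the slides.

```python
from itertools import product

# Illustrative example (assumed): binary [7,4,3] Hamming code in systematic form.
P = [[1, 1, 0], [1, 0, 1], [0, 1, 1], [1, 1, 1]]
G = [[int(i == j) for j in range(4)] + P[i] for i in range(4)]   # 4 x 7, G = [I | P]
H = P + [[int(i == j) for j in range(3)] for i in range(3)]      # 7 x 3, H = [P ; I]

def vec_mat(x, M, q=2):
    """Row vector x times matrix M, with arithmetic mod q (q prime)."""
    return tuple(sum(xi * row[j] for xi, row in zip(x, M)) % q
                 for j in range(len(M[0])))

def distance(x, y):
    """Hamming distance between two equal-length tuples."""
    return sum(a != b for a, b in zip(x, y))

# The code as the image of the generator matrix: {xG | x in {0,1}^4}.
codewords = [vec_mat(x, G) for x in product(range(2), repeat=4)]

# The code as the kernel of the parity check matrix: {y | yH = 0}.
kernel = [y for y in product(range(2), repeat=7) if vec_mat(y, H) == (0, 0, 0)]

zero = (0,) * 7
min_weight = min(distance(zero, c) for c in codewords if c != zero)
min_pairwise = min(distance(a, b) for a in codewords for b in codewords if a != b)
print(min_weight, min_pairwise, sorted(kernel) == sorted(codewords))
```

Both descriptions give the same 16 codewords, and the minimum weight of a nonzero codeword equals the minimum pairwise distance, as the translation invariance Δ(x,y) = Δ(x-z,y-z) predicts.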

Reed-Solomon codes

• The Reed–Solomon code is an error-correcting code that works by oversampling a polynomial constructed from the data.

• C is an [n, k, n-k+1] code; in other words, it is a linear code of length n (over F) with dimension k and minimum distance n-k+1.
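"Oversampling a polynomial" can be sketched as follows: treat the k message symbols as the coefficients of a polynomial of degree below k and evaluate it at n distinct field elements. This sketch assumes a prime field F_p with p = 7, n = 7, and k = 3; the function name `rs_encode` and these parameters are illustrative, not taken from the slides.

```python
from itertools import product

def rs_encode(message, points, p):
    """Evaluate the polynomial with coefficient list `message` at each point, mod p."""
    return [sum(c * pow(a, i, p) for i, c in enumerate(message)) % p
            for a in points]

p = 7                        # field size (assumed prime, so arithmetic mod p is a field)
k = 3                        # message length = number of coefficients
points = list(range(p))      # n = 7 distinct evaluation points
n = len(points)

codeword = rs_encode([1, 2, 3], points, p)   # samples of 1 + 2x + 3x^2 over F_7

# The code is linear, so its minimum distance is the minimum weight
# over all nonzero messages; brute force over the 7^3 - 1 candidates.
min_weight = min(sum(v != 0 for v in rs_encode(m, points, p))
                 for m in product(range(p), repeat=k) if any(m))
print(codeword, min_weight, n - k + 1)
```

The brute-force minimum weight matches n-k+1 because a nonzero polynomial of degree below k has at most k-1 roots, so every nonzero codeword has at least n-k+1 nonzero entries.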