Design Review of MPS Japan FID 109182

advertisement

Optimizing Pattern

Recognition Systems

Professor Mike Manry

University of Texas at Arlington

Outline

Introduction

Feature Selection

Complexity Minimization

Recent Classification Technologies

Neural Nets

Support Vector Machines

Boosting

A Maximal Margin Classifier

Growing and Pruning

Software

Examples

Conclusions

Intro -- Problems In Pattern Recognition Systems

Pattern Recognition Application

Raw

Images

Poor selection

of Training

Images

Segment

Poor

Algorithms

chosen

Feature

Extraction

Poor

highly

redundant

feature

vectors

Classify

Z

Inefficient

improperly

sized

classifier

Intro -- Problems In Pattern Recognition Systems

Good

Raw

Images

Feature

Extraction

Segment

Classify

Poor

Results

Problems: We usually don’t know which block(s) are bad

Leftmost blocks may be more expensive and difficult to change

Solution: Using the system illustrated on the next slide, we can quickly

optimize the rightmost blocks and narrow the list of potential

problems.

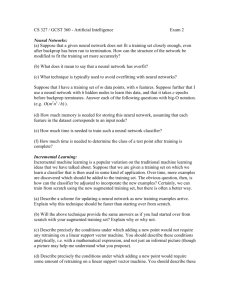

Intro -- IPNNL Optimization System

( www-ee.uta.edu/eeweb/ip/ )

Pattern Recognition Application

Segment

Raw

Images

Classify

Feature

Extraction

Training

Data

{ z,ic }

Feature

Selection

Interpret

x

Final

Subset

x

Final

Classifier

x

Predict Size

and

Performance

Dim(z) = N’

Dim(x) = N

N << N’

Classifier

Choices

IPNNL Optimization System

Prune

and

Validate

Intro -- Presentation Goals

Examine blocks in the Optimization System:

• Feature Selection

• Recent Classification Technologies

• Growing and Pruning of Neural Net Classifiers

Demonstrate the Optimization System on:

• Data from Bell Helicopter Textron

• Data from UTA Bioengineering

• Data from UTA Civil Engineering

Example System

Text Reader

yp1 =.01

yp2 =.01

yp3 =.01

yp4 = .91

Segment

yp5 =.01

Feature

Extraction

Classifier

yp6 =.01

yp7 =.01

w2

2D DFT

yp8 =.01

w1

yp9 =.01

yp10 =.01

Feature Selection

- Combinatorial Explosion

Number of size-N subsets of N’ features is

NS =

N’

N

( )

• Scanning: Generate candidate subsets

• Subset Evaluation: Calculate subset goodness, and save the

best ones

z

Scanning

Method

Subset

Evaluation

Metric

Candidate x

Chosen

Subsets x

Feature Selection

Example of Combinatorial Explosion

Given data for a classification problem with

N’ = 90 candidate features,

• there are 9.344 x 1017 subsets of size N=17

• and a total of 290 = 1.2379 x 1027 subsets

Feature Selection

- Scanning Methods

Available Methods :

Brute Force (BF) Scanning: Examine every subset (See previous slide)

Branch and Bound (BB) [1]: Avoids examining subsets known to be poor.

Plus L minus R (L-R) [1]: Adds L good features and eliminates the R worst

features

Floating Search (FS) [2]: Faster than BB.

Feature Ordering (FO) [1]: Given the empty subset, repeatedly add the best

additional feature to the subset. Also called Forward Selection.

Ordering Based Upon Individual Feature Goodness (FG)

Let G measure the goodness of a subset scanning method, where larger G

means increased goodness. Then

G(FG) < G(FO) < G(L-R) < G(FS) < G(BB) = G(BF)

Feature Selection

Scanning Methods: Feature Goodness vs. Brute Force

• Suppose that available features are:

z1 = x + n, z2 = x + n, z3 = y + 2n, and z4 = n

where x and y are useful and n is noise.

• FG: Nested subsets {z1}, {z1, z2 }, {z1, z2 , z3 }

• BF: Optimal subsets {z2}, {z1, z4 }, {z1, z3 , z4 }

since x = z1 - z4 and y = z3 - 2z4

• An optimal subset may include features that the

FG approach concludes are useless.

Feature Selection

- Subset Evaluation Metrics (SEMs)

Let x be a feature subset, and let xk be a candidate feature

for possible inclusion into x.

Requirements for SEM f()

•f ( x U xk ) < f (x), ( U denotes union )

•f() related to classification success (Pe for example )

Example SEMs

• Brute Force Subset Evaluation: Design a good classifier

and measure Pe

• Scatter Matrices [1]

Feature Selection

A New Approach [3]

Scanning Approach : Floating Search

SEM

: Pe for piecewise linear classifier

FE1

FE2

Segmented

Data

FE3

FE4

FE5

Feature

Extraction

Large

Feature

Vector z

Feature

Selection

Small

Feature

Vector x

At the output, the absence of groups 1, 3, and 5 reveals problems with those groups

Feature Selection

Example 1

Classification of Numeral Images: N’ = 16 features and Nc = 10

(Note: Subsets not nested)

Chosen Subsets

Error % Versus N

Error %

{6, }

14

{6, 9, }

{6, 9, 14, }

12

{6, 9, 14, 13, }

{6, 9, 14, 13, 3, }

10

{6, 9, 14, 13, 3, 15, }

{6, 9, 14, 13, 11, 15, 16, }

8

{6, 9, 14, 13, 11, 15, 16, 3, }

6

{6, 9, 14, 13, 11, 15, 16, 3, 4, }

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, }

4

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, }

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, 7, }

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, 7, 8, } 2

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, 7, 8, 5, }

0

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, 7, 8, 5, 2, }

{6, 9, 14, 13, 11, 15, 16, 3, 4, 1, 12, 7, 8, 5, 2, 10, }

1

2

3

4

5

6

7

8

9

10

Subset Size N

11

12

13

14

15

16

Feature Selection

Example 2

Classification of Sleep Apnea Data (Mohammad Al-Abed and

Khosrow Behbehani): N’ = 90 features and Nc = 2

(Note: subsets not nested)

Error % Versus N

Chosen Subsets

30

Error %

{11, }

25

{11, 56, }

{11, 56, 61, }

20

{11, 28, 55, 63, }

{11, 28, 55, 63, 27, }

{11, 28, 55, 63, 62, 17, }

15

{11, 28, 55, 63, 53, 17, 20, }

{11, 28, 55, 63, 53, 17, 20, 62, }

10

{11, 28, 55, 63, 53, 17, 20, 4, 40, }

{11, 28, 55, 63, 53, 26, 20, 4, 40, 8, }

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, }

5

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, 85, }

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, 85, 8, }

0

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, 85, 8, 48, }

1

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, 85, 8, 48, 38, }

{11, 28, 22, 19, 53, 26, 20, 4, 40, 30, 80, 85, 8, 48, 45, 87, }

{11, 28, 13, 19, 53, 26, 65, 4, 40, 30, 80, 85, 8, 48, 75, 87, 18, }

4 7 10 13 16 19 22 25 28 31 34 37 40 43 46 49 52 55 58 61 64 67 70 73 76 79 82 85 88

Subset Size N

Complexity Minimization

Complexity: Defined as the number of free parameters or coefficients

in a processor

Complexity Minimization (CM) Procedure

• Minimize training set Pe for each classifier size, with respect to all

weights or coefficients. Nw is the number of weights or coefficients.

• Measure Pe for a validation data set.

• Choose that network that minimizes the validation set’s Pe

• CM leads to smaller, better classifiers, if it can be performed. It is

related to structural risk minimization (SRM) [4,5].

• Implication: To perform SRM, we need methods for quickly varying

network size during training (growing) or after training (pruning)

Complexity Minimization

E

validation

Nw

Vary Nw until validation error is minimized.

Recent Classification Technologies

Neural Nets

Minimize MSE E(w), where tpi = 1 for the correct class (i = ic )

and 0 for an incorrect class (i = id ) .

yi(x) is the ith output discriminant of the trained classifier.

Neural net support vector x satisfies: yic(x) – max{ yid (x)} = 1

The MSE between yi(x) and bi(x) = P(i|x) is

Theorem 1 [5,6]: As the number of training patterns Nv increases,

the training error E(w) approaches e(w)+C, where C is a constant.

Recent Classification Technologies

Neural Nets

Advantages

•

•

•

•

Neural net outputs approximate Bayes discriminant P(i | x)

Training modifies all network weights

CM easily performed via growing and pruning methods

Accommodates any size training data file

Problems

• E(w) and e(w) are not proportional to Pe, and can increase when

Pe decreases.

• From Theorem 1,

yi = bi + εi,

where εi is random zero-mean noise. Noise degrades performance,

leaving room for improvement via SVM training and boosting.

Recent Classification Technologies

Support Vector Machines [4,5]

SVM: A neural net structure with these properties:

• Output weights form hyperplane decision boundaries

• Input vectors xp satisfying yp(ic) = +b and max{ y (id) } = -b are

called support vectors

• Correctly classified input vectors xp outside the decision margins

do not adversely affect training

• Incorrectly classified xp do not strongly affect training

• In some SVMs, Nh (number of hidden units) initially equals Nv

(the number of training patterns)

• Training may involve quadratic programming

Recent Classification Technologies

Support Vector Machines

x2

b

SV

b

SV

SV

SV

x1

SVM discriminant

LMS discriminant

Correctly classified patterns distort the LMS discriminant

Recent Classification Technologies

Support Vector Machines

Advantage: Good classifiers from small data sets

Problems

•

•

•

•

SVM design methods are practical only for small data sets

Training difficult when there are many classes

Kernel parameter found by hit or miss

Fails to minimize complexity (far too many hidden

units required, input weights don’t adapt) [7]

Current Work

• Modelling SVMs with much smaller neural nets.

• Developing Regression-based maximal margin classifiers

Recent Classification Technologies

Boosting [5]

yik(x): ith class discriminant for the kth classifier. K is the

number of classifiers being fused.

In discriminant fusion, we calculate the ak so that the

weighted average discriminant,

has better performance than the yik(x)

Adaboost [5] sequentially picks a training subset {xp, tp}k, from the

available data and designs yik(x) and ak so they are functions of the

previous (k-1) classifiers.

Recent Classification Technologies

Boosting

Advantage

• Final classifier can be used to process video signals in real

time when step function activations are used

Problems

• Works best for the two-class case, uses huge data files.

• Final classifier is a large, highly redundant neural net.

• Training can take days, CM not performed.

Future Work

• Pruning and modelling should be tried to reduce redundancy.

• Feature selection should be tried to speed up training

Recent Classification Technologies

Problems with Adaboost

N = 3, Nc = 2, K = 3 (number of classifiers being fused )

Future Work : Prune and model ADA boosted networks

A Maximal Margin Classifier

Problems With MSE Type Training

The ith class neural net discriminant can be modeled as

yp(i)= tp(i) + εp(i)

This additive noise εp(i) degrades the performances of

regression-based classifiers, as mentioned earlier.

Correctly classified patterns contribute to MSE, and can

adversely affect training (See following page). In other

words, regression-based training tries to force all training

patterns to be support vectors

A Maximal Margin Classifier

Problems With MSE Type Training

x2

x1

(1) Standard regression approach tries to force all training vectors

to be support vectors

(2) Red lines are counted as errors, even though those patterns are

classified more correctly than desired

(3) Outliers and poor pattern distribution can distort decision

boundary locations

A Maximal Margin Classifier[8]

Existence of Regression-Based Optimal Classifiers

Let X be the basis vector (hidden units + inputs) of a neural net. The

output vector is y = W·X

The minimum MSE is found by solving

R·WT = C

where

R = E[X·XT ] and

C = E[X·tT ]

(1)

(2)

If an “optimal” coefficient matrix Wopt exists, Copt = R·(Wopt)T from

(1), so Copt exists. From (2), we can find Copt if the desired output

vector t is defined correctly.

Regression-based training can mimic other approaches.

A Maximal Margin Classifier

Regression Based Classifier Design [8]

Consider the Empirical Risk (MSE)

If

or

yp(ic) > tp(ic)

yp(id) < tp(id)

(Correct class discriminant large)

(Incorrect class discriminant small)

Classification error (Pe ) decreases but MSE ( E) increases.

A Maximal Margin Classifier

Regression Based Classifier Design-Continued

The discrepancy is fixed by re-defining the empirical risk as

If yp(ic) > tp(ic ) set tp‘(ic) = yp(ic)

If yp(id) < tp(id ) for an incorrect class id, set tp‘(id) = yp(id).

In both cases, tp‘(i) is set equal to yp(i) so no error is counted.

This algorithm partially mimics SVM training since correctly

classified patterns do not affect the MSE too much.( Related to

Ho-Kashyap procedures, [5] pp.249-256 )

A Maximal Margin Classifier

Problems With MSE Type Training

x2

x1

Recall that non-support vectors contribute to the MSE, E

A Maximal Margin Classifier

Errors Contributing to E’

x2

x1

In E’, only errors (green lines) inside the margins are minimized.

Some outliers are eliminated.

A Maximal Margin Classifier

Comments

The proposed algorithm:

(1) Adapts to any number of training patterns

(2) Allows for any number of hidden units

(3) Makes CM straightforward

(4) Is used to train the MLP. The resulting classifier is called a

maximal margin classifier (MMC)

Questions:

(1) Does this really work ?

(2) How do the MMC and SVM approaches compare ?

A Maximal Margin Classifier

Two-Class Example

Numeral Classification:

N = 16, Nc = 2, Nv = 600

Goal: Discriminate numerals 4 and 9.

SVM: Nh > 150, Et = 4.33% and Ev = 5.33 %

MMC: Nh = 1 , Et = 1.67% and Ev = 5.83 %

Comments: The 2-Class SVM seems better, but the price is too

steep (Two orders of magnitude more hidden units required).

A Maximal Margin Classifier

Multi-Class Examples

Numeral Classification:

N = 16, Nc = 10, Nv = 3,000

SVM: Nh > 608, Ev = 14.53 %

MMC: Nh = 32 , Ev = 8.1 %

Bell Flight Condition Recognition:

N = 24,

Nc = 39, Nv = 3,109

SVM: Training fails

MMC: Nh = 20 , Ev = 6.97 %

Nh - number of hidden units

Nv - number of training patterns

Ev – validation error percentage

Conclusion: SVMs may not work for medium and large size

multi-class problems. This problem is well-known among

SVM researchers.

Growing and Pruning

Candidate MLP Training Block

If complexity minimization (CM) is used, the resulting

Ef(Nh) curve is monotonic

Ef(w)

validation

Nw

Practical ways of approximating CM, are growing and pruning.

Growing and Pruning

Growing

Ef(w)

validation

Nw

Growing: Starting with no hidden units, repeatedly add

Na units and train the network some more.

Advantages: Creates a monotonic Ef(Nh) curve.

Usefulness is concentrated in the first few units added.

Disadvantage: Hidden units are not optimally ordered.

Growing and Pruning

Pruning [9]

Ef(w)

validation

Nw

Pruning: Train a large network. Then repeatedly remove a

less useful unit using OLS.

Advantages: Creates a monotonic Ef(Nh) curve. Hidden units

are optimally ordered

Disadvantage: Usefulness is not concentrated in the first

few units

Growing and Pruning

Pruning a Grown Network [10]

Data set is for inversion of radar scattering from bare soil surfaces.

It has 20 inputs and 3 outputs

Training error for Inversion of Radar Scattering

Growing and Pruning

Pruning a Grown Network

Validation error for Radar Scattering Inversion

Growing and Pruning

Pruning a Grown Network

Prognostics data set for onboard flight load synthesis (FLS) in

helicopters, where we estimate mechanical loads on critical

parts, using measurements available in the cockpit. There are 17

inputs and 9 outputs.

Training error for

Prognostics data

Growing and Pruning

Pruning a Grown Network

Validation error plots for Prognostics data

Growing and Pruning

Pruning a Grown Network

Data set for estimating phoneme likelihood functions

in speech, has 39 inputs and 117 outputs

Training error

for

Speech data

Growing and Pruning

Pruning a Grown Network

Validation error for Speech data

Growing and Pruning

• Remaining Work: Insert into the IPNNL

Optimization System

IPNNL Software

Motivation

Theorem 2 (No Free Lunch Theorem [5] ) : In the

absence of assumptions concerning the training data,

no training algorithm is inherently better than

another.

Comments:

•Assumptions are almost always made, so this

theorem is rarely applicable.

•However, the theorem is right to the extent that

given training data, several classifiers should be tried

after feature selection.

IPNNL Software

Block Diagram

MLP

PLN

FLN

Feature

Selection

LVQ

Size

Train

Prune &

Validate

SVM

Data

RBF

Final

Network

SOM

Analyze

Your Data

Select

Network

Type

Produce Final

Network

IPNNL Software

Examples

The IPNNL Optimization system is demonstrated on:

• Flight condition recognition data from Bell

Helicopter Textron (Prognostics Problem)

• Sleep apnea data from UTA Bioengineering (Prof.

Khosrow Behbehani and Mohammad Al-Abed)

• Traveler characteristics data from UTA Civil

Engineering (Prof. Steve Mattingly and Isaradatta

Rasmidatta)

Examples

Bell Helicopter Textron

• Flight condition recognition (prognostics) data

from Bell Helicopter Textron

• Features: N’ = 24 cockpit measurements

• Patterns: 4,745

• Classes: Nc = 39 helicopter flight categories

Run Feature selection, and save new training and

validation files with only 18 features

Run MLP sizing, decide upon 12 hidden units

Run MLP training, save the network

Run MLP pruning, with validation. Final network

has 10 hidden units.

Examples

Behbehani and Al-Abed

• Classification of Sleep Apnea Data (Mohammad

•

Al-Abed and Khosrow Behbehani):

• Features: N’ = 90 features from Co-occurrence

features applied to STDFT

• Patterns: 136

• Classes: Nc = 2 ( Yes/No )

• Previous Software: Matlab Neural Net Toolbox

Run Feature selection, and save new training and

validation files with only 17 features. The curve is

ragged because of the small number of patterns.

Run MLP sizing, decide upon 5 hidden units

Run MLP training, save the network

Run MLP pruning, with validation. Final network

has 3 hidden units.

Examples

- Mattingly

and Rasmidatta

• Classification of traveler characteristics data

(Isaradatta Rasmidatta and Steve Mattingly):

• Features: N’ = 22 features

• Patterns: 7,325

• Classes: Nc = 3 (car, air, bus/train )

• Previous Software: NeuroSolutions by

NeuroDimension

Run Feature selection, and save new training and

validation files with only 4 features. The flat curve

means few features are needed.

Run MLP sizing, decide upon 2 hidden units. Flat curve

means few hidden units, if any, are needed.

Run MLP training, save the network

Run MLP pruning, with validation. Final network

has 1 hidden unit.

Conclusions

• An effective feature selection algorithm has been developed

• Regression-based networks are compatible with CM

• Regression-based training can extend maximal margin concepts to

many nonlinear networks

• Several existing and potential blocks in the IPNNL Optimization

System have been discussed

• The system has been demonstrated on three pattern recognition

applications

• A similar Optimization System is available for

approximation/regression applications

References

[1] K. Fukunaga, Introduction to Statistical Pattern Recognition, 2nd edition, Academic Press, 1990.

[2] P. Pudil, J. Novovicova, J. Kittler, " Floating Search Methods in Feature Selection " Pattern Recognition

Letters, vol 15 , pp 1119-1125, 1994

[3] Jiang Li, Michael T. Manry, Pramod Narasimha, and Changhua Yu, “Feature Selection Using a Piecewise

Linear Network”, IEEE Trans. on Neural Networks, Vol. 17, no. 5, September 2006, pp. 1101-1115

[4] Vladimir N. Vapnik, Statistical Learning Theory, John Wiley & Sons, 1998.

[5] Duda, Hart and Stork, Pattern Classification, 2nd edition, John Wiley and Sons, 2001.

[6] Dennis W. Ruck et al., "The Mulitlayer Perceptron as an Approximation to a Bayes Optimal Discriminant

Function," IEEE Trans. on Neural Networks, Vol. 1, No. 4, 1990.

[7] Simon Haykin, "Neural Networks: A Comprehensive Foundation“, 2nd edition.

[8] R.G. Gore, Jiang Li, Michael T. Manry, Li-Min Liu, Changhua Yu, and John Wei, "Iterative Design of Neural

Network Classifiers through Regression". International Journal on Artificial Intelligence Tools, Vol. 14,

Nos. 1&2 (2005) pp. 281-301.

[9] F. J. Maldonado and M.T. Manry, "Optimal Pruning of Feed Forward Neural Networks Using the Schmidt

Procedure", Conference Record of the Thirty Sixth Annual Asilomar Conference on Signals, Systems, and

Computers., November 2002, pp. 1024-1028.

[10] P. L. Narasimha, W.H. Delashmit, M.T. Manry, Jiang Li, and F. Maldonado, “An Integrated GrowingPruning Method for Feedforward Network Training,” NeuroComputing, vol. 71, Spring 2008, pp. 28312847.