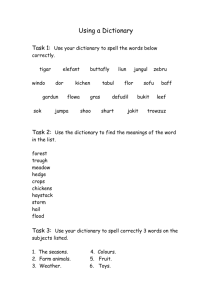

Document

Discovery of Conserved Sequence Patterns Using a Stochastic Dictionary Model

Authors: Mayetri Gupta & Jun S. Liu

Presented by Ellen Bishop

12/09/2003

1

“ofallthewordsinthisunsegmentedphrasetherearesomehidden”

The challenge is to develop an algorithm that can partition a DNA sequence into meaningful “words”.

2

Presentation Outline

Introduction

MobyDick

Stochastic Dictionary-based Data Augmentation (SDDA)

Algorithm Extensions

Results

3

Introduction

New challenges have arisen now that genome sequences are available in public databases:

How is gene expression regulated to meet the needs of specific cells, or to let cells respond to changes?

How can gene regulatory networks be analyzed more efficiently?

4

Gene Regulation

Transcription factors (TFs) play a critical role in gene expression

They can enhance it or inhibit it

Short DNA motifs 17-30 nucleotides long often correspond to TF binding sites

Goal: build a model for TF binding sites given a set of DNA sequences thought to be regulated together

5

MobyDick

A dictionary-building algorithm developed in 2000 by Bussemaker, Li and Siggia

Decomposes sequences into the most probable set of words

Starts with a dictionary of single letters

Tests the concatenation of each pair of words for over-representation, i.e., whether it occurs more often than expected by chance

Updates the dictionary
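The "most probable set of words" step can be illustrated with a short dynamic program. This is a minimal sketch that assumes the word probabilities are already known and fixed (MobyDick itself also estimates them from the data). The function name `best_segmentation` and the toy dictionary are hypothetical.

```python
import math


def best_segmentation(text, word_probs):
    """Return the most probable split of `text` into dictionary words.

    The probability of a segmentation is the product of the usage
    probabilities of its words; its logarithm is maximized with a
    Viterbi-style dynamic program over prefix lengths.
    """
    n = len(text)
    best = [-math.inf] * (n + 1)  # best[i]: max log-probability of text[:i]
    back = [0] * (n + 1)          # back[i]: start index of the last word in text[:i]
    best[0] = 0.0
    max_len = max(len(w) for w in word_probs)
    for i in range(1, n + 1):
        for j in range(max(0, i - max_len), i):
            word = text[j:i]
            if word in word_probs and best[j] > -math.inf:
                score = best[j] + math.log(word_probs[word])
                if score > best[i]:
                    best[i], back[i] = score, j
    if best[n] == -math.inf:
        return None  # text cannot be built from the dictionary
    words, i = [], n
    while i > 0:  # trace the chosen words back from the end
        words.append(text[back[i]:i])
        i = back[i]
    return words[::-1]


# Hypothetical dictionary: single letters plus two longer words.
probs = {"A": 0.1, "C": 0.1, "G": 0.1, "T": 0.1, "TATA": 0.3, "GC": 0.3}
print(best_segmentation("GTATAGC", probs))  # ['G', 'TATA', 'GC']
```

Keeping only the single best split is a simplification. A likelihood-based method would sum over all splits instead of maximizing over them.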

6

MobyDick results

Tested on the first 10 chapters of Moby Dick

4214 unique words, about 1600 of them repeated

The result contained 3600 unique words

It found virtually all of the roughly 1600 repeated words

7

SDDA

Stochastic Dictionary-based Data Augmentation

Stochastic words are represented by a probabilistic word matrix (PWM)

Some definitions

$D$ = dictionary size

$S$ = sequence data generated by concatenation of words

$\mathcal{D} = \{M_1, \dots, M_D\}$ = the dictionary of words, including single letters

$\mathbf{p} = (p(M_1), \dots, p(M_D))$ = word probability vector

$A_k = \{A_{1k}, \dots, A_{nk}\}$ denotes the site indicators for motif $M_k$ ($A_{ik} = 1$ if an occurrence of $M_k$ starts at position $i$, and $0$ otherwise)

8

SDDA

Some definitions

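$q = 4$ (alphabet size): the letters A, G, C, T are the first 4 words in the dictionary

$\mathcal{A} = \{P_1, \dots, P_k\}$ is a partition of the sequence such that each part $P_i$ corresponds to a dictionary word

$N(\mathcal{A})$ = total number of parts (words) in the partition

$N_{M_j}(\mathcal{A})$ = number of occurrences of word type $M_j$ in the partition

$w_j$ ($j = 1, \dots, D$) denotes word lengths

The $D - q$ motif matrices are denoted by $\Theta^{(D)} = \{\Theta_{q+1}, \dots, \Theta_D\}$

If the $k$th word has width $w$, its probability matrix is $\Theta_k = \{\theta_{1k}, \dots, \theta_{wk}\}$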
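These definitions can be combined into the likelihood of the data given a partition. Under the dictionary model, words are generated independently, so the likelihood factors over the parts. This worked form is a sketch consistent with the definitions above. It uses two notations introduced here: $k(i)$ is the word type of part $P_i$, and $\theta_{lk}(x)$ is the probability of letter $x$ at position $l$ of word $M_k$. For a single-letter word, $P(S[P_i] \mid \Theta_{k(i)})$ is taken to be 1.

$$
P(S \mid \mathcal{A}, \Theta^{(D)}, \mathbf{p})
  = \prod_{i=1}^{N(\mathcal{A})} p\big(M_{k(i)}\big)\, P\big(S[P_i] \mid \Theta_{k(i)}\big)
  = \prod_{j=1}^{D} p(M_j)^{N_{M_j}(\mathcal{A})} \prod_{i:\, k(i) > q} P\big(S[P_i] \mid \Theta_{k(i)}\big),
\qquad
P\big(S[P_i] \mid \Theta_k\big) = \prod_{l=1}^{w_k} \theta_{lk}(s_{il}),
$$

Here $s_{il}$ is the $l$th letter of part $P_i$.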

9

Probabilistic Word Matrix

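Example PWM of width 5 (rows are letters, columns are positions 1 to 5):

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| G | .1 | .05 | .12 | .96 | .85 |
| T | 0 | .1 | .01 | .01 | .02 |
| A | .85 | .07 | .8 | .02 | .12 |
| C | .05 | .78 | .07 | .01 | .01 |

P(ACAGG) = .85 × .78 × .8 × .96 × .85 ≈ .4328

P(GCAGA) = .1 × .78 × .8 × .96 × .12 ≈ .0072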
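The two products above can be reproduced in a few lines. The matrix below is one reading of the flattened slide table. It was chosen so that each column sums to 1 and so that the entries used by ACAGG and GCAGA match the products shown. The names `PWM` and `word_prob` are illustrative.

```python
import math

# Probabilistic word matrix of width 5: PWM[letter][position - 1].
# Assumed reading of the slide's table; each column sums to 1.
PWM = {
    "G": [0.10, 0.05, 0.12, 0.96, 0.85],
    "T": [0.00, 0.10, 0.01, 0.01, 0.02],
    "A": [0.85, 0.07, 0.80, 0.02, 0.12],
    "C": [0.05, 0.78, 0.07, 0.01, 0.01],
}


def word_prob(word, pwm):
    """Probability that the PWM generates `word`: one entry per column, multiplied."""
    width = len(next(iter(pwm.values())))
    if len(word) != width:
        raise ValueError(f"word must have length {width}")
    prob = 1.0
    for pos, letter in enumerate(word):
        prob *= pwm[letter][pos]
    return prob


# Sanity check: every column is a probability distribution over A, C, G, T.
for pos in range(5):
    assert math.isclose(sum(row[pos] for row in PWM.values()), 1.0)

print(round(word_prob("ACAGG", PWM), 4))  # 0.4328
print(round(word_prob("GCAGA", PWM), 4))  # 0.0072
```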

10

General idea of Algorithm

We start with $\mathcal{D}^{(1)} = \{A, G, C, T\}$ and estimate the probabilities of those 4 words in the dataset.

Then we look at each pair of letters, say AT. If AT is over-represented compared with what $\mathcal{D}^{(1)}$ predicts, it is added to form the dictionary $\mathcal{D}^{(2)}$. This is repeated for all pairs.

In general, consider all concatenations of pairs of words in dictionary $\mathcal{D}^{(n)}$. Form a new dictionary $\mathcal{D}^{(n+1)}$ by including those new words that are over-represented, i.e., more abundant than expected by chance.
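One simple way to flag over-represented pairs is to compare each adjacent pair's observed count with the count expected if words occurred independently. This is a hedged sketch. The ratio threshold and minimum count are arbitrary assumptions, and the original methods use likelihood-based criteria rather than this heuristic. `overrepresented_pairs` is a hypothetical name.

```python
from collections import Counter


def overrepresented_pairs(words, threshold=2.0, min_count=2):
    """Flag adjacent word pairs that occur far more often than chance predicts.

    Under an independence model, the expected count of the pair (u, v) among
    the n - 1 adjacent pairs is (n - 1) * f(u) * f(v), where f is the observed
    relative frequency of each word. Pairs with observed/expected ratio of at
    least `threshold` (and at least `min_count` occurrences) are returned as
    candidate new dictionary words, mapped to their rounded ratio.
    """
    n = len(words)
    freq = Counter(words)
    pairs = Counter(zip(words, words[1:]))
    candidates = {}
    for (u, v), observed in pairs.items():
        expected = (n - 1) * (freq[u] / n) * (freq[v] / n)
        ratio = observed / expected
        if observed >= min_count and ratio >= threshold:
            candidates[u + v] = round(ratio, 2)
    return candidates


# Start from the single-letter dictionary D(1): the "words" are the letters.
seq = "TACGTAGCTATGCATA"
print(overrepresented_pairs(list(seq)))  # {'TA': 2.73, 'GC': 3.79}
```

A flagged pair such as TA would become a new dictionary word. The next round would then test concatenations drawn from the enlarged dictionary.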

11

SDDA Algorithm

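1) Partitioning: sample a partition into words given the current values of the stochastic word matrices and the word usage probabilities

Do a recursive summation of probabilities to evaluate the partial likelihood up to every point in the sequence, with $L_0(\Theta) = 1$:

$L_i(\Theta) = \sum_{k=1}^{D} P(S_{[i-w_k+1:i]} \mid \Theta_k, \mathbf{p})\, L_{i-w_k}(\Theta)$

Here $P(S_{[a:b]} \mid \Theta_k, \mathbf{p})$ is the probability that word $M_k$ generates the segment $S_{[a:b]}$, including its usage probability $p(M_k)$.

Words are sampled sequentially backward, starting at the end of the sequence. A word $M_k$ starting at position $i$ is sampled according to the conditional probability

$P(A_{ik} = 1 \mid A_{i+w_k}, \Theta) = P(S_{[i:i+w_k-1]} \mid \Theta_k, \mathbf{p})\, L_{i-1}(\Theta) / L_{i+w_k-1}(\Theta)$

If none of the motif words is selected, the appropriate single-letter word is assumed and the current position moves back by 1.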
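The forward recursion and backward sampling can be sketched as follows. The sketch makes several assumptions. Each dictionary word is stored as a list of per-position letter distributions, with single letters treated as width-1 words. `p[k]` is the usage probability of word k. The helper names are hypothetical. Positions are 0-based, so the slide's $S_{[i-w_k+1:i]}$ becomes `seq[i - w:i]`. Raw probabilities like these would underflow on long sequences, so a practical version would rescale them or work in log space.

```python
import random


def segment_prob(segment, pwm):
    """P(segment | Theta_k): product of one column probability per letter."""
    prob = 1.0
    for column, letter in zip(pwm, segment):
        prob *= column.get(letter, 0.0)
    return prob


def forward(seq, pwms, p):
    """L[i] = sum over all partitions of seq[:i] into dictionary words (L[0] = 1)."""
    L = [1.0] + [0.0] * len(seq)
    for i in range(1, len(seq) + 1):
        for k, pwm in enumerate(pwms):
            w = len(pwm)
            if w <= i:  # word k would occupy seq[i - w:i]
                L[i] += p[k] * segment_prob(seq[i - w:i], pwm) * L[i - w]
    return L


def sample_partition(seq, pwms, p, rng=random):
    """Sample a partition backward from the end of the sequence.

    The word ending at position j is chosen with probability
    p[k] * P(seq[j - w_k:j] | Theta_k) * L[j - w_k] / L[j]; the weights
    below sum to L[j], so rng.choices normalizes them.
    """
    L = forward(seq, pwms, p)
    j, parts = len(seq), []
    while j > 0:
        weights = [
            p[k] * segment_prob(seq[j - len(pwm):j], pwm) * L[j - len(pwm)]
            if len(pwm) <= j else 0.0
            for k, pwm in enumerate(pwms)
        ]
        k = rng.choices(range(len(pwms)), weights=weights)[0]
        parts.append((j - len(pwms[k]), k))  # (start, word index)
        j -= len(pwms[k])
    return parts[::-1], L


# Hypothetical dictionary: the four letters plus one TATA-like motif.
letters = [[{b: 1.0}] for b in "ACGT"]
tata = [{"T": 0.9, "A": 0.1}, {"A": 0.9, "T": 0.1}] * 2
pwms = letters + [tata]
p = [0.2] * 5
parts, L = sample_partition("GCTATAGC", pwms, p, random.Random(0))
print(parts)
```

In this sketch, the single-letter words are part of the same weighted draw as the motif words. That draw plays the role of the slide's single-letter default.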

12

SDDA Algorithm

2) Parameter update: given the partition $\mathcal{A}$, update the stochastic word matrices $\Theta^{(D)}$ and the word probability vector $\mathbf{p}$ by sampling from their posterior distributions

3) Repeat steps 1 and 2 until convergence, i.e., until the MAP (maximum a posteriori) score stops increasing. The MAP score is computed at each iteration and is used to score the current alignment.

4) Increase the dictionary size, $D = D + 1$, and repeat from step 1, now treating $\Theta_{D-1}$ as a known word matrix
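Step 2 can be sketched with conjugate Dirichlet updates. Given the word counts $N_{M_j}(\mathcal{A})$ and the letters observed at each position of the sampled motif sites, both $\mathbf{p}$ and each PWM column are drawn from Dirichlet posteriors. The Dirichlet priors with a pseudo-count of 1 are an assumption made for illustration, as are the function names. Python's standard library has no Dirichlet sampler, so one is built from gamma draws.

```python
import random
from collections import Counter

ALPHABET = "ACGT"


def sample_dirichlet(alphas, rng=random):
    """Draw from Dirichlet(alphas) by normalizing independent Gamma(alpha, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def update_parameters(seq, parts, widths, q=4, prior=1.0, rng=random):
    """One parameter-update step given a sampled partition.

    parts:  list of (start, k) pairs; word M_k covers seq[start:start + widths[k]]
    widths: width of every dictionary word; words 0..q-1 are single letters
    Returns (p, pwms): new word usage probabilities and, for each motif word
    k >= q, a list of per-position letter distributions.
    """
    D = len(widths)
    counts = Counter(k for _, k in parts)  # N_{M_k}(A) for each word type
    p = sample_dirichlet([prior + counts[k] for k in range(D)], rng)
    pwms = {}
    for k in range(q, D):
        sites = [seq[s:s + widths[k]] for s, kk in parts if kk == k]
        columns = []
        for pos in range(widths[k]):
            letter_counts = Counter(site[pos] for site in sites)
            probs = sample_dirichlet([prior + letter_counts[b] for b in ALPHABET], rng)
            columns.append(dict(zip(ALPHABET, probs)))
        pwms[k] = columns
    return p, pwms


# Toy partition: 200 copies of motif word 4 (width 4) covering "TATA" * 200.
seq = "TATA" * 200
parts = [(4 * i, 4) for i in range(200)]
p, pwms = update_parameters(seq, parts, widths=[1, 1, 1, 1, 4], rng=random.Random(1))
print([round(x, 3) for x in p], round(pwms[4][0]["T"], 3))
```

Alternating this draw with the partitioning step gives the data-augmentation loop that steps 1 to 3 describe.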

13

Algorithm Extensions

Phase Shift via Metropolis steps

Patterns with variable insertions and deletions (gaps)

Patterns of unknown widths

Motif detection in the presence of “low complexity” regions

14

Phase Shift

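If positions 7, 19, 8, 23 mark the strongest pattern but the algorithm chooses $a_1 = 9$, $a_2 = 21$ early on, it is likely to also choose $a_3 = 10$, $a_4 = 25$. That is, it locks onto a version of the pattern shifted by 2.

Solution: Metropolis steps

$\mathbf{a} = \{a_1, \dots, a_m\}$ are the starting positions of the occurrences of a motif

Propose shifting all positions by +1 or -1, each with probability .5, giving $\mathbf{a}^+$

Move to the shifted positions $\mathbf{a}^+$ with probability $\min\{1, p(\mathbf{a}^+ \mid S) / p(\mathbf{a} \mid S)\}$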
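A single phase-shift move can be sketched as below. Shifts of +1 and -1 are proposed with equal probability, so the proposal is symmetric and the acceptance probability reduces to the posterior ratio on the slide. The log-posterior in the example is only a stand-in for the model's actual posterior: a simple match/mismatch score against a hypothetical consensus ACGT. All names are illustrative. Proposals that would run off the end of a sequence are rejected, which is equivalent to giving them zero posterior probability.

```python
import math
import random


def phase_shift_step(starts, seqs, width, log_post, rng=random):
    """One Metropolis phase-shift move on all motif start positions at once.

    starts[i] is the motif start in seqs[i]; log_post(starts) returns the
    log posterior (up to a constant) of a set of start positions.
    """
    shift = rng.choice((-1, 1))  # +1 or -1, each with probability .5
    proposal = [a + shift for a in starts]
    if any(a < 0 or a + width > len(s) for a, s in zip(proposal, seqs)):
        return starts  # off the end of a sequence: zero posterior, reject
    log_ratio = log_post(proposal) - log_post(starts)
    # Accept with probability min{1, p(a+ | S) / p(a | S)}.
    if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
        return proposal
    return starts


# Stand-in posterior: each motif letter matches a consensus with prob .9.
SEQS = ["TTACGTTT", "GGACGTGG"]
CONSENSUS = "ACGT"


def toy_log_post(starts):
    score = 0.0
    for a, s in zip(starts, SEQS):
        for c, letter in zip(CONSENSUS, s[a:a + len(CONSENSUS)]):
            score += math.log(0.9 if letter == c else 0.1 / 3)
    return score


state = [1, 1]  # shifted one position left of the true starts [2, 2]
rng = random.Random(0)
for _ in range(50):
    state = phase_shift_step(state, SEQS, len(CONSENSUS), toy_log_post, rng)
print(state)
```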

15

Patterns with: gaps/unknown widths

Gaps: an additional recursive sum in the partitioning step (1), using

$\iota_o$: insertion-opening probability

$\iota_e$: insertion-extension probability

$\delta_o$: deletion-opening probability

$\delta_e$: deletion-extension probability

Unknown widths: the authors also extended their algorithm to estimate the likely pattern width when it is not specified.

16

Motif Detection with “low complexity” regions

AAAAAAA…

CGCGCGCG…

The stochastic dictionary model is expected to handle such regions by treating the repeats as a series of adjacent words, so that they are not mistaken for motifs

17

Results

Two case studies are provided

Simulated dataset with background polynucleotide repeats

CRP binding sites

18

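Relative performance of SDDA compared to BioProspector (BP) & AlignACE (AA)

Columns: EVAL2; SDDA success / false positive; BP success / false positive; AA success / false positive

Rows: settings a) and b), each at EVAL2 = .24, .48, .72, .96

Cell values in the order they appear on the slide (row and column assignment unclear):

SDDA: 1, .6, .07, .06, .5, .7, 1, .9, .9, 1, .12, .02, .03, .12, .05, .03, 1, .7, .1, 0, .6, 0, 1, .7

BP: .02, 0, -, -, 0, .09, .01, 0, 0, 0, .1, .1, .1, 0

AA: .3, 0, -, .43, -, -, -, .52, .62, .36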

19

Credits

Slides 6, 7

Bussemaker, H.J., Li, H. and Siggia, E.D. (2000), “Building a Dictionary for Genomes: Identification of Presumptive Regulatory Sites by Statistical Analysis”, Proceedings of the National Academy of Sciences USA, 97, 10096-10100.

Slide 9

Liu, J.S., Gupta, M., Liu, X., Mayerhofere, L. and Lawrence, C.E., “Statistical Models for Biological Sequence Motif Discovery”, 1-19.

Slide 14

Lawrence, C.E., Altschul, S.F., Boguski, M.S., Liu, J.S., Neuwald, A.F. and Wootton, J.C. (1993), “Detecting Subtle Sequence Signals: A Gibbs Sampling Strategy for Multiple Alignment”, Science, 262, 208-214.

20

Bibliography

Bussemaker, H.J., Li, H. and Siggia, E.D. (2000), “Building a Dictionary for Genomes: Identification of Presumptive Regulatory Sites by Statistical Analysis”, Proceedings of the National Academy of Sciences USA, 97, 10096-10100.

Lawrence, C.E., Altschul, S.F., Boguski, M.S., Liu, J.S., Neuwald, A.F. and Wootton, J.C. (1993), “Detecting Subtle Sequence Signals: A Gibbs Sampling Strategy for Multiple Alignment”, Science, 262, 208-214.

Liu, J.S., Gupta, M., Liu, X., Mayerhofere, L. and Lawrence, C.E., “Statistical Models for Biological Sequence Motif Discovery”, 1-19.

21