90-786 Intermediate Empirical Methods

Lecture 7. Random Variables and Probability Distributions

David R. Merrell

90-786 Intermediate Empirical Methods for Public Policy and Management

AGENDA

Review

Bayes Rule

Two Types of Error

Discrete Random Variables

Bernoulli Random Variables

Bayes Rule

$$P(A \mid B) = \frac{P(A)\,P(B \mid A)}{P(B)}$$

Proof:

P(A and B) = P(A|B)P(B) = P(B|A)P(A)

Total Probability Rule

[Figure: diagram with regions A1, A2, A3, A4 and event B]

$$P(B) = \sum_i P(B \text{ and } A_i) = \sum_i P(B \mid A_i)\,P(A_i)$$

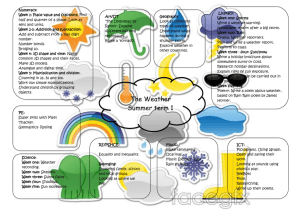

Application of Bayes Rule: Weather Forecasting

P(rain) = .3

P(likely | rain) = .95

P(unlikely | no rain) = .9

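$$P(\text{rain} \mid \text{likely}) = \frac{P(\text{likely} \mid \text{rain})\,P(\text{rain})}{P(\text{likely} \mid \text{rain})\,P(\text{rain}) + P(\text{likely} \mid \text{no rain})\,P(\text{no rain})}$$

$$= \frac{(.95)(.3)}{(.95)(.3) + (.1)(.7)} \approx .80$$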
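The weather forecasting calculation can be checked numerically. The following is a minimal sketch in Python; the function name `posterior` and its parameter names are illustrative assumptions, not part of the lecture.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Bayes rule, with the total probability rule in the denominator."""
    numerator = p_evidence_given_h * prior
    denominator = numerator + p_evidence_given_not_h * (1.0 - prior)
    return numerator / denominator


# Values from the slide: P(rain) = .3, P(likely | rain) = .95,
# P(unlikely | no rain) = .9, so P(likely | no rain) = 1 - .9 = .1.
p_rain_given_likely = posterior(0.3, 0.95, 1.0 - 0.9)
print(round(p_rain_given_likely, 2))  # 0.8
```

The denominator is P(likely), obtained by summing over the two cases rain and no rain.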

Interpretations of Bayes Rule

Conditioning Flip

$$P(A \mid B) = P(B \mid A)\,\frac{P(A)}{P(B)}$$

Knowledge Change

$$P(A \mid B) = P(A)\,\frac{P(B \mid A)}{P(B)}$$

Example: HIV Testing

A: observe positive HIV test result

B: actually HIV positive

~B: actually not HIV positive

$$P(B \mid A) = \frac{P(A \mid B)\,P(B)}{P(A \mid B)\,P(B) + P(A \mid {\sim}B)\,P({\sim}B)}$$

Two Types of Error

| | Actually HIV+ | Actually HIV- |
|---|---|---|
| Test positive | | false positive |
| Test negative | false negative | |

Conditional Probabilities

P( false positive) = P( test positive|HIV-)

P( false negative) = P( test negative|HIV+)

sensitivity = P( test positive|HIV+)

specificity = P( test negative|HIV-)

Note:

sensitivity = 1 - P(false negative)

specificity = 1 - P(false positive)
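As a rough illustration of these definitions, the four conditional probabilities can be computed from a 2x2 table of test outcomes. This is a sketch only: the function name `error_rates`, its parameter names, and the counts in the usage lines are hypothetical and are not data from the lecture.

```python
def error_rates(true_pos, false_pos, false_neg, true_neg):
    """Return the four conditional probabilities from a 2x2 table of counts."""
    actually_pos = true_pos + false_neg  # everyone who is actually HIV+
    actually_neg = false_pos + true_neg  # everyone who is actually HIV-
    return {
        "sensitivity": true_pos / actually_pos,      # P(test positive | HIV+)
        "false_negative": false_neg / actually_pos,  # P(test negative | HIV+)
        "specificity": true_neg / actually_neg,      # P(test negative | HIV-)
        "false_positive": false_pos / actually_neg,  # P(test positive | HIV-)
    }


# Hypothetical counts, for illustration only.
rates = error_rates(true_pos=95, false_pos=30, false_neg=5, true_neg=870)
for name, value in rates.items():
    print(f"{name}: {value:.3f}")
```

Each pair of rates conditions on the same actual status, which is why sensitivity and the false negative rate sum to 1, as do specificity and the false positive rate.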

Distinguishability

Low, e.g., P(A|B) = .9 and P(A|~B) = .6

$$P(B \mid A) = \frac{.9\,P(B)}{.9\,P(B) + .6\,(1 - P(B))} = \frac{.9\,P(B)}{.6 + .3\,P(B)}$$

High, e.g., P(A|B) = .99 and P(A|~B) = .1

$$P(B \mid A) = \frac{.99\,P(B)}{.1 + .89\,P(B)}$$
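To see how P(B|A) responds to the prior P(B) in the two cases, the formulas can be evaluated over a few prior values. This is a minimal sketch; the function name `posterior` and the grid of priors are assumptions chosen for illustration.

```python
def posterior(prior, p_a_given_b, p_a_given_not_b):
    """P(B | A) from P(B), P(A | B), and P(A | ~B) via Bayes rule."""
    numerator = p_a_given_b * prior
    return numerator / (numerator + p_a_given_not_b * (1.0 - prior))


# Illustrative grid of prior probabilities P(B).
priors = [0.0, 0.1, 0.25, 0.5, 0.75, 1.0]

for p in priors:
    low = posterior(p, 0.9, 0.6)    # low distinguishability case
    high = posterior(p, 0.99, 0.1)  # high distinguishability case
    print(f"P(B) = {p:.2f}   low: {low:.3f}   high: {high:.3f}")
```

With low distinguishability the posterior stays close to the prior; with high distinguishability a positive result moves it much further.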

Low Distinguishability

[Figure: P(B|A) for the low case plotted against P(B); both axes run from 0.0 to 1.0]

High Distinguishability

[Figure: P(B|A) for the high case plotted against P(B); both axes run from 0.0 to 1.0]

Now...

Discrete Random Variables

Bernoulli Random Variables

Random Variable

Random Variable is

a variable whose value is determined by the outcome of some experiment

a measurable outcome of each member of a population

an individual observation

Could be

discrete (can take only countable values) or

continuous (can take any value along an interval)

Discrete Probability Distribution

List all possible values of X with their respective probabilities

Characteristics:

list of outcomes is exhaustive

outcomes are mutually exclusive

sum of probabilities is 1

Example 1. Flip Three Coins

Sample space is {HHH, HHT, HTH, THH, HTT, THT, TTH, TTT}

X = the number of heads; X = 0, 1, 2, 3

Probability Distribution:

| x | P(x) |
|---|---|
| 0 | 1/8 |
| 1 | 3/8 |
| 2 | 3/8 |
| 3 | 1/8 |

[Figure: plot of P(x) against x = 0, 1, 2, 3]

Calculating Probabilities

P(X = 2) = P{HHT or HTH or THH} = 3/8

P(X < 2) = ?

P(X = 1 or X = 2) = ?
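The three-coin distribution can be built by enumerating the sample space, and event probabilities then follow by summing over the matching values of X. This is a minimal sketch; the names `outcomes`, `distribution`, and `prob` are illustrative assumptions.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate the eight equally likely outcomes of three coin flips.
outcomes = ["".join(flips) for flips in product("HT", repeat=3)]

# X = number of heads; count how many outcomes give each value of X.
counts = Counter(outcome.count("H") for outcome in outcomes)
distribution = {x: Fraction(n, len(outcomes)) for x, n in sorted(counts.items())}


def prob(event):
    """P(event), where event is a true/false function of the value of X."""
    return sum(p for x, p in distribution.items() if event(x))


print(prob(lambda x: x == 2))  # 3/8
```

Other events are evaluated the same way by passing a different condition to `prob`, for example `lambda x: x < 2`.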

Expected Value

The expected value (mean) of a probability distribution is a weighted average; the weights are the probabilities.

Expected Value: $E(X) = \mu = \sum_i x_i P(x_i)$

Calculating Expected Value for the 3-Coin Flip

E(X) = 0(1/8) + 1(3/8) + 2(3/8) + 3(1/8) = 1.5
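The same weighted average can be computed directly from the distribution. This is a minimal sketch; the names `distribution` and `expected_value` are illustrative assumptions.

```python
from fractions import Fraction

# Distribution of X = number of heads in three coin flips.
distribution = {
    0: Fraction(1, 8),
    1: Fraction(3, 8),
    2: Fraction(3, 8),
    3: Fraction(1, 8),
}


def expected_value(dist):
    """Weighted average of the values, using the probabilities as weights."""
    return sum(x * p for x, p in dist.items())


mean = expected_value(distribution)
print(float(mean))  # 1.5
```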

Variance

$V(X) = E[(X - \mu)^2]$

Calculating Variance for the 3-Coin Flip

| x | (x - μ)^2 | (x - μ)^2 p(x) |
|---|---|---|
| 0 | (0 - 1.5)^2 | 2.25(1/8) |
| 1 | (1 - 1.5)^2 | 0.25(3/8) |
| 2 | (2 - 1.5)^2 | 0.25(3/8) |
| 3 | (3 - 1.5)^2 | 2.25(1/8) |
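The variance calculation for the 3-coin flip can be completed by summing the weighted squared deviations. This is a minimal sketch; the names `distribution`, `expected_value`, and `variance` are illustrative assumptions.

```python
from fractions import Fraction

# Distribution of X = number of heads in three coin flips.
distribution = {
    0: Fraction(1, 8),
    1: Fraction(3, 8),
    2: Fraction(3, 8),
    3: Fraction(1, 8),
}


def expected_value(dist):
    """Weighted average of the values, using the probabilities as weights."""
    return sum(x * p for x, p in dist.items())


def variance(dist):
    """Expected squared deviation from the mean."""
    mu = expected_value(dist)
    return sum((x - mu) ** 2 * p for x, p in dist.items())


print(float(variance(distribution)))  # 0.75
```

Using `Fraction` keeps the arithmetic exact, so the result is 3/4 before conversion to a float.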

Example 2. Network Models: In-Degree and Out-Degree

[Figure: network diagram with nodes labeled (2, 4), (1, 1), (2, 0), (1, 1), (1, 0)]

“Like and Ye Shall Be Liked”

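[Table: joint probability distribution of X and Y, with In-Degree values 0 to 4 and Out-Degree values 0 to 4. Cell probabilities as listed: 0.11, 0.05, 0.06; 0.05, 0.09, 0.08, 0.04, 0.1, 0.07; 0.04, 0.08, 0.05, 0.04, 0.05, 0.09]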

[Figure: plot of Probability (0 to 0.12) against In-Degree and Out-Degree]

Probability Calculations

0.06

0.04

0.1

0.04

P ( X 2) ?

P (Y 3) ?

P ( X 0 | Y 0) ?

P (Y 4 | X 0) ?

0.04

0.28

Mean Calculations

E(X )

E (Y )

E ( X |Y 0)

E (Y| X 4)

Next Time ...

Binomial Process

Binomial Distribution

Poisson Process

Poisson Distribution