
Agents – Background

Vicki H. Allan

An Agent in its Environment

[Figure: AGENT and ENVIRONMENT; sensor input flows from the environment to the agent, and action output flows from the agent to the environment]

“[An] agent enjoys the following properties:

• autonomy: agents operate without the direct intervention of humans or others, and have some kind of control over their actions and internal state;

• social ability: agents interact with other agents (and possibly humans) via some kind of agent-communication language;

• reactivity: agents perceive their environment and respond in a timely fashion to changes that occur in it;

• pro-activeness: agents do not simply act in response to their environment, they are able to exhibit goal-directed behaviour by taking initiative.” (Wooldridge and Jennings, 1995)

Agents

• Need for computer systems to act in our

best interests

• “The issues addressed in Multiagent

systems have profound implications for our

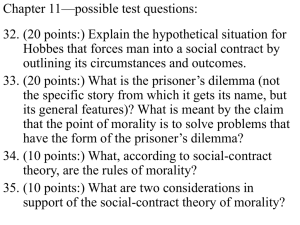

understanding of ourselves.” Wooldridge

• Example – how do you make a decision

about buying a car

4

Agent Environments

• the agent does not have complete control over its environment (influence only) (Ex: elevators in Old Main)

• deterministic vs. non-deterministic (does an action have a single guaranteed effect?)

• accessible (the agent can get complete state info) vs. inaccessible environment (Ex: stock market)

• episodic (single episode, independent of others) vs. non-episodic (history sensitive) (Ex: grades in class)

Exercise

• There are three blue hats and two brown hats.

• Three men are lined up such that the back man can see the backs of the other two, the middle man can see the back of the front man, and the front man can’t see anybody.

• One of the five hats is placed on each man's head. The remaining two hats are hidden away.

• The men are asked what color of hat they are wearing. Time passes.

• The front man correctly guesses the color of his hat.

• What color was it, and how did he guess correctly?

Concept

• Everyone else is as smart as you


Game of Chicken

• Consider another type of encounter: the game of chicken (think of James Dean in Rebel Without a Cause: swerving = cooperate, driving straight = defect).

• Difference from the prisoner’s dilemma: mutual defection is the most feared outcome.

Question:

• How do we communicate our desires to an agent?

• Desires may be muddy: you want to graduate with a 4.0, have a job making $100K a year, have opportunities for growth, and have quality of life.

• If you can’t have it all, what is most valued?

Answer: Utilities

• Assume we have just two agents: Ag = {i, j}

• Agents are assumed to be self-interested: they have preferences over how the environment is

• Assume W = {w1, w2, …} is the set of “outcomes” that agents have preferences over

• We capture preferences by utility functions, which map each outcome to a real number.

• Utility functions lead to preference orderings over outcomes.
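A minimal Python sketch of how a utility function induces a preference ordering: agent i weakly prefers w to w′ exactly when u_i(w) ≥ u_i(w′). The outcome names and utility values are hypothetical, chosen only for illustration; outcomes with equal utility end up in the same indifference class.

```python
# Hypothetical utility function u_i for agent i over outcomes W = {w1, w2, w3, w4}.
# The numbers are made up for illustration only.
u_i = {"w1": 4.0, "w2": 1.5, "w3": 4.0, "w4": -2.0}


def weakly_prefers(u, w, w_prime):
    """w is weakly preferred to w_prime iff u(w) >= u(w_prime)."""
    return u[w] >= u[w_prime]


def preference_ordering(u):
    """Group outcomes into indifference classes, most preferred first."""
    levels = sorted(set(u.values()), reverse=True)
    return [sorted(w for w in u if u[w] == level) for level in levels]


ordering = preference_ordering(u_i)
print(ordering)                         # [['w1', 'w3'], ['w2'], ['w4']]
print(weakly_prefers(u_i, "w2", "w4"))  # True
```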

What is Utility?

• Utility is not money (but it is a useful analogy)

• Typical relationship between utility & money:


Dominant Strategies

• Recall that

– Agents’ utilities depend on what strategies other agents are playing

– Agents are expected utility maximizers

• A dominant strategy is a best response for player i no matter what strategies the other players choose

– They do not always exist

– Inferior strategies are called dominated

Dominant Strategy Equilibrium

• A dominant strategy equilibrium is a strategy profile where the strategy for each player is dominant (so neither wants to change)

• Known as the “DUH” strategy.

• Nice: agents do not need to counter speculate (reciprocally reason about what others will do)!

Prisoners’ dilemma

Two people are arrested for a crime. If neither suspect confesses, both get a light sentence. If both confess, then they both get sent to jail. If one confesses and the other does not, then the confessor gets no jail time and the other gets a heavy sentence.

Payoffs (Kelly, Ned):

                          Ned: Confess    Ned: Don’t Confess
Kelly: Confess            -10, -10        0, -30
Kelly: Don’t Confess      -30, 0          -1, -1
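The dominance argument can be checked mechanically. This Python sketch encodes the prisoners' dilemma payoffs as (Kelly, Ned), with Kelly choosing the row, and lists for each player the strategies that are a best response to every strategy of the other player (ties allowed, i.e. weak dominance). The helper names are illustrative, not from any library.

```python
# Prisoners' dilemma payoffs as (Kelly, Ned); Kelly picks the row, Ned the column.
STRATEGIES = ["Confess", "Don't Confess"]
PAYOFFS = {
    ("Confess", "Confess"): (-10, -10),
    ("Confess", "Don't Confess"): (0, -30),
    ("Don't Confess", "Confess"): (-30, 0),
    ("Don't Confess", "Don't Confess"): (-1, -1),
}


def utility(player, own, other):
    """Payoff to `player` (0 = Kelly, 1 = Ned) for playing `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return PAYOFFS[profile][player]


def dominant_strategies(player):
    """Strategies that are a best response to every strategy of the opponent."""
    return [
        s for s in STRATEGIES
        if all(utility(player, s, t) >= utility(player, s2, t)
               for t in STRATEGIES for s2 in STRATEGIES)
    ]


print(dominant_strategies(0))  # ['Confess']  (Kelly)
print(dominant_strategies(1))  # ['Confess']  (Ned)
```

Since each player's only dominant strategy is Confess, (Confess, Confess) is the dominant strategy equilibrium.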

Prisoners’ dilemma

Kelly will confess: whether Ned confesses (-10 vs. -30) or not (0 vs. -1), confessing gives Kelly the better payoff.

Same holds for Ned.

Prisoners’ dilemma

So the only outcome that involves each player choosing their dominant strategies is where they both confess (-10, -10).

Solve by iterated elimination of dominated strategies.
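A minimal sketch of iterated elimination of strictly dominated strategies for a two-player game, applied to the prisoners' dilemma (Kelly picks the row, Ned the column, payoffs listed as Kelly, Ned). It repeatedly removes any strategy that another remaining strategy beats against every remaining opponent strategy; the function name is hypothetical.

```python
def iterated_elimination(rows, cols, payoff):
    """Iterated elimination of strictly dominated pure strategies (two players).

    payoff[(r, c)] is (row player's payoff, column player's payoff).
    Returns the lists of surviving row and column strategies.
    """
    rows, cols = list(rows), list(cols)
    changed = True
    while changed:
        changed = False
        # Drop one row that another remaining row beats against every remaining column.
        for r in rows:
            if any(all(payoff[(r2, c)][0] > payoff[(r, c)][0] for c in cols)
                   for r2 in rows if r2 != r):
                rows.remove(r)
                changed = True
                break
        # Drop one column that another remaining column beats against every remaining row.
        for c in cols:
            if any(all(payoff[(r, c2)][1] > payoff[(r, c)][1] for r in rows)
                   for c2 in cols if c2 != c):
                cols.remove(c)
                changed = True
                break
    return rows, cols


# Prisoners' dilemma: payoffs are (Kelly, Ned).
PD = {
    ("Confess", "Confess"): (-10, -10),
    ("Confess", "Don't Confess"): (0, -30),
    ("Don't Confess", "Confess"): (-30, 0),
    ("Don't Confess", "Don't Confess"): (-1, -1),
}
moves = ["Confess", "Don't Confess"]
print(iterated_elimination(moves, moves, PD))  # (['Confess'], ['Confess'])
```

Only one profile survives here; in games without dominated strategies (Bach or Stravinsky, for example) nothing is eliminated.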

Example: Prisoner’s Dilemma

• (Actual numbers vary in different versions of the problem, but the relative values are the same.)

• Dominant strategy equilibrium: both confess (-10, -10), which is not Pareto optimal.

• Optimal outcome: neither confesses (-1, -1). It is Pareto optimal, as are the two outcomes in which exactly one suspect confesses.
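Pareto optimality can be checked by brute force. In this sketch (hypothetical helper names), one outcome Pareto dominates another if nobody is worse off and somebody is strictly better off, and an outcome is Pareto optimal if no other outcome dominates it. The payoffs are the prisoners' dilemma values, listed as (Kelly, Ned).

```python
# Prisoners' dilemma outcomes with payoffs (Kelly, Ned).
OUTCOMES = {
    ("Confess", "Confess"): (-10, -10),
    ("Confess", "Don't Confess"): (0, -30),
    ("Don't Confess", "Confess"): (-30, 0),
    ("Don't Confess", "Don't Confess"): (-1, -1),
}


def pareto_dominates(x, y):
    """x Pareto dominates y: nobody is worse off under x and somebody is strictly better off."""
    return all(a >= b for a, b in zip(x, y)) and any(a > b for a, b in zip(x, y))


def pareto_optimal(outcomes):
    """Profiles whose payoffs are not Pareto dominated by any other outcome."""
    return [k for k, x in outcomes.items()
            if not any(pareto_dominates(y, x) for y in outcomes.values())]


for profile in pareto_optimal(OUTCOMES):
    print(profile, OUTCOMES[profile])
```

Only (Confess, Confess) is filtered out, because (-1, -1) makes both suspects better off.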

Example: Bach or Stravinsky

• A couple likes going to concerts together. One loves Bach but not Stravinsky. The other loves Stravinsky but not Bach. However, they prefer being together to being apart.

          B       S
B         2,1     0,0
S         0,0     1,2

No dominant strategy equilibrium
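A sketch that checks the Bach or Stravinsky table for dominant strategies and for pure-strategy (Nash) equilibria, i.e. profiles where each player's choice is a best response to the other's. It assumes the first payoff in each cell belongs to the row player; the helper names are illustrative.

```python
from itertools import product

STRATS = ["B", "S"]
# (row player's payoff, column player's payoff); first payoff assumed to be the row player's.
BOS = {("B", "B"): (2, 1), ("B", "S"): (0, 0),
       ("S", "B"): (0, 0), ("S", "S"): (1, 2)}


def payoff(game, player, own, other):
    """Payoff to `player` (0 = row, 1 = column) for playing `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return game[profile][player]


def is_best(game, player, s, t):
    """True if s is a best response for `player` when the opponent plays t."""
    return payoff(game, player, s, t) == max(payoff(game, player, x, t) for x in STRATS)


def is_dominant(game, player, s):
    """True if s is a best response to every strategy the opponent might use."""
    return all(is_best(game, player, s, t) for t in STRATS)


def pure_equilibria(game):
    """Profiles (row, col) where each strategy is a best response to the other."""
    return [(r, c) for r, c in product(STRATS, STRATS)
            if is_best(game, 0, r, c) and is_best(game, 1, c, r)]


print([s for s in STRATS if is_dominant(BOS, 0, s)])  # []
print([s for s in STRATS if is_dominant(BOS, 1, s)])  # []
print(pure_equilibria(BOS))  # [('B', 'B'), ('S', 'S')]
```

Neither player has a dominant strategy, yet both "go together" profiles are stable: the difficulty is coordinating on one of them.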

Example: Paying for Bus fare

• Getting back to Gatwick Airport: Steve had planned to pay for all of us, but left to find his son. Someone came for the funds. Do I pay, or say my husband will?

              Pay for 2     Pay for 4
Pay for 2     0,0           -25,-25
Not Pay       -100,-100     0,0

No dominant strategy equilibrium

Research Questions

• Can we apply game theory to solve seemingly unrelated problems?

• Ex: traffic control

• Ex: sharing operating system resources

Exercise

• You participate in a game show in which prizes of varying values occur with equal frequency. Two of you each win a prize.

• There are 10 types of prizes of varying values. Assume a prize of type 10 is the best and a prize of type 1 is the worst.

• Without knowing the other’s prize, both of you are asked whether you want to exchange the prizes you were given.

• If both want to exchange, the two exchange prizes.

• What is your strategy?

Employee Monitoring

• Employees can work hard or shirk

• Salary: $100K unless caught shirking

• Cost of effort: $50K

• Managers can monitor or not

• Value of employee output: $200K

• Profit if employee doesn’t work: $0

• Cost of monitoring: $10K
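The payoff table for this game can be derived from these parameters. The sketch below assumes that an employee caught shirking receives no salary (and bears no effort cost), that shirking is detected only when the manager monitors, and that the manager pays the monitoring cost whenever monitoring. Under those readings it reproduces the Employee Monitoring table (payoffs in $K, employee first).

```python
# Parameters from the Employee Monitoring slide, in $K.
SALARY = 100        # paid unless the employee is caught shirking
EFFORT_COST = 50    # employee's cost of working hard
OUTPUT_VALUE = 200  # value to the manager of a working employee
MONITOR_COST = 10   # manager's cost of monitoring


def payoffs(employee, manager):
    """Return (employee payoff, manager payoff) in $K for one strategy profile.

    Assumptions: shirking is caught only when monitored, a caught shirker gets no salary.
    """
    works = employee == "Work"
    monitors = manager == "Monitor"
    caught = monitors and not works
    salary = 0 if caught else SALARY
    emp = salary - (EFFORT_COST if works else 0)
    mgr = (OUTPUT_VALUE if works else 0) - salary - (MONITOR_COST if monitors else 0)
    return emp, mgr


for e in ("Work", "Shirk"):
    for m in ("Monitor", "No Monitor"):
        print(e, m, payoffs(e, m))
```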

What is your strategy?

• Work hard?

• Shirk?


Employee Monitoring

Payoffs in $K (employee, manager):

                   Manager: Monitor    Manager: No Monitor
Employee: Work     50, 90              50, 100
Employee: Shirk    0, -10              100, -100

• No equilibrium in pure strategies

• What do the players do?

Mixed Strategies

• Randomize – surprise the rival

• Mixed Strategy:

– Specifies that an actual move be chosen randomly from the set of pure strategies with some specific probabilities.
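For a 2x2 game with no pure-strategy equilibrium, such as Employee Monitoring, a mixed-strategy equilibrium can be found with the indifference method: each player randomizes so that the other player is indifferent between their two pure strategies. A minimal sketch using exact fractions and the payoffs from the Employee Monitoring table; the helper name is hypothetical, and it assumes the indifference probability exists (non-zero denominator, result between 0 and 1).

```python
from fractions import Fraction as F

# Employee Monitoring payoffs in $K, keyed by (employee move, manager move).
EMP = {("Work", "Monitor"): 50, ("Work", "No Monitor"): 50,
       ("Shirk", "Monitor"): 0, ("Shirk", "No Monitor"): 100}
MGR = {("Work", "Monitor"): 90, ("Work", "No Monitor"): 100,
       ("Shirk", "Monitor"): -10, ("Shirk", "No Monitor"): -100}


def indifference_prob(a, b, c, d):
    """Probability x on the opponent's first move that makes a player indifferent.

    Solves x*a + (1-x)*b == x*c + (1-x)*d, where (a, b) are the payoffs of the
    player's first move and (c, d) those of their second move.
    """
    return F(d - b, (a - b) - (c - d))


# Manager's probability of monitoring that leaves the employee indifferent (Work vs. Shirk).
q_monitor = indifference_prob(EMP[("Work", "Monitor")], EMP[("Work", "No Monitor")],
                              EMP[("Shirk", "Monitor")], EMP[("Shirk", "No Monitor")])
# Employee's probability of working that leaves the manager indifferent (Monitor vs. No Monitor).
p_work = indifference_prob(MGR[("Work", "Monitor")], MGR[("Shirk", "Monitor")],
                           MGR[("Work", "No Monitor")], MGR[("Shirk", "No Monitor")])
print(q_monitor, p_work)  # 1/2 9/10
```

With these payoffs the manager monitors half the time and the employee works 90% of the time; at those probabilities the employee expects $50K and the manager $80K from either pure move.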

Research question

• What features does a good solution have?

Pareto Efficient Solutions

• f represents possible solutions for two players

[Figure: possible solutions f1, f2, f3, f4 plotted against player 1's utility (U1) and player 2's utility (U2)]

Pareto Efficient Solutions

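[Figure: possible solutions f1, f2, f3, f4 plotted against U1 and U2]

• f2 Pareto dominates f3: f2 makes at least one player better off than f3 does and neither player worse off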

Auctions

• Dutch

• English

• First Price Sealed Bid

• Second Price Sealed Bid

Auction Parameters

• Goods can have

– private value (Aunt Bessie’s Brooch)

– public/common value (oil field to oil companies)

– correlated value (partially private, partially dependent on the values of others): consider the resale value

• Winner pays

– first price (highest bidder wins and pays their own bid, the highest price)

– second price (highest bidder wins but pays the second-highest bid)

• Bids may be

– open cry

– sealed bid

• Bidding may be

– one shot

– ascending

– descending
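A minimal sketch of the two sealed-bid payment rules listed above. The bidder names and bids are hypothetical, and ties go to whichever bidder was entered first (an assumption; a real auction specifies its own tie-breaking).

```python
def sealed_bid_auction(bids, rule="second"):
    """Return (winner, price) for a single-item sealed-bid auction.

    bids: dict bidder -> bid. The highest bidder wins; under "first" they pay
    their own bid, under "second" they pay the second-highest bid.
    Ties go to the bidder inserted first (sorted() is stable, even with reverse=True).
    """
    if rule not in ("first", "second"):
        raise ValueError("rule must be 'first' or 'second'")
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner, top_bid = ranked[0]
    price = top_bid if rule == "first" else ranked[1][1]
    return winner, price


bids = {"ann": 70, "bob": 85, "cy": 60}    # hypothetical sealed bids
print(sealed_bid_auction(bids, "first"))   # ('bob', 85)
print(sealed_bid_auction(bids, "second"))  # ('bob', 70)
```

Under the second-price rule a bidder's own bid decides only whether they win, not what they pay, which is why bidding one's true private value is a dominant strategy there.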

Dutch (Aalsmeer) flower auction

Research Questions

• How can we design an agent to function in the electronic marketplace?

• Given the new possibilities of electronic auctions, what “mechanisms” can be designed to elicit desirable properties?

How do you counter speculate?

• Consider a Dutch auction

• While you don’t know what the other’s valuation is, you know a range and guess at a distribution (uniform, normal, etc.)

• For example, suppose there is a single other bidder whose valuation lies in the range [a,b] with a uniform distribution. If your valuation of the item is v, what price should you bid?

• Thinking about this logically, if you bid above your valuation, you lose money even when you win. If you bid lower than your valuation, you increase your profit.

• If you bid very low, you lower the probability that you will ever get it.

What is expected profit (Dutch auction)?

• Try to maximize your expected profit.

• Expected profit (as a function of a specific bid) is the probability that you will win the bid times the amount of your profit at that price.

• Let $p$ be the price you bid for the item, $v$ your valuation, and $[a,b]$ the range over which the other bidder’s bid is uniformly distributed.

• The probability that you win the bid at this price is the fraction of the time that the other person bids lower than $p$: $\frac{p-a}{b-a}$ (for $a \le p \le b$).

• The profit you make at $p$ is $v-p$.

• Expected profit as a function of $p$ is $f(p) = (v-p)\,\frac{p-a}{b-a} + 0 \cdot \left(1 - \frac{p-a}{b-a}\right)$
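A quick Monte Carlo check of this formula under the same assumptions: a single rival whose bid is uniform on [a, b], and you win whenever the rival bids lower than p. The values v = 80, a = 20, b = 100 and the trial count are hypothetical.

```python
import random


def expected_profit(p, v, a, b):
    """Closed form from the slide: (v - p) * (p - a) / (b - a), valid for a <= p <= b."""
    return (v - p) * (p - a) / (b - a)


def simulated_profit(p, v, a, b, trials=200_000, seed=1):
    """Estimate expected profit when the single rival's bid is uniform on [a, b]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        rival = rng.uniform(a, b)
        if p > rival:      # you win when the rival bids lower than p
            total += v - p
    return total / trials


v, a, b = 80.0, 20.0, 100.0  # hypothetical valuation and rival range
for p in (30.0, 50.0, 70.0):
    print(p, round(expected_profit(p, v, a, b), 2), round(simulated_profit(p, v, a, b), 2))
```

The two columns should agree to within sampling noise, and both peak at the middle bid.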

Finding maximum profit is a simple calculus problem

• Expected profit as a function of $p$ is $f(p) = (v-p)\,\frac{p-a}{b-a}$

• Take the derivative with respect to $p$ and set it to zero. The point where the slope is zero is the maximum, since the second derivative, $f''(p) = -\frac{2}{b-a}$, is negative.

• $f(p) = \frac{1}{b-a}\left(vp - va - p^2 + pa\right)$

• $f'(p) = \frac{1}{b-a}\left(v - 2p + a\right) = 0$

• $p = \frac{a+v}{2}$ (halfway between the minimum range value $a$ and your valuation $v$)
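A brute-force check of the calculus, using hypothetical values v = 80, a = 20, b = 100: search bids between a and v on a fine grid and compare the best one with (a+v)/2. The closed form assumes the optimum falls inside [a, b]; if (a+v)/2 > b you would simply bid b, since you already win with certainty there.

```python
def expected_profit(p, v, a, b):
    """Expected profit (v - p) * P(win), with the rival's bid uniform on [a, b]."""
    win_prob = min(max((p - a) / (b - a), 0.0), 1.0)  # clamp to a valid probability
    return (v - p) * win_prob


def best_bid_by_search(v, a, b, steps=10_000):
    """Brute-force the bid in [a, v] that maximizes expected profit."""
    candidates = [a + (v - a) * k / steps for k in range(steps + 1)]
    return max(candidates, key=lambda p: expected_profit(p, v, a, b))


v, a, b = 80.0, 20.0, 100.0  # hypothetical numbers
print(best_bid_by_search(v, a, b), (a + v) / 2)  # 50.0 50.0
```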

Ultimatum Bargaining with Incomplete Information

• Player 1 begins the game by drawing a chip from the bag. Inside the bag are 30 chips ranging in value from $1.00 to $30.00.

• Both must agree on how to split the amount. Player 2 does not see the chip.

• Player 1 then makes an offer to Player 2. The offer can be any amount in the range from $0.00 up to the value of the chip.

• Player 2 can either accept or reject the offer. If accepted, Player 1 pays Player 2 the amount of the offer and keeps the rest. If rejected, both players get nothing.

Questions:

1) How much should Player 1 offer Player 2?

2) Does the amount of the offer depend on the size of the chip?

3) What should Player 2 do? Should Player 2 accept all offers or only offers above a specified amount? Explain.

Experimental Results

Composition of Urn    Mean % of Pie Offered to Receiver
0 - 30                31.2%
5 - 25                34.2%
10 - 20               42.4%

Coalition Formation

• Tasks need the skills of several workers

• Tasks have various worth

• Agents have various costs

• How do you decide who works together?

• What do you pay each one?

Research Questions

• Computing the optimal coalition is NP-hard. How do you form good coalitions in an efficient manner?

• How do you form coalitions when the information is incomplete?

• How do you form coalitions in a dynamic environment, with agents entering and leaving?
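To see why exhaustive search does not scale, here is a naive baseline (not a method from these slides): enumerate every coalition structure, i.e. every way to split the agents into disjoint coalitions, and keep the one with the highest total worth. The agents and the worth of each coalition are hypothetical; the number of structures grows as the Bell numbers (115,975 for just 10 agents).

```python
def partitions(agents):
    """Yield every way to split `agents` into non-empty coalitions (set partitions)."""
    if not agents:
        yield []
        return
    first, rest = agents[0], agents[1:]
    for part in partitions(rest):
        # put `first` into each existing coalition in turn...
        for i in range(len(part)):
            yield part[:i] + [[first] + part[i]] + part[i + 1:]
        # ...or give it a coalition of its own
        yield [[first]] + part


# Hypothetical worth of each coalition (task value minus member costs), for illustration only.
WORTH = {
    frozenset("A"): 1, frozenset("B"): 1, frozenset("C"): 2,
    frozenset("AB"): 4, frozenset("AC"): 3, frozenset("BC"): 3,
    frozenset("ABC"): 5,
}


def best_structure(agents, worth):
    """Exhaustively search all coalition structures for the highest total worth."""
    return max(partitions(agents), key=lambda s: sum(worth[frozenset(c)] for c in s))


best = best_structure(list("ABC"), WORTH)
print(best, sum(WORTH[frozenset(c)] for c in best))  # [['A', 'B'], ['C']] 6
print(sum(1 for _ in partitions(list(range(10)))))    # 115975
```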

Voting Mechanisms

• How do we make decisions that respond to various individuals’ preference functions?

• Ex: selecting new faculty based on various different evaluations

• We want to decide what to serve for refreshments on the last day of class. How do we decide?

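Borda Paradox – remove the loser, and the winner changes

(notice, c is always ahead of the removed item)

Four candidates (Borda points 4, 3, 2, 1 per ballot):

• a > b > c > d
• b > c > d > a
• c > d > a > b
• a > b > c > d
• b > c > d > a
• c > d > a > b
• a > b > c > d

a=18, b=19, c=20, d=13

Remove d (Borda points 3, 2, 1 per ballot):

• a > b > c
• b > c > a
• c > a > b
• a > b > c
• b > c > a
• c > a > b
• a > b > c

a=15, b=14, c=13

When the loser is removed, the next loser becomes the winner!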
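A minimal Borda count sketch that recomputes the totals for this paradox. It takes the seventh ballot as a > b > c > d, the ranking that reproduces the scores a=18, b=19, c=20, d=13 and a=15, b=14, c=13, and it awards n, n-1, ..., 1 points with n candidates, the scale those totals imply.

```python
from collections import Counter

# The seven ballots, best candidate first (seventh ballot assumed to be a > b > c > d).
BALLOTS = [
    "abcd", "bcda", "cdab",
    "abcd", "bcda", "cdab",
    "abcd",
]


def borda(ballots, candidates):
    """Borda count: with n remaining candidates, a ballot gives n points to its
    top remaining choice, n-1 to the next, ..., 1 to its last."""
    scores = Counter({c: 0 for c in candidates})
    n = len(candidates)
    for ballot in ballots:
        ranked = [c for c in ballot if c in candidates]  # skip removed candidates
        for position, c in enumerate(ranked):
            scores[c] += n - position
    return dict(scores)


print(borda(BALLOTS, "abcd"))  # {'a': 18, 'b': 19, 'c': 20, 'd': 13}
print(borda(BALLOTS, "abc"))   # {'a': 15, 'b': 14, 'c': 13}
```

With all four candidates c wins and d is last; once d is removed, the ranking flips to a > b > c.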

Research Question

• Do individuals always act the way the theory says they should?

• If not, why not? Is the theory wrong?

Allais Paradox

• In 1953, Maurice Allais published a paper regarding a survey he had conducted in 1952, with a hypothetical game.

• Subjects "with good training in and knowledge of the theory of probability, so that they could be considered to behave rationally", routinely violated the expected utility axioms.

• The game itself and its results have now become famous as the "Allais Paradox".

The most famous structure is the following:

Subjects are asked to choose between the following 2 gambles, i.e. which one they would like to participate in if they could:

Gamble A: A 100% chance of receiving $1 million.

Gamble B: A 10% chance of receiving $5 million, an 89% chance of receiving $1 million, and a 1% chance of receiving nothing.

After they have made their choice, they are presented with another 2 gambles and asked to choose between them:

Gamble C: An 11% chance of receiving $1 million, and an 89% chance of receiving nothing.

Gamble D: A 10% chance of receiving $5 million, and a 90% chance of receiving nothing.

• This experiment has been conducted many, many times, and most people prefer A to B, and D to C.

• So why is this a paradox?

• The expected value of A is $1 million, while the expected value of B is $1.39 million. By preferring A to B, people are presumably maximizing expected utility, not expected value.

By preferring A to B, we have the following expected utility relationship (amounts in millions of dollars):

u(1) > 0.1 * u(5) + 0.89 * u(1) + 0.01 * u(0), i.e.

0.11 * u(1) > 0.1 * u(5) + 0.01 * u(0)

Adding 0.89 * u(0) to each side, we get:

0.11 * u(1) + 0.89 * u(0) > 0.1 * u(5) + 0.90 * u(0),

implying that an expected utility maximizer consistent with the first choice must prefer C to D.

• The expected value of C is $110,000, while the expected value of D is $500,000, so if people were maximizing expected value, they should in fact prefer D to C. However, under expected utility, preferring D to C is inconsistent with having preferred A to B in the first stage, and herein lies the paradox.
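The inconsistency can be checked numerically. This sketch represents each gamble as {prize in $ millions: probability}, computes the expected values, and then confirms for many randomly drawn increasing utility values u(0) ≤ u(1) ≤ u(5) that EU(A) − EU(B) always equals EU(C) − EU(D). The random sampling is only an illustration; the algebra above is the actual proof.

```python
import random

# Gambles as {prize in $ millions: probability}.
A = {1: 1.00}
B = {5: 0.10, 1: 0.89, 0: 0.01}
C = {1: 0.11, 0: 0.89}
D = {5: 0.10, 0: 0.90}


def expected(gamble, u=lambda x: x):
    """Expected utility of a gamble; with the default identity u it is the expected value."""
    return sum(p * u(x) for x, p in gamble.items())


print([round(expected(g), 2) for g in (A, B, C, D)])  # [1.0, 1.39, 0.11, 0.5]

# For any utility function, EU(A) - EU(B) equals EU(C) - EU(D), so an expected
# utility maximizer who prefers A to B must also prefer C to D.
rng = random.Random(0)
for _ in range(1000):
    u0, u1, u5 = sorted(rng.uniform(0, 100) for _ in range(3))  # random increasing utility values
    u = {0: u0, 1: u1, 5: u5}.get
    gap_ab = expected(A, u) - expected(B, u)
    gap_cd = expected(C, u) - expected(D, u)
    assert abs(gap_ab - gap_cd) < 1e-9
print("EU(A) - EU(B) == EU(C) - EU(D) for every sampled utility")
```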