

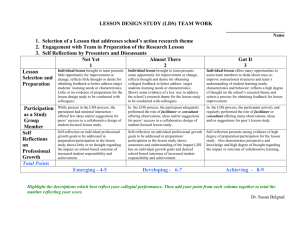

1 Understanding City Traffic Dynamics Utilizing Sensor and Textual Observations
Pramod Anantharam, Krishnaprasad Thirunarayan, Surendra Marupudi, Amit Sheth, and Tanvi Banerjee
Ohio Center of Excellence in Knowledge-enabled Computing (Kno.e.sis), Wright State University, USA
The Thirtieth AAAI Conference on Artificial Intelligence (AAAI-16), February 12–17, Phoenix, Arizona, USA.

2 Multimodal Manifestation of Events: Traffic Scenario

3 Multimodal Data Integration: Traffic Scenario
• Why?
  – Explain/interpret average speed and link travel time variations using events provided by city authorities and traffic events shared on Twitter
  – Past work: predict congestion based on historical sensor data
• What?
  – Combine
    • 511.org data about Bay Area road network traffic
      – E.g., average speed and link travel time data stream (sensor data)
      – E.g., (happened or planned) event reports (textual data)
    • Tweets that report events, including ad hoc ones (textual data)

4 Multimodal Data Integration: Traffic Scenario
• How?
  – Step 1: Extract textual events from the tweet stream
  – Step 2: Build statistical models of normalcy, and thereby anomaly, for sensor time series data
  – Step 3: Correlate multimodal streams, using spatio-temporal information, to explain “anomalies” in sensor time series data with textual events

5 Multimodal Data Integration: Traffic Scenario
• How?
  – Step 1: Extract textual events from the tweet stream
  – Step 2: Build statistical models of normalcy, and thereby anomaly, from numerical sensor data streams
  – Step 3: Correlate multimodal streams, using spatio-temporal information, to explain “anomalies” in sensor time series data with textual events

6 Extracting Textual Events from Tweets: Annotation + Extraction
39,208 traffic-related incidents extracted from over 20 million tweets¹
NER: Named Entity Recognition
OSM: OpenStreetMap
Pramod Anantharam, Payam Barnaghi, Krishnaprasad Thirunarayan, and Amit Sheth. 2015. Extracting City Traffic Events from Social Streams.
ACM Trans. Intell. Syst. Technol. 6, 4, Article 43 (July 2015), 27 pages. DOI: 10.1145/2717317, http://doi.acm.org/10.1145/2717317
¹ Event Extraction Tool on the Open Science Framework: https://osf.io/b4q2t/wiki/home/

7 Multimodal Data Integration: Traffic Scenario
• How?
  – Step 1: Extract textual events from the tweet stream
  – Step 2: Build statistical models of normalcy, and thereby anomaly, from numerical sensor data streams
  – Step 3: Correlate multimodal streams, using spatio-temporal information, to explain “anomalies” in sensor time series data with textual events

8 Building Normalcy Models of Traffic Dynamics*: Challenges
• Multiple events
• Event interactions
• Varying influence
[Figure: speed in km/h against time of day (approx. 1 observation/minute). Image credit: http://traffic.511.org/index]
* Traffic dynamics here refers to speed and travel time variations observed in sensor data.

9 Possible Causes of Nonlinearity in Traffic Dynamics
• Temporal landmarks: peak hour vs. off-peak traffic vs. weekend traffic
• Effect of location
• Scheduled events such as road construction, a baseball game, or a music concert
• Unexpected events such as accidents, heavy rain, or fog
• Random variations (viz., stochasticity) such as people visiting downtown by mere coincidence

10 Modeling City Traffic Dynamics: A Closer Look
• Events
  – Do we know all the events?
  – Do we know all the event interactions?
• People Influx
  – Do we know the number of event participants?
• Vehicle Influx
  – How are the participants traveling?
  – Do we know the vehicle volume on the road?
• Vehicle Speed (speed of vehicles reported by sensors on the road)
  – Do we know the speed of vehicles on the road?
  – What is the nature of the speed time series data?
Legend: Hidden State, Observed Evidence
Image credits: http://bit.ly/1N1wu5g, http://bit.ly/1O8d9gn, http://bit.ly/1N8L5tf, http://bit.ly/1HLDYui

11 From the Nature of the Problem to a Linear Dynamical System (LDS)
1. There are both hidden states and observed evidence
[Diagram: chain over v_1, v_2, …, v_T and s_1, s_2, …, s_T]
2.
Current observed evidence depends on the current hidden state
[Diagram: chain over v_1, v_2, …, v_T and s_1, s_2, …, s_T]
3. Current hidden state depends on the previous hidden state
[Diagram: chain over v_1, v_2, …, v_T and s_1, s_2, …, s_T]
• For simplicity of explanation, we consider vehicle influx as a hidden variable and the observed speed as the evidence variable.
• Vehicle influx at a certain point in time t would influence the speed of vehicles at the same time t.
• Vehicle influx at a certain point in time t depends only on the previous vehicle influx (first-order Markov process).

12 Linear Dynamical Systems Model
[Diagram: chain over v_1, v_2, …, v_T and s_1, s_2, …, s_T]
• The transition model is specified by A_t and the observation model is specified by B_t, along with the associated noise.
• Replacing the discrete-valued state and observation nodes in the rain example (previous slide) with continuous-valued states and observations, we get an LDS model that has transition and observation models.
• The joint distribution over all the hidden and observed variables is shown along with the conditional distributions.
Barber, David. Bayesian Reasoning and Machine Learning. Cambridge University Press, 2012.

13 Hourly Traffic Dynamics
[Figure: hourly speed time series over all Mondays between Aug. 2014 and Jan. 2015. x-axis: observation number for each hour of day; y-axis: average speed of vehicles in km/h]

14 Learning Context Specific LDS Models
Step 1: Index the data for each link by day of week and hour of day, utilizing traffic domain knowledge for a piecewise linear approximation
Step 2: Find the “typical” dynamics by computing the mean and choosing the medoid for each hour of day and day of week
Step 3: Learn LDS parameters for the medoid for each hour of day (24 hours) and each day of week (7 days), resulting in 24 × 7 = 168 models for each link
[Figure: speed/travel-time time series data from a link, arranged as time series data for each hour of day h_j (1–24) and each day of week d_i (Monday–Sunday)]
[Figure: mean time series computed for each day of week and hour of day along with the medoid; the learned models form a 7 × 24 grid LDS(1,1), LDS(1,2), …, LDS(1,24), …, LDS(7,1), LDS(7,2), …, LDS(7,24)]
168 LDS models for each link; total models learned = 425,712, i.e., 2,534 links × 168 models per link

15 Utilizing Context Specific LDS Models to Learn Normalcy
[Figure: log-likelihood score]

16 Tagging Anomalies using Context Specific LDS Models
[Flowchart]
• Input: speed and travel-time observations from a link
• Both branches partition the data based on (d_i, h_j)
• Train?
  – Yes (training phase): using the 7 × 24 models LDS(1,1), …, LDS(7,24), compute the log likelihood for each hour of observed data; the min. and max. log-likelihood values obtained from the five-number summary form the 7 × 24 matrix L = Lik(1,1), Lik(1,2), …, Lik(7,24), indexed by (d_i, h_j)
  – No: tag anomalous hours using the log-likelihood range for (d_i, h_j)
• Output: anomalies

17 Multimodal Data Integration: Traffic Scenario
• How?
  – Step 1: Extract textual events from the tweet stream
  – Step 2: Build statistical models of normalcy, and thereby anomaly, from numerical sensor data streams
  – Step 3: Correlate multimodal streams, using spatio-temporal information, to explain “anomalies” in sensor time series data with textual events

18 Explaining Anomalies in Sensor Data using Textual Events
[Diagram: city traffic events from Twitter (Anantharam 2014), https://osf.io/b4q2t/wiki/home/, are linked to anomalies in link sensor data by the relations Explains and Explained_by, which hold if there is an overlap between Δt_e and Δt_a]
Event from city tweets: ⟨e_t, e_l, e_st, e_et, e_i⟩, with Δt_e = e_st ~ e_et
Anomaly in link sensor data: ⟨a_st, a_et⟩, with Δt_a = (a_st − 1 hour) ~ (a_et + 1 hour)
• If an anomaly is detected on a link L during the time period [t_st, t_et], then the anomaly is explained by an event if the event occurred in the vicinity (within a 0.5 km radius) and during [t_st − 1, t_et + 1], i.e., from one hour before the anomaly starts to one hour after it ends.
• CAVEAT: An anomaly may not be explained because of missing data.
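The explanation rule on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the `Event` and `Anomaly` classes, the use of a single representative point per link, and the haversine distance are all hypothetical choices. Only the 0.5 km radius and the 1-hour slack come from the slide.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt


@dataclass(frozen=True)
class Event:
    """A textual event with a location and a time span (hypothetical schema)."""
    lat: float
    lon: float
    start: datetime
    end: datetime


@dataclass(frozen=True)
class Anomaly:
    """An anomalous period on a link; lat/lon is an assumed representative point."""
    lat: float
    lon: float
    start: datetime
    end: datetime


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km (assumed distance measure)."""
    earth_radius_km = 6371.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_km * asin(sqrt(a))


def explains(event: Event, anomaly: Anomaly,
             radius_km: float = 0.5,
             slack: timedelta = timedelta(hours=1)) -> bool:
    """True if the event overlaps the widened anomaly window and is nearby."""
    window_start = anomaly.start - slack   # a_st - 1 hour
    window_end = anomaly.end + slack       # a_et + 1 hour
    overlaps = event.start <= window_end and event.end >= window_start
    nearby = haversine_km(event.lat, event.lon, anomaly.lat, anomaly.lon) <= radius_km
    return overlaps and nearby


# Usage with made-up coordinates and times.
anomaly = Anomaly(37.7749, -122.4194, datetime(2014, 9, 1, 8), datetime(2014, 9, 1, 9))
near_event = Event(37.7779, -122.4194, datetime(2014, 9, 1, 9, 30), datetime(2014, 9, 1, 10))
far_event = Event(37.7849, -122.4194, datetime(2014, 9, 1, 9, 30), datetime(2014, 9, 1, 10))
late_event = Event(37.7779, -122.4194, datetime(2014, 9, 1, 10, 30), datetime(2014, 9, 1, 11))
```

Here `near_event` lies roughly 0.33 km from the anomaly and overlaps the widened window, so it would count as an explanation; `far_event` (about 1.1 km away) and `late_event` (starting after the window closes) would not.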
19 Real-World Dataset and Scalability Issues
• Data collected from the San Francisco Bay Area between May 2014 and May 2015
  – 511.org:
    • 1,638 traffic incident reports
    • 1.4 billion speed and travel time observations
  – Twitter data: 39,208 traffic-related incidents extracted from over 20 million tweets¹
• A naïve implementation for learning normalcy models for 2,534 links took 40 minutes per link (~2 months of processing time for our data)
  – 2.66 GHz Intel Core 2 Duo with 8 GB main memory
• A scalable implementation that exploits the nature of the problem learned the normalcy models within 24 hours
  – The Apache Spark cluster used in our evaluation has 864 cores and 17 TB main memory.
¹ Extracting city traffic events from social streams. https://osf.io/b4q2t/wiki/home/

20 Evaluation Results

21 Conclusion
• Events extracted from social media can complement sensor data.
• Knowledge of the domain can be utilized for a piecewise linear approximation of non-linear speed dynamics.
• Events reported by people are more likely to manifest in sensor data than traffic events reported by formal sources.
• Short-term events manifest as anomalies in sensor data, while long-term events may not result in anomaly manifestations.

22 Acknowledgements
This material is based upon work supported by the National Science Foundation under Grant No. EAR 1520870 titled “Hazards SEES: Social and Physical Sensing Enabled Decision Support for Disaster Management and Response”. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

23 Thank you
Thank you, and please visit us at http://knoesis.org
Hazards SEES: Social and Physical Sensing Enabled Decision Support for Disaster Management and Response
Link to the paper: http://www.knoesis.org/library/resource.php?id=2228