lecture slides

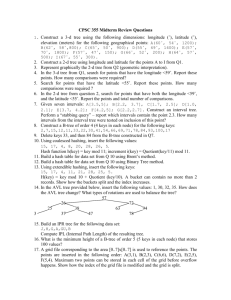

CS232A: Database System Principles
INDEXING

Indexing
Given a condition on an attribute, find the qualified records.
[Figure: a query "Attr = value ?" is matched against the file, and the records holding that value are returned as the qualified records]
The condition may also be
• Attr>value
• Attr>=value

Indexing
• Data structures used for quickly locating tuples that meet a specific type of condition
  – Equality condition: find Movie tuples where Director=X
  – Other conditions possible, e.g., range conditions: find Employee tuples where Salary>40 AND Salary<50
• Many types of indexes. Evaluate them on
  – Access time
  – Insertion time
  – Deletion time
  – Disk space needed (esp. as it affects access time)

Topics
• Conventional indexes
• B-trees
• Hashing schemes

Terms and Distinctions
• Primary index – the index on the attribute (a.k.a. search key) that determines the sequencing of the table
• Secondary index – index on any other attribute
• Dense index – every value of the indexed attribute appears in the index
• Sparse index – many values do not appear

A Dense Primary Index
[Figure: dense index with entries 10 20 30 40 50 70 80 90 100 120 140 150 over a sequential file with keys 10 20 30 40 50 70 80 90 100 120]

Dense and Sparse Primary Indexes
Dense Primary Index
[Figure: dense index 10 20 30 40 50 70 80 90 100 120 140 150 over the file 10 20 30 40 50 70 80 90 100 120]
+ can tell if a value exists without accessing the file (consider projection)
+ better access to overflow records
Sparse Primary Index
[Figure: sparse index 10 30 50 80 100 140 160 200 over the file 10 20 30 40 50 70 80 90 100 120]
Find the index record with the largest value that is less than or equal to the value we are looking for.
+ less index space
More + and - in a while.

Sparse vs. Dense Tradeoff
• Sparse: less index space per record, so more of the index can be kept in memory
• Dense: can tell if any record exists without accessing the file
(Later:
  – sparse better for insertions
  – dense needed for secondary indexes)

Multi-Level Indexes
• Treat the index as a file and build an index on it
• “Two levels are usually sufficient. More than three levels are rare.”
• Q: Can we build a dense second-level index for a dense index?
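The sparse-index lookup rule stated earlier (find the index record with the largest value that is less than or equal to the search value) can be sketched in Python. This is only an illustration under stated assumptions: in-memory lists stand in for disk blocks, each block holds two records, and the names `blocks`, `build_sparse_index`, and `lookup` are hypothetical rather than part of the course material.

```python
from bisect import bisect_right

# Sequential file from the slides, split into blocks of two records each
# (the block size of 2 is an assumption made for this sketch).
blocks = [[10, 20], [30, 40], [50, 70], [80, 90], [100, 120]]


def build_sparse_index(blocks):
    """Create one index entry per block: (first key in block, block number)."""
    return [(block[0], block_no) for block_no, block in enumerate(blocks)]


def lookup(index, blocks, key):
    """Return (block number, slot) for key, or None if the key is absent."""
    index_keys = [k for k, _ in index]
    # Position of the largest index key that is <= the search key.
    pos = bisect_right(index_keys, key) - 1
    if pos < 0:
        return None  # the key is smaller than every indexed key
    block_no = index[pos][1]
    block = blocks[block_no]  # stands in for the single block access
    if key in block:
        return (block_no, block.index(key))
    return None


index = build_sparse_index(blocks)
```

Note that `lookup(index, blocks, 45)` has to inspect block 1 before it can report that 45 is absent, whereas a dense index could answer that question from the index alone.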
[Figure: multi-level index. Second-level sparse index 10 100 250 400 600 750 920 1000; first-level sparse index 10 30 50 80 100 140 160 200 250 270 300 350 400 460 500 550; sequential file 10 20 30 40 50 70 80 90 100 120]

A Note on Pointers
• Record pointers consist of a block pointer and the position of the record in the block
• Using the block pointer only saves space at no extra disk access cost

Representation of Duplicate Values in Primary Indexes
• Index may point to the first instance of each value only
[Figure: index 10 40 70 100 over the file 10 10 10 40 40 70 70 70 100 120]

Deletion from Dense Index
Delete 40, 80
• Deletion from a dense primary index file with no duplicate values is handled in the same way as deletion from a sequential file
• Q: What about deletion from a dense primary index with duplicates?
[Figure: index with header, 10 20 30 50 70 90; file with header, 10 20 30 50 70 90 100 120; the headers are labeled "Lists of available entries"]

Deletion from Sparse Index
Delete 40
• if the deleted entry does not appear in the index, do nothing
[Figure: index 10 30 50 80 100 140 160 200; file with header, 10 20 30 50 70 80 90 100 120]

Deletion from Sparse Index (cont’d)
Delete 30
• if the deleted entry does not appear in the index, do nothing
• if the deleted entry appears in the index, replace it with the next search-key value
  – comment: we could leave the deleted value in the index, assuming that no part of the system may assume it still exists without checking the block
[Figure: index 10 40 50 80 100 140 160 200; file with header, 10 20 40 50 70 80 90 100 120]

Deletion from Sparse Index (cont’d)
Delete 40, then 30
• if the deleted entry does not appear in the index, do nothing
• if the deleted entry appears in the index, replace it with the next search-key value
• unless the next search key value has its own index entry.
In this case delete the entry.
[Figure: index with header, 10 50 80 100 140 160 200; file with header, 10 20 50 70 80 90 100 120]

Insertion in Sparse Index
Insert 35
• if no new block is created, then do nothing
[Figure: index 10 30 50 80 100 140 160 200; file with header, 10 20 30 35 50 70 80 90 100 120]

Insertion in Sparse Index
Insert 15
• if no new block is created, then do nothing
• else create an overflow record
  – Reorganize periodically
  – Could we claim space of the next block?
  – How often do we reorganize, and how expensive is it?
  – B-trees offer convincing answers
[Figure: index 10 30 50 80 100 140 160 200; file with header, 10 20 30 50 70 80 90 100 120]

Secondary indexes
File not sorted on secondary search key
[Figure: file with a sequence field, holding secondary key values in storage order 30 50 20 70 80 40 100 10 90 60]
• Sparse index
[Figure: sparse index 30 20 80 100 90 ... over the same file]
does not make sense!
• Dense index
[Figure: dense first-level index 10 20 30 40 50 60 70 ... over the same file, with a sparse high level 10 50 90 ...]
First level has to be dense; next levels are sparse (as usual)

Duplicate values & secondary indexes
[Figure: file with secondary key values in storage order 20 10 20 40 10 40 10 40 30 40]
one option...
[Figure: dense index with one entry per record, 10 10 10 20 20 30 40 40 40 40 ..., over the same file]
Problem: excess overhead!
• disk space
• search time
another option: lists of pointers
[Figure: index 10 20 30 40, each entry carrying a list of pointers into the same file]
Problem: variable size records in index!
Yet another idea: chain records with same key?
[Figure: index 10 20 30 40 50 60 ... over the same file, with records of equal key chained together]
Problems:
• Need to add fields to records, messes up maintenance
• Need to follow chain to know records
buckets
[Figure: index 10 20 30 40 50 60 ... pointing into buckets of pointers, which in turn point to the records of the same file]

Why the “bucket” idea is useful
• Enables the processing of queries working with pointers only.
• Very common technique in Information Retrieval
Indexes                Records
Name: primary          EMP (name,dept,year,...)
Dept: secondary
Year: secondary
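The bucket idea can be sketched in Python as a map from each key value to a list of record pointers. This is a minimal illustration, not the course's implementation: the sample EMP rows, the use of list positions as record pointers, and the names `build_bucket_index` and `pointers_for` are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical EMP (name, dept, year) records; the list position of a
# record plays the role of its record pointer.
emp = [
    ("Aaron", "Suits", 4), ("Helen", "Pens", 3),
    ("Jack", "PCs", 4), ("Jim", "Toys", 4),
    ("Joe", "Toys", 3), ("Nick", "PCs", 2),
    ("Walt", "Toys", 5), ("Yannis", "Pens", 1),
]


def build_bucket_index(records, field):
    """Map each distinct key value to a bucket (list) of record pointers."""
    buckets = defaultdict(list)
    for ptr, rec in enumerate(records):
        buckets[rec[field]].append(ptr)
    return buckets


dept_index = build_bucket_index(emp, 1)  # secondary index on dept
year_index = build_bucket_index(emp, 2)  # secondary index on year


def pointers_for(dept, year):
    """Intersect two buckets; no record is read during this step."""
    dept_ptrs = set(dept_index.get(dept, []))
    year_ptrs = set(year_index.get(year, []))
    return sorted(dept_ptrs & year_ptrs)


matches = pointers_for("Toys", 4)
names = [emp[p][0] for p in matches]  # records are fetched only here
```

Each index entry stays fixed-size (one key plus one bucket reference) even when a key has many duplicates, and the conjunction is resolved on pointers before any record is touched.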
Advantage of Buckets: Process Queries Using Pointers Only
Find employees of the Toys dept with 4 years in the company
SELECT Name FROM Employee WHERE Dept=“Toys” AND Year=4
Dept Index: Toys, PCs, Pens, Suits
Year Index: 1, 2, 3, 4
Name     Dept    Year
Aaron    Suits   4
Helen    Pens    3
Jack     PCs     4
Jim      Toys    4
Joe      Toys    3
Nick     PCs     2
Walt     Toys    5
Yannis   Pens    1
Intersect the Toys bucket and the Year=4 bucket to get the set of matching EMPs.

This idea is used in text information retrieval
[Figure: the index terms "cat" and "dog" point to buckets, known as inverted lists, whose pointers lead to the documents "...the cat is fat...", "...my cat and my dog like each other...", and "...Fido the dog..."]

Information Retrieval (IR) Queries
• Find articles with “cat” and “dog”
  – Intersect inverted lists
• Find articles with “cat” or “dog”
  – Union inverted lists
• Find articles with “cat” and not “dog”
  – Subtract list of dog pointers from list of cat pointers
• Find articles with “cat” in title
• Find articles with “cat” and “dog” within 5 words

Common technique: more info in inverted list
[Figure: inverted lists for "cat" and "dog" whose postings carry extra information, such as the field and position of the occurrence (Title 5, Author 10, Abstract 57; Title 100, Title 12), and point to documents d1, d2, d3]

Posting: an entry in an inverted list. Represents an occurrence of a term in an article.
Size of a list (in postings): from 1 (rare words or misspellings) to 10^6 (common words)
Size of a posting: 10-15 bits (compressed)

Vector space model
          w1 w2 w3 w4 w5 w6 w7 …
DOC   = < 1  0  0  1  1  0  0 …>
Query = < 0  0  1  1  0  0  0 …>
PRODUCT = 1 + ……. = score

• Tricks to weigh scores + normalize
e.g.: a match on a common word is not as useful as a match on rare words...
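The inverted-list queries and the vector space score above can be sketched in a few lines of Python. This is an illustration under assumptions: the document identifiers `d1` to `d3` are assigned arbitrarily to the three sample texts, Python sets stand in for inverted lists, and `build_inverted_lists` and `score` are hypothetical names.

```python
from collections import defaultdict

# Sample documents; which text is d1, d2, or d3 is an assumption.
docs = {
    "d1": "the cat is fat",
    "d2": "my cat and my dog like each other",
    "d3": "Fido the dog",
}


def build_inverted_lists(docs):
    """Map each word to the set of documents that contain it."""
    inverted = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            inverted[word].add(doc_id)
    return inverted


inv = build_inverted_lists(docs)

cat_and_dog = inv["cat"] & inv["dog"]  # intersect inverted lists
cat_or_dog = inv["cat"] | inv["dog"]   # union inverted lists
cat_not_dog = inv["cat"] - inv["dog"]  # subtract dog pointers from cat pointers


def score(doc_vec, query_vec):
    """Unweighted vector space score: dot product of the two term vectors."""
    return sum(d * q for d, q in zip(doc_vec, query_vec))


doc_vec = [1, 0, 0, 1, 1, 0, 0]    # DOC vector from the slide (first 7 terms)
query_vec = [0, 0, 1, 1, 0, 0, 0]  # Query vector from the slide
```

Only the term shared by document and query (w4) contributes to the score here; the weighting and normalization tricks mentioned above would replace the 0/1 entries with real-valued weights.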
Summary of Indexing So Far
• Basic topics in conventional indexes
  – multiple levels
  – sparse/dense
  – duplicate keys and buckets
  – deletion/insertion similar to sequential files
• Advantages
  – simple algorithms
  – index is a sequential file
• Disadvantages
  – eventually sequentiality is lost because of overflows; reorganizations are needed

Example
[Figure: index stored contiguously (sequential) as 10 20 30, 40 50 60, 70 80 90 with free space, into which 33 has been placed; overflow area (not sequential) holding 39 31 35 36 32 38 34]

Outline:
• Conventional indexes
• B-Trees (NEXT)
• Hashing schemes

• NEXT: Another type of index
  – Give up on sequentiality of index
  – Try to get “balance”

B+Tree Example, n=3
[Figure: root 100; non-leaf nodes 30 and 120 150 180; leaves 3 5 11, 30 35, 100 101 110, 120 130, 150 156 179, 180 200]

Sample non-leaf
[Figure: node with keys 57 81 95 and four pointers: to keys k < 57, to keys 57 ≤ k < 81, to keys 81 ≤ k < 95, to keys k ≥ 95]

Sample leaf node:
[Figure: node with keys 57 81 95, reached from a non-leaf node; pointers to the record with key 57, to the record with key 81, and to the record with key 95, plus a pointer to the next leaf in sequence]

In textbook’s notation, n=3
[Figure: the leaf with keys 30 35 and the non-leaf with key 30 drawn in the textbook's notation]

Size of nodes (fixed): n+1 pointers, n keys

Non-root nodes have to be at least half-full
• Use at least
  Non-leaf: ⌈(n+1)/2⌉ pointers
  Leaf: ⌊(n+1)/2⌋ pointers to data

[Figure, n=3: a full non-leaf node 120 150 180 and a minimum non-leaf node 30; a minimum leaf node 30 35]
[Figure, continued: a full leaf node 3 5 11]

B+tree rules (tree of order n)
(1) All leaves at same lowest level (balanced tree)
(2) Pointers in leaves point to records, except for the “sequence pointer”
(3) Number of pointers/keys for B+tree
                      Max ptrs  Max keys  Min ptrs→data  Min keys
Non-leaf (non-root)   n+1       n         ⌈(n+1)/2⌉      ⌈(n+1)/2⌉-1
Leaf (non-root)       n+1       n         ⌊(n+1)/2⌋      ⌊(n+1)/2⌋
Root                  n+1       n         1              1

Insert into B+tree
(a) simple case – space available in leaf
(b) leaf overflow
(c) non-leaf overflow
(d) new root

(a) Insert key = 32, n=3
[Figure: root 100; non-leaf 30; leaves 3 5 11 and 30 31. Key 32 goes into the free slot of leaf 30 31, giving 30 31 32]

(b) Insert key = 7, n=3
[Figure: root 100; non-leaf 30; leaves 3 5 11 and 30 31. The full leaf 3 5 11 splits into 3 5 and 7 11, and key 7 is added to the parent, giving 7 30]

(c) Insert key = 160, n=3
[Figure: root 100; non-leaf 120 150 180; leaves include 150 156 179 and 180 200. Leaf 150 156 179 splits into 150 156 and 160 179; the full parent splits into 120 150 and 180, and key 160 moves up next to 100]

(d) New root, insert 45, n=3
[Figure: root 10 20 30; leaves 1 2 3, 10 12, 20 25, 30 32 40. Leaf 30 32 40 splits into 30 32 and 40 45; the full root splits into 10 20 and 40 under a new root 30]

Deletion from B+tree
(a) Simple case - no example
(b) Coalesce with neighbor (sibling)
(c) Re-distribute keys
(d) Cases (b) or (c) at non-leaf

(b) Coalesce with sibling, n=4
– Delete 50
[Figure: parent 10 40 100; leaves 10 20 30 and 40 50. After the deletion, the remaining key 40 joins the sibling, giving 10 20 30 40, and key 40 leaves the parent]

(c) Redistribute keys, n=4
– Delete 50
[Figure: parent 10 40 100; leaves 10 20 30 35 and 40 50. After the deletion, key 35 moves over from the sibling, giving 10 20 30 and 35 40, and the parent key 40 becomes 35]

(d) Non-leaf coalesce, n=4
– Delete 37
[Figure: root 25; non-leaf nodes 10 20 and 30 40; leaves 1 3, 10 14, 20 22, 25 26, 30 37, 40 45. After the deletion the leaves coalesce into 30 40 45, and the non-leaf nodes coalesce into the new root 10 20 25 40]

B+tree deletions in practice
– Often, coalescing is not implemented
– Too hard and not worth it!

Comparison: B-trees vs. static indexed sequential file
Ref #1: Held & Stonebraker, “B-Trees Re-examined,” CACM, Feb. 1978

Ref #1 claims:
- Concurrency control harder in B-Trees
- B-tree consumes more space
For their comparison:
block = 512 bytes
key = pointer = 4 bytes
4 data records per block

Example: 1 block static index
[Figure: one index block holding 127 keys k1 k2 k3 ..., one key per data block, over up to 127 contiguous data blocks]
(127+1) x 4 = 512 bytes
-> pointers in index implicit!

Example: 1 block B-tree
[Figure: one B-tree block holding 63 keys k1 k2 ... k63 with a pointer per key and a next pointer, over up to 63 data blocks]
63 x (4+4) + 8 = 512 bytes
-> pointers needed in B-tree blocks because index and data are not contiguous

Size comparison (Ref. #1)
Static index
# data blocks               height
2 -> 127                    2
128 -> 16,129               3
16,130 -> 2,048,383         4
B-tree
# data blocks               height
2 -> 63                     2
64 -> 3,969                 3
3,970 -> 250,047            4
250,048 -> 15,752,961       5

Ref. #1 analysis claims
• For an 8,000 block file, after 32,000 inserts, after 16,000 lookups, the static index saves enough accesses to allow for reorganization
Ref. #1 conclusion: Static index better!!

Ref #2: M. Stonebraker, “Retrospective on a database system,” TODS, June 1980
Ref. #2 conclusion: B-trees better!!
• DBA does not know when to reorganize
  – Self-administration is an important target
• DBA does not know how full to load pages of a new index
• Buffering
  – B-tree: has fixed buffer requirements
  – Static index: large & variable size buffers needed due to overflow

• Speaking of buffering…
Is LRU a good policy for B+tree buffers?
Of course not!
Should try to keep the root in memory at all times (and perhaps some nodes from the second level)

Interesting problem:
For B+tree, how large should n be?
(n is the number of keys per node)

Assumptions
• You have the right to set the disk page size for the disk where a B-tree will reside.
• Compute the optimum page size n assuming that
  – The items are 4 bytes long and the pointers are also 4 bytes long.
  – Time to read a node from disk is 12 + 0.003n
  – Time to process a block in memory is unimportant
  – B+tree is full (i.e., every page has the maximum number of items and pointers)

Can get: f(n) = time to find a record
[Figure: plot of f(n) against n, with its minimum at nopt]

FIND nopt by f’(n) = 0
Answer should be nopt = “few hundred”
What happens to nopt as
• Disk gets faster?
• CPU gets faster?

Variation on B+tree: B-tree (no +)
• Idea:
  – Avoid duplicate keys
  – Have record pointers in non-leaf nodes

[Figure: B-tree non-leaf node with keys K1 K2 K3 and record pointers P1 P2 P3 to the records with K1, K2, K3; tree pointers to keys < K1, to keys K1<x<K2, to keys K2<x<K3, and to keys > K3]

[Figure: B-tree with non-leaf nodes 25 45, 85 105, 145 165 over leaves 10 20, 30 40, 50 60, 70 80, 90 100, 110 120, 130 140, 150 160, 170 180]
• sequence pointers not useful now! (but keep space for simplicity)
[Figure caption: B-tree example, n=2, root 65 125]

So, for B-trees:
                      MAX                            MIN
                      Tree ptrs  Rec ptrs  Keys      Tree ptrs   Rec ptrs      Keys
Non-leaf, non-root    n+1        n         n         ⌈(n+1)/2⌉   ⌈(n+1)/2⌉-1   ⌈(n+1)/2⌉-1
Leaf, non-root        1          n         n         1           ⌊(n+1)/2⌋     ⌊(n+1)/2⌋
Root, non-leaf        n+1        n         n         2           1             1
Root, leaf            1          n         n         1           1             1

Tradeoffs:
+ B-trees have marginally faster average lookup than B+trees (assuming the height does not change)
- in B-tree, non-leaf & leaf nodes have different sizes
- smaller fan-out
- in B-tree, deletion is more complicated
B+trees preferred!

Example:
- Pointers: 4 bytes
- Keys: 4 bytes
- Blocks: 100 bytes (just an example)
- Look at a full 2-level tree

B-tree:
Root has 8 keys + 8 record pointers + 9 son pointers = 8x4 + 8x4 + 9x4 = 100 bytes
Each of 9 sons: 12 rec. pointers (+12 keys) = 12x(4+4) + 4 = 100 bytes
2-level B-tree, max # records = 12x9 + 8 = 116

B+tree:
Root has 12 keys + 13 son pointers = 12x4 + 13x4 = 100 bytes
Each of 13 sons: 12 rec. ptrs (+12 keys) = 12x(4+4) + 4 = 100 bytes
2-level B+tree, max # records = 13x12 = 156

So...
[Figure: the B+tree has 13 leaves holding 156 records; the B-tree has 8 records in the root and 9 leaves holding 108 records, total = 116]
• Conclusion:
  – For fixed block size,
  – B+ tree is better because it is bushier

Outline/summary
• Conventional Indexes
  • Sparse vs. dense
  • Primary vs. secondary
• B trees
  • B+trees vs. B-trees
  • B+trees vs. indexed sequential
• Hashing schemes --> Next

Hashing
• hash function h(key) returns the address of a bucket or record
• for a secondary index, buckets are required
• if the keys for a specific hash value do not fit into one page, the bucket is a linked list of pages
[Figure: key → h(key) → buckets → records; key → h(key) → records]

Example hash function
• Key = ‘x1 x2 … xn’, an n-byte character string
• Have b buckets
• h: add x1 + x2 + ….. xn
  – compute sum modulo b

This may not be the best function …
Read Knuth Vol. 3 if you really need to select a good function.
Good hash function: expected number of keys/bucket is the same for all buckets

Within a bucket:
• Do we keep keys sorted?
• Yes, if CPU time is critical & inserts/deletes are not too frequent

Next: example to illustrate inserts, overflows, deletes (keys are placed by h(K))

EXAMPLE: 2 records/bucket
INSERT:
h(a) = 1
h(b) = 2
h(c) = 1
h(d) = 0
h(e) = 1
[Figure: bucket 0 holds d; bucket 1 holds a c, with e in an overflow block; bucket 2 holds b; bucket 3 is empty]

EXAMPLE: deletion
Delete: e, f, c
[Figure: buckets 0 to 3 holding keys a to g, one of them with an overflow block; note on the figure: maybe move “g” up]

Rule of thumb:
• Try to keep space utilization between 50% and 80%
  Utilization = (# keys used) / (total # keys that fit)
• If < 50%, wasting space
• If > 80%, overflows significant
  (depends on how good the hash function is & on # keys/bucket)

How do we cope with growth?
• Overflows and reorganizations
• Dynamic hashing
  • Extensible
  • Linear

Extensible hashing: two ideas
(a) Use i of the b bits output by the hash function
[Figure: h(K) produces b bits, e.g. 00110101; i of them are used; i grows over time]
(b) Use directory
[Figure: h(K)[0-i] selects a directory entry, which points to a bucket]

Example: h(k) is 4 bits; 2 keys/bucket
[Figure: i=1; directory entry 0 points to bucket 0001 (1 bit used), entry 1 to bucket 1001 1100 (1 bit used). Insert 1010: the second bucket splits into 1001 1010 and 1100 (2 bits used each), and a new directory with i=2 has entries 00 01 10 11]

Example continued
[Figure: i=2; directory 00 01 10 11. Insert 0111 and 0000: bucket 0001 first takes 0111, then splits into 0000 0001 and 0111 (2 bits used each); the other buckets are 1001 1010 and 1100]

Example continued
[Figure: i=2; buckets 0000 0001, 0111, 1001 1010, 1100. Insert 1001: bucket 1001 1010 splits into 1001 1001 and 1010 (3 bits used each), and the directory doubles to i=3 with entries 000 001 010 011 100 101 110 111]

Extensible hashing: deletion
• No merging of blocks
• Merge blocks and cut directory if possible (reverse insert procedure)

Deletion example:
• Run through the insert example in reverse!

Summary: Extensible hashing
+ Can handle growing files
  – with less wasted space
  – with no full reorganizations
- Indirection (not bad if directory in memory)
- Directory doubles in size (now it fits, now it does not)

Linear hashing
• Another dynamic hashing scheme
Two ideas:
(a) Use i low order bits of hash
[Figure: b-bit hash value 01110101; the i low-order bits are used; i grows]
(b) File grows linearly

Example: b=4 bits, i=2, 2 keys/bucket
• insert 0101
• can have overflow chains!
[Figure: bucket 00 holds 0000 1010; key 0101 is being inserted]
[Figure, continued: bucket 01 holds 0101 1111; buckets 10 and 11 are future growth buckets; m = 01 (max used block)]
Rule: If h(k)[i] ≤ m, then look at bucket h(k)[i]; else, look at bucket h(k)[i] - 2^(i-1)

Example: b=4 bits, i=2, 2 keys/bucket
• insert 0101
[Figure: bucket 00 holds 0000 1010; bucket 01 holds 0101 1111 with 0101 on an overflow chain. As m advances from 01 to 10 and then 11, 1010 moves to bucket 10 and 1111 moves to bucket 11]

Example Continued: How to grow beyond this?
[Figure: i goes from 2 to 3; buckets 000: 0000, 001: 0101 0101, 010: 1010, 011: 1111; new buckets 100, 101, ... are added, with 0101 0101 moving to bucket 101; m goes from 11 (max used block) to 100, 101, ...]

When do we expand file?
• Keep track of: (# used slots) / (total # of slots) = U
• If U > threshold, then increase m (and maybe i)

Summary: Linear Hashing
+ Can handle growing files
  – with less wasted space
  – with no full reorganizations
+ No indirection like extensible hashing
- Can still have overflow chains

Example: BAD CASE
[Figure: the buckets near the start are very empty while a later bucket is very full; m would need to move all the way there, which would waste space]

Summary: Hashing
- How it works
- Dynamic hashing
  - Extensible
  - Linear

Next:
• Indexing vs Hashing
• Index definition in SQL
• Multiple key access

Indexing vs Hashing
• Hashing good for probes given key
  e.g., SELECT … FROM R WHERE R.A = 5
• INDEXING (including B Trees) good for range searches:
  e.g., SELECT … FROM R WHERE R.A > 5

Index definition in SQL
• Create index name on rel (attr)
• Create unique index name on rel (attr)
  (defines candidate key)
• Drop INDEX name

Note
CANNOT SPECIFY TYPE OF INDEX (e.g. B-tree, Hashing, …) OR PARAMETERS (e.g. Load Factor, Size of Hash, ...)
... at least in SQL...

Note
ATTRIBUTE LIST -> MULTIKEY INDEX (next)
e.g., CREATE INDEX foo ON R(A,B,C)

Multi-key Index
Motivation: Find records where DEPT = “Toy” AND SAL > 50k

Strategy I:
• Use one index, say Dept.
• Get all Dept = “Toy” records and check their salary
[Figure: a single index I1]

Strategy II:
• Use 2 indexes; manipulate pointers
[Figure: pointers from the Toy entry of one index and from the Sal > 50k entries of the other]

Strategy III:
• Multiple Key Index
One idea:
[Figure: an index I1 whose entries lead to further indexes I2 and I3]

Example
[Figure: Dept index with entries Art, Sales, Toy; entries lead to salary indexes with values 10k 15k 17k 21k and 12k 15k 15k 19k, which lead to the records]
Example record: Name=Joe, DEPT=Sales, SAL=15k

For which queries is this index good?
• Find RECs Dept = “Sales” AND SAL = 20k
• Find RECs Dept = “Sales” AND SAL > 20k
• Find RECs Dept = “Sales”
• Find RECs SAL = 20k

Interesting application:
• Geographic Data
[Figure: points in the x-y plane]
DATA:
<X1,Y1, Attributes>
<X2,Y2, Attributes>
...

Queries:
• What city is at <Xi,Yi>?
• What is within 5 miles from <Xi,Yi>?
• Which is the closest point to <Xi,Yi>?

Example
[Figure: points a through o in the plane, partitioned by split values on the two coordinates (10, 15, 20, 25, 30, 35, 40, ...), with a matching tree whose leaves hold the points]
• Search points near f
• Search points near b

Queries
• Find points with Yi > 20
• Find points with Xi < 5
• Find points “close” to i = <12,38>
• Find points “close” to b = <7,24>

• Many types of geographic index structures have been suggested
  • Quad Trees
  • R Trees

Two more types of multi key indexes
• Grid
• Partitioned hash

Grid Index
[Figure: a grid with Key 1 values V1 V2 ... Vn along one axis and Key 2 values X1 X2 ... Xn along the other; one cell is labeled "To records with key1=V3, key2=X2"]

CLAIM
• Can quickly find records with
  – key 1 = Vi AND key 2 = Xj
  – key 1 = Vi
  – key 2 = Xj
• And also ranges….
  – E.g., key 1 ≥ Vi AND key 2 < Xj

But there is a catch with Grid Indexes!
[Figure: the grid laid out like an array: V1 with X1 X2 X3 X4, then V2 with X1 X2 X3 X4, then V3 with X1 X2 X3 X4]
• How is Grid Index stored on disk?
Problem:
• Need regularity so we can compute the position of the <Vi,Xj> entry

Solution: Use Indirection
[Figure: grid with rows V1 V2 V3 V4 and columns X1 X2 X3 whose cells point to buckets]
*Grid only contains pointers to buckets

With indirection:
• Grid can be regular without wasting space
• We do have the price of indirection

Can also index grid on value ranges
[Figure: grid with a Salary scale of ranges 0-20K (1), 20K-50K (2), 50K-∞ (3) and a linear scale Toy (1), Sales (2), Personnel (3)]

Grid files
+ Good for multiple-key search
- Space, management overhead (nothing is free)
- Need partitioning ranges that evenly split keys

Partitioned hash function
Idea:
[Figure: Key1 goes through h1 to give the bits 010110, Key2 goes through h2 to give the bits 1110010, and the two bit strings are placed side by side]

EX:
h1(toy) = 0
h1(sales) = 1
h1(art) = 1
. .
h2(10k) = 01
h2(20k) = 11
h2(30k) = 01
h2(40k) = 00
. .
Insert <Fred,toy,10k>, <Joe,sales,10k>, <Sally,art,30k>
[Figure: buckets 000 001 010 011 100 101 110 111; <Fred> goes to bucket 001, <Joe> and <Sally> go to bucket 101]

With the same h1 and h2:
[Figure: buckets 000 to 111 holding <Fred>, <Joe><Jan>, <Mary>, <Sally>, <Tom><Bill>, <Andy>]
• Find Emp. with Dept. = Sales AND Sal = 40k
  h1(sales) = 1 and h2(40k) = 00, so look in bucket 100
• Find Emp. with Sal = 30k
  h2(30k) = 01, so look in buckets 001 and 101
• Find Emp. with Dept. = Sales
  h1(sales) = 1, so look in buckets 100, 101, 110, 111

Summary
Post hashing discussion:
- Indexing vs. Hashing
- SQL Index Definition
- Multiple Key Access
  - Multi Key Index
    Variations: Grid, Geo Data
  - Partitioned Hash

Reading Chapter 5
• Skim the following sections:
  – 5.3.6, 5.3.7, 5.3.8
  – 5.4.2, 5.4.3, 5.4.4
• Read the rest

The BIG picture….
• Chapters 2 & 3: Storage, records, blocks...
• Chapters 4 & 5: Access Mechanisms
  - Indexes
  - B trees
  - Hashing
  - Multi key
• Chapters 6 & 7: Query Processing (NEXT)
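Returning to the partitioned hash function example above, the bucket address and the set of buckets to probe for a partial-match query can be sketched in Python. This is a minimal sketch under assumptions: the hash values are copied from the example as lookup tables instead of being computed, h1 is taken to contribute 1 bit and h2 to contribute 2 bits, and `bucket_of` and `candidate_buckets` are hypothetical names.

```python
from itertools import product

# Hash values copied from the example; a real system would compute them.
H1 = {"toy": "0", "sales": "1", "art": "1"}                # 1 bit from Dept
H2 = {"10k": "01", "20k": "11", "30k": "01", "40k": "00"}  # 2 bits from Sal


def bucket_of(dept, sal):
    """Bucket address: the h1 bits followed by the h2 bits."""
    return H1[dept] + H2[sal]


def candidate_buckets(dept=None, sal=None):
    """Buckets that may hold matches when one or both keys are given."""
    first = [H1[dept]] if dept is not None else ["0", "1"]
    second = [H2[sal]] if sal is not None else ["00", "01", "10", "11"]
    return [a + b for a, b in product(first, second)]


# Insert the three records from the example.
table = {}
for name, dept, sal in [("Fred", "toy", "10k"),
                        ("Joe", "sales", "10k"),
                        ("Sally", "art", "30k")]:
    table.setdefault(bucket_of(dept, sal), []).append(name)
```

A query that fixes both keys probes one bucket, a query on Sal alone probes two, and a query on Dept alone probes four, which is why the number of bits given to each key is a design decision.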