Learning #2

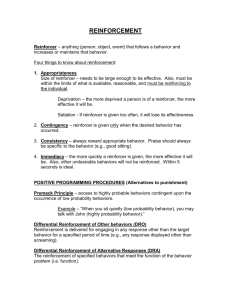

Classical conditioning forms associations between stimuli: a conditioned stimulus (CS) and the unconditioned stimulus (US) that it signals. It involves respondent behavior, actions that occur as automatic responses to a stimulus, such as salivating in response to food and later in response to a tone.

In operant conditioning, organisms associate their own actions with consequences. Behaviors followed by reinforcers increase; those followed by punishers decrease. Behavior that operates on the environment to produce rewarding or punishing stimuli is called operant behavior.

Law of effect: the law of effect was developed by Edward Thorndike (1874–1949). It states that rewarded behavior is likely to recur: behaviors followed by favorable consequences become more likely, and behaviors followed by unfavorable consequences become less likely. Thorndike’s law of effect is one of the few laws of psychology.

Thorndike developed his law through research with cats. He placed the cats in puzzle boxes that required them to perform various actions to get out. The cats were enticed with fish, which they received as a reward once they had made it out of the box. Thorndike found that the cats’ performance improved with successive trials: they escaped more quickly each time. This illustrates his law of effect.

Using Thorndike’s work as a starting point, B. F. Skinner developed a behavioral technology that reveals principles of behavior control. Skinner was able to train pigeons to perform many behaviors that were not pigeon-like. For his studies, Skinner designed an operant chamber, commonly known as the Skinner box. The box has a key or bar that the animal must peck or press; doing so releases a reward of food or water.

Shaping behavior is essential to operant conditioning because humans and animals rarely perform the desired behavior the first time around; they need a hint as to what is required. How does shaping occur?
Shaping is a process in which reinforcers are used to guide a subject’s actions toward a desired behavior. It works through step-by-step reinforcement of appropriate behavior. If a researcher wants a rat to press a bar:

1. Reward the rat when it approaches the bar.
2. Once approaching is its normal behavior, reward it only when it moves closer to the bar.
3. Once that is its normal behavior, reward it only when it touches the bar.
4. Finally, reward it only when it presses the bar.

This method is called reinforcing successive approximations: reward behaviors that come ever closer to the goal, and do not reward any other actions. Through this process, animals can be taught complex behaviors.

Shaping can also help us understand what nonverbal subjects perceive. In 1964, Herrnstein and Loveland trained pigeons to respond to discriminative stimuli. They reinforced the pigeons for pecking at a picture of a human face but not at other pictures; the face served as the discriminative stimulus. After being trained to discriminate among categories such as flowers, humans, and cars, the pigeons could usually identify a new picture and place it in the correct category (Bhatt, 1988; Wasserman, 1993).

A reinforcer is any event that strengthens the behavior it follows. Reinforcers can be tangible, verbal, or an activity.

Positive reinforcement: increasing behaviors by presenting positive stimuli. A positive reinforcer is any stimulus that, when presented after a response, strengthens the response.

Negative reinforcement: increasing behaviors by stopping or reducing negative stimuli (such as shock).
A negative reinforcer is any stimulus that, when removed after a response, strengthens the response.

| | Positive reinforcement | Negative reinforcement |
|---|---|---|
| Procedure | Add a desirable stimulus | Remove an aversive stimulus |
| Examples | Getting a hug; getting a paycheck; receiving a food reward; praise or attention | Buckling up to stop the beeping; hitting the snooze button to stop the alarm; taking drugs to stop withdrawal pangs; taking an aspirin to stop pain; escaping shock |
| Note | Reinforcers vary with circumstances: what appeals to one person may not appeal to someone else, or may not appeal to you at that time | Negative reinforcers are not punishment |

Primary reinforcer: an innately reinforcing stimulus, such as one that satisfies a biological need. Examples include getting food when hungry or receiving pain relief when you have a headache.

Conditioned reinforcer (also known as a secondary reinforcer): a stimulus that gains its reinforcing power through its association with a primary reinforcer. For example, Skinner’s rats learned to associate a light with food: if they turned on the light, they received food, so they would work to turn on the light. The light had become a conditioned reinforcer.

Briers et al. (2006) tested the idea that, because food and money are linked, people who are hungry would also be more “money hungry.” Food-deprived participants were less likely to donate to a charity, and participants were less likely to share money with fellow participants when food aromas were present.

Immediate reinforcers: reinforcers that occur immediately after the desired behavior. In animal research, immediate reinforcers are critical. Humans, however, also respond to delayed reinforcers; the paycheck you receive after a week or more of work is an example of delayed reinforcement. People who are willing to accept delayed gratification have been shown to become socially competent, high-achieving adults.
Continuous reinforcement: reinforcing the desired response every time it occurs.

Partial (intermittent) reinforcement: reinforcing a response only part of the time. It results in slower acquisition of a response but much greater resistance to extinction than continuous reinforcement. Example: slot machines.

Partial reinforcement schedules:

- Fixed-ratio schedule: reinforces a response only after a set number of responses. Example: “buy 10, get one free” deals.
- Variable-ratio schedule: reinforces a response after an unpredictable number of responses. Example: slot machines.
- Fixed-interval schedule: reinforces the first response after a set amount of time has elapsed.
- Variable-interval schedule: reinforces the first response after varying amounts of time have elapsed.

Cognitive processes do affect operant conditioning. For example, rats and other animals on a fixed-interval schedule respond more frequently as the time for a reinforcer draws near. The rats behave with expectation, acting as if they expect that repeating the response will produce the reward.

Latent learning: rats were allowed to explore a maze for 10 days without receiving any reward. Once a researcher placed food in the goal box at the end of the maze, they completed it as quickly as rats that had been rewarded for running the maze all along. The rats seem to have developed a cognitive map, but their prior knowledge was not revealed until a reward was offered. This is latent learning: learning that is not evident until there is an incentive to demonstrate it.

Insight: a sudden realization of a problem’s solution, where before it seemed as if there was no answer. Consider this problem: a baby robin falls into a narrow opening 30 inches deep in a cement-block wall. How can the robin be rescued? A 10-year-old boy solved the problem when several construction workers could not. His solution was to add sand a little at a time, giving the bird time to adjust to the sand.
As the sand rises, so will the bird.

Intrinsic motivation: a desire to perform a behavior solely for its own sake.

Extrinsic motivation: a desire to perform a behavior to receive a promised reward or avoid a threatened punishment.

Excessive rewards can undermine intrinsic motivation. People may conclude that if they must be rewarded for a behavior, the behavior has no value of its own.

Motivation studies:

- Deci and Ryan: if you want a behavior to last long term, focus on intrinsic motivation.
- Patall: choice encourages intrinsic motivation.
- Boggiano: rewards are effective when they are used not to control but to signal a job well done.
- Eisenberger and Rhoades; Henderlong and Lepper: rewards can boost creativity, raise performance, and increase confidence and enjoyment.

An animal’s biological predispositions constrain its ability to be trained through operant conditioning. Hamsters could be operantly conditioned (with a food reinforcer) to rear up or to dig, but not to wash their faces. Standing up and digging are part of hamsters’ normal food-seeking behavior, but face washing is not (Shettleworth, 1973).

Instinctive drift: the tendency of trained animals to revert to their biologically predisposed patterns. The Brelands, students of Skinner, started a business training animals for entertainment. They worked with a variety of animals, beginning with the assumption that almost any response could be trained through operant conditioning. They soon discovered that biological predispositions interfered: pigs trained to pick up coins and carry them soon began to drop them and push them with their snouts. This is an example of instinctive drift.

Education: Skinner dreamed of instruction that gave immediate feedback and was paced to fit each individual student. Although we are not yet taught by computers, the computer has changed education through online testing, interactive learning software, and web-based learning.

Sports: using reinforcement techniques to improve athletic performance.
For example, golf students can be taught to putt by beginning with very short putts and rewarding success, then gradually lengthening the putts (Simek & O’Brien, 1981, 1988).

Work: reinforcing appropriate work behaviors with immediate reinforcement. The reinforcement can be financial or praise; both can be effective. Reward specific behaviors, not a vague concept such as merit (Baron, 1988; Peters & Waterman, 1982).

Home: parents can encourage appropriate behavior in children by reinforcing correct behaviors rather than punishing incorrect ones (Wierson & Forehand, 1994).

Self-improvement: reinforce the desired behaviors and extinguish the undesired ones. Psychologists suggest four steps:

1. State your goal in measurable terms.
2. Monitor how often you engage in the desired behavior.
3. Reinforce the desired behavior.
4. Reduce the rewards gradually.

Observational learning: learning by observing others, also called social learning. We learn all types of behaviors by imitating others. The people we imitate are models, and our imitation of them is called modeling.

Mirror neurons play a role in observational learning. These neurons fire not only when we perform an action but also when we observe someone else performing the same action. Mirror neurons may underlie our strongly social nature.

Young humans’ behaviors are shaped by imitation, and babies imitate certain actions at a very young age:

- By 8 to 16 months, infants imitate novel gestures (Jones, 2007).
- By 12 months, they look where an adult is looking (Brooks & Meltzoff, 2005).
- By 14 months, they imitate acts seen on TV (Meltzoff & Moore, 1989, 1997).

In 1961, Bandura set up an experiment on imitative behavior, or observational learning. A preschool-age child works on a drawing in a room where an adult is playing with toys. The adult suddenly gets up and, for about 10 minutes, beats, throws, and even kicks a large Bobo doll while yelling.
The child is then taken to another room full of appealing toys, but the experimenter says the child cannot play with them. Next, the child is taken to a third room containing a few toys, including a Bobo doll, and is left alone. Children who had watched the adult model were much more likely to treat the Bobo doll in a similar fashion than children who had not been exposed to the model. The children did not merely act like the adult; they often mimicked the adult’s exact actions and words.

Why would the children imitate the behavior? Why do we imitate certain models? Bandura believed the answer lies in reinforcement and punishment: by watching a model, we anticipate a behavior’s consequences. We imitate people we perceive as similar to us or as successful and admirable.

Prosocial effects: positive models can have prosocial effects. For example, if you want to raise a reader, read to the child, surround the child with books, read with the child, and read in front of the child. Positive role models can encourage positive behaviors. Models are most effective when they are consistent, that is, when their words and actions match. Parents are powerful models.

Antisocial effects: observational learning of negative, antisocial behaviors. Such learning may help explain why the children of abusive parents tend to be more aggressive. Media may also have an impact. For example, homicide rates rose during the same period in which TV was spreading widely across the country, although this correlation alone does not prove that TV caused the increase. The violence viewing effect (Donnerstein, 1998) indicates that the amount of violence watched, the attractiveness of the perpetrator, and the lack of negative consequences for the violence are linked to aggressive behavior. The violence viewing effect stems from (1) imitation and (2) desensitization over time.