

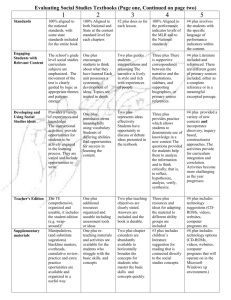

Conceptual Issues and the Development and Use of Selected Response Assessments

The following is an excerpt from a paper delivered at the Annual Meeting of the National Council on Measurement in Education (1998), authored by Jeffrey Oescher, Juanita Haydel, and Rick Stiggins.

This section describes the development of objective tests in the context of the three conceptual issues described earlier in the paper. Some of this section deals with relatively new ideas like changes in terminology, the match between the achievement target and assessment strategy, and student-centered classroom assessment. Most of the information, however, is a reorganization of the basic ideas surrounding objective test development traditionally discussed in measurement and assessment texts.

Selected Response Assessments

A number of years ago I wrote an item-writing guide intended to help teachers write objective test items. Stiggins (1997) has taken issue with the use of this term and offers an alternative: selected response assessments. The first part of the term is self-explanatory. Selected response relates to the type of item format that asks students to choose a single best answer or a very limited set of acceptable answers. The response is evaluated as right or wrong, correct or incorrect, acceptable or unacceptable, etc. Four formats fall into this category: multiple choice, true/false, matching, and fill-in-the-blank.

The second part of the term, assessment, relates to the use of these item formats across a multitude of situations other than the traditional test. Classroom teachers use selected response items for homework assignments, classroom exercises, quizzes, and other formative and summative assessment activities. In addition, teachers commonly combine selected response formats with essay and extended response formats.

Matching Selected Response Assessments to Achievement Targets

The idea of matching the type of assessment to the desired achievement target is an explicit example of the conceptual development in the field of classroom assessment. Few of us at this time remember the significant step toward ensuring quality classroom assessments represented by this idea.

There are two purposes for which selected response items are appropriate. The first, as you might assume, is for assessing knowledge or mastery of specific content. This encompasses Bloom’s taxonomic levels of recall and comprehension as well as Stiggins’ level of knowing. While it is possible to address higher level cognitive targets, it is difficult. Often other assessment methods such as essays and performance assessments provide a better fit for such targets.

The second purpose for which this format is most appropriate is to assess affective characteristics such as attitudes, self-efficacy, self-esteem, preferences, values, etc. The typical response formats like Likert, Thurstone, or semantic differential scales are non-dichotomous in nature. That is, respondents choose their response from among several options such as strongly disagree, disagree, neutral, agree, or strongly agree. There is no correct or incorrect answer, only that which best describes the respondent’s perceptions or feelings.

Developing Selected Response Assessments

Stiggins (1997) suggests several contextual factors that should be considered once a match between the achievement target and the assessment strategy has been established. They are listed below.

- Students have an appropriate level of reading to understand the exercise.
- Students have time to respond to all items.
- High-quality assessments have been developed and are available from the text, teacher's manual, other teachers, etc.
- Computer support is available to assist with item development, storage and retrieval, printing, scoring, and analysis.
- The efficiency of the selected response format is important.

If, after considering such factors, a teacher decides to develop or use a selected response assessment, the stages in its development or selection are straightforward. They have been discussed extensively in the classroom measurement and assessment literature, so I will mention them only briefly.

Developing a Test Plan. A test plan systematically identifies an appropriate sample of achievement. This is accomplished through either the use of a table of specifications or the creation of instructional objectives. The important issue to emphasize is not the format of the plan, but its existence. Planning is critically important to the development of a test. Effective planning saves time and energy, and it contributes to a clear understanding of the intended achievement targets.

A table of specifications graphically relates the content of the test to the level of knowledge at which that content is to be tested. Table 1 is an example of such a table from an introductory graduate research course.

Table 1
Table of Specifications for Unit 1

| Content | Recall | Comp | Apply | Analyze | Synthesize | Eval | Total |
|---|---|---|---|---|---|---|---|
| Sources of knowledge | 2 | 1 | | 1 | | 1 | 5 |
| Scientific evidence-based knowledge | | | | | | | 6 |
| Research designs | | | | | | | 20 |
| Types of research | | | | | | | 2 |
| Research reports | | | | | | | 4 |
| Total | | | | | | | 37 |

(Only the values that could be recovered are shown; the remaining cell values are not legible in the source.)

The first column represents the content material covered prior to the first examination; in this case it is somewhat consistent with the concepts covered over a four-week period of time. The first row represents the levels of knowledge at which the content is to be mastered. While the use of any taxonomy of knowledge will facilitate test development, I have found that in Stiggins (2008) to be quite helpful in terms of differentiating items at various levels of the taxonomy. The numbers within the cells of the matrix represent the number of items written to a specific content and level of knowledge.
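
As a rough illustration of how such a table can be held as data, the following Python sketch stores a test plan as a nested mapping and sums its rows and columns. Only the Sources of Knowledge row is taken from the text; the names `blueprint`, `row_totals`, and `level_totals` are hypothetical and chosen for this example, and the other content rows would be added in the same way.

```python
from collections import Counter

# Levels of knowledge used as the columns of the table of specifications.
LEVELS = ["recall", "comprehension", "application",
          "analysis", "synthesis", "evaluation"]

# Hypothetical structure: content area -> {level: number of items}.
# Only the Sources of knowledge row is spelled out in the text.
blueprint = {
    "Sources of knowledge": {
        "recall": 2,
        "comprehension": 1,
        "analysis": 1,
        "evaluation": 1,
    },
}


def row_totals(plan):
    """Number of items planned for each content area."""
    return {content: sum(cells.values()) for content, cells in plan.items()}


def level_totals(plan):
    """Number of items planned at each level of knowledge."""
    totals = Counter()
    for cells in plan.values():
        totals.update(cells)  # adds the counts level by level
    return {level: totals.get(level, 0) for level in LEVELS}


print(row_totals(blueprint))    # {'Sources of knowledge': 5}
print(level_totals(blueprint))
```

Keeping the plan in this form makes it easy to check that the number of items per content area matches the intended emphasis before any items are written.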
The differences in the number of items usually reflect differences in the amount of instructional time spent on each topic, the amount of material in each category, or the relative importance of material in each category. In Table 1, five (5) items address the Sources of Knowledge. Two (2) of these are written at the recall level. One (1) item reflects the comprehension level, one (1) the analysis level, and one (1) the evaluation level. Overall, thirty-seven (37) items will be on the test. Obviously there is greater emphasis on the Research Designs (20 items) than Sources of Knowledge (5), Scientific Evidence-Based Inquiry (6), or Types of Research or Research Reports (4 items). On the other hand, more emphasis is placed on recall and comprehension level items (17 and 12) than those at the other levels.

An alternative to the table of specifications is the development of a list of instructional objectives that act very much like the cells of a table of specifications. Each objective specifies the knowledge to be brought to bear and the action to be taken. An example of an objective taken from the same introductory research test is as follows.

Assess the appropriateness of relying on each source of knowledge for specific decisions.

In this objective, the student is being asked to evaluate the appropriateness of relying on specific sources in specific situations. Table 2 provides additional examples of the objectives for the same unit in the introductory research class discussed above. (The links within each objective, shown here in brackets, refer to an outline discussing the content of that objective.)

Table 2
Learning Objectives for Unit 1

| Number | Objective |
|---|---|
| 1 | Identify four (4) sources used to make decisions in education [1.1] and the limitations associated with each source [1.2]. Assess the appropriateness of relying on each source for specific decisions [1.3]. |
| 2 | Define the term research [2.1]. Defend research as a valuable source of information and knowledge in education [2.2]. |
| 3 | Discuss the need for scientific evidence-based inquiry [3.1] in education. Identify six characteristics associated with scientific evidence-based inquiry [3.2]. |
| 4 | Define theory [4.1] and explain its importance in scientific evidence-based inquiry [4.2]. |
| 5 | Identify the six (6) steps typically used to conduct research [5.1]. Discuss the way in which each step contributes to the credibility of the results [5.2]. Explain how research results can become scientific evidence-based information [5.3]. |
| 6 | Compare quantitative and qualitative approaches to research in terms of their goals [6.1]; the research designs used [6.2]; the samples from which information is collected [6.3]; the actual data, data collection techniques, and data analyses [6.4]; the researcher’s role [6.5]; context [6.6]; and common terminology [6.7]. |
| 7 | Distinguish between non-experimental and experimental quantitative research designs [7]. |
| 8 | Describe the characteristics of the following quantitative research designs: descriptive [8.1], comparative [8.2], correlational [8.3], causal comparative [8.4], true-experimental [8.5], quasi-experimental [8.6], and single subject [8.7]. |
| 9 | Identify the characteristics of case studies [9]. |
| 10 | Describe the goals of the following qualitative research designs: phenomenology [10.1], ethnography [10.2], and grounded theory [10.3]. |
| 11 | Identify the characteristics of analytical research [11.1]. Identify two types of analytical designs [11.2]. |
| 12 | Identify the characteristics of mixed-methods design [12]. |
| 13 | Understand the differences between basic, applied, action, and evaluation research [13]. |
| 14 | Identify the components of an educational research report [14.1]. Provide a brief description of the function of each component [14.2]. Identify these components in a research report [14.3]. |

Selecting Material to Test. The second task in the development of a test is to select the material to be tested.
This entails sampling specific items to address a particular cell of the table of specifications from the host of items that might measure that cell. For example, we might study five ways of "knowing" in an introductory educational research course (i.e., experience; custom, tradition, authority; inductive reasoning; deductive reasoning; and scientific inquiry). The cell from the table of specifications in Table 1 requires the construction of three (3) items. The question becomes, “Which of these knowledge sources are to be selected?” We would like to offer two factors to consider in answering it.

The first represents the recognition of the subjective nature of selecting a sample of items from a larger body of content and thinking called a domain. While most teachers do not have sophisticated test banks from which they can systematically sample items, they do have a very real sense of what is important in terms of content and cognition. We would like to recognize that expertise in the context of a clearly defined and understood achievement target. For example, in the table of specifications depicted in Table 1, the Sources of Knowledge questions at the analysis (1) and evaluation (1) levels are likely to involve scientific inquiry, as that is the basic source of knowledge being studied in the semester. The discussion of all other sources is relevant only to their limitations in comparison to those of scientific inquiry. While a decision to focus these questions on this specific knowledge is ultimately subjective in nature, such subjectivity is not problematic given the knowledge of the context within which this content is being studied.

The second method for sampling items involves identifying important learning objectives related to the content. In practice, we identify most, if not all, of the important learning objectives prior to teaching or assessing.
Carefully thinking about the emphasis of each objective will determine the specific objectives for which we can develop items.

Writing Items. There is a wealth of information available on writing selected response items (Stiggins, 2008; Nitko and Brookhart, 2007; Nitko, 2001; Gronlund, 1998; Gronlund and Brookhart, 2009; Stiggins, Arter, Chappuis, and Chappuis, 2007). Most of this is in the form of suggestions for each specific item format. I can offer the following quality checklist that summarizes many of these suggestions.

General Guidelines for All Formats
- Items are clearly written and focused.
- A question is posed.
- The lowest possible reading level is used.
- Irrelevant clues are eliminated.
- Items have been reviewed by a colleague.
- The scoring key has been checked.

Guidelines for Multiple Choice Items
- The item stem poses a direct question.
- Any repetition is eliminated from the response.
- Only one best or correct answer is provided.
- The response options are brief and parallel.
- The number of response options offered fits the item context.

Guidelines for True/False Items
- The statement is entirely true or false.

Guidelines for Matching Exercises
- Clear directions on how to match are discussed.
- The list of items to be matched is brief.
- Any lists consist of homogeneous entries.
- The response options are brief and parallel.
- Extra response options are offered.

Guidelines for Fill-In Items
- A direct question is posed.
- One blank is needed to respond.
- The length of the blank is not a clue to the answer.

Selecting from Among the Four Selected Response Formats. The last aspect of test development we would like to address is the strengths of each of the four formats. This knowledge can be extremely helpful when trying to decide which format to use.

The multiple-choice format has four distinct advantages. First, most multiple-choice assessments can be scored electronically using optical scanning and test scoring technologies.
This in and of itself is an efficient and effective way to score tests. It has an added advantage in that most scoring programs provide item analysis data, which can provide insights into common misconceptions among students. A third advantage relates to situations where a teacher can identify the single correct or best answer and identify a number of viable incorrect responses. A simple computational statistics problem, such as computing the standard deviation, exemplifies this advantage. We know the skills of the student who computes the answer correctly. We might also gain some insight into the skills of students choosing other responses if those responses systematically reflect aspects of the computation. For example, two alternatives might reflect the differences between answers incorrectly using (Σx²) and (Σx)². By examining a student's choice of one of these alternatives we can understand what mistakes were made. Finally, multiple-choice tests have the advantage of being able to test a broad range of targets with a minimal amount of testing time.

Matching and true/false items also have distinct advantages. In the case of the matching item, the major advantage is its efficiency. One way to think of a matching exercise is as a series of multiple-choice items presented at once. The true/false item has the advantage of requiring very short response times. This is extremely valuable if there is a great deal of information to cover and the teacher needs to ask many items. In addition, both formats share two of the advantages of multiple-choice items: they can be machine scored and analyzed, and they can provide insight into common misconceptions.

While each of these three formats has unique advantages, they share a common fault: all are susceptible to guessing. Obviously the true/false item is most problematic in this regard. However, when guessing is a problem, fill-in-the-blank items have a distinct advantage over the other formats.
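
The point about guessing can be made concrete with a little arithmetic. The sketch below is only an illustration, assuming a student who guesses blindly and response options that are equally attractive; the function names are hypothetical. It shows why the true/false item is the most exposed: with two options a blind guess succeeds half the time, while a four-option multiple-choice item cuts that to one in four.

```python
def chance_of_guessing(num_options):
    """Probability that a blind guess among equally likely options is correct."""
    if num_options < 2:
        raise ValueError("a selected response item needs at least two options")
    return 1 / num_options


def expected_score_from_guessing(num_items, num_options):
    """Expected number of items answered correctly by blind guessing alone."""
    return num_items * chance_of_guessing(num_options)


# A 20-item true/false quiz versus a 20-item four-option multiple-choice quiz.
print(expected_score_from_guessing(20, 2))  # 10.0
print(expected_score_from_guessing(20, 4))  # 5.0
```

A fill-in-the-blank item offers no options to choose from, so it falls outside this simple model; that is the sense in which it has the advantage when guessing is a concern.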

Student Involvement in the Assessment Process

Earlier in this paper I described Airasian's assessment model as one in which planning, instruction, and assessment were related in a multi-directional, interactive manner (Airasian, 1994). That is, assessment feeds planning and instruction as well as vice versa. I suggested this represented the nature of assessment in the context of current cognitive and instructional theory. Of particular interest in this regard is the belief that assessments can be woven into the learning environment to such an extent that they involve teachers as well as students. This involvement has been termed “student-centered assessment.” Some examples of student-centered classroom assessment follow.

- Develop a set of objectives for a test before ever teaching the unit, being sure to identify not only the content but also the cognitive process required to deal with that content (e.g., a table of test specifications for a final unit test before the unit is ever taught). Share the table with students. Explain your expectations. A clear vision of the valued outcomes will result, and instruction can be tailored to promote student success.
- Involve students in the process of devising a test plan, or involve them from time to time in checking back to the blueprint to see together, as partners, whether you need to make adjustments in the test plan and/or chart your progress.
- Once you have the test plan completed, write a few items each day as the unit unfolds. Such items will necessarily reflect instructional priorities.
- Involve students in writing practice test items. Students will have to evaluate the importance of the various elements of content and become proficient in using the kinds of thinking and problem solving valued in the classroom.
- As a variation on that idea, provide unlabeled exercises and have students map them into the cells of the table of specifications.
- Have students use the test blueprint to predict how they are going to do on each part of the test before they take it. Then have them analyze how they did, part by part, after taking it. If the first test is for practice, such an analysis will provide valuable information to help them plan their preparation for the real thing.
- Have students work in teams where each team has responsibility for finding ways to help everyone in class score high in one cell, row, or column of the table of specifications.
- Use lists of unit objectives and tables of test specifications to communicate with other teachers about instructional priorities, so as to arrive at a clearer understanding of how those priorities fit together across instructional levels or school buildings.
- Store test items by content and reasoning category for reuse. If the item record also includes information on how students did on each item, you can revise the item or instruction when these analyses indicate trouble.
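
The last suggestion, storing items by content and reasoning category along with a record of how students performed, can be sketched in a few lines of Python. This is a minimal illustration and not a description of any particular item-banking product; the class names `ItemRecord` and `ItemBank`, the sample item, and the 0.5 review threshold are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ItemRecord:
    """One test item plus a running tally of student performance."""
    text: str
    content: str   # row of the table of specifications
    level: str     # column of the table of specifications
    attempts: int = 0
    correct: int = 0

    def record(self, was_correct):
        """Log one student's response to this item."""
        self.attempts += 1
        if was_correct:
            self.correct += 1

    def proportion_correct(self):
        """Share of students answering correctly, or None if never used."""
        return self.correct / self.attempts if self.attempts else None


class ItemBank:
    """Items filed by (content, level) cell for later reuse."""

    def __init__(self):
        self._cells = defaultdict(list)

    def add(self, item):
        self._cells[(item.content, item.level)].append(item)

    def items_for(self, content, level):
        return list(self._cells.get((content, level), []))

    def needs_review(self, threshold=0.5):
        """Items whose proportion correct falls below the assumed threshold."""
        flagged = []
        for items in self._cells.values():
            for item in items:
                p = item.proportion_correct()
                if p is not None and p < threshold:
                    flagged.append(item)
        return flagged


bank = ItemBank()
item = ItemRecord("Which source of knowledge relies on systematic evidence?",
                  content="Sources of knowledge", level="recall")
bank.add(item)
for outcome in [True, False, False, False]:
    item.record(outcome)

print(item.proportion_correct())                 # 0.25
print([i.text for i in bank.needs_review()])
```

An item flagged this way is a prompt to look at either the wording of the item or the instruction behind it, which is the kind of revision the suggestion describes.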