Units of English spelling-to-sound mapping

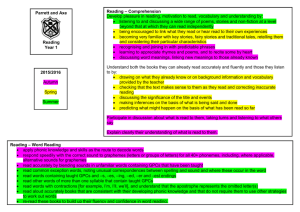

Simplifying reading: Implications for instruction

Janet Vousden, University of Warwick
Michelle Ellefson, Nick Chater, Jonathan Solity

Overview

- English spelling-to-sound inconsistency and reading
- rational analysis of English reading
- applying the simplicity principle
- analysis of some common reading programmes

Spelling-to-sound mappings

- spelling-to-sound mappings in English are not transparent at the sub-lexical level
- some spellings are consistent: “ck”: duck - /dʌk/, mock - /mɒk/, etc., and a simple grapheme-phoneme rule will suffice: ck - /k/
- others are not: “ea”: beach - /biːtʃ/, real - /rɪəl/, great - /ɡreɪt/, or head - /hɛd/
- inconsistency is most obvious at the grapheme level: the “ou” grapheme is credited with having 10 different pronunciations (Gontijo, Gontijo, & Shillcock, 2003), e.g., round, group, should, four, country, tenuous, soul, journal, cough, pompous
- the overall measure of (in)consistency in a language is its orthographic depth (OD): the average number of pronunciations per grapheme
- for English, orthographic depth estimates are:
  - 2.1 - 2.4 for polysyllabic text (Berndt, Reggia, & Mitchum, 1987; Gontijo, Gontijo, & Shillcock, 2003)
  - 1.7 for monosyllabic text (Vousden, 2008)
- compare, e.g., Serbo-Croat, which has an OD of 1
- how do literacy levels in English compare with other languages?
- can differences in consistency account for the difficulty in learning to read English?
- yes - inconsistency clearly increases the difficulty of learning to read compared with more consistent languages (Frith, Wimmer, & Landerl, 1998)

| Language | Real words | Nonwords |
|---|---|---|
| Greek | 98 | 92 |
| Finnish | 98 | 95 |
| German | 98 | 94 |
| Italian | 95 | 89 |
| Spanish | 95 | 89 |
| Swedish | 95 | 88 |
| Dutch | 95 | 82 |
| Icelandic | 94 | 86 |
| Norwegian | 92 | 91 |
| French | 79 | 85 |
| Portuguese | 73 | 77 |
| Danish | 71 | 54 |
| Scottish English | 34 | 29 |

Data: % correct reading scores (adapted from Seymour, Aro, & Erskine, 2003).
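The orthographic depth measure described above (average number of pronunciations per grapheme) can be sketched in a few lines of Python. This is only an illustration: the function name `orthographic_depth` and the toy inventory are assumptions built from the “ck” and “ea” examples in the slides, the count is unweighted by frequency, and the published estimates may have been computed differently.

```python
from collections import defaultdict


def orthographic_depth(mappings):
    """Average number of distinct pronunciations per grapheme.

    `mappings` is an iterable of (grapheme, phoneme) pairs.
    """
    pronunciations = defaultdict(set)
    for grapheme, phoneme in mappings:
        pronunciations[grapheme].add(phoneme)
    total = sum(len(sounds) for sounds in pronunciations.values())
    return total / len(pronunciations)


# Toy inventory (illustrative only): "ck" is consistent, while "ea"
# takes four pronunciations in beach, real, great and head.
toy = [
    ("ck", "k"),
    ("ea", "iː"),
    ("ea", "ɪə"),
    ("ea", "eɪ"),
    ("ea", "ɛ"),
]

depth = orthographic_depth(toy)
print(depth)  # (1 + 4) / 2 = 2.5
```

A fully consistent orthography gives a value of 1 under this definition, which matches the Serbo-Croat comparison in the slides.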
- lag in performance persists through school years

[Figure: non-word reading accuracy (% correct) by age, German vs. English. Data reproduced from Frith, Wimmer, & Landerl, 1998.]

- most often, vowel graphemes are inconsistent, but can use immediate context to resolve ambiguity
- C V C: C V or V C
- ambiguity can be resolved by considering the following consonant (a rime unit) rather than the previous consonant (Treiman et al., 1995)
- ea:
  - pronounced to rhyme with breath when followed by ‘d’: ~80%
  - pronounced to rhyme with meat when followed by ‘p’: 100%
- also, rime units are more consistent than graphemes:
  - 23% graphemes inconsistent
  - 15% rimes inconsistent

Choosing spelling-to-sound mappings

- influences from developmental literature (do rimes or GPCs predict reading ability?)
- variety of approaches from reading schemes (Rhymeworld, THRASS, etc.)
- so many to choose from:
  - ~2000 rime mappings
  - ~300 grapheme mappings
- and many are inconsistent: 15% rimes, 23% graphemes

Rational analysis

- attempt to explain behaviour in terms of adaptation to environment, independent of details of cognitive architecture
- solution adopted by cognitive architecture should reflect structure of environment
- e.g., Anderson & Schooler (1991) showed that the probability that a memory will be needed over time matches the availability of human memories
- same factors that predict memory performance also predict the odds that an item will be needed, i.e. reliable effects of recency and frequency
- factors that affect performance of skilled readers should be reflected in the statistical structure of the language, e.g. frequency and consistency:
  - effects of word frequency in naming and lexical decision
  - effects of rime frequency on word-likeness judgements and pronunciation
  - effects of grapheme frequency in letter search and word priming experiments
- by examining linguistic factors that skilled readers have adapted to, could the input be more optimally structured for learners?
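The earlier point that the following consonant helps resolve the pronunciation of “ea” can be illustrated with a small Python sketch. The word list below is a hand-picked toy set, an assumption chosen so that the shares mirror the ~80% and 100% figures quoted in the slides; it is not corpus data, and `dominant_share` is a hypothetical helper name.

```python
from collections import Counter, defaultdict

# Hand-picked toy list (illustrative assumption, not corpus data):
# (word, consonant following "ea", vowel sound of "ea")
TOY_WORDS = [
    ("head", "d", "ɛ"),
    ("bread", "d", "ɛ"),
    ("dead", "d", "ɛ"),
    ("tread", "d", "ɛ"),
    ("bead", "d", "iː"),
    ("heap", "p", "iː"),
    ("leap", "p", "iː"),
    ("reap", "p", "iː"),
    ("cheap", "p", "iː"),
]


def dominant_share(sounds):
    """Return the most common sound and the share of items that use it."""
    counts = Counter(sounds)
    sound, n = counts.most_common(1)[0]
    return sound, n / len(sounds)


# Without context: how predictable is "ea" across the whole list?
overall = dominant_share([sound for _, _, sound in TOY_WORDS])

# With context: group by the following consonant (the rime unit).
by_coda = defaultdict(list)
for _, coda, sound in TOY_WORDS:
    by_coda[coda].append(sound)
per_context = {coda: dominant_share(sounds) for coda, sounds in by_coda.items()}

print(overall)      # dominant sound covers 5 of 9 words
print(per_context)  # 'd' -> ɛ in 4 of 5 words, 'p' -> iː in 4 of 4
```

In this toy set the best context-free guess for “ea” is right for only 5 of 9 words, while conditioning on the following consonant raises that to 8 of 9.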
Analyses of spelling-to-sound mappings

- rational analysis predicts the most frequent and consistent mappings best predict pronunciation
- interested in the frequency & consistency of mappings at the level of words, rimes, and graphemes, and their ability to predict correct pronunciation
- CELEX database: 7,297 different monosyllabic words, 10,924,491 words in total

Words

[Figure: word frequency (in 100,000's) by rank order of frequency; proportion of text read by number of words.]

Onsets and rimes

- exclude 100 most frequent words: 7,197 different words, total of 2,263,264 words
- create table of onset and rime mapping frequencies, remove all but most frequent of inconsistent mappings

| Rime | Frequency |
|---|---|
| oul - /əʊl/ | 731 |
| oul - /aʊl/ | 175 |
| oul - /uːl/ | 12 |
| ove - /ʌv/ | 4779 |
| ove - /uːv/ | 1852 |
| ove - /əʊv/ | 838 |

[Figure: onset frequency and rime frequency (in 1000's) by rank order of frequency.]

[Figure: proportion of text read (%) by number of rimes, for 0, 10, 20, 30, 40, and 80 onsets.]

GPCs

- exclude 100 most frequent words: 7,197 different words, total of 2,263,264 words
- create table of GPC mapping frequencies, remove all but most frequent of inconsistent mappings

| GPC | Frequency |
|---|---|
| g - /g/ | 133930 |
| g - /dʒ/ | 33342 |
| g - /ʒ/ | 98 |
| i - /ɪ/ | 153606 |
| i - /aɪ/ | 46628 |
| i - /iː/ | 455 |

[Figure: GPC frequency (in 100,000's) by rank order of frequency; percentage of text read by number of GPCs.]

[Figure: percentage of text read by number of mappings, grapheme-phoneme vs. onset/rime.]

Summary

- some words are much more frequent than others, therefore sight vocabulary is very effective for a small number of words, up to ~100
- sub-lexical units also have a skewed frequency distribution, and learning the most frequent mappings predicts high potential outcome
- high initial gains with GPCs, greater overall gain with rimes in the long run

What is the optimal size unit to learn?

- potential benefits for reading outcome are larger for onset/rimes, but is this outweighed by the cost of remembering many more mappings?
- can we measure the potential benefit from, and cost of, remembering mappings for:
  - GPCs
  - onset/rimes
  - a combination of both?

The Simplicity Principle

- reading, like much high-level cognition, involves finding patterns in data, but many patterns are compatible with any finite set of data - so how does the cognitive system choose from the possibilities?
- using the simplicity principle, choose the simplest explanation of the data - intuitive, and has a long history (Occam’s razor)
- can quantify simplicity by measuring the (shortest) description from which the data can be reconstructed - trade off brevity against goodness of fit
- cognition as compression
- implement with minimum description length (MDL):
  - more regularity = more compression
  - no regularity = no compression, just reproduce data
- can measure compression with Shannon’s (1948) coding theorem - more probable events are assigned shorter code lengths: $\text{length (bits)} = \log_2(1/p)$
- measure code length to specify:
  - hypothesis about data (mappings)
  - data, given hypothesis (decoding accuracy, given mappings)

Method

- determine mappings & frequencies from monosyllabic corpus of children’s reading materials (Stuart et al., 2003), for mapping sizes:
  - words
  - CV/C (head/coda)
  - C/VC (onset/rime)
  - GPCs
- determine code length to describe, for each mapping size:
  - mappings
  - decoding accuracy, given mappings

Table 1. A list of reading schemes/series used by over a third of schools in the survey

| Name of scheme | % using scheme |
|---|---|
| Ginn 360 | 74% |
| Storychest | 58% |
| Magic Circle | 58% |
| 1 2 3 and Away | 50% |
| Griffin Pirates | 43% |
| Breakthrough to Literacy | 41% |
| Bangers and Mash | 40% |
| Wide range readers | 38% |
| Dragon Pirates | 37% |
| Through the rainbow | 34% |
| Ladybird read-it-yourself | 33% |
| Humming birds | 32% |
| Thunder the dinosaur | 29% |
| Link Up | 29% |
| Gay Way | 27% |
| Monster | 27% |
| Oxford Reading Tree | 27% |
| Once Upon a Time | 26% |
| Trog | 26% |

Included in database?: “Yes” is marked for 14 of the schemes (the row assignment is not recoverable here).

Code length for mappings

| words: sound | CV/C: letter | sound | freq | C/VC: letter | sound | freq | GPCs: letter | sound | freq |
|---|---|---|---|---|---|---|---|---|---|
| wiː | bi | bI | 1 | d | d | 7 | t | t | 17 |
| kæn | ca | kæ | 1 | g | g | 4 | i | I | 11 |
| bʌt | do | dɒ | 1 | f | f | 1 | a | æ | 11 |
| ɒn | fro | frɒ | 2 | wh | w | 1 | g | g | 7 |
| miː | ra | ræ | 1 | k | k | 4 | c | k | 4 |
| bæk | n | n | 9 | an | æn | 4 | ay | eɪ | 2 |
| kʌm | k | k | 1 | og | ɒg | 2 | a*e | eɪ | 1 |
| frɒg | lp | lp | 1 | elp | elp | 1 | | | |

For the word wiː:

$$\text{length} = \log_2\frac{1}{p(\text{w})} + \log_2\frac{1}{p(\text{iː})} + \log_2\frac{1}{p(\text{newline})}$$

For the CV/C mapping bi - bI:

$$\text{length} = \log_2\frac{1}{p(\text{b})} + \log_2\frac{1}{p(\text{i})} + \log_2\frac{1}{p(\text{space})} + \log_2\frac{1}{p(\text{b})} + \log_2\frac{1}{p(\text{I})} + \log_2\frac{1}{p(\text{newline})}$$

Code length for decoding accuracy

- apply letter-to-sound rules to produce a list of pronunciations
- arrange in rank order of most probable (computed from letter-to-sound frequencies) & note rank of correct pronunciation
- e.g., bread:
  - pronunciations produced: breId, bri:d, brɛd
  - in rank order: bri:d, brɛd, breId
  - the correct pronunciation, brɛd, has rank 2
- code length for data, given hypothesis $= \log_2(1/p(\text{rank}=2))$

[Figure: code length $\log_2(1/p)$ as a function of $p$.]

Simulations

- overall comparison between different unit sizes for whole vocabulary
- how does code length vary as a function of size of vocabulary for each unit size?
- optimize number of mappings by removing those whose removal reduces total code length
- compare different reading schemes

Comparing different unit sizes for whole vocabulary

[Figure: cost (bits) for rules, accuracy, and total, by rule size: Words, CV/C, C/VC, GPCs.]

Code length as a function of vocabulary size

[Figure: cost (bits) by vocabulary size for words, CV/C, C/VC, and GPC mappings, in two panels at different scales.]

Optimizing number of mappings

| Mapping size | All mappings: N | All mappings: total code length | Mappings remaining: N | Mappings remaining: total code length |
|---|---|---|---|---|
| Words | 3000 | 77,825 | - | - |
| Onset/rimes | 1141 | 48,612 | 404 | 29,420 |
| GPCs | 240 | 10,845 | 114 | 8,536 |

- GPCs: description length reduced by removing mainly inconsistent, low-frequency mappings

Comparing different reading schemes

| Scheme | N GPC rules |
|---|---|
| Jolly Phonics | 43 |
| Hutzler et al. (2004) | 67 |
| ERR (Solity & Vousden, 2008) | 77 |
| Letters & Sounds | 94 |
| THRASS | 106 |

[Figure: cost (bits) for rules, accuracy, and total, by scheme: Jolly N=43, Hutzler N=71, ERR N=77, LettSou N=94, THRASS N=106, simplicity N=111, all N=240.]

[Figure: cost (bits) for rules, accuracy, and total, by number of rules: 43, 71, 77, 94, 106, 111, 240.]

Decoding accuracy by scheme

[Figure: % correct for the schemes vs. the simplicity set: Jolly N=43, Hutzler N=71, ERR N=77, LettSou N=94, THRASS N=106, all N=240, simplicity N=111.]

- ERR implemented as a reading intervention in 12 Essex schools
- Data: from Shapiro & Solity (2008)

[Figure: BAS raw score by school age (base, YR, Y1, Y2), Comparison vs. ERR schools.]

| | Comparison | ERR |
|---|---|---|
| reading difficulty | 20% | 5% |
| serious reading difficulty | 5% | 1% |

- increase in reading scores significantly greater for ERR schools

Some conclusions

- a small amount of sight vocabulary accounts for a large proportion of text, but only small vocabularies are most simply described by whole words
- complements recent work by Treiman and colleagues that shows children learn better when the association between sound and print is non-arbitrary
- as a homogeneous set, GPCs provide a simpler explanation of the data
- choosing the best set could be important
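The code-length bookkeeping from the simplicity principle slides can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names, the single shared symbol table, and every probability below are hypothetical (chosen as powers of two so the arithmetic is easy to check). Only the formula length = log2(1/p), the "letters, space, sounds, newline" layout of a mapping, and the bread example come from the slides.

```python
import math


def code_length(p):
    """Shannon code length in bits for an event with probability p."""
    return math.log2(1 / p)


def mapping_cost(letters, sounds, symbol_probs):
    """Bits to write one mapping as: letters, space, sounds, newline."""
    symbols = list(letters) + ["space"] + list(sounds) + ["newline"]
    return sum(code_length(symbol_probs[s]) for s in symbols)


def rank_of_correct(candidates, correct):
    """Rank (1 = most probable) of the correct pronunciation."""
    ordered = sorted(candidates, key=candidates.get, reverse=True)
    return ordered.index(correct) + 1


# Hypothetical symbol probabilities (assumption, for illustration only).
SYMBOL_PROBS = {"b": 1 / 8, "i": 1 / 8, "I": 1 / 16,
                "space": 1 / 4, "newline": 1 / 4}

# Hypothetical candidate scores for "bread", ordered as in the slides'
# example: bri:d, then brɛd, then breId.
BREAD_CANDIDATES = {"bri:d": 0.5, "brɛd": 0.3, "breId": 0.2}

# Hypothetical distribution over ranks of the correct pronunciation.
RANK_PROBS = {1: 1 / 2, 2: 1 / 4, 3: 1 / 4}

# Cost of stating the hypothesis: the CV/C mapping bi - bI.
rules_bits = mapping_cost(("b", "i"), ("b", "I"), SYMBOL_PROBS)

# Cost of the data given the hypothesis: rank of the correct reading.
rank = rank_of_correct(BREAD_CANDIDATES, "brɛd")
accuracy_bits = code_length(RANK_PROBS[rank])

total_bits = rules_bits + accuracy_bits
print(rules_bits, rank, accuracy_bits, total_bits)  # 17.0 2 2.0 19.0
```

Summing `rules_bits` over all mappings in a set and `accuracy_bits` over all words in the vocabulary would give the "rules", "acc", and "total" costs compared in the simulations. A mapping is worth keeping only if it saves more accuracy bits than it costs in rule bits.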