pptx - University of Maryland Institute for Advanced Computer Studies

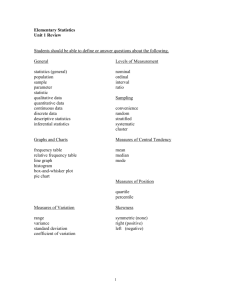

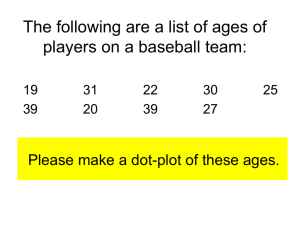

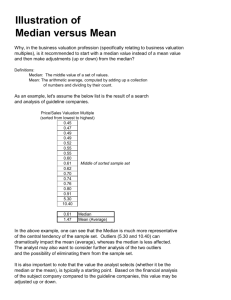

2. Big Data and Basic Statistics
ENEE 759D | ENEE 459D | CMSC 858Z
Prof. Tudor Dumitraș
Assistant Professor, ECE
University of Maryland, College Park
http://ter.ps/759d
https://www.facebook.com/SDSAtUMD

Today’s Lecture
• Where we’ve been
  – Security principles vs. security in practice
  – Introduction to security data science
• Where we’re going today
  – Big Data and basic statistics
  – Pilot project: proposal, approach, expectations
• Where we’re going next
  – Basic statistics (part 2)

The Trouble With Big Data
• Last time we talked about the data deluge
  – Data created/reproduced in 2010: 1,200 exabytes
  – Data collected to find the Higgs boson: 1 gigabyte / s
  – Yahoo: 200 petabytes across 20 clusters
  – Security:
    • Global spam in 2011: 62 billion / day
    • Malware variants created in 2011: 403 million
• We can store all this data (1 GB ~ 6¢)
• Analyzing the data can produce remarkable insights
How to analyze multi-TB data sets?

Challenges for Dealing with Big Data
• Big Data is hard to move around

| Operation | Time |
|---|---|
| Read 1 MB sequentially from main memory | 150 µs |
| Send 1 MB over 10 Gbps switch | 1,000 µs |
| Read 1 MB from 15K RPM disk | 1,000 µs |
| Compress 1 MB w/ fast algorithm (e.g., QuickLZ, Snappy) | 3,000 µs |
| Send 1 MB across datacenter | 100,000 µs |
| Send 1 MB from France datacenter to Los Angeles | 9,000,000 µs |

• Engineers must grasp parallel processing techniques
  – To access 1 TB in 1 min, must distribute data over 20 disks
  – MapReduce? Parallel DB? Dryad? Pregel? OpenMPI? PRAM?
• Engineers must understand how to interpret data correctly

Processing Data in Parallel
• How big is ‘Big Data’? (data volume)
  – Real answer: it depends
  – When your manager asks: [Figure: data-volume scale marked 1 TB, 5-8 TB and 10-20 TB, with the labels relational DB (MySQL, Postgres, etc.), single-node parallel DB, and distributed system (parallel DB, MapReduce)]
• Parallelism does not reduce asymptotic complexity
  – O(N log N) algorithm is still O(N log N) when run in parallel on K machines
  – But the constants are divided by K (and can have K > 1000)

Data Collection Rate
• Sometimes the data collection rate is too high (data velocity)
  – It may be too expensive to store all the data
  – The latency of data processing may not support interactivity
• Example: There are 600 million collisions per second in the Large Hadron Collider at CERN
  – This would amount to collecting ~1 PB/s (David Foster, CERN)
  – They only record one in $10^{13}$ (ten trillion) collisions (~100 MB/s – 1 GB/s)
• Techniques for dealing with data velocity
  – Sampling (as in the LHC)
  – Stream processing
  – Compression (e.g. Snappy, QuickLZ, RLE)
    • In some cases operating on lightly compressed data reduces latency!

The Curse of Many Data Formats
• Data comes from many sources and in many formats, often not standardized or even documented (data variety)
  – This is also known as the ‘data integration problem’
• Example: It is difficult for security products to analyze all the relevant data sources
  “In 84% of [targeted attacks between 2004-2012] clear evidence of the breach was present in local log files.” (DARPA ICAS/CAT BAA, 2013)
• A good approach: schema-on-read
  – The DB way: data loaded must have a schema (columns, data types, constraints)
    • In practice, enforcing a schema on load means that some data is discarded
  – The MapReduce way: store raw data, parse when analyzing

Junk Data is a Reality
• Data Quality (also called information quality, data veracity)
  – Can the data be trusted?
    • Example: information on vulnerabilities and attacks from Twitter
  – Is there inherent uncertainty in the values recorded?
    • Example: anti-virus (AV) detections are often heuristic, not black-and-white
  – Does the data collection procedure introduce noise or biases?
    • Example: data collected using an AV product is from security-minded people

Attributes of Big Data
• The 3 Vs of Big Data (source: ‘Challenges & Opportunities with Big Data,’ SIGMOD Community Whitepaper, 2012)
  – Data Volume: the size of the data
  – Data Velocity: the data collection rate
  – Data Variety: the diversity of sources and formats
• One more important attribute
  – Data Quality:
    • Are any data items corrupted or lost (e.g. owing to errors while loading)?
    • Is the data uncertain or unreliable?
    • What is the statistical profile of the data set? (e.g. distribution, outliers)
  – You must understand how to interpret data correctly

Exploratory Data Analysis
What does the data look like? (basic inspection)
• It is often useful to inspect the data visually (e.g. open it in Excel)
• Example: road test data from 1974 Motor Trend magazine

| | mpg | cyl | disp | hp | drat | wt | qsec | vs | am | gear | carb |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mazda RX4 | 21 | 6 | 160 | 110 | 3.9 | 2.62 | 16.46 | 0 | 1 | 4 | 4 |
| Mazda RX4 Wag | 21 | 6 | 160 | 110 | 3.9 | 2.875 | 17.02 | 0 | 1 | 4 | 4 |
| Datsun 710 | 22.8 | 4 | 108 | 93 | 3.85 | 2.32 | 18.61 | 1 | 1 | 4 | 1 |
| Hornet 4 Drive | 21.4 | 6 | 258 | 110 | 3.08 | 3.215 | 19.44 | 1 | 0 | 3 | 1 |
| Hornet Sportabout | 18.7 | 8 | 360 | 175 | 3.15 | 3.44 | 17.02 | 0 | 0 | 3 | 2 |
| Valiant | 18.1 | 6 | 225 | 105 | 2.76 | 3.46 | 20.22 | 1 | 0 | 3 | 1 |
| Duster 360 | 14.3 | 8 | 360 | 245 | 3.21 | 3.57 | 15.84 | 0 | 0 | 3 | 4 |
| … | | | | | | | | | | | |

Basic Data Visualization
What does the data look like? (basic inspection)
• Scatter plot (Matlab: plot, scatter R: ggplot(…) + geom_point())
  – Determine a relationship between two variables
  – Reveal trends, clustering of data
  ! Do not rely on colors alone to distinguish multiple data groups (e.g. cylinders)
  ! Watch out for overplotting
Source: Kanich et al., Spamalytics

Stem Plots and Histograms
What are the most frequent values in the data?
• Stem-and-leaf plot (R: stem)
  – Shows the bins with the most values and the values
  – Not that popular since the dawn of the computer age, but effective

    10 | 44
    12 | 3
    14 | 3702258
    16 | 438
    18 | 17227
    20 | 00445
    22 | 88
    24 | 4
    26 | 03
    28 |
    30 | 44
    32 | 49

• Histogram (R: ggplot(…) + geom_histogram())
  ! Pay attention to the bin width

Statistical Distributions
What does the data look like? (more rigorous)
• Probability density function (PDF) of the values you measure
  – PDF(x) is the probability density at x (for a discrete metric, the probability that the metric takes the value x)
  – $\int_a^b \mathrm{PDF}(x)\,dx = \Pr[a \le \text{metric} \le b]$
  – Estimation from empirical data (Matlab: ksdensity R: density)
  [Figure: PDF plot with the tail of the distribution marked]
• Cumulative distribution function (CDF)
  – CDF(x) is the probability that the metric takes a value less than or equal to x
  – $\mathrm{CDF}(x) = \int_{-\infty}^{x} \mathrm{PDF}(u)\,du = \Pr[\text{metric} \le x]$
  – Estimation (R: ecdf)
  [Figure: CDF plot with the 25th percentile, median, and 75th percentile marked]

Summary Statistics
What does the data look like? (in summary)
• Measures of centrality
  – Mean = sum / length (Matlab, R: mean)
  – Median = half the measured values are below this point (Matlab, R: median)
  – Mode = measurement that appears most often in the dataset
  “80% of analytics is sums and averages.” (Aaron Kimball, wibidata)
  [Figure: distribution with the mode, median, and mean marked]
• Measures of spread
  – Range = maximum – minimum (Matlab, R: range)
  – Standard deviation (σ) (Matlab: std R: sd)
    $\sigma = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(X_i - \bar{X}\right)^2}$
  – Coefficient of variation = σ / mean
    • Independent of the measurement units

Percentiles and Outliers
What does the data look like? (in summary)
• Percentiles
  – Nth percentile: X such that N% of the measured samples are less than X
    • The median is the 50th percentile
    • The 25th and 75th percentiles are also called the 1st and 3rd quartiles (Q1 and Q3), respectively
  – Matlab: prctile R: quantile
  ! The “five number” summary of a data set: <min, Q1, median, Q3, max>
• Outliers
  – “Unusual” values, significantly higher/lower than the other measurements
  ! Must reason about them: Measurement error? Heavy-tailed distribution? An interesting (unexplained) phenomenon?
  – Simple detection tests:
    • 3σ test: $X_{\text{outlier}} > \bar{X} + 3\sigma$
    • 1.5 * IQR test: $X_{\text{outlier}} > Q_3 + 1.5\,(Q_3 - Q_1)$
    • R package outliers
  ! The median is more robust to outliers than the mean

Boxplots
What does the data look like? (comparisons)
• Box-and-whisker plots are useful for comparing probability distributions
  – The box represents the size of the inter-quartile range (IQR)
  – The whiskers indicate the maximum and minimum values
  – The median is also shown
  – Matlab: boxplot R: ggplot(..)+geom_boxplot()
• In 1970, US Congress instituted a random selection process for the military draft
  – All 366 possible birth dates were placed in a rotating drum and selected one by one
  – The order in which the dates were drawn defined the priority for drafting
  ! Boxplots show that men born later in the year were more likely to be drafted
From http://lib.stat.cmu.edu/DASL/Stories/DraftLottery.html

Pilot Project
• Propose a security problem and a data set
  – Some ideas available on the web page
• 1–2 page report due on September 18th (hard deadline)
  – Hypothesis
  – Volume: how much data (e.g. number of rows, columns, bytes)
  – Velocity: how fast is the data updated
  – Variety: how to access/analyze the code programmatically
    • JSON/CSV/DB dump, screen scrape, etc.
    • What language / library to use to read the data
  – Data quality
    • Statistical distribution? Outliers? Missing fields? Junk data? etc.

A Note On Homework Submissions
• Submit BibTeX files in plain text
  – No Word DOC, no RTF, no HTML!
  – Do not remove BibTeX syntax (e.g. the @ sign before entries)
    • This confuses my parser and I may think that you did not submit the homework if I don’t catch the error!
• Make your contribution statements more summarizing than descriptive
  – Remembering “introduced a methodology” isn’t as useful to you later on as “the key idea is XYZ”
  – Most of you wrote precise statements for weaknesses – do the same for contributions!

Discussion Outside the Classroom
• Piazza: https://piazza.com/umd/fall2013/enee759d/home
  – Post project proposals, reports, project reviews
  – Post questions and general discussion topics
  – DO NOT post paper reviews
  – DO NOT discuss your paper review with your classmates before the submission deadline, on Piazza or anywhere
    • You are welcome (and encouraged) to discuss the papers after the deadline
• Facebook: https://www.facebook.com/SDSAtUMD
  – For posting interesting articles, videos, etc. related to security data science
  – For spreading the word

Review of Lecture
• What did we learn?
  – Attributes of Big Data
  – Probability distributions
• What’s next?
  – Paper discussion: ‘Spamalytics: An Empirical Analysis of Spam Marketing Conversion’
  – Basic statistics (part 2)
• Deadline reminder
  – Post pilot project proposal on Piazza by the end of the day (soft deadline)
• Office hours after class
  – Second homework due on Tuesday at 6 pm
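
As a recap of the summary statistics and the two simple outlier tests covered in this lecture, here is a minimal Python sketch. It is not part of the original slides, which name only Matlab and R functions. The data are the 32 mpg values of the Motor Trend data set (R's built-in mtcars, the same values the stem-and-leaf plot encodes). The function names `summarize`, `three_sigma_outliers`, and `iqr_outliers` are hypothetical, the quartile convention (`method="inclusive"`) is an assumption, and the lower bounds of both tests are added by symmetry since the slides show only the upper bounds. Python 3.8 or later is assumed.

```python
import statistics

# mpg column of the 1974 Motor Trend road-test data (R's mtcars).
# The lecture table shows the first seven of these 32 values.
MPG = [
    21.0, 21.0, 22.8, 21.4, 18.7, 18.1, 14.3, 24.4,
    22.8, 19.2, 17.8, 16.4, 17.3, 15.2, 10.4, 10.4,
    14.7, 32.4, 30.4, 33.9, 21.5, 15.5, 15.2, 13.3,
    19.2, 27.3, 26.0, 30.4, 15.8, 19.7, 15.0, 21.4,
]


def quartiles(xs):
    """Return (Q1, median, Q3); the 'inclusive' method is an assumed convention."""
    q1, q2, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    return q1, q2, q3


def summarize(xs):
    """Compute the centrality and spread measures named in the lecture."""
    mean = statistics.mean(xs)
    sigma = statistics.stdev(xs)  # sample standard deviation, n - 1 denominator
    q1, median, q3 = quartiles(xs)
    return {
        "mean": mean,
        "median": median,
        "modes": statistics.multimode(xs),  # every value tied for most frequent
        "range": max(xs) - min(xs),
        "sigma": sigma,
        "cv": sigma / mean,  # coefficient of variation, unit-free
        "five_number": (min(xs), q1, median, q3, max(xs)),
    }


def three_sigma_outliers(xs):
    """Values more than 3 sigma from the mean (lower side added by symmetry)."""
    mean = statistics.mean(xs)
    sigma = statistics.stdev(xs)
    return [x for x in xs if x > mean + 3 * sigma or x < mean - 3 * sigma]


def iqr_outliers(xs):
    """Values beyond Q3 + 1.5*IQR or below Q1 - 1.5*IQR (lower side by symmetry)."""
    q1, _, q3 = quartiles(xs)
    iqr = q3 - q1
    return [x for x in xs if x > q3 + 1.5 * iqr or x < q1 - 1.5 * iqr]


if __name__ == "__main__":
    for name, value in summarize(MPG).items():
        print(f"{name}: {value}")
    print("3-sigma outliers:", three_sigma_outliers(MPG))
    print("1.5*IQR outliers:", iqr_outliers(MPG))
```

On this data the 3σ test flags nothing, while the 1.5 * IQR test flags the single value 33.9, which sits just above the upper fence. That verdict is borderline and depends on the quartile convention chosen, which is a small illustration of why outliers must be reasoned about rather than removed mechanically.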