8-Query Optimization

advertisement

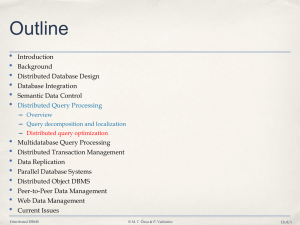

Outline

• Introduction

• Background

• Distributed Database Design

• Database Integration

• Semantic Data Control

• Distributed Query Processing

➡ Overview

➡ Query decomposition and localization

➡ Distributed query optimization

• Multidatabase Query Processing

• Distributed Transaction Management

• Data Replication

• Parallel Database Systems

• Distributed Object DBMS

• Peer-to-Peer Data Management

• Web Data Management

• Current Issues

Distributed DBMS

© M. T. Özsu & P. Valduriez

Ch.8/1

Step 3 – Global Query Optimization

Input: Fragment query

• Find the best (not necessarily optimal) global schedule

➡ Minimize a cost function

➡ Distributed join processing

✦ Bushy vs. linear trees

✦ Which relation to ship where?

✦ Ship-whole vs. ship-as-needed

➡ Decide on the use of semijoins

✦ A semijoin saves on communication at the expense of more local processing.

➡ Join methods

✦ Nested loop vs. ordered joins (merge join or hash join)

Cost-Based Optimization

• Solution space

➡ The set of equivalent algebra expressions (query trees).

• Cost function (in terms of time)

➡ I/O cost + CPU cost + communication cost

➡ These might have different weights in different distributed environments (LAN vs. WAN).

➡ Can also maximize throughput

• Search algorithm

➡ How do we move inside the solution space?

➡ Exhaustive search, heuristic algorithms (iterative improvement, simulated annealing, genetic, …)

Query Optimization Process

[Figure: the input query goes to search space generation, which applies transformation rules to produce equivalent QEPs; the search strategy then uses the cost model to select the best QEP.]

Search Space

• Search space characterized by alternative execution plans

• Focus on join trees

• For N relations, there are O(N!) equivalent join trees that can be obtained by applying commutativity and associativity rules

SELECT ENAME, RESP

FROM EMP, ASG, PROJ

WHERE EMP.ENO = ASG.ENO

AND ASG.PNO = PROJ.PNO

[Figure: three equivalent join trees for this query]

➡ (EMP ⋈ENO ASG) ⋈PNO PROJ

➡ EMP ⋈ENO (ASG ⋈PNO PROJ)

➡ (EMP × PROJ) ⋈ENO,PNO ASG

Search Space

• Restrict by means of heuristics

➡ Perform unary operations before binary operations

➡ …

• Restrict the shape of the join tree

➡ Consider only linear trees, ignore bushy ones

[Figure: linear join tree ((R1 ⋈ R2) ⋈ R3) ⋈ R4 and bushy join tree (R1 ⋈ R2) ⋈ (R3 ⋈ R4)]

Search Strategy

• How to "move" in the search space

• Deterministic

➡ Start from base relations and build plans by adding one relation at each step

➡ Dynamic programming: breadth-first

➡ Greedy: depth-first

• Randomized

➡ Search for optimalities around a particular starting point

➡ Trade optimization time for execution time

➡ Better when > 10 relations

➡ Simulated annealing

➡ Iterative improvement
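The deterministic, breadth-first strategy can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not the algorithm of any particular system: only linear (left-deep) plans are considered, the cost of a plan is taken to be the sum of its estimated intermediate result sizes, and the function name `best_linear_join_order` and its inputs are hypothetical.

```python
from itertools import combinations


def best_linear_join_order(cards, selectivity):
    """Dynamic programming over subsets of relations (linear plans only).

    cards: dict mapping relation name -> cardinality.
    selectivity: dict mapping frozenset({r, s}) -> join selectivity factor;
        a missing pair is treated as a Cartesian product (factor 1.0).
    Assumption of this sketch: plan cost = sum of intermediate result sizes.
    Returns (cost, result cardinality, join order).
    """
    names = sorted(cards)
    # best[subset] = (cost, cardinality, order) of the cheapest plan found
    best = {frozenset([r]): (0.0, float(cards[r]), (r,)) for r in names}
    # Breadth-first: all plans over k relations are built before k + 1
    for size in range(2, len(names) + 1):
        for subset in map(frozenset, combinations(names, size)):
            for r in sorted(subset):
                rest = subset - {r}
                cost, card, order = best[rest]
                sf = 1.0
                for s in rest:
                    sf *= selectivity.get(frozenset({r, s}), 1.0)
                new_card = card * cards[r] * sf
                candidate = (cost + new_card, new_card, order + (r,))
                if subset not in best or candidate[0] < best[subset][0]:
                    best[subset] = candidate
    return best[frozenset(names)]
```

Each step adds one relation to the best plan already known for a smaller set of relations, which is what lets the search discard dominated partial plans instead of enumerating all N! orders.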


Search Strategies

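• Deterministic

[Figure: the plan is built one relation at a time: R1 ⋈ R2, then (R1 ⋈ R2) ⋈ R3, then ((R1 ⋈ R2) ⋈ R3) ⋈ R4]

• Randomized

[Figure: one complete join tree over R1, R2, R3 is transformed into another join tree over the same relations]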

Cost Functions

• Total Time (or Total Cost)

➡ Reduce each cost (in terms of time) component individually

➡ Do as little of each cost component as possible

➡ Optimizes the utilization of the resources

✦ Increases system throughput

• Response Time

➡ Do as many things as possible in parallel

➡ May increase total time because of increased total activity

Total Cost

Summation of all cost factors

Total cost = CPU cost + I/O cost + communication cost

CPU cost = unit instruction cost × no. of instructions

I/O cost = unit disk I/O cost × no. of disk I/Os

Communication cost = message initiation + transmission

Total Cost Factors

• Wide area network

➡ Message initiation and transmission costs are high

➡ Local processing cost is low (fast mainframes or minicomputers)

➡ Ratio of communication to I/O costs = 20:1

• Local area networks

➡ Communication and local processing costs are more or less equal

➡ Ratio of communication to I/O costs = 1:1.6

Response Time

Elapsed time between the initiation and the completion of a query

Response time = CPU time + I/O time + communication time

CPU time = unit instruction time × no. of sequential instructions

I/O time = unit I/O time × no. of sequential I/Os

Communication time = unit msg initiation time × no. of sequential msgs + unit transmission time × no. of sequential bytes

Example

[Figure: Site 1 sends x units to Site 3; Site 2 sends y units to Site 3]

Assume that only the communication cost is considered

Total time = 2 × message initialization time + unit transmission time × (x + y)

Response time = max {time to send x from 1 to 3, time to send y from 2 to 3}

time to send x from 1 to 3 = message initialization time + unit transmission time × x

time to send y from 2 to 3 = message initialization time + unit transmission time × y
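The difference between the two measures can be checked numerically. The following Python sketch simply encodes the formulas of this example; the function and parameter names are illustrative choices, not part of the original material.

```python
def send_time(units, init_time, unit_transmission_time):
    """Time to send `units` of data in one message stream."""
    return init_time + unit_transmission_time * units


def total_time(x, y, init_time, unit_transmission_time):
    """Both transfers are charged in full, as if they ran one after another."""
    return 2 * init_time + unit_transmission_time * (x + y)


def response_time(x, y, init_time, unit_transmission_time):
    """The two transfers run in parallel, so only the slower one counts."""
    return max(
        send_time(x, init_time, unit_transmission_time),
        send_time(y, init_time, unit_transmission_time),
    )
```

With x = 100, y = 300, an initialization time of 10 and a unit transmission time of 2, the total time is 820 while the response time is 610, because the two transfers overlap.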


Optimization Statistics

• Primary cost factor: size of intermediate relations

➡ Need to estimate their sizes

• Making the statistics more precise makes them more costly to maintain

• Simplifying assumption: uniform distribution of attribute values in a relation

Statistics

• For each relation R[A1, A2, …, An] fragmented as R1, …, Rr

➡ length of each attribute: $\text{length}(A_i)$

➡ the number of distinct values for each attribute in each fragment: $\text{card}(\Pi_{A_i}(R_j))$

➡ maximum and minimum values in the domain of each attribute: $\min(A_i)$, $\max(A_i)$

➡ the cardinalities of each domain: $\text{card}(\text{dom}[A_i])$

• The cardinalities of each fragment: $\text{card}(R_j)$

• Selectivity factor of each operation for relations

➡ For joins

$$SF_{\bowtie}(R, S) = \frac{\text{card}(R \bowtie S)}{\text{card}(R) \times \text{card}(S)}$$

Intermediate Relation Sizes

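Selection

$$\text{size}(R) = \text{card}(R) \times \text{length}(R)$$

$$\text{card}(\sigma_F(R)) = SF_{\sigma}(F) \times \text{card}(R)$$

where

$$SF_{\sigma}(A = \text{value}) = \frac{1}{\text{card}(\Pi_A(R))}$$

$$SF_{\sigma}(A > \text{value}) = \frac{\max(A) - \text{value}}{\max(A) - \min(A)}$$

$$SF_{\sigma}(A < \text{value}) = \frac{\text{value} - \min(A)}{\max(A) - \min(A)}$$

$$SF_{\sigma}(p(A_i) \wedge p(A_j)) = SF_{\sigma}(p(A_i)) \times SF_{\sigma}(p(A_j))$$

$$SF_{\sigma}(p(A_i) \vee p(A_j)) = SF_{\sigma}(p(A_i)) + SF_{\sigma}(p(A_j)) - SF_{\sigma}(p(A_i)) \times SF_{\sigma}(p(A_j))$$

$$SF_{\sigma}(A \in \{\text{values}\}) = SF_{\sigma}(A = \text{value}) \times \text{card}(\{\text{values}\})$$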
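The selection formulas translate directly into code. The Python sketch below assumes uniformly distributed attribute values; the function names are illustrative. For the `A < value` case it uses (value − min(A)) / (max(A) − min(A)), the complement of the `A > value` case, so that the two factors sum to 1.

```python
def sf_equals(distinct_values):
    """SF(A = value): one value out of card(project_A(R)) distinct values."""
    return 1.0 / distinct_values


def sf_greater(value, min_a, max_a):
    """SF(A > value) under the uniform distribution assumption."""
    return (max_a - value) / (max_a - min_a)


def sf_less(value, min_a, max_a):
    """SF(A < value), the complement of SF(A > value)."""
    return (value - min_a) / (max_a - min_a)


def sf_and(sf_i, sf_j):
    """Conjunction of two predicates, assumed independent."""
    return sf_i * sf_j


def sf_or(sf_i, sf_j):
    """Disjunction of two predicates, assumed independent."""
    return sf_i + sf_j - sf_i * sf_j


def sf_in(distinct_values, number_of_values):
    """SF(A in {values}): equality selectivity times the size of the set."""
    return sf_equals(distinct_values) * number_of_values


def selection_card(card_r, sf):
    """Estimated cardinality of a selection: SF(F) * card(R)."""
    return sf * card_r
```

For example, with 300 tuples and an attribute ranging from 0 to 36, the predicate `A > 18` has a selectivity of 0.5, so the selection is estimated at 150 tuples.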


Intermediate Relation Sizes

Projection

$$\text{card}(\Pi_A(R)) = \text{card}(R)$$

Cartesian Product

$$\text{card}(R \times S) = \text{card}(R) \times \text{card}(S)$$

Union

upper bound: $\text{card}(R \cup S) = \text{card}(R) + \text{card}(S)$

lower bound: $\text{card}(R \cup S) = \max\{\text{card}(R), \text{card}(S)\}$

Set Difference

upper bound: $\text{card}(R - S) = \text{card}(R)$

lower bound: 0

Intermediate Relation Size

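Join

➡ Special case: A is a key of R and B is a foreign key of S

$$\text{card}(R \bowtie_{A=B} S) = \text{card}(S)$$

➡ More general:

$$\text{card}(R \bowtie S) = SF_{\bowtie} \times \text{card}(R) \times \text{card}(S)$$

Semijoin

$$\text{card}(R \ltimes_A S) = SF_{\ltimes}(S.A) \times \text{card}(R)$$

where

$$SF_{\ltimes}(R \ltimes_A S) = SF_{\ltimes}(S.A) = \frac{\text{card}(\Pi_A(S))}{\text{card}(\text{dom}[A])}$$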
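As a small illustration, the join and semijoin estimates can be written as Python functions. This is a sketch of the formulas only; the function and parameter names are illustrative assumptions.

```python
def join_card(card_r, card_s, sf_join):
    """General case: card(R join S) = SF * card(R) * card(S)."""
    return sf_join * card_r * card_s


def key_foreign_key_join_card(card_s):
    """Special case: A is a key of R and B is a foreign key of S."""
    return card_s


def semijoin_sf(distinct_a_in_s, domain_card):
    """SF of R semijoin_A S: card(project_A(S)) / card(dom[A])."""
    return distinct_a_in_s / domain_card


def semijoin_card(card_r, distinct_a_in_s, domain_card):
    """card(R semijoin_A S) = SF(S.A) * card(R)."""
    return semijoin_sf(distinct_a_in_s, domain_card) * card_r
```

For instance, if S contains 50 of the 200 values in dom[A], a semijoin is estimated to keep a quarter of the tuples of R.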


Histograms for Selectivity Estimation

• For skewed data, the uniform distribution assumption of attribute values yields inaccurate estimations

• Use a histogram for each skewed attribute A

➡ Histogram = set of buckets

✦ Each bucket describes a range of values of A, with its average frequency f (number of tuples with A in that range) and number of distinct values d

✦ Buckets can be adjusted to different ranges

• Examples

➡ Equality predicate

✦ With (value in Range_i), we have: $SF_{\sigma}(A = \text{value}) = 1/d_i$

➡ Range predicate

✦ Requires identifying relevant buckets and summing up their frequencies

Histogram Example

For ASG.DUR=18: we have SF=1/12 so the card. of selection is 300/12 = 25 tuples

For ASG.DUR≤18: we have min(range3)=12 and max(range3)=24 so the card. of selection is 100+75+(((18−12)/(24−12))×50) = 200 tuples
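The two estimates can be reproduced with a short Python sketch. Only the frequencies 100, 75 and 50, the third bucket's range [12, 24], its 12 distinct values and the relation cardinality of 300 come from the text; the boundaries and distinct-value counts of the first two buckets, and all names, are placeholders assumed for illustration.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    low: float      # lower bound of the value range
    high: float     # upper bound of the value range
    frequency: int  # number of tuples whose value falls in the range
    distinct: int   # number of distinct values in the range


def estimate_eq(buckets, value, card_r):
    """Equality predicate: SF = 1/d_i for the bucket containing the value."""
    for b in buckets:
        if b.low <= value <= b.high:
            return card_r / b.distinct
    raise ValueError("value is outside every bucket")


def estimate_le(buckets, value):
    """Range predicate A <= value: full buckets plus a prorated partial one."""
    total = 0.0
    for b in buckets:
        if value >= b.high:
            total += b.frequency
        elif value > b.low:
            total += (value - b.low) / (b.high - b.low) * b.frequency
    return total


# First two ranges and distinct counts are assumed placeholders.
asg_dur = [Bucket(0, 6, 100, 6), Bucket(6, 12, 75, 6), Bucket(12, 24, 50, 12)]
```

Calling `estimate_eq(asg_dur, 18, 300)` and `estimate_le(asg_dur, 18)` yields the 25 and 200 tuples computed by hand.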


Centralized Query Optimization

• Dynamic (Ingres project at UCB)

➡ Interpretive

• Static (System R project at IBM)

➡ Exhaustive search

• Hybrid (Volcano project at OGI)

➡ Choose node within plan

Dynamic Algorithm

• Decompose each multi-variable query into a sequence of mono-variable queries with a common variable

• Process each by a one-variable query processor

➡ Choose an initial execution plan (heuristics)

➡ Order the rest by considering intermediate relation sizes

• No statistical information is maintained

Dynamic Algorithm – Decomposition

• Replace an n-variable query q by a series of queries q1 → q2 → … → qn, where qi uses the result of qi-1.

• Detachment

➡ Query q decomposed into q' → q", where q' and q" have a common variable which is the result of q'

• Tuple substitution

➡ Replace the value of each tuple with actual values and simplify the query

q(V1, V2, ..., Vn) → (q'(t1, V2, V3, ..., Vn), t1 ∈ R)

Detachment

q:

SELECT V2.A2, V3.A3, …, Vn.An

FROM R1 V1, …, Rn Vn

WHERE P1(V1.A1') AND P2(V1.A1, V2.A2, …, Vn.An)

q':

SELECT V1.A1 INTO R1'

FROM R1 V1

WHERE P1(V1.A1)

q":

SELECT V2.A2, …, Vn.An

FROM R1' V1, R2 V2, …, Rn Vn

WHERE P2(V1.A1, V2.A2, …, Vn.An)

Detachment Example

Names of employees working on CAD/CAM project

q1:

SELECT EMP.ENAME

FROM EMP, ASG, PROJ

WHERE EMP.ENO=ASG.ENO

AND ASG.PNO=PROJ.PNO

AND PROJ.PNAME="CAD/CAM"

q11:

SELECT PROJ.PNO INTO JVAR

FROM PROJ

WHERE PROJ.PNAME="CAD/CAM"

q':

SELECT EMP.ENAME

FROM EMP, ASG, JVAR

WHERE EMP.ENO=ASG.ENO

AND ASG.PNO=JVAR.PNO

Detachment Example (cont’d)

q':

SELECT EMP.ENAME

FROM EMP, ASG, JVAR

WHERE EMP.ENO=ASG.ENO

AND ASG.PNO=JVAR.PNO

q12:

SELECT ASG.ENO INTO GVAR

FROM ASG, JVAR

WHERE ASG.PNO=JVAR.PNO

q13:

SELECT EMP.ENAME

FROM EMP, GVAR

WHERE EMP.ENO=GVAR.ENO

Tuple Substitution

q11 is a mono-variable query

q12 and q13 are subject to tuple substitution

Assume GVAR has two tuples only: 〈E1〉 and 〈E2〉

Then q13 becomes

q131:

SELECT EMP.ENAME

FROM EMP

WHERE EMP.ENO="E1"

q132:

SELECT EMP.ENAME

FROM EMP

WHERE EMP.ENO="E2"
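Tuple substitution can be mimicked with plain string templating. The Python sketch below is only an illustration of the idea: the helper `tuple_substitution` and the `{value}` placeholder are hypothetical, and a real system would substitute values inside its internal query representation rather than in SQL text.

```python
def tuple_substitution(template, values):
    """Produce one mono-variable query per tuple value of the substituted variable."""
    return [template.format(value=v) for v in values]


# q13 with GVAR.ENO replaced by a placeholder for the substituted value
q13_template = 'SELECT EMP.ENAME FROM EMP WHERE EMP.ENO="{value}"'
gvar = ["E1", "E2"]
queries = tuple_substitution(q13_template, gvar)
```

Each element of `queries` corresponds to one of the queries q131 and q132, and the number of generated queries equals the cardinality of GVAR.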


Static Algorithm

• Simple (i.e., mono-relation) queries are executed according to the best access path

• Execute joins

➡ Determine the possible ordering of joins

➡ Determine the cost of each ordering

➡ Choose the join ordering with minimal cost

Static Algorithm

For joins, two alternative algorithms:

• Nested loops

for each tuple of external relation (cardinality n1)

    for each tuple of internal relation (cardinality n2)

        join two tuples if the join predicate is true

    end

end

➡ Complexity: n1 × n2

• Merge join

sort relations

merge relations

➡ Complexity: n1 + n2 if relations are previously sorted and equijoin
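Both join methods can be written out as a runnable Python sketch. Relations are modeled as lists of dictionaries and the join is an equijoin on a single common attribute; these modeling choices and the function names are assumptions made for illustration. Note that `merge_join` sorts its inputs first, so the n1 + n2 bound applies only to the merge phase.

```python
def nested_loop_join(outer, inner, key):
    """Compare every outer tuple with every inner tuple: n1 * n2 comparisons."""
    result = []
    for o in outer:
        for i in inner:
            if o[key] == i[key]:
                result.append({**o, **i})
    return result


def merge_join(left, right, key):
    """Sort both inputs on the join attribute, then merge them in one pass."""
    left = sorted(left, key=lambda t: t[key])
    right = sorted(right, key=lambda t: t[key])
    result = []
    i = j = 0
    while i < len(left) and j < len(right):
        lk, rk = left[i][key], right[j][key]
        if lk < rk:
            i += 1
        elif lk > rk:
            j += 1
        else:
            # Find the run of right tuples sharing this join value
            j_end = j
            while j_end < len(right) and right[j_end][key] == lk:
                j_end += 1
            # Pair every left tuple with that value against the whole run
            while i < len(left) and left[i][key] == lk:
                for r in right[j:j_end]:
                    result.append({**left[i], **r})
                i += 1
            j = j_end
    return result
```

Both functions return the same set of joined tuples; they differ only in how many tuple comparisons are needed to produce it.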


Static Algorithm – Example

Names of employees working on the CAD/CAM project

Assume

➡ EMP has an index on ENO,

➡ ASG has an index on PNO,

➡ PROJ has an index on PNO and an index on PNAME

[Figure: join graph with edges EMP –ENO– ASG and ASG –PNO– PROJ]

Example (cont’d)

Choose the best access paths to each relation

➡ EMP: sequential scan (no selection on EMP)

➡ ASG: sequential scan (no selection on ASG)

➡ PROJ: index on PNAME (there is a selection on PROJ based on PNAME)

Determine the best join ordering

➡ EMP ⋈ ASG ⋈ PROJ

➡ ASG ⋈ PROJ ⋈ EMP

➡ PROJ ⋈ ASG ⋈ EMP

➡ ASG ⋈ EMP ⋈ PROJ

➡ EMP × PROJ ⋈ ASG

➡ PROJ × EMP ⋈ ASG

➡ Select the best ordering based on the join costs evaluated according to the two methods

Static Algorithm

Alternatives

[Figure: search tree of alternatives, starting from each base relation]

• EMP: EMP ⋈ ASG (pruned), EMP × PROJ (pruned)

• ASG: ASG ⋈ EMP, extended to (ASG ⋈ EMP) ⋈ PROJ; ASG ⋈ PROJ (pruned)

• PROJ: PROJ ⋈ ASG, extended to (PROJ ⋈ ASG) ⋈ EMP; PROJ × EMP (pruned)

Best total join order is one of

((ASG ⋈ EMP) ⋈ PROJ)

((PROJ ⋈ ASG) ⋈ EMP)

Static Algorithm

• ((PROJ ⋈ ASG) ⋈ EMP) has a useful index on the select attribute and direct access to the join attributes of ASG and EMP

• Therefore, choose it with the following access methods:

➡ select PROJ using index on PNAME

➡ then join with ASG using index on PNO

➡ then join with EMP using index on ENO

Hybrid optimization

• In general, static optimization is more efficient than dynamic optimization

➡ Adopted by all commercial DBMS

• But even with a sophisticated cost model (with histograms), accurate cost prediction is difficult

• Example

➡ Consider a parametric query with predicate

WHERE R.A = $a /* $a is a parameter */

➡ The only possible assumption at compile time is uniform distribution of values

• Solution: Hybrid optimization

➡ Choose-plan done at runtime, based on the actual parameter binding
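The choose-plan idea can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the two candidate plans, the per-value frequency table and the 10% threshold are hypothetical, and a real optimizer would compare the estimated costs of the precompiled subplans instead of applying a fixed cutoff.

```python
def choose_plan(value, value_frequencies, card_r, threshold=0.1):
    """Pick a precompiled subplan once the parameter $a is bound at runtime.

    value_frequencies: dict mapping attribute value -> number of tuples,
        standing in for the statistics available at runtime.
    threshold: assumed selectivity above which a full scan is preferred.
    """
    selectivity = value_frequencies.get(value, 0) / card_r
    if selectivity <= threshold:
        return "index_scan"       # few matching tuples: use the index on R.A
    return "sequential_scan"      # many matching tuples: scan the relation
```

With a skewed attribute where value "A" covers 900 of 1000 tuples and "B" only 10, the same compiled query runs as a sequential scan for `$a = "A"` and as an index scan for `$a = "B"`, a distinction that the uniform distribution assumption cannot make at compile time.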


Hybrid Optimization Example

[Figure: query plan with choose-plan operators that select a subplan at runtime, depending on whether $a=A]

Join Ordering in Fragment Queries

• Ordering joins

➡ Distributed INGRES

➡ System R*

➡ Two-step

• Semijoin ordering

➡ SDD-1

Join Ordering

• Consider two relations only

➡ if size(R) < size(S), ship R to the site of S

➡ if size(R) > size(S), ship S to the site of R

• Multiple relations are more difficult because there are too many alternatives.

➡ Compute the cost of all alternatives and select the best one.

✦ Necessary to compute the size of intermediate relations, which is difficult.

➡ Use heuristics

Join Ordering – Example

Consider

PROJ ⋈PNO ASG ⋈ENO EMP

[Figure: join graph EMP (Site 1) –ENO– ASG (Site 2) –PNO– PROJ (Site 3)]

Join Ordering – Example

Execution alternatives:

1. EMP → Site 2

Site 2 computes EMP' = EMP ⋈ ASG

EMP' → Site 3

Site 3 computes EMP' ⋈ PROJ

2. ASG → Site 1

Site 1 computes EMP' = EMP ⋈ ASG

EMP' → Site 3

Site 3 computes EMP' ⋈ PROJ

3. ASG → Site 3

Site 3 computes ASG' = ASG ⋈ PROJ

ASG' → Site 1

Site 1 computes ASG' ⋈ EMP

4. PROJ → Site 2

Site 2 computes PROJ' = PROJ ⋈ ASG

PROJ' → Site 1

Site 1 computes PROJ' ⋈ EMP

5. EMP → Site 2

PROJ → Site 2

Site 2 computes EMP ⋈ PROJ ⋈ ASG

Semijoin Algorithms

• Consider the join of two relations:

➡ R[A] (located at site 1)

➡ S[A] (located at site 2)

• Alternatives:

1. Do the join R ⋈A S

2. Perform one of the semijoin equivalents

R ⋈A S ⇔ (R ⋉A S) ⋈A S

⇔ R ⋈A (S ⋉A R)

⇔ (R ⋉A S) ⋈A (S ⋉A R)

Semijoin Algorithms

• Perform the join

➡ send R to Site 2

➡ Site 2 computes R ⋈A S

• Consider semijoin (R ⋉A S) ⋈A S

➡ S' = ∏A(S)

➡ S' → Site 1

➡ Site 1 computes R' = R ⋉A S'

➡ R' → Site 2

➡ Site 2 computes R' ⋈A S

Semijoin is better if

size(∏A(S)) + size(R ⋉A S) < size(R)
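The comparison reduces to the amount of data shipped by each strategy. A minimal Python sketch follows, with sizes in arbitrary units and illustrative function names; it considers transfer volume only and ignores the extra local processing that the semijoin requires.

```python
def join_transfer(size_r):
    """Direct join: all of R is shipped to Site 2."""
    return size_r


def semijoin_transfer(size_proj_a_s, size_r_semijoin_s):
    """Semijoin: ship the projection of S on A to Site 1, then the reduced R to Site 2."""
    return size_proj_a_s + size_r_semijoin_s


def semijoin_is_better(size_proj_a_s, size_r_semijoin_s, size_r):
    """True when the semijoin program ships less data than the direct join."""
    return semijoin_transfer(size_proj_a_s, size_r_semijoin_s) < join_transfer(size_r)
```

For example, with size(R) = 1000, a projection of 50 and a reduced R of 200, the semijoin ships 250 units instead of 1000. If the semijoin barely reduces R, the projection is pure overhead and the direct join wins.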


Distributed Dynamic Algorithm

1. Execute all monorelation queries (e.g., selection, projection)

2. Reduce the multirelation query to produce irreducible subqueries q1 → q2 → … → qn such that there is only one relation between qi and qi+1

3. Choose qi involving the smallest fragments to execute (call MRQ')

4. Find the best execution strategy for MRQ'

a) Determine processing site

b) Determine fragments to move

5. Repeat 3 and 4

Static Approach

• Cost function includes local processing as well as transmission

• Considers only joins

• "Exhaustive" search

• Compilation

• Published papers provide solutions to handling horizontal and vertical fragmentations but the implemented prototype does not

Static Approach – Performing Joins

• Ship whole

➡ Larger data transfer

➡ Smaller number of messages

➡ Better if relations are small

• Fetch as needed

➡ Number of messages = O(cardinality of external relation)

➡ Data transfer per message is minimal

➡ Better if relations are large and the selectivity is good

Static Approach – Vertical Partitioning & Joins

1. Move outer relation tuples to the site of the inner relation

(a) Retrieve outer tuples

(b) Send them to the inner relation site

(c) Join them as they arrive

Total cost = cost(retrieving qualified outer tuples)

+ no. of outer tuples fetched × cost(retrieving qualified inner tuples)

+ msg. cost × (no. of outer tuples fetched × avg. outer tuple size) / msg. size

Static Approach – Vertical Partitioning & Joins

2. Move inner relation to the site of outer relation

Cannot join as they arrive; they need to be stored

Total cost = cost(retrieving qualified outer tuples)

+ no. of outer tuples fetched × cost(retrieving matching inner tuples from temporary storage)

+ cost(retrieving qualified inner tuples)

+ cost(storing all qualified inner tuples in temporary storage)

+ msg. cost × (no. of inner tuples fetched × avg. inner tuple size) / msg. size

Static Approach – Vertical Partitioning & Joins

3. Move both inner and outer relations to another site

Total cost = cost(retrieving qualified outer tuples)

+ cost(retrieving qualified inner tuples)

+ cost(storing inner tuples in storage)

+ msg. cost × (no. of outer tuples fetched × avg. outer tuple size) / msg. size

+ msg. cost × (no. of inner tuples fetched × avg. inner tuple size) / msg. size

+ no. of outer tuples fetched × cost(retrieving inner tuples from temporary storage)

Static Approach – Vertical Partitioning & Joins

4. Fetch inner tuples as needed

(a) Retrieve qualified tuples at outer relation site

(b) Send request containing join column value(s) for outer tuples to inner relation site

(c) Retrieve matching inner tuples at inner relation site

(d) Send the matching inner tuples to outer relation site

(e) Join as they arrive

Total cost = cost(retrieving qualified outer tuples)

+ msg. cost × (no. of outer tuples fetched)

+ no. of outer tuples fetched × (no. of inner tuples fetched × avg. inner tuple size × msg. cost / msg. size)

+ no. of outer tuples fetched × cost(retrieving matching inner tuples for one outer value)
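The four cost formulas can be compared side by side with a Python sketch. The parameter container `JoinCosts`, its field names and the function names are assumptions introduced for illustration. In particular, the per-outer-tuple retrieval cost is modeled as a single field shared by strategies 1 and 4, and "no. of inner tuples fetched" in strategy 4 is read as the average number of matching inner tuples per outer tuple.

```python
from dataclasses import dataclass


@dataclass
class JoinCosts:
    n_outer: int               # no. of outer tuples fetched
    n_inner: int               # no. of inner tuples fetched when shipped whole
    n_match: float             # assumed avg. matching inner tuples per outer tuple
    outer_size: int            # avg. outer tuple size
    inner_size: int            # avg. inner tuple size
    msg_cost: float            # cost per message
    msg_size: int              # message size
    c_outer: float             # cost(retrieving qualified outer tuples)
    c_inner: float             # cost(retrieving qualified inner tuples), one pass
    c_inner_per_outer: float   # cost of retrieving inner tuples for one outer tuple
    c_store: float             # cost(storing inner tuples in temporary storage)
    c_temp_per_outer: float    # cost of probing temporary storage for one outer tuple


def ship_outer(p):
    """Strategy 1: move outer tuples to the inner relation site."""
    return (p.c_outer + p.n_outer * p.c_inner_per_outer
            + p.msg_cost * (p.n_outer * p.outer_size) / p.msg_size)


def ship_inner(p):
    """Strategy 2: move the inner relation to the outer relation site."""
    return (p.c_outer + p.n_outer * p.c_temp_per_outer + p.c_inner + p.c_store
            + p.msg_cost * (p.n_inner * p.inner_size) / p.msg_size)


def ship_both(p):
    """Strategy 3: move both relations to a third site."""
    return (p.c_outer + p.c_inner + p.c_store
            + p.msg_cost * (p.n_outer * p.outer_size) / p.msg_size
            + p.msg_cost * (p.n_inner * p.inner_size) / p.msg_size
            + p.n_outer * p.c_temp_per_outer)


def fetch_as_needed(p):
    """Strategy 4: request matching inner tuples for each outer tuple."""
    return (p.c_outer + p.msg_cost * p.n_outer
            + p.n_outer * (p.n_match * p.inner_size * p.msg_cost / p.msg_size)
            + p.n_outer * p.c_inner_per_outer)
```

An optimizer following this approach would evaluate all four functions for a given join and keep the cheapest strategy.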


Dynamic vs. Static vs Semijoin

• Semijoin

➡ SDD-1 selects only locally optimal schedules

• Dynamic and static approaches have the same advantages and drawbacks as in the centralized case

➡ But the problems of accurate cost estimation at compile-time are more severe

✦ More variations at runtime

✦ Relations may be replicated, making site and copy selection important

• Hybrid optimization

➡ Choose-plan approach can be used

➡ 2-step approach simpler

2-Step Optimization

1. At compile time, generate a static plan with operation ordering and access methods only

2. At startup time, carry out site and copy selection and allocate operations to sites

2-Step – Problem Definition

• Given

➡ A set of sites S = {s1, s2, …, sn} with the load of each site

➡ A query Q = {q1, q2, q3, q4} such that each subquery qi is the maximum processing unit that accesses one relation and communicates with its neighboring queries

➡ For each qi in Q, a feasible allocation set of sites Sq = {s1, s2, …, sk} where each site stores a copy of the relation in qi

• The objective is to find an optimal allocation of Q to S such that

➡ the load unbalance of S is minimized

➡ the total communication cost is minimized

2-Step Algorithm

• For each q in Q compute load(Sq)

• While Q not empty do

1. Select subquery a with least allocation flexibility

2. Select best site b for a (with least load and best benefit)

3. Remove a from Q and recompute loads if needed
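The loop above can be sketched in Python as a greedy allocation. Several simplifications are assumed here and are not part of the original algorithm statement: flexibility is measured as the number of feasible sites, the benefit term is ignored so the best site is simply the least loaded one, ties are broken by name, and each allocated subquery adds one unit of load. The function name and the sample data are hypothetical.

```python
def two_step_allocate(feasible_sites, load):
    """Greedy site selection for the subqueries of a static plan.

    feasible_sites: dict mapping subquery -> list of sites holding a copy
        of its relation.
    load: dict mapping site -> current load.
    Returns (allocation, updated load).
    """
    load = dict(load)                 # work on a copy of the site loads
    remaining = dict(feasible_sites)
    allocation = {}
    while remaining:
        # 1. Subquery with the least allocation flexibility (fewest sites)
        q = min(remaining, key=lambda name: (len(remaining[name]), name))
        # 2. Least loaded feasible site (benefit is ignored in this sketch)
        site = min(remaining[q], key=lambda s: (load[s], s))
        allocation[q] = site
        # 3. Remove the subquery and update the load of the chosen site
        load[site] += 1
        del remaining[q]
    return allocation, load


# Hypothetical placement of relation copies and initial loads
sites_for = {
    "q1": ["s1", "s3", "s4"],
    "q2": ["s2", "s4"],
    "q3": ["s1", "s3"],
    "q4": ["s1"],
}
initial_load = {"s1": 1, "s2": 2, "s3": 2, "s4": 2}
allocation, final_load = two_step_allocate(sites_for, initial_load)
```

Because each choice is made greedily with local information, an early tie-break can rule out a globally better allocation, which is the limitation the approach accepts in exchange for a cheap startup-time decision.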


2-Step Algorithm Example

• Let Q = {q1, q2, q3, q4} where q1 is associated with R1, q2 is associated with R2 joined with the result of q1, etc.

• Iteration 1: select q4, allocate to s1, set load(s1)=2

• Iteration 2: select q2, allocate to s2, set load(s2)=3

• Iteration 3: select q3, allocate to s1, set load(s1)=3

• Iteration 4: select q1, allocate to s3 or s4

Note: if, in iteration 2, q2 were allocated to s4, this would have produced a better plan. So hybrid optimization can still miss optimal plans.