
CS244

Lecture 8: Sound Strategies for Internet Measurement

Rob Sherwood

Background

Who am I?

• Stanford 2008-2011: Visiting Researcher/PostDoc

• Currently CTO of Big Switch Networks

Research Background

• Internet Security

• Peer-to-Peer

• Internet Measurement

• Software Defined Networking


Sound Strategies

Big Money Questions:

• Why this paper?

– Hint: not because it’s short

• Who is Vern Paxson?


Why Measure The Internet?

• Isn’t it man-made? Why not just model it?

Partial Answers:

• Statistical models of packet arrival and traffic matrices inaccurate

• The actual topology is unknown

• Many parts are intentionally obscured for commercial gain

• Apply natural science principles


You Said

• Patrick Harvey, "Many of the potential pitfalls in data gathering and analysis that the paper notes--while relevant to non-Internet data--seem to be somewhat exacerbated by the heavily-layered Internet architecture.

This may be especially true of ‘misconception’, the potential for which means that sound data analysis likely must not treat Internet abstractions as opaque as is typical in the development of many actual systems, but instead take into account many layers and modules in addition to those of most immediate proximity to the measured data."


Accuracy versus Precision?

• Hard/formal definition?

• Why is this so important for measurement?
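
One common working definition, offered here as an assumption since the slide leaves the question open: accuracy is how close measurements are to the true value (low bias), and precision is how tightly repeated measurements agree with each other (low spread). A minimal sketch with made-up RTT samples; the function names and all numbers are hypothetical:

```python
import statistics

def accuracy_error(samples, true_value):
    """Absolute bias: distance of the sample mean from the true value."""
    return abs(statistics.mean(samples) - true_value)

def precision_spread(samples):
    """Sample standard deviation: how tightly repeated measurements agree."""
    return statistics.stdev(samples)

TRUE_RTT_MS = 100.0  # hypothetical ground truth, rarely known in practice

# Precise but inaccurate: tightly clustered around the wrong value.
precise_not_accurate = [110.1, 110.2, 109.9, 110.0]
# Accurate but imprecise: centred on the truth, widely scattered.
accurate_not_precise = [90.0, 110.0, 95.0, 105.0]

for name, samples in [("precise, not accurate", precise_not_accurate),
                      ("accurate, not precise", accurate_not_precise)]:
    print(name, accuracy_error(samples, TRUE_RTT_MS), precision_spread(samples))
```

The first data set looks trustworthy because its values agree with each other, which is why a precise but miscalibrated measurement tool can be more dangerous than a noisy one.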


SigFigs?

• “Real” science depends heavily on significant figures

– E.g., c = 2.99792458 × 10^8 meters/second

• How do we apply SigFigs with computer systems?

• gettimeofday() == 1130322148.939977000
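
The timestamp above is printed with nine decimal places, but gettimeofday() fills a struct timeval (seconds plus microseconds), so at most six of them can carry information, and the clock's true accuracy may be coarser still. A minimal sketch of trimming a printed value to the resolution the clock can represent; `quantize_to_resolution` is a hypothetical helper, not part of any measurement tool:

```python
from decimal import Decimal
import time

def quantize_to_resolution(timestamp: str, resolution: str) -> Decimal:
    """Drop digits finer than the stated clock resolution."""
    return Decimal(timestamp).quantize(Decimal(resolution))

# The slide's value, kept as a string so no digits are lost to float rounding.
printed = "1130322148.939977000"

# gettimeofday() can represent microseconds at best.
meaningful = quantize_to_resolution(printed, "0.000001")
print(meaningful)

# What the local wall clock reports about itself (platform-dependent).
info = time.get_clock_info("time")
print(info.implementation, info.resolution)
```

Reported resolution is only an upper bound on quality: a clock can tick in microseconds and still be off by milliseconds, which is the accuracy-versus-precision distinction again.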


Implicit Assumptions?

• About Time?

• About TCP?

• About Routers/Switches?


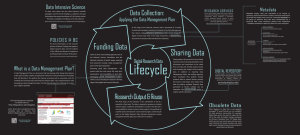

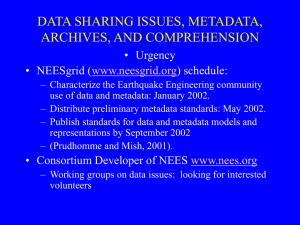

Metadata

• What is this? Why is it important?

Critical tip:

• Save exact cut-and-paste command for every graph

• People will ask you to reproduce
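
One way to follow this tip is to write a small sidecar file next to every graph. The sketch below is an assumption about how that could look, not something from the paper or the lecture; `write_provenance`, the `.meta.json` suffix, and the recorded fields are all hypothetical choices:

```python
import json
import platform
import sys
import time
from pathlib import Path

def write_provenance(figure_path, argv=None, extra=None):
    """Write <figure>.meta.json recording how the figure was produced."""
    record = {
        "figure": str(figure_path),
        # Exact command line, so the graph can be regenerated later.
        "command": list(sys.argv if argv is None else argv),
        "created_utc": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "python": platform.python_version(),
        "host": platform.node(),
    }
    # Caller-supplied details, e.g. input file names or filter settings.
    record.update(extra or {})
    meta_path = Path(str(figure_path) + ".meta.json")
    meta_path.write_text(json.dumps(record, indent=2))
    return meta_path

# Typical use, at the end of a plotting script:
#   write_provenance("rtt_cdf.png", extra={"dataset": "trace.pcap"})
```

Keeping the record in a separate file rather than in a notebook or shell history means it travels with the graph when the graph is copied into a paper or slide deck directory.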

War Stories:

• DNS data

• OptAck – Nick’s class last year


You Said

• Anonymous 1, "I feel like the first half of this paper could have been titled ‘Reasons to Never Ever Use tcpdump’."

• Anonymous 2, "The author says this advice is drawn from his experiences, so now I am a bit skeptical of every chart I see."


Misconceptions vs. Calibration?

• Obviously misconceptions are bad

– What can we do about them?

– Is “learn lots of domain knowledge” enough?

• What does Calibration mean in practice?

• Answer: if you want to do it right:

– “Measure twice or more, publish once” (a play on “measure twice, cut once”)

– Can be painstaking, but better than retraction

– Great Firewall of China visualization


Are Large Datasets Still Hard?

• Paper was published in 2004

– Most of the lessons were learned before then

• 10+ years later, we have Hadoop, AWS, etc.

– Is big data management still an issue?

• My dissertation gathered 4+ TB (!!!)

– Needed tuned RAID, MySQL, Condor, and 300+ machines to process


Why Is Reproduction Hard?

• Or important?

• Truth time:

– who has already had this problem?


Why is Publishing Data Hard?

Practical answer:

• Privacy is important

• Very hard to get consent

– Map IPs to people?

– People don’t understand the cost or benefit

• Most academic institutions have a “fail fast” approach to legal threats

Anonymizing and De-anonymizing data

• Huge research topic; very interesting


You Said

• Anonymous, "I am a bit worried about the conflict that might appear between the two ideals of collecting an abundance of metadata and making datasets publicly available. If a lot of metadata is collected in order to allow for the reuse of the data in many settings, this only increases the complexity of dealing with privacy issues in regards to the use of the data."


Ethical Internet Measurement

• Follow Up Papers/IMC Guidelines

• “BotNet Labs”/Password distribution

• Open Question:

– Should Internet Measurement go through IRB approval?

• War story:

– Multiple accidental DoS experiments

– Very unhappy people == unhappy advisor


You Said

• Anonymous, "I feel the authors should have discussed the issue of intrusiveness of a measurement technique in the accuracy/misconception section. The authors rightly describe the importance of collecting metadata, especially for publicly made data. But how much metadata should one collect, and what if this metadata collection actually causes an overhead and skews the results?"


Conclusion

• Very few of these concepts apply to just the Internet

• Rob’s claim:

– This paper made me a better scientist

– Which then made me a better system designer

• Additional Questions/Comments?
