From Memory to Problem Solving: Mechanism Reuse in a Graphical Cognitive Architecture

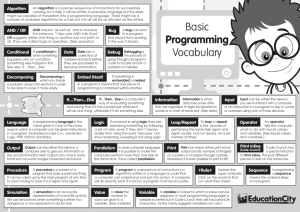


Paul S. Rosenbloom
8/5/2011

The projects or efforts depicted were or are sponsored by the U.S. Army Research, Development, and Engineering Command (RDECOM) Simulation Training and Technology Center (STTC) and the Air Force Office of Scientific Research, Asian Office of Aerospace Research and Development (AFOSR/AOARD). The content or information presented does not necessarily reflect the position or the policy of the Government, and no official endorsement should be inferred.

Cognitive Architecture

Cognitive architecture: a hypothesis about the fixed structure underlying intelligent behavior
  – Defines core memories, reasoning processes, learning mechanisms, external interfaces, etc.
  – Yields intelligent behavior when knowledge and skills are added
  – May serve as a Unified Theory of Cognition, as the core of virtual humans and intelligent agents or robots, or as the basis for artificial general intelligence

[Figure: Soar 3-8 (ICT 2010): symbolic working memory; long-term memory of rules; decide what to do next based on preferences generated by rules; reflect when it can't decide; learn the results of reflection; interact with the world]

Diversity Dilemma

How to build architectures that combine:
  – Theoretical elegance, simplicity, maintainability, extendibility
  – Broad scope of capability and applicability
Embodying a superset of existing architectural capabilities
  – Cognitive, perceptuomotor, emotive, social, adaptive, …

[Figure: Soar 3-8, Soar 9 and the Graphical Architecture; labels include Hybrid Mixed Long-Term Memory, Prediction-Based Learning, Hybrid Short-Term Memory and Decision]

Goals of This Work

Extend the graphical memory architecture to (Soar-like) problem solving
  – Operator generation, evaluation, selection and application
  – Reuse existing memory mechanisms, based on graphical models, as much as possible
Evaluate the ability to extend architectural functionality while retaining simplicity and elegance
  – Evidence for the ability of the approach to resolve the diversity dilemma

Problem Solving in Soar

Base level
  – Generate, evaluate, select and apply operators
  – Generation: retractable rule firing; LTM(WM) → WM
  – Evaluation: retractable rule firing; LTM(WM) → PM (preferences)
  – Selection: decision procedure; PM(WM) → WM
  – Application: latched rule firing; LTM(WM) → WM
Meta level (not the focus here)

[Figure: LTM, PM (preference memory) and WM connected through Selection]

Decision cycle: elaboration cycles + decision
Elaboration cycle: parallel rule match + firing
Match cycle: pass tokens within the Rete rule-match network

Graphical Models

Enable efficient computation over multivariate functions by decomposing them into products of subfunctions
  – Bayesian/Markov networks, Markov/conditional random fields, factor graphs

$p(u,w,x,y,z) = p(u)\,p(w)\,p(x \mid u,w)\,p(y \mid x)\,p(z \mid x)$

$f(u,w,x,y,z) = f_1(u,w,x)\,f_2(x,y,z)\,f_3(z)$

[Figure: a Bayesian network and a factor graph (factors f1, f2, f3) over u, w, x, y, z]

Yield broad capability from a uniform base
  – State-of-the-art performance across symbols, probabilities and signals via a uniform representation and reasoning algorithm: (loopy) belief propagation, the forward-backward algorithm, Kalman filters, the Viterbi algorithm, FFT, turbo decoding, arc-consistency and production match, …
Support mixed and hybrid processing
Several neural network models map onto them

The Graphical Architecture: Factor Graphs and the Summary Product Algorithm

Summary product processes messages on links
  – Messages are distributions over the domains of the variables on a link
  – At variable nodes, messages are combined via pointwise product
  – At factor nodes, the product of the input messages is multiplied with the factor function, and then all variables not in the output are summarized out

$m(u) = \sum_{x,w} m(x) \times m(w) \times f_1(u,w,x)$

[Figure: the factor graph for $f(u,w,x,y,z) = f_1(u,w,x)\,f_2(x,y,z)\,f_3(z)$, with example messages (.2, .4, .1) and (.3, .2, .1) and their pointwise product (.06, .08, .01)]

A single settling of the graph can efficiently compute:
  – Variable marginals
  – Maximum a posteriori (MAP) probabilities
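To make the message computation concrete, here is a minimal Python sketch of summary product on the slide's tree-shaped factor graph $f = f_1 f_2 f_3$. The three-valued domains, the factor values, and the helper names (`f1`, `f2`, `f3`, `pointwise`) are illustrative assumptions, not the architecture's implementation. It summarizes variables out by summing, which yields unnormalized marginals; summarizing by maximization instead would give the MAP variant. Because the graph is a tree, the message arriving at u matches brute-force marginalization, and the last line reproduces the pointwise-product example from the slide.

```python
from itertools import product
from math import isclose

# Assumption for illustration: every variable ranges over {0, 1, 2}.
D = range(3)


# Hypothetical factor functions for f(u,w,x,y,z) = f1(u,w,x) f2(x,y,z) f3(z);
# the values are arbitrary nonnegative numbers.
def f1(u, w, x):
    return 1.0 + u * w + (1.0 if x == u else 0.0)


def f2(x, y, z):
    return 0.5 + x * z + 0.1 * y


def f3(z):
    return [0.2, 0.5, 0.3][z]


def pointwise(*messages):
    """Variable node: combine incoming messages by pointwise product."""
    out = [1.0] * len(D)
    for m in messages:
        out = [a * b for a, b in zip(out, m)]
    return out


ones = [1.0] * len(D)  # leaf variables (w, y) send uniform messages

# f3 -> z: a factor with no other inputs just sends its own function.
m_f3_z = [f3(z) for z in D]
# z -> f2: product of z's other incoming messages (here only f3's).
m_z_f2 = pointwise(m_f3_z)
# f2 -> x: multiply the inputs by f2, then summarize out y and z by summing.
m_f2_x = [sum(f2(x, y, z) * ones[y] * m_z_f2[z] for y, z in product(D, D)) for x in D]
m_x_f1 = pointwise(m_f2_x)
# f1 -> u: m(u) = sum over x, w of m(x) * m(w) * f1(u, w, x).
m_u = [sum(m_x_f1[x] * ones[w] * f1(u, w, x) for x, w in product(D, D)) for u in D]

# Brute-force (unnormalized) marginal of u, for comparison.
brute = [sum(f1(u, w, x) * f2(x, y, z) * f3(z) for w, x, y, z in product(D, repeat=4))
         for u in D]

if __name__ == "__main__":
    print(all(isclose(a, b) for a, b in zip(m_u, brute)))  # True
    print([round(v, 2) for v in pointwise([.2, .4, .1], [.3, .2, .1])])  # [0.06, 0.08, 0.01]
```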
A Hybrid Mixed Function/Message Representation

Represent both messages and factor functions as multidimensional continuous functions
  – Approximated as piecewise linear over rectilinear regions

| y\x | [0,10> | [10,25> | [25,50> |
|---|---|---|---|
| [0,5> | 0 | .2y | 0 |
| [5,15> | .5x | 1 | .1+.2x+.4y |

Discretize the domain for discrete distributions and symbols
  – e.g., [1,2>=.2, [2,3>=.5, [3,4>=.3, …
Booleanize the range (and add a symbol table) for symbols
  – e.g., [0,1>=1 for Color(x, Red)=True and [1,2>=0 for Color(x, Green)=False

Graphical Memory Architecture

Developed a general knowledge representation layer on top of factor graphs and summary product
Differentiates long-term and working memories
  – Long-term memory defines a graph
  – Working memory specifies peripheral factor nodes
Working memory consists of instances of predicates
  – (Next ob1:O1 ob2:O2), (weight object:O1 value:10)
  – Provides fixed evidence for a single settling of the graph
Long-term memory consists of conditionals
  – Generalized rules defined via predicate patterns and functions
  – Patterns define conditions, actions and condacts (a neologism)
  – Functions are mixed hybrid over pattern variables in conditionals

Conditionals

Conditions test WM
Actions propose changes to WM
Condacts test and change WM
Functions modulate variables
All four can be freely mixed
Each predicate induces its own working memory node

```
CONDITIONAL Transitive
  conditions: (Next ob1:a ob2:b) (Next ob1:b ob2:c)
  actions: (Next ob1:a ob2:c)

CONDITIONAL Concept-Weight
  condacts: (concept object:O1 class:c) (weight object:O1 value:w)
  function: piecewise linear over w\c, tabulated below
```

| w\c | Walker | Table | … |
|---|---|---|---|
| [1,10> | .01w | .001w | … |
| [10,20> | .2-.01w | .001w | … |
| [20,50> | 0 | .025-.00025w | … |
| [50,100> | 0 | .025-.00025w | … |

[Figure: a conditional's subgraph, with WM, pattern, join and function-join nodes]

Memory Capabilities Implemented

A rule-based procedural memory (e.g., CONDITIONAL Transitive)
Semantic and episodic declarative memories
  – Semantic: based on cued object features, statistically predict the object's concept plus all uncued features
A constraint memory
Beginnings of an imagery memory

[Figure: semantic-memory graph over Concept (S), Weight (C), Mobile (B), Color (S), Legs (D) and Alive (B), including CONDITIONAL ConceptWeight with condacts Concept(O1,c) and Weight(O1,w) and its Walker/Table weight function]

Additional Aspects Relevant to Problem Solving: Open World versus Closed World Predicates

Predicates may be open world or closed world
  – Do unspecified WM regions default to false (0) or unknown (1)?
  – A key distinction between declarative and procedural memory
Open world allows changes within a graph cycle
  – Predicts unknown values within a graph cycle
  – Chains within a graph cycle
  – Retracts when its WM basis changes
Closed world changes only across cycles
  – Chains only across graph cycles
  – Latches results in WM

Additional Aspects Relevant to Problem Solving: Universal versus Unique Variables

Predicate variables may be universal or unique
Universal variables act like rule variables
  – Determine all matching values
  – Actions insert all (non-negated) results into WM and delete all negated results from WM
Unique variables act like random variables
  – Determine a distribution over the best value
  – Actions insert only a single best value into WM
Negations clamp values to 0

[Figure: action combination subgraph with Join, Negate and + nodes producing the changes to WM]

Additional Aspects Relevant to Problem Solving: Link Memory

The last message sent along each link in the graph is cached on the link
  – Forms a set of link memories that last until messages change
  – Subsume the alpha and beta memories of a Rete-like rule-match cycle

Problem Solving in the Graphical Architecture

Base level
  – Generate, evaluate, select and apply operators
  – Generation: (retractable) open world actions; LTM(WM) → WM
  – Evaluation: (retractable) actions + functions; LTM(WM) → LM
  – Selection: unique variables; LM(WM) → WM
  – Application: (latched) closed world actions; LTM(WM) → WM
Meta level (not the focus here)

[Figure: LTM, LM (link memory) and WM connected through Selection]

Graph cycle: message cycles + WM change
Message cycle: process messages within the factor graph

Eight Puzzle Results

Preferences encoded via functions and negations

```
CONDITIONAL goal-best ; Prefer operator that moves a tile into its desired location
  :conditions (blank state:s cell:cb)
              (acceptable state:s operator:ct)
              (location cell:ct tile:t)
              (goal cell:cb tile:t)
  :actions (selected state:s operator:ct)
  :function 10

CONDITIONAL previous-reject ; Reject previously moved operator
  :conditions (acceptable state:s operator:ct)
              (previous state:s operator:ct)
  :actions (selected - state:s operator:ct)
```

Total of 19 conditionals* to solve simple problems in a Soar-like fashion (without reflection)
  – 747 nodes (404 variable, 343 factor) and 829 links
  – Sample problem takes 6220 messages over 9 decisions (13 sec)

Conclusion

Soar-like base-level problem solving grounds directly in mechanisms of the graphical memory architecture
  – Factor graphs and conditionals → knowledge in problem solving
  – Summary product algorithm → processing
  – Mixed functions → symbolic and numeric preferences
  – Link memories → preference memory
  – Open world vs. closed world → generation vs. application
  – Universal vs. unique → generation vs. selection
Almost total reuse augurs well for the diversity dilemma
  – Only added an architectural selected predicate for operators
Also progressing on other forms of problem solving
  – Soar-like reflective processing (e.g., search in problem spaces)
  – POMDP-based operator evaluation (decision-theoretic lookahead)
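The piecewise-linear table on the A Hybrid Mixed Function/Message Representation slide can be read as a lookup over rectilinear regions, each holding a linear function of x and y. The Python sketch below assumes a simple list-of-regions encoding (`REGIONS_2D`, `evaluate_2d`, `evaluate_1d`, `color_of_x_is` and the other names are hypothetical), treats points outside every region as 0, and shows how discrete distributions and Booleanized symbols fit the same scheme as piecewise-constant pieces. It only evaluates functions; products and summarization over such regions are not shown.

```python
# Hypothetical encoding of the slide's table: each region is
# (x_lo, x_hi, y_lo, y_hi, (c, a, b)), meaning value = c + a*x + b*y
# for x in [x_lo, x_hi> and y in [y_lo, y_hi> (half-open intervals).
REGIONS_2D = [
    (0, 10, 0, 5, (0.0, 0.0, 0.0)),    # 0
    (10, 25, 0, 5, (0.0, 0.0, 0.2)),   # .2y
    (25, 50, 0, 5, (0.0, 0.0, 0.0)),   # 0
    (0, 10, 5, 15, (0.0, 0.5, 0.0)),   # .5x
    (10, 25, 5, 15, (1.0, 0.0, 0.0)),  # 1
    (25, 50, 5, 15, (0.1, 0.2, 0.4)),  # .1+.2x+.4y
]


def evaluate_2d(x, y, regions=REGIONS_2D):
    """Return the value of the linear function in the region containing (x, y)."""
    for x_lo, x_hi, y_lo, y_hi, (c, a, b) in regions:
        if x_lo <= x < x_hi and y_lo <= y < y_hi:
            return c + a * x + b * y
    return 0.0  # assumption: points outside every region evaluate to 0


def evaluate_1d(pieces, v):
    """Piecewise-constant lookup over half-open intervals [lo, hi>."""
    for lo, hi, value in pieces:
        if lo <= v < hi:
            return value
    return 0.0


# Discretized domain: a discrete distribution is constant over unit-width pieces.
DISTRIBUTION = [(1, 2, 0.2), (2, 3, 0.5), (3, 4, 0.3)]

# Booleanized range plus a symbol table: each symbol owns one unit interval.
SYMBOLS = ["Red", "Green"]               # Red -> [0,1>, Green -> [1,2>
COLOR_OF_X = [(0, 1, 1.0), (1, 2, 0.0)]  # Color(x, Red)=True, Color(x, Green)=False


def color_of_x_is(symbol):
    """Map the symbol to its interval via the symbol table, then test for 1."""
    return evaluate_1d(COLOR_OF_X, SYMBOLS.index(symbol)) == 1.0
```

For example, `evaluate_2d(20, 10)` falls in the region $x \in [10,25>, y \in [5,15>$ and returns 1.0, while `color_of_x_is("Red")` is true and `color_of_x_is("Green")` is false.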
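The default-value question on the Open World versus Closed World Predicates slide can be illustrated with a small working-memory lookup. This is a hypothetical Python sketch: the `Predicate` class, its `value` method, and the choice of which example predicate is open or closed world are assumptions, and it covers only the defaults for unspecified WM regions, not within-cycle chaining, retraction or latching.

```python
from dataclasses import dataclass, field


@dataclass
class Predicate:
    """Hypothetical working-memory store for one predicate.

    Only explicitly specified argument tuples are stored; anything else is an
    unspecified WM region whose default depends on the predicate's world type.
    """
    name: str
    open_world: bool
    wm: dict = field(default_factory=dict)

    def value(self, args):
        # Open world: unspecified regions are unknown (1).
        # Closed world: unspecified regions are false (0).
        return self.wm.get(args, 1.0 if self.open_world else 0.0)


# Assumed for illustration: a rule-style (procedural) predicate is closed world,
# while a declarative concept predicate is open world.
next_pred = Predicate("Next", open_world=False, wm={("O1", "O2"): 1.0})
concept = Predicate("concept", open_world=True, wm={("O1", "Table"): 0.0})
```

Here `next_pred.value(("O2", "O3"))` is 0.0 because nothing says the pair is related, whereas `concept.value(("O1", "Walker"))` is 1.0: the open-world predicate leaves that class possible until evidence rules it out.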
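The Eight Puzzle Results slide encodes preferences through a function (`goal-best`, value 10) and a negation (`previous-reject`), and selection relies on a unique variable. The Python sketch below imitates that flow under loudly simplified assumptions: acceptable operators start at 1, the goal-best function multiplies by 10, the negation clamps to 0, and a unique variable normalizes and keeps the single best operator while a universal variable keeps every nonzero one. The board encoding and all function names are hypothetical, and the multiplicative combination is an assumption rather than the architecture's action-combination subgraph.

```python
def adjacent(cell):
    """Cells orthogonally adjacent to `cell` on a 3x3 board numbered 0..8."""
    r, c = divmod(cell, 3)
    steps = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
    return [nr * 3 + nc for nr, nc in steps if 0 <= nr < 3 and 0 <= nc < 3]


def operator_values(location, goal, blank, previous):
    """Hypothetical, simplified preference combination for the eight puzzle.

    An operator is named by the cell ct whose tile would slide into the blank.
    location and goal map cells to tiles; the blank cell has no entry in location.
    """
    values = {}
    for ct in adjacent(blank):                   # acceptable operators
        v = 1.0                                  # baseline for an acceptable operator
        if goal.get(blank) == location.get(ct):
            v *= 10.0                            # goal-best: function value 10
        if ct == previous:
            v = 0.0                              # previous-reject: negation clamps to 0
        values[ct] = v
    return values


def universal(values):
    """Universal variable: keep every matching (nonzero) value."""
    return sorted(ct for ct, v in values.items() if v > 0)


def unique(values):
    """Unique variable: form a distribution and keep only the single best value."""
    total = sum(values.values())
    dist = {ct: v / total for ct, v in values.items()}
    return max(dist, key=dist.get), dist


if __name__ == "__main__":
    # Hypothetical state: blank in the center (cell 4); tile 5 belongs there and sits in cell 5.
    location = {0: 1, 1: 2, 2: 3, 3: 4, 5: 5, 6: 7, 7: 8, 8: 6}
    goal = {0: 1, 1: 2, 2: 3, 3: 4, 4: 5, 5: 6, 6: 7, 7: 8}
    values = operator_values(location, goal, blank=4, previous=1)
    print(universal(values))  # [3, 5, 7]
    print(unique(values)[0])  # 5
```

The contrast mirrors the slide's split between generation and selection: the universal reading returns every still-acceptable operator, while the unique reading commits to the one with the highest preference.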