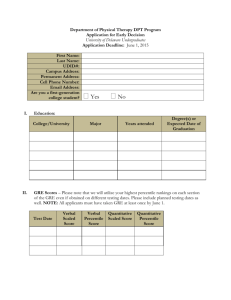

Office of Educational Assessment