Linking Data to Instruction


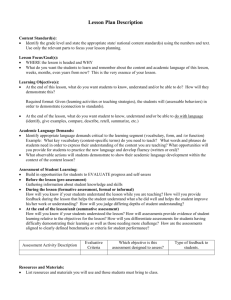

Jefferson County School District
January 19, 2010

RTI Assessment Considerations
• Measurement strategies are chosen to:
– Answer specific questions
– Make specific decisions
• Give an assessment only with a “purpose” in mind
– There is a problem if one doesn’t know why the assessment is being given.

Types of Assessments
1. Screening Assessments: Used for ALL students to identify those who may need additional support (DIBELS, CBM, Office Discipline Referrals for behavior, etc.)
2. Formative Assessment/Progress Monitoring: Frequent, ongoing assessments that show whether the instruction is effective and impacting student skill development (DIBELS, CBM, etc.)
3. Diagnostic Assessments: Pinpoint instructional needs for students identified in screenings (Quick Phonics Screener, Survey Level Assessments, Curriculum Based Evaluation Procedures, etc.)
ALL PART OF AN ASSESSMENT PROCESS WITHIN RTI!

Universal Screening Assessments
• Universal screening occurs for ALL students at least three times per year.
• Procedures identify which students are proficient (80%) and which are deficient (20%).
• Good screening measures:
– Are reliable, valid, repeatable, brief, and easy to administer
– Are not intended to measure everything about a student, but provide an efficient and unbiased way to identify students who will need additional support (Tier 2 or Tier 3)
– Help you assess the overall health of your Core program (Are 80% of your students at benchmark/proficiency?)

Why Use Fluency Measures for Screening?
• Oral reading fluency and accuracy in reading connected text is one of the best indicators of overall reading comprehension (Fuchs, Fuchs, Hosp, & Jenkins, 2001)
• We always examine fluency AND accuracy
• Without examining accuracy scores, we are missing a BIG piece of the picture
• Students MUST be accurate with any skill before they are fluent.
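The core-health question from the Universal Screening slide (are 80% of students at benchmark?) comes down to a simple proportion. The sketch below is only an illustration: the function name `core_health`, the sample scores, and the benchmark value are assumptions made for the example, not part of any screening tool.

```python
def core_health(scores, benchmark, target=0.80):
    """Return (proportion at or above benchmark, whether the target is met).

    `scores` is a list of screening scores for one grade level.
    `benchmark` and `target` are set locally; 0.80 mirrors the 80% rule of thumb.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    at_benchmark = sum(1 for s in scores if s >= benchmark)
    proportion = at_benchmark / len(scores)
    return proportion, proportion >= target


# Hypothetical class of five students with an assumed benchmark of 77.
proportion, healthy = core_health([58, 59, 90, 85, 90], benchmark=77)
print(f"{proportion:.0%} at benchmark; core healthy: {healthy}")
```

With these made-up scores, three of five students reach the benchmark, so the check falls short of the 80% target and the team would look for common instructional needs.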
Oral reading fluency (ORF) does not tell you everything about a student’s reading skill, but a child who cannot read fluently cannot fully comprehend written text and will need additional support.

Linking Screening Data to Instruction
• Questions to consider:
– Are 80% of your students proficient based on set criteria (benchmarks, percentiles, standards, etc.)?
• If not, what are the common instructional needs?
– e.g., fluency, decoding, comprehension, multiplication, fractions, spelling, capitalization, punctuation, etc.
• What is your plan to meet these common instructional needs schoolwide/grade-wide?
– Improved fidelity to core?
– More guided practice?
– More explicit instruction?
– Improved student engagement?
– More professional development for staff?

Progress Monitoring Assessments
• Help us answer the question: Is what we’re doing working?
• Robust indicator of academic health
• Brief and easy to administer
• Can be administered frequently
• Must have multiple, equivalent forms
– (If the metric isn’t the same, the data are meaningless)
• Must be sensitive to growth

Screening/Progress Monitoring Tools: Reading
• DIBELS PSF, NWF
– Pros: Free, quick and easy, good research base, benchmarks, linked to instruction
– Cons: Only useful in Grades K-2
• ORF (DIBELS, AIMSWEB, etc.)
– Pros: Free, good reliability and validity, easy to administer and score
– Cons: May not fully account for comprehension in a few students
• MAZE
– Pros: Quick to administer, may address comprehension more than ORF, can administer to large groups simultaneously, useful in secondary
– Cons: Time consuming to score, not as sensitive to growth as ORF
• OAKS
– Pros: Already available, compares to state standards
– Cons: Just passing isn’t good enough, not linked directly to instruction, needs to be used in conjunction with other measures

Screening/Progress Monitoring Tools: Math
• CBM Early Numeracy Measures
– Pros: Good reliability and validity, brief and easy to administer
– Cons: Sensitivity to
growth, only useful in K-2
• Math Fact Fluency
– Pros: Highly predictive of struggling students
– Cons: No benchmarks, only a small piece of math screening
• CBM Computation
– Pros: Quick and easy to administer, sensitive to growth, surface validity
– Cons: Predictive validity questionable, not linked to current standards
• CBM Concepts and Applications
– Pros: Quick and easy to administer, good predictive validity, linked to NCTM Focal Points (AIMSWEB)
– Cons: Not highly sensitive to growth, newer measures
• easyCBM
– Pros: Based on NCTM Focal Points, computer-based administration and scoring
– Cons: Untimed (does not account for fluency), lengthy (administer no more than once every 3 weeks), predictive validity uncertain

Screening/Progress Monitoring Tools: Writing
• CBM Writing
– Pros: Easy to administer to large groups, can obtain multiple scores from a single probe
– Cons: Time consuming to score, does not directly measure content of writing
– Correct Writing Sequences (CWS, %CWS)
  • Pros: Good reliability and validity, sensitive to growth at some grade levels
  • Cons: Time consuming to score, not as sensitive to growth in upper grades, %CWS not sensitive to growth
– Correct Minus Incorrect Writing Sequences (CIWS)
  • Pros: Good reliability and validity, sensitive to growth in upper grades
  • Cons: Time consuming to score, not sensitive to growth in lower grades

Screening & Progress Monitoring Resources
• National Center on Response to Intervention (www.rti4success.org)
• Intervention Central (www.interventioncentral.com)
• AIMSweb (www.aimsweb.com)
• DIBELS (https://dibels.uoregon.edu)
• easyCBM (www.easycbm.com)
• The ABCs of CBM (Hosp, Hosp, & Howell, 2007)

Diagnostic Assessments
• The major purpose for administering diagnostic tests is to provide information that is useful in planning more effective instruction.
• Diagnostic tests should only be given when there is a clear expectation that they will provide new information about a child’s difficulties learning to read that can be used to provide more focused or more powerful instruction.

Diagnostic Assessment Questions
• “Why is the student not performing at the expected level?”
• “What is the student’s instructional need?”
Start by reviewing existing data

Diagnostic Assessments
• Quick Phonics Screener (Hasbrouck)
• DRA
• Error Analysis
• Survey Level Assessments
• In-Program Assessments (mastery tests, checkouts, etc.)
• Curriculum-Based Evaluation Procedures
– “any set of measurement procedures that use direct observation and recording of a student’s performance in a local curriculum as a basis for gathering information to make instructional decisions” (Deno, 1987)
• Any informal or formal assessments that answer the question: Why is the student having problems?

The Problem Solving Model
1. Define the Problem:
• What is the problem and why is it happening?
2. Design Intervention:
• What are we going to do about the problem?
3. Implement and Monitor:
• Are we doing what we intended to do?
4. Evaluate Effectiveness:
• Did our plan work?

Using the data to inform interventions
• What is the student missing?
• What does your data tell you?
• Start with what you already have, and ask “Do I need more info?”
Phonemic Awareness, Phonics, Fluency & Accuracy, Vocabulary, Comprehension

Using your data to create interventions: An Example
Adapted from Organizing Fluency Screening Data: Making the Instructional Match
Group 1: Accurate and Fluent
Group 2: Accurate but Slow Rate
Group 3: Inaccurate and Slow Rate
Group 4: Inaccurate but High Rate
Regardless of the skill focus, organizing student data by looking at accuracy and fluency will assist teachers in making an appropriate instructional match!

Digging Deeper with Screening Data
• Is the student accurate?
– Must define accuracy expectation
  • Consensus in reading research is 95%
• Is the student fluent?
– Must define fluency expectation
• Fluency Measuring Tools:
– Curriculum-Based Measures (CBM)
– AIMSweb (grades 1-8)
– Fuchs’ reading probes (grades 1-7)
– DIBELS (grades K-6)

Organizing Fluency Data: Making the Instructional Match
Group 1: Accurate and Fluent (Core Instruction, *Check Comp*)
Group 2: Accurate but Slow Rate (+Fluency building)
Group 3: Inaccurate and Slow Rate (+Decoding then fluency)
Group 4: Inaccurate but High Rate (Self-Monitoring)

Group 1: Dig deeper in the areas of reading comprehension, including vocabulary and specific comprehension strategies.
Group 2: Build reading fluency skills (Repeated Reading, Paired Reading, etc.). Embed comprehension checks/strategies.
Group 3: Conduct an error analysis to determine instructional need. Teach to the instructional need paired with fluency building strategies. Embed comprehension checks/strategies.
Group 4: Conduct Table-Tap Method. If the student can correct errors easily, teach the student to self-monitor reading accuracy. If the reader cannot self-correct errors, complete an error analysis to determine instructional need. Teach to the instructional need.

Data Summary
3rd Grade Class, Fall DIBELS: ORF >= 77 wcpm

| Student | Accuracy | WCPM |
|---------|----------|------|
| Jim | 97% | 58 wcpm |
| Nancy | 87% | 59 wcpm |
| Ted | 89% | 90 wcpm |
| Jerry | 98% | 85 wcpm |
| Mary | 99% | 90 wcpm |

Day 4’s Activity 5
Group 1: Accurate and Fluent
Group 2: Accurate but Slow Rate
Group 3: Inaccurate and Slow Rate
Group 4: Inaccurate but High Rate
ACTIVITY:
• Based on criteria for the grade level, place each student’s name into the appropriate box.
• Organizing data based on performance(s) assists in grouping students for instructional purposes.
• Students who do not perform well on comprehension tests have a variety of instructional needs.

Match the Student to the Appropriate Box (criteria: >95% acc. and 77 wcpm):
Group 1: Accurate and Fluent: Jerry, Mary
Group 2: Accurate but Slow Rate: Jim
Group 3: Inaccurate and Slow Rate: Nancy
Group 4: Inaccurate but High Rate: Ted

Regardless of Skill…
• Phonemic Awareness
• Letter Naming
• Letter Sounds
• Beginning Decoding Skills
• Sight Words
• Addition
• Subtraction
• Fractions

Instructional “Focus” Continuum
• Accurate at Skill: If no, teach skill. If yes, move to fluency.
• Fluent at Skill: If no, teach fluency/automaticity. If yes, move to application.
• Able to Apply Skill: If no, teach application. If yes, then move to higher level skill/concept.

Digging Deeper
• In order to be “diagnostic”:
– Teachers need to know the sequence of skill development
– Content knowledge may need further development
– How deep depends on the intensity of the problem.

OR
Phonemic Awareness Developmental Continuum (listed from Hard to Easy)
• Phoneme deletion and manipulation
• Blending and segmenting individual phonemes
• Onset-rime blending and segmentation
• Syllable segmentation and blending
• Sentence segmentation
• Rhyming
• Word comparison
THEN check here!

Screening Assessments: Not Always Enough
• Screening assessments do not always go far enough in answering the question:
– We will need to “DIG DEEPER!”
• Quick phonics screener
• Error Analysis
• Curriculum Based Evaluation

When does this happen?
• Tier 1 Meetings
– How Frequent: 2-3 times per year (after benchmarking/screening occurs)
– How Long: 1-2 hours per grade level
– Who Attends: All grade level teachers, SPED teacher, principal, Title staff, specialists, instructional coach
– What is the Focus: Talk about schoolwide data, evaluate health of core and needed adjustments for ALL students
– Data Used: Screening

When does this happen?
• Tier 2 Meetings
– How Frequent: Every 4-6 weeks (by grade level)
– How Long: 30-45 minutes
– Who Attends: All grade level teachers, SPED teacher, principal, Title teacher, specialists, instructional coach
– What is the Focus: Talk about intervention groups.
Adjust, continue, discontinue interventions based on district decision rules
– Data Used: Screening, Progress Monitoring, sometimes Diagnostic

When does this happen?
• Tier 3 (Individual Problem Solving) Meetings
– How Frequent: As needed based on individual student need and district decision rules
– How Long: 30-60 minutes
– Who Attends: Gen ed teacher, SPED teacher, principal, specialists, school psych, instructional coach, parents
– What is the Focus: Problem-solve individual student needs. Design individualized interventions using data.
– Data Used: Screening, Progress Monitoring, and Diagnostic

Useful Resources
• What Works Clearinghouse
– http://ies.ed.gov/ncee/wwc/
• Florida Center for Reading Research
– http://www.fcrr.org/
• National Center on Response to Intervention
– http://www.rti4success.org/
• Center on Instruction
– http://www.centeroninstruction.org/
• Oregon RTI Project
– http://www.oregonrti.org/
• Curriculum Based Evaluation: Teaching and Decision Making (Howell & Nolet, 2000)
• The ABCs of CBM (Hosp, Hosp, & Howell, 2007)
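Worked example: the accuracy-and-fluency grouping from the 3rd grade Data Summary activity can be expressed as a small decision rule. This is a sketch under stated assumptions, not an official scoring procedure: the function name `fluency_group` is hypothetical, accuracy is treated as "greater than 95%" and fluency as "at least 77 wcpm" based on how the criteria appear on the slides, and a district would substitute its own cutoffs.

```python
def fluency_group(accuracy_pct, wcpm, accuracy_cutoff=95, wcpm_benchmark=77):
    """Place a student in one of the four accuracy/fluency groups.

    Assumed criteria: accurate means accuracy above the cutoff (>95%),
    fluent means words correct per minute at or above the benchmark (>=77).
    """
    accurate = accuracy_pct > accuracy_cutoff
    fluent = wcpm >= wcpm_benchmark
    if accurate and fluent:
        return "Group 1: Accurate and Fluent"
    if accurate:
        return "Group 2: Accurate but Slow Rate"
    if not fluent:
        return "Group 3: Inaccurate and Slow Rate"
    return "Group 4: Inaccurate but High Rate"


# Scores from the 3rd grade fall Data Summary: (accuracy %, wcpm).
students = {
    "Jim": (97, 58),
    "Nancy": (87, 59),
    "Ted": (89, 90),
    "Jerry": (98, 85),
    "Mary": (99, 90),
}

groups = {name: fluency_group(acc, wcpm) for name, (acc, wcpm) in students.items()}
for name, group in groups.items():
    print(f"{name}: {group}")
```

Under these assumed cutoffs the placements agree with the activity's answer key: Jerry and Mary in Group 1, Jim in Group 2, Nancy in Group 3, and Ted in Group 4.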