Ghent (ParCo) Talk 8/31/2011


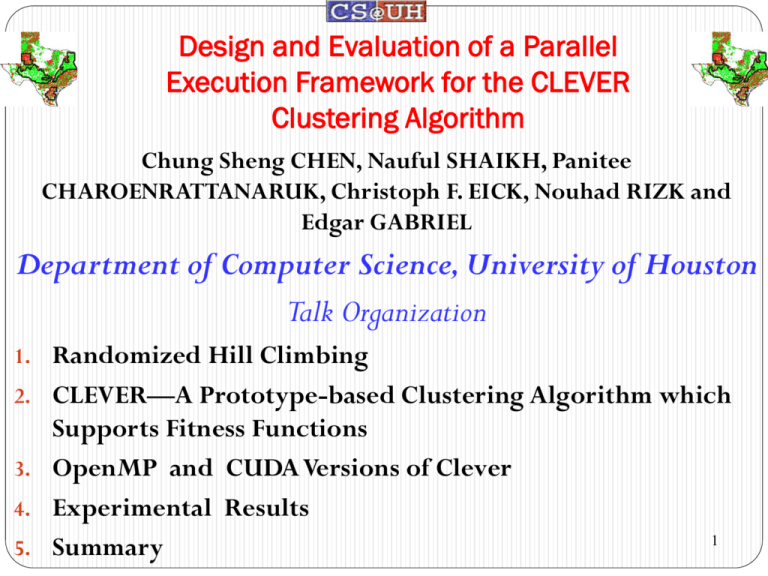

Design and Evaluation of a Parallel

Execution Framework for the CLEVER

Clustering Algorithm

Chung Sheng CHEN, Nauful SHAIKH, Panitee

CHAROENRATTANARUK, Christoph F. EICK, Nouhad RIZK and

Edgar GABRIEL

Department of Computer Science, University of Houston

Talk Organization

1. Randomized Hill Climbing

2. CLEVER—A Prototype-based Clustering Algorithm which

Supports Fitness Functions

3. OpenMP and CUDA Versions of CLEVER

4. Experimental Results

5. Summary


1. Randomized Hill Climbing

Neighborhood

Randomized Hill Climbing: Sample p points randomly in the neighborhood of the currently best solution; determine the best solution of the p sampled points. If it is better than the current solution, make it the new current solution and continue the search; otherwise, terminate returning the current solution.

Advantages: easy to apply, does not need many resources, usually fast.

Problems: How do I define my neighborhood; what parameter p should I choose?

Eick et al., ParCo11, Ghent

Example Randomized Hill Climbing

Maximize f(x,y,z) = |x-y-0.2| * |x*z-0.8| * |0.3-z*z*y| with x, y, z in [0,1]

Neighborhood Design: Create 50 solutions s such that:

s = (min(1, max(0, x+r1)), min(1, max(0, y+r2)), min(1, max(0, z+r3)))

with r1, r2, r3 being random numbers in [-0.05, +0.05].
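The example can be turned into a short runnable sketch. The function names, the starting point (0.5, 0.5, 0.5), and the fixed random seed are assumptions made for illustration; the objective, the sample count of 50, and the perturbation range come from the slide.

```python
import random

def f(x, y, z):
    """Objective from the example; to be maximized for x, y, z in [0, 1]."""
    return abs(x - y - 0.2) * abs(x * z - 0.8) * abs(0.3 - z * z * y)

def clamp(v):
    """Keep a coordinate inside [0, 1], as min(1, max(0, v)) does on the slide."""
    return min(1.0, max(0.0, v))

def sample_neighbors(s, p, radius, rng):
    """Create p neighbors of s by adding a random offset in [-radius, +radius] to each coordinate."""
    return [tuple(clamp(c + rng.uniform(-radius, radius)) for c in s) for _ in range(p)]

def randomized_hill_climbing(start, p=50, radius=0.05, seed=0):
    rng = random.Random(seed)  # fixed seed (assumption) so that runs are repeatable
    current = start
    while True:
        best = max(sample_neighbors(current, p, radius, rng), key=lambda s: f(*s))
        if f(*best) <= f(*current):
            return current  # no sampled neighbor is better: terminate
        current = best      # otherwise continue the search from the better solution

solution = randomized_hill_climbing((0.5, 0.5, 0.5))
print(solution, f(*solution))
```

The result is a local optimum that depends on the starting point and on the random samples, which is why the choice of neighborhood and of p matters.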


2. CLEVER: Clustering with Plug-in Fitness Functions

In the last 5 years, the UH-DMML Research Group at the

University of Houston developed families of clustering

algorithms that find contiguous spatial clusters by maximizing a

plug-in fitness function.

This work is motivated by a mismatch between evaluation

measures of traditional clustering algorithms (such as cluster

compactness) and what domain experts are actually looking for.

Plug-in fitness functions allow domain experts to tell the clustering algorithm which properties of “good” clusters it should seek.


Region Discovery Framework


The algorithms we currently investigate solve the following problem:

Given:

A dataset O with a schema R

A distance function d defined on instances of R

A fitness function q(X) that evaluates clusterings X={c1,…,ck} as follows:

$q(X) = \sum_{c \in X} \mathit{reward}(c) = \sum_{c \in X} i(c) \cdot \mathit{size}(c)^{\beta}$ with $\beta > 1$

Objective:

Find $c_1, \dots, c_k \subseteq O$ such that:

1. $c_i \cap c_j = \emptyset$ if $i \neq j$

2. $X = \{c_1, \dots, c_k\}$ maximizes $q(X)$

3. All clusters $c_i \in X$ are contiguous (each pair of objects belonging to $c_i$ has to be Delaunay-connected with respect to $c_i$ and to $d$)

4. $c_1 \cup \dots \cup c_k \subseteq O$

5. $c_1, \dots, c_k$ are usually ranked based on the reward each cluster receives, and low-reward clusters are frequently not reported
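A minimal sketch of how such a plug-in fitness function can be evaluated, assuming that the reward of a cluster is its interestingness i(c) multiplied by its size raised to a power β. The interestingness function shown here is hypothetical: it is a thresholded purity measure using the parameter names th and η that appear in the experiments, and its exact form is an assumption, not the definition used in the talk.

```python
def reward(cluster, interestingness, beta):
    """reward(c) = i(c) * size(c)**beta (beta is assumed to be a size exponent)."""
    return interestingness(cluster) * len(cluster) ** beta

def fitness(clustering, interestingness, beta):
    """q(X) = sum of the rewards of all clusters c in X."""
    return sum(reward(c, interestingness, beta) for c in clustering)

def purity_interestingness(cluster, th=0.6, eta=1.1):
    """Hypothetical i(c): zero unless the majority-class purity exceeds th."""
    if not cluster:
        return 0.0
    labels = [label for _, label in cluster]  # objects are (point, class label) pairs
    purity = max(labels.count(l) for l in set(labels)) / len(labels)
    return (purity - th) ** eta if purity > th else 0.0

# Two clusters: one pure (rewarded), one mixed (purity 0.5, so reward 0).
X = [
    [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a")],
    [((5, 5), "a"), ((5, 6), "b")],
]
print(fitness(X, purity_interestingness, beta=1.6))
```

Because any function with the signature of `purity_interestingness` can be passed in, the clustering algorithm itself does not change when a domain expert supplies a different notion of a "good" cluster.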


Example 1: Finding Regional Co-location Patterns in Spatial Data

Figure 1: Co-location regions involving deep and

shallow ice on Mars

Figure 2: Chemical co-location

patterns in Texas Water Supply

Objective: Find co-location regions using various clustering algorithms and novel fitness functions.

Applications:

1. Finding regions on planet Mars where shallow and deep ice are co-located, using point and raster datasets. In Figure 1, regions in red have very high co-location and regions in blue have anti-co-location.

2. Finding co-location patterns involving chemical concentrations with values in the tails of their statistical distribution in Texas’ ground water supply. Figure 2 indicates discovered regions and their associated chemical patterns.

Example 2: Regional Regression


Geo-regression approaches: Multiple regression functions are

used that vary depending on location.

Regional Regression:

I. Discover regions with strong relationships between dependent and independent variables

II. Construct a regional regression function for each region

III. When predicting the dependent variable of an object, use the regression function associated with the location of the object
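Steps II and III can be sketched as follows, assuming the regions from step I are already known. Everything in this block is illustrative: `region_of` is a hypothetical lookup from a location to a region id, and a one-variable least-squares line stands in for whatever regression model is actually used.

```python
def fit_line(points):
    """Ordinary least squares for y = a + b*x on a list of (x, y) pairs.
    Assumes the x values in a region are not all identical."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx
    return mean_y - b * mean_x, b

def fit_regional_models(objects, region_of):
    """Step II: one regression function per region.
    objects are (location, x, y) triples; region_of is a hypothetical region lookup."""
    by_region = {}
    for location, x, y in objects:
        by_region.setdefault(region_of(location), []).append((x, y))
    return {region: fit_line(pts) for region, pts in by_region.items()}

def predict(models, region_of, location, x):
    """Step III: use the regression function of the region that contains the location."""
    a, b = models[region_of(location)]
    return a + b * x

# Toy data: y = 2x + 1 in the west, y = -x + 5 in the east.
region_of = lambda loc: "west" if loc[0] < 0 else "east"
objects = [((-1, 0), x, 2 * x + 1) for x in range(5)] + [((1, 0), x, -x + 5) for x in range(5)]
models = fit_regional_models(objects, region_of)
print(predict(models, region_of, (-3, 2), 10), predict(models, region_of, (4, 2), 10))
```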


Representative-based Clustering

[Figure: objects plotted over Attribute1 and Attribute2, with four groups labeled 1 to 4]

Objective: Find a set of objects O_R such that the clustering X obtained by using the objects in O_R as representatives minimizes q(X).

Characteristic: clusters are formed by assigning objects to the closest representative

Popular Algorithms: K-means, K-medoids/PAM, CLEVER
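The characteristic step, forming clusters by assigning each object to its closest representative, can be sketched as below. Euclidean distance and the function name are assumptions; any distance function d could be substituted.

```python
import math

def assign_to_representatives(objects, representatives):
    """Return one cluster (a list of objects) per representative."""
    clusters = [[] for _ in representatives]
    for obj in objects:
        # Index of the closest representative; ties go to the lowest index.
        nearest = min(range(len(representatives)),
                      key=lambda i: math.dist(obj, representatives[i]))
        clusters[nearest].append(obj)
    return clusters

objects = [(0, 0), (0, 1), (10, 10), (9, 10)]
print(assign_to_representatives(objects, [(0, 0), (10, 10)]))
```

For n objects and k representatives this loop performs n*k distance computations.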


A prototype-based clustering algorithm which supports plug-in fitness functions

Uses a randomized hill climbing procedure to find a “good” set of prototype data objects that represent clusters

“Good” means maximizing the plug-in fitness function

Searches for the “correct number of clusters”

CLEVER is powerful but usually slow

[Diagram: components of CLEVER: hill climbing procedure, neighboring solutions generator, assign cluster members, plug-in fitness function]

Pseudo Code of CLEVER

Inputs: Dataset O, k’, neighborhood-size, p, q, β, object-distance-function d or distance matrix D, i-max

Outputs: Clustering X, fitness q(X), rewards for clusters in X

Algorithm:

1. Create a current solution by randomly selecting k’ representatives from O.

2. If i-max iterations have been done, terminate with the current solution.

3. Create p neighbors of the current solution randomly using the given neighborhood definition.

4. If the best neighbor improves the fitness q, it becomes the current solution. Go back to step 2.

5. If the fitness does not improve, the solution neighborhood is re-sampled by generating p’ more neighbors (more precisely, first 2*p solutions and then (q-2)*p solutions are re-sampled). If re-sampling does not lead to a better solution, terminate returning the current solution (however, clusters that receive a reward of 0 are considered outliers, so zero-reward clusters are not returned); otherwise, go back to step 2, replacing the current solution by the best solution found by re-sampling.
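The pseudo code can be sketched in Python as follows. This is a simplified illustration, not the authors' implementation. The neighborhood definition is not spelled out here, so the sketch assumes that a neighbor is created by inserting, deleting, or replacing one representative; Euclidean distance, the function names, and the size exponent `beta` in the reward are likewise assumptions.

```python
import math
import random

def assign(objects, reps):
    """Form clusters by assigning each object to its closest representative."""
    clusters = [[] for _ in reps]
    for obj in objects:
        nearest = min(range(len(reps)), key=lambda i: math.dist(obj, reps[i]))
        clusters[nearest].append(obj)
    return clusters

def q_of(objects, reps, interestingness, beta):
    """Fitness: sum of i(c) * size(c)**beta over the non-empty clusters induced by reps."""
    return sum(interestingness(c) * len(c) ** beta for c in assign(objects, reps) if c)

def random_neighbor(objects, reps, rng):
    """Assumed neighborhood: insert, delete, or replace one representative."""
    reps = list(reps)
    unused = [o for o in objects if o not in reps]
    op = rng.choice(["insert", "delete", "replace"])
    if op == "delete" and len(reps) > 1:
        reps.pop(rng.randrange(len(reps)))
    elif unused:
        if op == "replace":
            reps[rng.randrange(len(reps))] = rng.choice(unused)
        else:  # insert (also the fallback when the last representative cannot be deleted)
            reps.append(rng.choice(unused))
    return reps

def clever(objects, k_start, p, q, beta, interestingness, i_max, seed=0):
    rng = random.Random(seed)
    current = rng.sample(objects, k_start)                     # step 1
    current_q = q_of(objects, current, interestingness, beta)
    for _ in range(i_max):                                     # step 2
        improved = False
        for batch in (p, 2 * p, (q - 2) * p):                  # step 3, then re-sampling (step 5)
            if batch <= 0:
                continue
            candidates = [random_neighbor(objects, current, rng) for _ in range(batch)]
            best = max(candidates, key=lambda r: q_of(objects, r, interestingness, beta))
            best_q = q_of(objects, best, interestingness, beta)
            if best_q > current_q:                             # step 4
                current, current_q, improved = best, best_q, True
                break
        if not improved:
            break                                              # re-sampling found nothing better
    # Clusters with zero reward are treated as outliers and are not returned.
    clusters = [c for c in assign(objects, current) if c and interestingness(c) > 0]
    return clusters, current_q
```

Each candidate requires a full cluster assignment and fitness evaluation, so the cost of one iteration grows with the number of sampled neighbors.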


3. PAR-CLEVER: A Faster Clustering Algorithm

• OpenMP

• CUDA (GPU computing)

• MPI

• Map/Reduce


10Ovals

Size: 3,359

Fitness function: purity

Earthquake

Size: 330,561

Fitness function: find clusters with high variance with respect to earthquake depth

Yahoo Ads Clicks

Full size: 3,009,071,396; subset: 2,910,613

Fitness function: minimum intra-cluster distance


1. Assign cluster members: O(n*k)

• Data parallelization

• Highly independent

• The first priority for parallelization

2. Fitness value calculation: ~O(n)

3. Neighboring solutions generation: ~O(p)

n := number of objects in the dataset

k := number of clusters in the current solution

p := sampling rate (how many neighbors of the current solution are sampled)
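Why the assignment step parallelizes well can be seen in a small sketch: each object's closest representative is computed independently of every other object, so the n objects can be split into chunks that are processed separately. The sketch below uses Python threads only to show the decomposition; it is not the OpenMP or CUDA code from the talk, and because of Python's global interpreter lock it will not show the speedups reported here. Function names and the chunking scheme are assumptions.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def assign_chunk(chunk, reps):
    """Index of the closest representative for every object in one chunk: O(len(chunk) * k)."""
    return [min(range(len(reps)), key=lambda i: math.dist(obj, reps[i])) for obj in chunk]

def parallel_assign(objects, reps, workers=4):
    """Split the n objects into chunks and assign each chunk independently."""
    size = max(1, math.ceil(len(objects) / workers))
    chunks = [objects[i:i + size] for i in range(0, len(objects), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map preserves chunk order, so labels line up with the input objects
        parts = list(pool.map(assign_chunk, chunks, [reps] * len(chunks)))
    return [label for part in parts for label in part]

objects = [(0, 0), (1, 0), (10, 10), (11, 10), (0, 1)]
print(parallel_assign(objects, [(0, 0), (10, 10)], workers=2))
```

No chunk reads or writes data owned by another chunk, which is the sense in which the step is highly independent.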


crill-001 to crill-016 (OpenMP)

Processor: 4 x AMD Opteron(tm) Processor 6174

CPU cores: 48

Core speed: 2200 MHz

Memory: 64 GB

crill-101 and crill-102 (GPU computing, NVIDIA CUDA)

Processor: 2 x AMD Opteron(tm) Processor 6174

CPU cores: 24

Core speed: 2200 MHz

Memory: 32 GB

GPU device: 4 x Tesla M2050

GPU memory: 3 GB

CUDA cores: 448


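10Ovals Dataset (size = 3359)

p=100, q=27, k’=10, η = 1.1, th=0.6, β = 1.6

Interestingness Function=Purity

| Version | Metric | 1 thread | 6 threads | 12 threads | 24 threads | 48 threads |
|---|---|---|---|---|---|---|
| Loop-level | Time (sec) | 248.49 | 50.52 | 30.09 | 20.58 | 16.39 |
| Loop-level | Speedup | 1.00 | 4.92 | 8.26 | 12.07 | 15.16 |
| Loop-level | Efficiency | 1.00 | 0.82 | 0.69 | 0.50 | 0.32 |
| Loop-level + Incremental Updating | Time (sec) | 229.88 | 49.43 | 29.99 | 20.28 | 15.61 |
| Loop-level + Incremental Updating | Speedup | 1.00 | 4.65 | 7.67 | 11.34 | 14.73 |
| Loop-level + Incremental Updating | Efficiency | 1.00 | 0.78 | 0.64 | 0.47 | 0.31 |
| Task-level | Time (sec) | 248.49 | 41.83 | 21.67 | 11.44 | 6.40 |
| Task-level | Speedup | 1.00 | 5.94 | 11.47 | 21.72 | 38.84 |
| Task-level | Efficiency | 1.00 | 0.99 | 0.96 | 0.90 | 0.81 |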

Iterations = 14, Evaluated neighbor solutions = 15200, k = 5, Fitness = 77187.7
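The speedup and efficiency figures are consistent with the usual definitions, speedup = T(1 thread) / T(n threads) and efficiency = speedup / n. The short sketch below recomputes them for the task-level run times; the function name is an assumption, and because the published times are rounded, recomputed values can differ from the slide in the last digit.

```python
def speedup_and_efficiency(times_by_threads):
    """Map thread count -> (speedup, efficiency), using the 1-thread time as baseline."""
    t1 = times_by_threads[1]
    return {n: (t1 / t, t1 / t / n) for n, t in times_by_threads.items()}

# Task-level OpenMP run times (seconds) for the 3359-object dataset.
task_level = {1: 248.49, 6: 41.83, 12: 21.67, 24: 11.44, 48: 6.40}

for n, (s, e) in speedup_and_efficiency(task_level).items():
    print(f"{n:2d} threads: speedup {s:6.2f}, efficiency {e:4.2f}")
```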

[Figure: 10Ovals, run time in seconds versus number of threads for Loop-level, Loop-level + Incremental Updating, and Task-level]

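Earthquake Dataset (size = 330,561)

p=50, q=12, k’=100, η = 2, th=1.2, β = 1.4

Interestingness Function=Variance High

| Version | Metric | 1 thread | 6 threads | 12 threads | 24 threads | 48 threads |
|---|---|---|---|---|---|---|
| Loop-level | Time (hours) | 185.39 | 35.27 | 23.17 | 12.38 | 10.20 |
| Loop-level | Speedup | 1 | 5.26 | 8.00 | 14.97 | 18.18 |
| Loop-level | Efficiency | 1 | 0.88 | 0.67 | 0.62 | 0.38 |
| Loop-level + Incremental Updating | Time (hours) | 30.24 | 9.18 | 6.89 | 6.06 | 6.84 |
| Loop-level + Incremental Updating | Speedup | 1 | 3.29 | 4.39 | 4.99 | 4.42 |
| Loop-level + Incremental Updating | Efficiency | 1 | 0.55 | 0.37 | 0.21 | 0.09 |
| Task-level | Time (hours) | 185.39 | 31.95 | 17.19 | 9.76 | 6.14 |
| Task-level | Speedup | 1 | 5.80 | 10.79 | 19.00 | 30.18 |
| Task-level | Efficiency | 1 | 0.97 | 0.90 | 0.79 | 0.63 |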

Iterations = 216, Evaluated neighbor solutions = 21,950, k = 115

[Figure: Earthquake, run time in hours versus number of threads for Loop-level, Loop-level + Incremental Updating, and Task-level]

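Yahoo Reduced Dataset (size = 2910613)

p=48, q=7, k’=80, η = 1.2, th=0, β = 1.000001

Interestingness Function=Average Distance to Medoid

| Version | Metric | 1 thread | 6 threads | 12 threads | 24 threads | 48 threads |
|---|---|---|---|---|---|---|
| Loop-level | Time (hours) | 154.62 | 29.25 | 16.74 | 12.12 | 9.94 |
| Loop-level | Speedup | 1 | 5.29 | 9.24 | 12.75 | 15.55 |
| Loop-level | Efficiency | 1 | 0.88 | 0.77 | 0.53 | 0.32 |
| Loop-level + Incremental Updating | Time (hours) | 28.30 | 8.15 | 6.71 | 5.55 | 5.68 |
| Loop-level + Incremental Updating | Speedup | 1 | 3.47 | 4.22 | 5.10 | 4.98 |
| Loop-level + Incremental Updating | Efficiency | 1 | 0.58 | 0.35 | 0.21 | 0.10 |
| Task-level | Time (hours) | 154.62 | 25.78 | 12.97 | 6.63 | 3.42 |
| Task-level | Speedup | 1 | 6.00 | 11.92 | 23.33 | 45.21 |
| Task-level | Efficiency | 1 | 1.00 | 0.99 | 0.97 | 0.94 |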

Iterations = 10, Evaluated neighbor solutions = 480, k = 94

[Figure: Yahoo, run time in hours versus number of threads for Loop-level, Loop-level + Incremental Updating, and Task-level]

10Ovals Dataset (size = 3359)

p=100, q=27, k’=10, η = 1.1, th=0.6, β = 1.6

Interestingness Function=Purity

CUDA run time (seconds):

| Run | 1 | 2 | 3 | 4 | 5 | 6 | Avg |
|---|---|---|---|---|---|---|---|
| Time (sec) | 1.33 | 1.32 | 1.34 | 1.32 | 1.33 | 1.32 | 1.327 |

Iterations = 12, Evaluated neighbor solutions = 5100, k = 5

vs.

OpenMP Task-level

| #threads | Sequential | 6 | 12 | 24 | 48 |
|---|---|---|---|---|---|
| Time (sec) | 248.49 | 41.83 | 21.67 | 11.44 | 6.40 |

Iterations = 14, Evaluated neighbor solutions = 15200, k = 5, Fitness = 77187.7

The CUDA version evaluates 5100 solutions in 1.327 seconds, which corresponds to 15200 solutions in 3.95 seconds at the same rate.

Speedup = Time(CPU) / Time(GPU)

• 63x speedup compared to the sequential version

• 1.62x speedup compared to OpenMP with 48 threads

Earthquake Dataset (size = 330561)

p=50, q=12, k’=100, η = 2, th=1.2, β = 1.4

Interestingness Function=Variance High

CUDA run time (seconds):

| Run | 1 | 2 | 3 | 4 | 5 | 6 | Avg |
|---|---|---|---|---|---|---|---|
| Time (sec) | 138.95 | 146.56 | 143.82 | 139.10 | 146.19 | 147.03 | 143.61 |

Iterations = 158, Evaluated neighbor solutions = 28,900, k = 92

vs.

OpenMP Task-level

| #threads | Sequential | 6 | 12 | 24 | 48 |
|---|---|---|---|---|---|
| Time (hours) | 185.39 | 31.95 | 17.19 | 9.76 | 6.14 |

Iterations = 216, Evaluated neighbor solutions = 21950, k = 115

The CUDA version evaluates 28,900 solutions in 143.61 seconds, which corresponds to 21950 solutions in 109.07 seconds at the same rate.

Speedup = Time(CPU) / Time(GPU)

• 6119x speedup compared to the sequential version

• 202x speedup compared to OpenMP with 48 threads
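The reported GPU speedups can be reproduced with a few lines of arithmetic. The sketch assumes that the GPU time is scaled linearly to the number of solutions the OpenMP run evaluated, which is an interpretation that matches the published numbers; the function names are hypothetical. Values are printed with full precision so that they can be compared against the rounded figures on the slides.

```python
def scaled_time(measured_seconds, measured_solutions, target_solutions):
    """Scale a measured run time linearly to a different number of evaluated solutions."""
    return measured_seconds * target_solutions / measured_solutions

def speedup(cpu_seconds, gpu_seconds):
    """Speedup = Time(CPU) / Time(GPU)."""
    return cpu_seconds / gpu_seconds

# Smaller dataset: GPU evaluated 5100 solutions in 1.327 s; OpenMP evaluated 15200.
gpu_small = scaled_time(1.327, 5100, 15200)
print(gpu_small, speedup(248.49, gpu_small), speedup(6.40, gpu_small))

# Earthquake: GPU evaluated 28900 solutions in 143.61 s; OpenMP evaluated 21950
# and its times are given in hours, so they are converted to seconds first.
gpu_quake = scaled_time(143.61, 28900, 21950)
print(gpu_quake, speedup(185.39 * 3600, gpu_quake), speedup(6.14 * 3600, gpu_quake))
```

The hours-to-seconds conversion accounts for most of the difference in magnitude between the two sets of speedups.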


The representatives are read frequently in the computation that assigns objects to clusters. The results presented earlier cached the representatives in shared memory for faster access.

The following table compares the performance of CLEVER with and without caching the representatives on the earthquake data set. The size of the cached representatives is 2 MB.

The results show that caching the representatives improves the runtime very little (0.09%).

Earthquake Dataset (size = 330561)

p=50, q=12, k’=100, η = 2, th=1.2, β = 1.4

Interestingness Function=Variance High

Run time (seconds):

| | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Run 6 | Avg |
|---|---|---|---|---|---|---|---|
| Cache | 138.95 | 146.56 | 143.82 | 139.10 | 146.19 | 147.03 | 143.61 |
| No-cache | 144.63 | 139.9 | 144.27 | 144.5 | 144.71 | 144.44 | 143.74 |

Iterations = 158, Evaluated neighbor solutions = 28,900, k = 92

The OpenMP version uses an object-oriented programming (OOP) design inherited from the original implementation, whereas the redesigned CUDA version is closer to a procedural implementation.

CUDA hardware has higher bandwidth, which contributed a little to the speedup

Caching contributes little to the speedup (as analyzed above)

The CUDA and OpenMP results indicate that the parallel algorithm scales well on multi-core processors: computations which take days can now be performed in minutes or hours.

OpenMP

Easy to implement

Good speedup

Limited by the number of cores and the amount of RAM

CUDA GPU

Extra attention needed for CUDA programming

Lower level of programming: registers, cache memory…

GPU memory hierarchy is different from CPU

Only some data structures are supported

Synchronization between threads in different blocks is not possible

Very large speedups, some of which are still under investigation

More work on the CUDA version

Conduct more experiments that explain what works well, what does not, and why

Analyze in more depth how the capability to search many more solutions affects solution quality

Implement a version of CLEVER which conducts multiple randomized hill climbing searches in parallel and which employs dynamic load balancing: more resources are allocated to the “more promising” searches

Reuse code for speeding up other data mining algorithms which use randomized hill climbing