Neutron hybrid mode


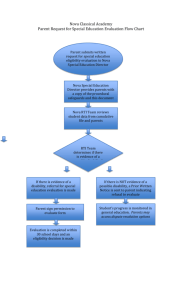

Neutron Deployment at Scale
Igor Bolotin, Cloud Architecture
Vinay Bannai, SDN Architecture

About eBay Inc.
eBay Inc. enables commerce by delivering flexible and scalable solutions that foster merchant growth. With 145 million active buyers globally, eBay is one of the world's largest online marketplaces, where practically anyone can buy and sell practically anything. With 148 million registered accounts in 193 markets and 26 currencies around the world, PayPal enables global commerce, processing almost 8 million payments every day. eBay Enterprise is a leading provider of commerce technologies, omnichannel operations and marketing solutions, serving 1,000 retailers and brands.

Outline of the Presentation
• Business case
• Cloud at eBay Inc
• Deployment patterns
• Problem areas
• How we addressed them
• Future direction
• Summary
• Q&A

What Do Our Businesses Need?
• Agility
  − Reduce time to market
  − Enable innovation
• Efficiency
  − Elastic scale
  − Reduce overall cost
• Multi-tenancy
• Availability
• Security & compliance
• Software-enabled data centers

Cloud at eBay Inc
[Diagram: the eBay Inc Cloud, with Global Orchestration and Identity & Image Management spanning several Regions/DCs; each Region/DC contains Availability Zones (AZs) built from OpenStack controllers and Nova cells]

Deployment Patterns
• Private cloud for all eBay Inc properties
• Global orchestration with traffic and load balancing
• Identity management
  − Region level (eventually global)
• Image management
  − Region level
• Nova/Cinder/Neutron
  − Availability zones
  − Active/active servers
• Trove
• Zabbix for monitoring
• All services run behind a load balancer VIP

Deployment Patterns (contd.)
• Shared cloud between tenants
• Different types of tenants
  − eBay production, PP production, StubHub, GSI Enterprise, etc.
  − Dev/QA
  − Sandbox environment, internal tenants (IT, VPCs)
• Production traffic
  − All bridged, no overlays
  − No DHCP
• Dev/QA and some of the internal tenants
  − Overlays
  − DHCP
[Diagram: gateway nodes and physical racks]

Areas With Scale Issues
• Hypervisor scale-out
• Overlay networks
• Bridged networks
• Neutron services (DHCP, metadata, API server)
• SDN controllers
• Network gateway nodes
• Upgrade

Hypervisor Scale-Out
[Diagram: load-balanced Nova API and Neutron API servers in front of multiple Nova cells, each cell with its own Nova scheduler, plus DHCP agents and SDN controllers]
• Several hundred hypervisors in a cell
• Multiple cells in an AZ
• Several thousand hypervisors in an AZ
• Nova cells mitigate hypervisor scale
• Neutron scaling
  − The majority of the hypervisors support bridged VMs
  − Hybrid mode with both overlay and bridged VMs

Bridged and Overlay
[Diagram: a tenant on a bridged network, whose VMs attach directly to the physical L2/L3 network, next to a tenant on an overlay network carried by a network virtualization layer]

Overlay Networks
• Overlay technology
  − VXLAN
  − STT
• Handling BUM (broadcast, unknown-unicast, multicast) traffic
  − ARP
  − Unknown unicast
  − Multicast
• Logical switches and routers
• Distributed L3 routers
  − Direct tunnels from hypervisor to hypervisor
• Scale-out deployment of gateway nodes

Neutron Services
• Keystone tokens
• Single-threaded Quantum/Neutron server
• DHCP servers
• Heal instance info cache interval

Keystone Token Generation and Authentication
• UUID-based token
  − Must be validated by the Keystone server on every call
• PKI-based token
  − Validated by each service locally, using Keystone's certificates
• Token caching
  − Prevents unnecessary token creation
[Diagram: clients calling the Nova, Neutron, Cinder and Image servers, each of which talks to the Keystone server]

Token Caching
• Applies to UUID-based tokens
  − 98% of tokens were generated by inter-API service calls
  − 92% were Quantum/Neutron related
  − An average of 25 to 30 tokens/sec were created by Quantum/Neutron alone
  − RPC call overhead and a bloated token table
• Fix
  − Use token caching (1 hour)
  − Use PKI for the service tenant
  − Reduces network chatter and improves performance
• OpenStack bugs
  − Bug ID: 1191159
  − Bug ID: 1250580

Neutron Multi-Worker
• Prior to Havana
• One API thread handled both REST calls and RPC calls
• We broke the API up into two threads
  − One handles REST API calls
  − The other handles RPC calls
• Havana fixes
  − DHCP renewals are no longer handled by the Neutron servers; dhcp_release is used instead
  − Multi-worker support

Heal Instance Info Cache Interval
• All Nova computes regularly poll the Neutron server
• To get network info for the instances running on the compute node
• The default is 10 seconds
• With hundreds of hypervisors and tens of thousands of VMs, this adds up
  − Even though only one instance is checked per interval
• We adjusted the interval to 600 seconds

DHCP Scaling
• The most common source of problems
• We employ multiple strategies
  − DHCP active/standby
  − Planning to support DHCP active/active
  − No DHCP / config drive option
• Production environment
  − No DHCP
  − Config drive management
  − Requires cloud-init-aware images

SDN Controllers
[Diagram: Nova API and Neutron API services on the OpenStack controllers (OS Ctrl), with three Neutron API servers each paired with an SDN controller]

Network Gateway Nodes
• Only with overlay networks
• Scale-out architecture
• Problems with high CPU utilization
• Number of flows in the gateway node
• East-west traffic also hitting VIPs
  − Load balancer running as an appliance on a hypervisor
  − Using SNAT
• OVS enhancements
  − Use megaflows in Open vSwitch
  − Multi-core version of ovs-vswitchd

OVS Improvements: Megaflows
• Prior to OVS 1.11, the kernel flow cache held exact-match entries only
• Megaflows introduce wildcarding in the kernel module
• Fewer misses and punts to user space
• Reduced number of flows in the kernel
• Requires OVS 1.11 or later
• Cons
  − Using security groups nullifies the effects of megaflows

OVS Improvements: Multi-Core
[Diagram: with OVS < 2.0, a single ovs-vswitchd thread in user space serves the kernel module; with OVS >= 2.0, ovs-vswitchd spreads its work across multiple CPU cores]

Future Work
• VPC model
• Neutron tagging blueprint
  − Network assignment for bridged VMs
  − Network selection for VPC tenants
  − Network scheduling
  − Additional metadata information
• Blueprint
  − https://blueprints.launchpad.net/neutron/+spec/network-tagging

Network Assignment for Bridged VMs
• There are two primary ways to plumb a VM into a network
  − Pass the net-id to nova boot
  − Create a port and pass it to nova boot
• Nova schedules the instance without much knowledge of the underlying network
• The blueprint proposes to address this issue as one of its use cases

Network Tagging
[Diagram: a VM to be placed across zones FZ1 to FZ4, where Racks 1 to 4 each carry their own network, N1 to N4]

Summary
• Know your requirements
• Understand your size and scale
• Pick the SDN controller based on your needs
• Design with multiple failure domains
• Overlay, bridged or hybrid
• Monitor your cloud for performance degradation

Thank you.
Yes, we are hiring! dl-ebay-cloud-hiring@corp.ebay.com
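The Overlay Networks slide names VXLAN as one of the overlay encapsulations. As a supplementary illustration, not part of the talk, the sketch below packs and parses the 8-byte VXLAN header defined in RFC 7348. That header carries a flags byte, whose 0x08 "I" bit marks the VNI as valid, and a 24-bit VXLAN Network Identifier (VNI). The VNI is what keeps each tenant's logical switch separate on the shared underlay. The function names are hypothetical, and STT is not covered here.

```python
import struct

# RFC 7348: the "I" flag (0x08) in the first byte says the VNI field is valid.
VXLAN_FLAG_VNI_VALID = 0x08


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 1 << 24:
        raise ValueError(f"VNI {vni} does not fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI, rejecting short headers or headers without the I flag."""
    if len(header) < 8:
        raise ValueError("a VXLAN header is 8 bytes long")
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


if __name__ == "__main__":
    hdr = build_vxlan_header(5000)
    print(hdr.hex(), parse_vni(hdr))
```

A 24-bit VNI allows about 16 million logical segments. This headroom is one reason overlays suit Dev/QA and internal tenants, which create many networks.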
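The Token Caching slide reports that Quantum/Neutron alone created 25 to 30 UUID tokens per second, and that the fix was to cache tokens for an hour. The sketch below is a minimal model of that idea, not Keystone's or the deck's code. A service reuses a token per (user, project) until its time-to-live expires, and only then asks for a new one. `issue_token` is a hypothetical stand-in for the real Keystone call, and the injectable clock exists only to make the behaviour testable.

```python
import time
from typing import Callable, Dict, Tuple


class TokenCache:
    """Reuse a service token until its TTL expires instead of requesting one per call.

    issue_token is a hypothetical callable standing in for a Keystone token request.
    """

    def __init__(
        self,
        issue_token: Callable[[str, str], str],
        ttl_s: float = 3600.0,  # 1 hour, as on the slide
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._issue = issue_token
        self._ttl = ttl_s
        self._clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[str, float]] = {}

    def get(self, user: str, project: str) -> str:
        key = (user, project)
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now < cached[1]:
            return cached[0]  # still valid: no round trip to Keystone
        token = self._issue(user, project)
        self._entries[key] = (token, now + self._ttl)
        return token
```

With a one-hour TTL, a service that previously requested a token on every call makes one request per hour per (user, project) pair. The trade-off is that a revoked token can stay in use until its cache entry expires.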
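The Neutron Multi-Worker slide describes splitting one API thread into a REST thread and an RPC thread. The sketch below is only a threading model of why that helps, not Neutron's implementation. Two queues feed two threads, so an RPC handler that blocks (here, waiting on an event) cannot delay REST responses. All names and the sample job strings are hypothetical.

```python
import queue
import threading
from typing import Callable, List, Optional, Tuple

Job = Optional[Callable[[], str]]  # None is the shutdown sentinel


def serve(jobs: "queue.Queue[Job]", results: List[str], lock: threading.Lock) -> None:
    """Run jobs from one queue until the None sentinel arrives."""
    while True:
        job = jobs.get()
        if job is None:
            return
        out = job()
        with lock:
            results.append(out)


def demo_split_workers() -> Tuple[List[str], List[str]]:
    """Show that a blocked RPC handler does not stall REST handling."""
    results: List[str] = []
    lock = threading.Lock()
    rpc_may_finish = threading.Event()
    rest_jobs: "queue.Queue[Job]" = queue.Queue()
    rpc_jobs: "queue.Queue[Job]" = queue.Queue()
    rest_thread = threading.Thread(target=serve, args=(rest_jobs, results, lock))
    rpc_thread = threading.Thread(target=serve, args=(rpc_jobs, results, lock))
    rest_thread.start()
    rpc_thread.start()

    def slow_rpc() -> str:
        rpc_may_finish.wait()  # stands in for a burst of agent RPC traffic
        return "rpc: agent state report"

    rpc_jobs.put(slow_rpc)
    rest_jobs.put(lambda: "rest: GET /v2.0/networks")
    rest_jobs.put(None)
    rest_thread.join()  # REST work completes while the RPC thread is still blocked
    with lock:
        done_while_rpc_busy = list(results)

    rpc_may_finish.set()
    rpc_jobs.put(None)
    rpc_thread.join()
    return done_while_rpc_busy, results
```

With a single shared thread, the REST call would have queued behind the blocked RPC job. Separate workers isolate the two paths.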
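The Heal Instance Info Cache Interval slide says the numbers "add up." The arithmetic below makes that concrete under stated assumptions. Each compute host sends one network-info query to Neutron per heal pass and refreshes one instance per pass. The AZ size of 5,000 hypervisors with 20 VMs each is hypothetical, chosen to match "several thousand hypervisors in an AZ." In nova.conf the setting is `heal_instance_info_cache_interval`.

```python
def neutron_poll_rate(hypervisors: int, interval_s: float) -> float:
    """Requests per second reaching neutron-server, assuming every compute host
    makes one network-info call per heal interval."""
    return hypervisors / interval_s


def full_heal_cycle_s(instances_per_host: int, interval_s: float) -> float:
    """Seconds before every instance on one host has been refreshed once,
    given that only one instance is checked per interval."""
    return instances_per_host * interval_s


if __name__ == "__main__":
    # Hypothetical AZ: 5,000 hypervisors with 20 VMs each.
    for interval in (10, 600):
        rate = neutron_poll_rate(5000, interval)
        cycle = full_heal_cycle_s(20, interval)
        print(f"interval={interval}s rate={rate:.1f} req/s full cycle={cycle:.0f}s")
```

Under these assumptions, moving from 10 to 600 seconds cuts background load from 500 to about 8 requests per second. The cost is a slower self-heal: a stale cache entry on a 20-VM host may take up to 12,000 seconds to be corrected.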
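The DHCP Scaling slide relies on config drive instead of DHCP in production, which is why images must be cloud-init aware. A config drive exposes instance metadata as files, such as `openstack/latest/meta_data.json`, on a filesystem labelled `config-2`. The sketch below shows how a guest could read that file once the drive is mounted. cloud-init already does this, so the snippet is illustrative only. The mount point and function name are assumptions.

```python
import json
from pathlib import Path
from typing import Any, Dict


def read_config_drive_metadata(mount_point: str) -> Dict[str, Any]:
    """Load instance metadata from an already-mounted OpenStack config drive.

    The drive is a filesystem labelled "config-2"; cloud-init normally finds and
    mounts it itself. The mount_point argument is an assumption of this sketch.
    """
    meta_path = Path(mount_point) / "openstack" / "latest" / "meta_data.json"
    with meta_path.open(encoding="utf-8") as f:
        return json.load(f)
```

For example, `read_config_drive_metadata("/mnt/config")["hostname"]` would return the instance's hostname, assuming the drive is mounted at the hypothetical path `/mnt/config`. No DHCP exchange is involved, because the data is attached to the VM at boot.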
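The Megaflows slide says wildcarding reduces kernel flows, and that security groups nullify the effect. The sketch below is a simple counting model of that claim, not OVS's classifier. Each cache entry matches only the fields the forwarding decision consulted, and every other field is wildcarded. If forwarding looks only at the destination MAC, many connections collapse into one entry. If port-level filtering also has to inspect transport ports, the entries become as numerous as with exact match. The field names and traffic pattern are hypothetical. Which fields a real security-group implementation forces into the match depends on how its rules are compiled.

```python
from typing import Dict, Iterable, List, Sequence

Packet = Dict[str, object]

EXACT_MATCH = ["dst_mac", "src_ip", "dst_ip", "ip_proto", "tp_src", "tp_dst"]
L2_FORWARDING_ONLY = ["dst_mac"]
WITH_PORT_FILTERING = ["dst_mac", "ip_proto", "tp_src", "tp_dst"]


def kernel_cache_entries(packets: Iterable[Packet], matched_fields: Sequence[str]) -> int:
    """Count cache entries when each entry matches only matched_fields;
    every other field is treated as a wildcard."""
    return len({tuple(p[f] for f in matched_fields) for p in packets})


def tcp_connections(n: int) -> List[Packet]:
    """n connections from one client to one web server, differing only in source port."""
    return [
        {"dst_mac": "fa:16:3e:00:00:01", "src_ip": "10.0.0.5", "dst_ip": "10.0.0.9",
         "ip_proto": 6, "tp_src": 40000 + i, "tp_dst": 80}
        for i in range(n)
    ]
```

For 1,000 such connections, the exact-match and port-filtering cases each need 1,000 entries, while destination-MAC forwarding needs one. This matches the slide's "Cons" bullet.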
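The Network Assignment and Network Tagging slides point out that Nova schedules bridged VMs without knowing that each bridged network (N1 to N4) exists only in specific racks. The sketch below is a hypothetical illustration, not the blueprint's design, of the two directions a network-aware placement could take. If the host is chosen first, the network follows from the host's rack. If a net-id is passed to nova boot first, only hosts in racks carrying that network are valid. The topology and all names are assumptions.

```python
from typing import Dict, List

# Hypothetical topology mirroring the Network Tagging diagram:
# each rack carries its own bridged network, N1..N4.
RACK_NETWORK: Dict[str, str] = {"rack1": "N1", "rack2": "N2", "rack3": "N3", "rack4": "N4"}


def network_for_host(host_rack: Dict[str, str], host: str) -> str:
    """Host chosen first: derive the bridged network from the host's rack."""
    return RACK_NETWORK[host_rack[host]]


def hosts_for_network(host_rack: Dict[str, str], network: str) -> List[str]:
    """Network chosen first (net-id passed to nova boot): return only hosts in
    racks that carry that network, i.e. the valid placements."""
    return sorted(h for h, rack in host_rack.items() if RACK_NETWORK[rack] == network)
```

For example, with hosts `{"hv-101": "rack1", "hv-201": "rack2", "hv-202": "rack2"}`, a VM requesting N2 can land only on `hv-201` or `hv-202`. A VM requesting N4 has no valid host at all, which a network-unaware scheduler would discover only after picking a host.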