
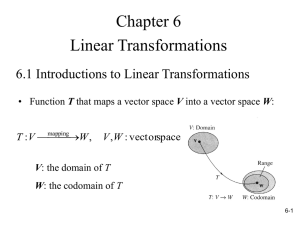

Chapter 6

Linear Transformations

6.1 Introduction to Linear Transformations

6.2 The Kernel and Range of a Linear Transformation

6.3 Matrices for Linear Transformations

6.4 Transition Matrices and Similarity

6.5 Applications of Linear Transformations


6.1 Introduction to Linear Transformations

A function T that maps a vector space V into a vector space W is called a mapping:

$T: V \to W$, where V and W are vector spaces

V: the domain (定義域) of T

W: the codomain (對應域) of T

Image of v under T (在T映射下v的像):

If v is a vector in V and w is a vector in W such that

$T(\mathbf{v}) = \mathbf{w}$,

then w is called the image of v under T

(For each v, there is only one w)

The range of T (T的值域):

The set of all images of vectors in V (see the figure on the

next slide)


The preimage of w (w的反像):

The set of all v in V such that T(v)=w

(For each w, v may not be unique)

The graphical representations of the domain, codomain, and range

※ For example, let $V = R^3$, $W = R^3$, and let T be the orthogonal projection of any vector (x, y, z) onto the xy-plane, i.e. $T(x, y, z) = (x, y, 0)$ (we will use this example many times to explain abstract notions)

※ Then the domain is $R^3$, the codomain is $R^3$, and the range is the xy-plane (a subspace of the codomain $R^3$)

※ (2, 1, 0) is the image of (2, 1, 3)

※ The preimage of (2, 1, 0) is the set of all vectors (2, 1, s), where s is any real number

Ex 1: A function from R2 into R2

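$T: R^2 \to R^2$, $\mathbf{v} = (v_1, v_2) \in R^2$

$T(v_1, v_2) = (v_1 - v_2,\ v_1 + 2v_2)$

(a) Find the image of v = (-1, 2) (b) Find the preimage of w = (-1, 11)

Sol:

(a) $\mathbf{v} = (-1, 2)$

$\Rightarrow T(\mathbf{v}) = T(-1, 2) = (-1 - 2,\ -1 + 2(2)) = (-3, 3)$

(b) $T(\mathbf{v}) = \mathbf{w} = (-1, 11)$

$T(v_1, v_2) = (v_1 - v_2,\ v_1 + 2v_2) = (-1, 11)$

$\Rightarrow v_1 - v_2 = -1$

$\quad\ v_1 + 2v_2 = 11$

$\Rightarrow v_1 = 3,\ v_2 = 4$. Thus {(3, 4)} is the preimage of w = (-1, 11)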
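The two computations of Ex 1 can be checked with a short script. This is only a sketch: the helper names `T` and `preimage` are illustrative choices, and the preimage is found with Cramer's rule applied to the 2×2 system above, which works here because the coefficient determinant is nonzero.

```python
from fractions import Fraction

def T(v):
    """The map of Ex 1: T(v1, v2) = (v1 - v2, v1 + 2*v2)."""
    v1, v2 = v
    return (v1 - v2, v1 + 2 * v2)

def preimage(w):
    """Solve v1 - v2 = w1, v1 + 2*v2 = w2 by Cramer's rule (hypothetical helper)."""
    w1, w2 = w
    det = Fraction(1 * 2 - (-1) * 1)   # determinant of [[1, -1], [1, 2]]
    v1 = (w1 * 2 - (-1) * w2) / det
    v2 = (1 * w2 - w1 * 1) / det
    return (v1, v2)

image = T((-1, 2))          # part (a)
pre = preimage((-1, 11))    # part (b)
print(image, pre)
```

Because the determinant is nonzero, the preimage of every w is a single vector for this particular T.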


Linear Transformation (線性轉換):

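V, W: vector spaces

$T: V \to W$ is a linear transformation of V into W if the following two properties are true

(1) $T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v}), \quad \forall\, \mathbf{u}, \mathbf{v} \in V$

(2) $T(c\mathbf{u}) = cT(\mathbf{u}), \quad \forall\, c \in R$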


Notes:

(1) A linear transformation is said to be operation preserving

(because the same result occurs whether the operations of addition

and scalar multiplication are performed before or after the linear

transformation is applied)

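$T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v})$ (left side: addition in V; right side: addition in W)

$T(c\mathbf{u}) = cT(\mathbf{u})$ (left side: scalar multiplication in V; right side: scalar multiplication in W)

(2) A linear transformation $T: V \to V$ from a vector space into itself is called a linear operator (線性運算子)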


Ex 2: Verifying a linear transformation T from R2 into R2

$T(v_1, v_2) = (v_1 - v_2,\ v_1 + 2v_2)$

Pf:

$\mathbf{u} = (u_1, u_2)$, $\mathbf{v} = (v_1, v_2)$: vectors in $R^2$, c: any real number

(1) Vector addition:

$\mathbf{u} + \mathbf{v} = (u_1, u_2) + (v_1, v_2) = (u_1 + v_1,\ u_2 + v_2)$

$T(\mathbf{u} + \mathbf{v}) = T(u_1 + v_1,\ u_2 + v_2)$

$= ((u_1 + v_1) - (u_2 + v_2),\ (u_1 + v_1) + 2(u_2 + v_2))$

$= ((u_1 - u_2) + (v_1 - v_2),\ (u_1 + 2u_2) + (v_1 + 2v_2))$

$= (u_1 - u_2,\ u_1 + 2u_2) + (v_1 - v_2,\ v_1 + 2v_2)$

$= T(\mathbf{u}) + T(\mathbf{v})$

(2) Scalar multiplication:

$c\mathbf{u} = c(u_1, u_2) = (cu_1, cu_2)$

$T(c\mathbf{u}) = T(cu_1, cu_2) = (cu_1 - cu_2,\ cu_1 + 2cu_2)$

$= c(u_1 - u_2,\ u_1 + 2u_2)$

$= cT(\mathbf{u})$

Therefore, T is a linear transformation
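As a numerical sanity check (not a substitute for the proof above), the two properties can be tested on a few sample vectors. The sample vectors and scalars below are arbitrary choices made for illustration, and the helper names `add` and `scale` are assumptions.

```python
def T(v):
    """T(v1, v2) = (v1 - v2, v1 + 2*v2), the map verified in Ex 2."""
    v1, v2 = v
    return (v1 - v2, v1 + 2 * v2)

def add(u, v):
    """Componentwise vector addition."""
    return tuple(a + b for a, b in zip(u, v))

def scale(c, u):
    """Scalar multiplication."""
    return tuple(c * a for a in u)

# Arbitrary integer samples (illustrative only)
samples = [((1, 2), (3, -4)), ((0, 5), (-2, 7)), ((-1, -1), (6, 0))]
scalars = [-3, 0, 2]

additive = all(T(add(u, v)) == add(T(u), T(v)) for u, v in samples)
homogeneous = all(T(scale(c, u)) == scale(c, T(u))
                  for c in scalars for u, _ in samples)
print(additive, homogeneous)
```

Passing on samples only fails to find a counterexample; the algebraic argument is what establishes linearity for all vectors.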


Ex 3: Functions that are not linear transformations

(a) $f(x) = \sin x$

$\sin(x_1 + x_2) \neq \sin(x_1) + \sin(x_2)$ in general, e.g. $\sin(\frac{\pi}{2} + \frac{\pi}{3}) \neq \sin(\frac{\pi}{2}) + \sin(\frac{\pi}{3})$

(f(x) = sin x is not a linear transformation)

(b) $f(x) = x^2$

$(x_1 + x_2)^2 \neq x_1^2 + x_2^2$ in general, e.g. $(1 + 2)^2 \neq 1^2 + 2^2$

($f(x) = x^2$ is not a linear transformation)

(c) $f(x) = x + 1$

$f(x_1 + x_2) = x_1 + x_2 + 1$

$f(x_1) + f(x_2) = (x_1 + 1) + (x_2 + 1) = x_1 + x_2 + 2$

$f(x_1 + x_2) \neq f(x_1) + f(x_2)$ (f(x) = x + 1 is not a linear transformation, although it is a linear function)

In fact, $f(cx) \neq cf(x)$ whenever $c \neq 1$


Notes: Two uses of the term “linear”.

(1) $f(x) = x + 1$ is called a linear function because its graph is a line

(2) $f(x) = x + 1$ is not a linear transformation from a vector space R into R because it preserves neither vector addition nor scalar multiplication
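A single counterexample is enough to show that f(x) = x + 1 is not a linear transformation. The sketch below evaluates both defining properties, plus the necessary condition f(0) = 0, at concrete numbers; the specific values 2, 3, and 4 are arbitrary illustrative choices.

```python
def f(x):
    """The linear function f(x) = x + 1 (its graph is a line)."""
    return x + 1

x1, x2, c = 2, 3, 4

additivity_holds = f(x1 + x2) == f(x1) + f(x2)   # compares 6 with 7
homogeneity_holds = f(c * x1) == c * f(x1)       # compares 9 with 12
zero_maps_to_zero = f(0) == 0                    # f(0) is 1

print(additivity_holds, homogeneity_holds, zero_maps_to_zero)
```

All three checks fail, which matches note (2): the constant term 1 is what breaks both properties.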


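Zero transformation (零轉換):

$T: V \to W$, $\quad T(\mathbf{v}) = \mathbf{0}, \ \forall\, \mathbf{v} \in V$

Identity transformation (相等轉換):

$T: V \to V$, $\quad T(\mathbf{v}) = \mathbf{v}, \ \forall\, \mathbf{v} \in V$

Theorem 6.1: Properties of linear transformations

Let $T: V \to W$ be a linear transformation and $\mathbf{u}, \mathbf{v} \in V$

(1) $T(\mathbf{0}) = \mathbf{0}$ (T(cv) = cT(v) for c = 0)

(2) $T(-\mathbf{v}) = -T(\mathbf{v})$ (T(cv) = cT(v) for c = -1)

(3) $T(\mathbf{u} - \mathbf{v}) = T(\mathbf{u}) - T(\mathbf{v})$ (T(u+(-v)) = T(u)+T(-v) and property (2))

(4) If $\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$,

then $T(\mathbf{v}) = T(c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n) = c_1T(\mathbf{v}_1) + c_2T(\mathbf{v}_2) + \cdots + c_nT(\mathbf{v}_n)$

(Iteratively using T(u+v) = T(u)+T(v) and T(cv) = cT(v))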


Ex 4: Linear transformations and bases

Let $T: R^3 \to R^3$ be a linear transformation such that

$T(1, 0, 0) = (2, -1, 4)$

$T(0, 1, 0) = (1, 5, -2)$

$T(0, 0, 1) = (0, 3, 1)$

Find T(2, 3, -2)

Sol:

$(2, 3, -2) = 2(1, 0, 0) + 3(0, 1, 0) - 2(0, 0, 1)$

According to the fourth property of Theorem 6.1,

$T(c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n) = c_1T(\mathbf{v}_1) + c_2T(\mathbf{v}_2) + \cdots + c_nT(\mathbf{v}_n)$

$T(2, 3, -2) = 2T(1, 0, 0) + 3T(0, 1, 0) - 2T(0, 0, 1)$

$= 2(2, -1, 4) + 3(1, 5, -2) - 2(0, 3, 1)$

$= (7, 7, 0)$
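The computation in Ex 4 is a linear combination of the images of the basis vectors, which can be sketched as follows. The helper name `linear_combination` is an illustrative assumption; the images of the standard basis vectors are the ones given in the example.

```python
def linear_combination(coeffs, vectors):
    """Return c1*v1 + c2*v2 + ... + cn*vn as a tuple."""
    size = len(vectors[0])
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors))
                 for i in range(size))

# Images of the standard basis vectors e1, e2, e3 under T (from Ex 4)
images_of_basis = [(2, -1, 4), (1, 5, -2), (0, 3, 1)]

# (2, 3, -2) = 2*e1 + 3*e2 - 2*e3, so its coordinates are its own entries
v = (2, 3, -2)
result = linear_combination(v, images_of_basis)
print(result)
```

The same function gives T(v) for any v in R^3, which is why knowing T on a basis determines T everywhere.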


Ex 5: A linear transformation defined by a matrix

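The function $T: R^2 \to R^3$ is defined as

$T(\mathbf{v}) = A\mathbf{v} = \begin{bmatrix} 3 & 0 \\ 2 & 1 \\ -1 & -2 \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \end{bmatrix}$

(a) Find T(v), where v = (2, -1)

(b) Show that T is a linear transformation from $R^2$ into $R^3$

Sol:

(a) $\mathbf{v} = (2, -1)$ (an $R^2$ vector is mapped to an $R^3$ vector)

$T(\mathbf{v}) = A\mathbf{v} = \begin{bmatrix} 3 & 0 \\ 2 & 1 \\ -1 & -2 \end{bmatrix} \begin{bmatrix} 2 \\ -1 \end{bmatrix} = \begin{bmatrix} 6 \\ 3 \\ 0 \end{bmatrix}$

$\therefore T(2, -1) = (6, 3, 0)$

(b) $T(\mathbf{u} + \mathbf{v}) = A(\mathbf{u} + \mathbf{v}) = A\mathbf{u} + A\mathbf{v} = T(\mathbf{u}) + T(\mathbf{v})$ (vector addition)

$T(c\mathbf{u}) = A(c\mathbf{u}) = c(A\mathbf{u}) = cT(\mathbf{u})$ (scalar multiplication)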


Theorem 6.2: The linear transformation defined by a matrix

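Let A be an m×n matrix. The function T defined by

$T(\mathbf{v}) = A\mathbf{v}$

is a linear transformation from $R^n$ into $R^m$

Note:

$A\mathbf{v} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = \begin{bmatrix} a_{11}v_1 + a_{12}v_2 + \cdots + a_{1n}v_n \\ a_{21}v_1 + a_{22}v_2 + \cdots + a_{2n}v_n \\ \vdots \\ a_{m1}v_1 + a_{m2}v_2 + \cdots + a_{mn}v_n \end{bmatrix}$

(v is an $R^n$ vector and Av is an $R^m$ vector)

$T(\mathbf{v}) = A\mathbf{v}$, $\quad T: R^n \to R^m$

※ If T(v) can be represented by Av, then T is a linear transformation

※ If the size of A is m×n, then the domain of T is $R^n$ and the codomain of T is $R^m$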


Ex 7: Rotation in the plane

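Show that the L.T. $T: R^2 \to R^2$ given by the matrix

$A = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$

has the property that it rotates every vector in $R^2$ counterclockwise about the origin through the angle $\theta$

Sol:

$\mathbf{v} = (x, y) = (r\cos\alpha,\ r\sin\alpha)$ (Polar coordinates: every point on the xy-plane can be represented by a pair (r, α))

r: the length of v ($= \sqrt{x^2 + y^2}$)

α: the angle from the positive x-axis counterclockwise to the vector v

$T(\mathbf{v}) = A\mathbf{v} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} r\cos\alpha \\ r\sin\alpha \end{bmatrix}$

$= \begin{bmatrix} r\cos\theta\cos\alpha - r\sin\theta\sin\alpha \\ r\sin\theta\cos\alpha + r\cos\theta\sin\alpha \end{bmatrix} = \begin{bmatrix} r\cos(\theta + \alpha) \\ r\sin(\theta + \alpha) \end{bmatrix}$ (according to the addition formulas of trigonometric identities (三角函數合角公式))

r: remains the same, which means the length of T(v) equals the length of v

θ + α: the angle from the positive x-axis counterclockwise to the vector T(v)

Thus, T(v) is the vector that results from rotating the vector v counterclockwise through the angle θ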
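The two claims of Ex 7 (the length is preserved and the polar angle increases by θ) can be checked numerically. The sketch below uses floating-point arithmetic, so comparisons are made with a tolerance; the sample vector (3, 4) and the angle π/3 are arbitrary illustrative choices.

```python
import math

def rotate(v, theta):
    """Multiply v by the rotation matrix [[cos t, -sin t], [sin t, cos t]]."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

v = (3.0, 4.0)
theta = math.pi / 3
w = rotate(v, theta)

length_before = math.hypot(*v)          # r for v
length_after = math.hypot(*w)           # r for T(v)
angle_before = math.atan2(v[1], v[0])   # alpha
angle_after = math.atan2(w[1], w[0])    # should be theta + alpha

print(length_before, length_after, angle_after - angle_before)
```

Note that `math.atan2` returns angles in (-π, π], so for other sample vectors the difference of the two angles may need to be reduced modulo 2π before comparing it with θ.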


Ex 8: A projection in R3

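The linear transformation $T: R^3 \to R^3$ given by

$A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}$

is called a projection in $R^3$

If v is (x, y, z), $A\mathbf{v} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x \\ y \\ 0 \end{bmatrix}$

※ In other words, T maps every vector in $R^3$ to its orthogonal projection in the xy-plane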


Ex 9: The transpose function is a linear transformation from $M_{m \times n}$ into $M_{n \times m}$

$T(A) = A^T \quad (T: M_{m \times n} \to M_{n \times m})$

Show that T is a linear transformation

Sol:

$A, B \in M_{m \times n}$

$T(A + B) = (A + B)^T = A^T + B^T = T(A) + T(B)$

$T(cA) = (cA)^T = cA^T = cT(A)$

Therefore, T (the transpose function) is a linear transformation from $M_{m \times n}$ into $M_{n \times m}$


Keywords in Section 6.1:

function: 函數

domain: 定義域

codomain: 對應域

image of v under T: 在T映射下v的像

range of T: T的值域

preimage of w: w的反像

linear transformation: 線性轉換

linear operator: 線性運算子

zero transformation: 零轉換

identity transformation: 相等轉換


6.2 The Kernel and Range of a Linear Transformation

Kernel of a linear transformation T (線性轉換T的核空間):

Let $T: V \to W$ be a linear transformation. Then the set of all vectors v in V that satisfy $T(\mathbf{v}) = \mathbf{0}$ is called the kernel of T and is denoted by ker(T)

$\ker(T) = \{\mathbf{v} \mid T(\mathbf{v}) = \mathbf{0},\ \forall\, \mathbf{v} \in V\}$

※ For example, V is $R^3$, W is $R^3$, and T is the orthogonal projection of any vector (x, y, z) onto the xy-plane, i.e. T(x, y, z) = (x, y, 0)

※ Then the kernel of T is the set consisting of (0, 0, s), where s is a real number, i.e.

$\ker(T) = \{(0, 0, s) \mid s \text{ is a real number}\}$


Ex 1: Finding the kernel of a linear transformation

$T(A) = A^T \quad (T: M_{3 \times 2} \to M_{2 \times 3})$

Sol:

$\ker(T) = \left\{ \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ 0 & 0 \end{bmatrix} \right\}$

Ex 2: The kernel of the zero and identity transformations

(a) If T(v) = 0 (the zero transformation $T: V \to W$), then $\ker(T) = V$

(b) If T(v) = v (the identity transformation $T: V \to V$), then $\ker(T) = \{\mathbf{0}\}$


Ex 5: Finding the kernel of a linear transformation

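$T(\mathbf{x}) = A\mathbf{x} = \begin{bmatrix} 1 & -1 & -2 \\ -1 & 2 & 3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} \quad (T: R^3 \to R^2)$

ker(T) = ?

Sol:

$\ker(T) = \{(x_1, x_2, x_3) \mid T(x_1, x_2, x_3) = (0, 0), \text{ and } (x_1, x_2, x_3) \in R^3\}$

$T(x_1, x_2, x_3) = (0, 0)$

$\Rightarrow \begin{bmatrix} 1 & -1 & -2 \\ -1 & 2 & 3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$

$\left[\begin{array}{ccc|c} 1 & -1 & -2 & 0 \\ -1 & 2 & 3 & 0 \end{array}\right] \xrightarrow{\text{G.-J. E.}} \left[\begin{array}{ccc|c} 1 & 0 & -1 & 0 \\ 0 & 1 & 1 & 0 \end{array}\right]$

$\Rightarrow \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} t \\ -t \\ t \end{bmatrix} = t \begin{bmatrix} 1 \\ -1 \\ 1 \end{bmatrix}$

$\Rightarrow \ker(T) = \{t(1, -1, 1) \mid t \text{ is a real number}\} = \text{span}\{(1, -1, 1)\}$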


Theorem 6.3: The kernel is a subspace of V

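The kernel of a linear transformation $T: V \to W$ is a subspace of the domain V

Pf:

$T(\mathbf{0}) = \mathbf{0}$ (by Theorem 6.1) $\Rightarrow$ ker(T) is a nonempty subset of V

Let u and v be vectors in the kernel of T. Then, because T is a linear transformation,

$T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v}) = \mathbf{0} + \mathbf{0} = \mathbf{0}$ $\quad (\mathbf{u} \in \ker(T),\ \mathbf{v} \in \ker(T) \Rightarrow \mathbf{u} + \mathbf{v} \in \ker(T))$

$T(c\mathbf{u}) = cT(\mathbf{u}) = c\mathbf{0} = \mathbf{0}$ $\quad (\mathbf{u} \in \ker(T) \Rightarrow c\mathbf{u} \in \ker(T))$

Thus, ker(T) is a subspace of V (according to Theorem 4.5 that a nonempty subset of V is a subspace of V if it is closed under vector addition and scalar multiplication)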


Ex 6: Finding a basis for the kernel

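Let $T: R^5 \to R^4$ be defined by $T(\mathbf{x}) = A\mathbf{x}$, where x is in $R^5$ and

$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix}$

Find a basis for ker(T) as a subspace of $R^5$

Sol:

To find ker(T) means to find all x satisfying T(x) = Ax = 0. Thus we need to form the augmented matrix $[A \mid \mathbf{0}]$ first

$[A \mid \mathbf{0}] = \left[\begin{array}{ccccc|c} 1 & 2 & 0 & 1 & -1 & 0 \\ 2 & 1 & 3 & 1 & 0 & 0 \\ -1 & 0 & -2 & 0 & 1 & 0 \\ 0 & 0 & 0 & 2 & 8 & 0 \end{array}\right] \xrightarrow{\text{G.-J. E.}} \left[\begin{array}{ccccc|c} 1 & 0 & 2 & 0 & -1 & 0 \\ 0 & 1 & -1 & 0 & -2 & 0 \\ 0 & 0 & 0 & 1 & 4 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{array}\right]$

(free variables: $x_3 = s$, $x_5 = t$)

$\Rightarrow \mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} = \begin{bmatrix} -2s + t \\ s + 2t \\ s \\ -4t \\ t \end{bmatrix} = s \begin{bmatrix} -2 \\ 1 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} 1 \\ 2 \\ 0 \\ -4 \\ 1 \end{bmatrix}$

$B = \{(-2, 1, 1, 0, 0),\ (1, 2, 0, -4, 1)\}$: one basis for the kernel of T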
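The procedure used in Ex 5 and Ex 6 (reduce A by Gauss-Jordan elimination, then read one basis vector per free variable) can be sketched in code. This is a minimal illustration using exact rational arithmetic; the function names `rref` and `kernel_basis` are assumptions, not names from the text.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan elimination; returns (reduced matrix, pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        if r == len(m):
            break
        # look for a nonzero entry in column c at or below row r
        pivot_row = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot_row is None:
            continue  # column c has no leading 1: it is a free column
        m[r], m[pivot_row] = m[pivot_row], m[r]
        m[r] = [x / m[r][c] for x in m[r]]
        for i in range(len(m)):
            if i != r:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots

def kernel_basis(rows):
    """One basis vector of the solution space of Ax = 0 per free column."""
    m, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        vec = [Fraction(0)] * n
        vec[free] = Fraction(1)
        for row_index, p in enumerate(pivots):
            vec[p] = -m[row_index][free]
        basis.append(tuple(vec))
    return basis

A = [[1, 2, 0, 1, -1],
     [2, 1, 3, 1, 0],
     [-1, 0, -2, 0, 1],
     [0, 0, 0, 2, 8]]
basis = kernel_basis(A)
print(basis)
```

Setting one free variable to 1 and the others to 0 corresponds to choosing (s, t) = (1, 0) and (0, 1) in the solution above.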


Corollary to Theorem 6.3:

Let $T: R^n \to R^m$ be the linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$. Then the kernel of T is equal to the solution space of $A\mathbf{x} = \mathbf{0}$

$T(\mathbf{x}) = A\mathbf{x}$ (a linear transformation $T: R^n \to R^m$)

$\Rightarrow \ker(T) = NS(A) = \{\mathbf{x} \mid A\mathbf{x} = \mathbf{0},\ \forall\, \mathbf{x} \in R^n\}$ (subspace of $R^n$)

※ The kernel of T equals the nullspace of A (which is defined in Theorem 4.16 on p.239), and these two are both subspaces of $R^n$

※ So, the kernel of T is sometimes called the nullspace of T


Range of a linear transformation T (線性轉換T的值域):

Let $T: V \to W$ be a linear transformation. Then the set of all vectors w in W that are images of vectors in V is called the range of T and is denoted by range(T)

$\text{range}(T) = \{T(\mathbf{v}) \mid \forall\, \mathbf{v} \in V\}$

※ For the orthogonal projection of any vector (x, y, z) onto the xy-plane, i.e. T(x, y, z) = (x, y, 0)

※ The domain is $V = R^3$, the codomain is $W = R^3$, and the range is the xy-plane (a subspace of the codomain $R^3$)

※ Since T(0, 0, s) = (0, 0, 0) = 0, the kernel of T is the set consisting of (0, 0, s), where s is a real number


Theorem 6.4: The range of T is a subspace of W

The range of a linear transformation $T: V \to W$ is a subspace of W

Pf:

$T(\mathbf{0}) = \mathbf{0}$ (Theorem 6.1) $\Rightarrow$ range(T) is a nonempty subset of W

Let T(u) and T(v) be vectors in range(T). Because T is a linear transformation, we have

$T(\mathbf{u}) + T(\mathbf{v}) = T(\mathbf{u} + \mathbf{v}) \in \text{range}(T)$ (because $\mathbf{u} + \mathbf{v} \in V$)

$\Rightarrow$ the range of T is closed under vector addition, because $T(\mathbf{u}), T(\mathbf{v}), T(\mathbf{u} + \mathbf{v}) \in \text{range}(T)$

$cT(\mathbf{u}) = T(c\mathbf{u}) \in \text{range}(T)$ (because $c\mathbf{u} \in V$)

$\Rightarrow$ the range of T is closed under scalar multiplication, because $T(\mathbf{u})$ and $T(c\mathbf{u}) \in \text{range}(T)$

Thus, range(T) is a subspace of W (according to Theorem 4.5 that a nonempty subset of W is a subspace of W if it is closed under vector addition and scalar multiplication)


Notes:

$T: V \to W$ is a linear transformation

(1) ker(T) is a subspace of V (Theorem 6.3)

(2) range(T) is a subspace of W (Theorem 6.4)


Corollary to Theorem 6.4:

Let $T: R^n \to R^m$ be the linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$. The range of T is equal to the column space of A, i.e. range(T) = CS(A)

(1) According to the definition of the range of T(x) = Ax, the range of T consists of all vectors b satisfying Ax = b for some x, which is equivalent to finding all vectors b such that the system Ax = b is consistent

(2) Ax = b can be rewritten as

$A\mathbf{x} = x_1 \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix} + x_2 \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix} + \cdots + x_n \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix} = \mathbf{b}$

Therefore, the system Ax = b is consistent iff we can find $(x_1, x_2, \ldots, x_n)$ such that b is a linear combination of the column vectors of A, i.e. $\mathbf{b} \in CS(A)$

Thus, we can conclude that the range consists of all vectors b that are linear combinations of the column vectors of A, i.e. $\mathbf{b} \in CS(A)$. So, the column space of the matrix A is the same as the range of T, i.e. range(T) = CS(A)

Use our example to illustrate the corollary to Theorem 6.4:

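※ For the orthogonal projection of any vector (x, y, z) onto the xy-plane, i.e. T(x, y, z) = (x, y, 0)

※ According to the above analysis, we already know that the range of T is the xy-plane, i.e. range(T) = {(x, y, 0) | x and y are real numbers}

※ T can be defined by a matrix A as follows

$A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}$, such that $\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x \\ y \\ 0 \end{bmatrix}$

※ The column space of A is as follows, which is just the xy-plane

$CS(A) = x_1 \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + x_2 \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} = \begin{bmatrix} x_1 \\ x_2 \\ 0 \end{bmatrix}$, where $x_1, x_2 \in R$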


Ex 7: Finding a basis for the range of a linear transformation

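Let $T: R^5 \to R^4$ be defined by $T(\mathbf{x}) = A\mathbf{x}$, where x is in $R^5$ and

$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix}$

Find a basis for the range of T

Sol:

Since range(T) = CS(A), finding a basis for the range of T is equivalent to finding a basis for the column space of A

$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix} \xrightarrow{\text{G.-J. E.}} \begin{bmatrix} 1 & 0 & 2 & 0 & -1 \\ 0 & 1 & -1 & 0 & -2 \\ 0 & 0 & 0 & 1 & 4 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix} = B$

(the columns of A are denoted $\mathbf{c}_1, \ldots, \mathbf{c}_5$ and the columns of B are denoted $\mathbf{w}_1, \ldots, \mathbf{w}_5$)

$\mathbf{w}_1, \mathbf{w}_2$, and $\mathbf{w}_4$ are independent, so $\{\mathbf{w}_1, \mathbf{w}_2, \mathbf{w}_4\}$ can form a basis for CS(B)

Row operations do not affect the dependency among columns

$\Rightarrow \mathbf{c}_1, \mathbf{c}_2$, and $\mathbf{c}_4$ are independent, and thus $\{\mathbf{c}_1, \mathbf{c}_2, \mathbf{c}_4\}$ is a basis for CS(A)

That is, {(1, 2, -1, 0), (2, 1, 0, 0), (1, 1, 0, 2)} is a basis for the range of T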
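The pivot-column method of Ex 7 can be sketched as follows: find which columns receive a leading 1 during Gauss-Jordan elimination, then take the corresponding columns of the original matrix A (not of the reduced matrix). The function name `pivot_columns` is an illustrative assumption.

```python
from fractions import Fraction

def pivot_columns(rows):
    """Indices of the columns that contain a leading 1 after Gauss-Jordan elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        if r == len(m):
            break
        pivot_row = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot_row is None:
            continue  # no leading 1 in this column
        m[r], m[pivot_row] = m[pivot_row], m[r]
        m[r] = [x / m[r][c] for x in m[r]]
        for i in range(len(m)):
            if i != r:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return pivots

A = [[1, 2, 0, 1, -1],
     [2, 1, 3, 1, 0],
     [-1, 0, -2, 0, 1],
     [0, 0, 0, 2, 8]]

pivots = pivot_columns(A)                                   # 0-based column indices
range_basis = [tuple(row[c] for row in A) for c in pivots]  # columns of the ORIGINAL A
print(pivots, range_basis)
```

The indices are 0-based, so pivots 0, 1, 3 correspond to the columns c1, c2, c4 in the notation of the example.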


Rank of a linear transformation T:V→W (線性轉換T的秩):

rank(T) = the dimension of the range of T = dim(range(T))

According to the corollary to Thm. 6.4, range(T) = CS(A), so dim(range(T)) = dim(CS(A))

Nullity of a linear transformation T:V→W (線性轉換T的核次數):

nullity(T) = the dimension of the kernel of T = dim(ker(T))

According to the corollary to Thm. 6.3, ker(T) = NS(A), so dim(ker(T)) = dim(NS(A))

Note:

If $T: R^n \to R^m$ is a linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$, then

rank(T) = dim(range(T)) = dim(CS(A)) = rank(A)

nullity(T) = dim(ker(T)) = dim(NS(A)) = nullity(A)

※ The dimension of the row (or column) space of a matrix A is called the rank of A

※ The dimension of the nullspace of A ($NS(A) = \{\mathbf{x} \mid A\mathbf{x} = \mathbf{0}\}$) is called the nullity of A

Theorem 6.5: Sum of rank and nullity

Let T: V → W be a linear transformation from an n-dimensional vector space V (i.e. dim(domain of T) is n) into a vector space W. Then

rank(T) + nullity(T) = n

(i.e. dim(range of T) + dim(kernel of T) = dim(domain of T))

※ Intuitively, dim(range of T) would equal dim(domain of T) if no dimension were lost

※ But some dimensions of the domain of T are absorbed by the zero vector in W

※ So dim(range of T) is smaller than dim(domain of T) by the number of dimensions of the domain of T that are absorbed by the zero vector, which is exactly dim(kernel of T)

Pf:

Let T be represented by an m×n matrix A, and assume rank(A) = r

(1) rank(T) = dim(range of T) = dim(column space of A) = rank(A) = r

(2) nullity(T) = dim(kernel of T) = dim(null space of A) = n - r (according to Thm. 4.17, where rank(A) + nullity(A) = n)

$\Rightarrow$ rank(T) + nullity(T) = r + (n - r) = n

※ Here we only consider the case where T is represented by an m×n matrix A. In the next section, we will prove that any linear transformation from an n-dimensional space to an m-dimensional space can be represented by an m×n matrix


Ex 8: Finding the rank and nullity of a linear transformation

Find the rank and nullity of the linear transformation $T: R^3 \to R^3$ defined by

$A = \begin{bmatrix} 1 & 0 & -2 \\ 0 & 1 & 1 \\ 0 & 0 & 0 \end{bmatrix}$

Sol:

rank(T) = rank(A) = 2

nullity(T) = dim(domain of T) - rank(T) = 3 - 2 = 1

※ The rank is determined by the number of leading 1's, and the nullity by the number of free variables (columns without leading 1's)


Ex 9: Finding the rank and nullity of a linear transformation

Let $T: R^5 \to R^7$ be a linear transformation

(a) Find the dimension of the kernel of T if the dimension of the range of T is 2

(b) Find the rank of T if the nullity of T is 4

(c) Find the rank of T if $\ker(T) = \{\mathbf{0}\}$

Sol:

(a) dim(domain of T) = n = 5

dim(kernel of T) = n - dim(range of T) = 5 - 2 = 3

(b) rank(T) = n - nullity(T) = 5 - 4 = 1

(c) rank(T) = n - nullity(T) = 5 - 0 = 5


One-to-one (一對一):

A function $T: V \to W$ is called one-to-one if the preimage of every w in the range consists of a single vector. This is equivalent to saying that T is one-to-one iff for all u and v in V, $T(\mathbf{u}) = T(\mathbf{v})$ implies that $\mathbf{u} = \mathbf{v}$

(Figure: a one-to-one mapping and a mapping that is not one-to-one)


Theorem 6.6: One-to-one linear transformation

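Let $T: V \to W$ be a linear transformation. Then

T is one-to-one iff $\ker(T) = \{\mathbf{0}\}$

Pf:

($\Rightarrow$) Suppose T is one-to-one

Then $T(\mathbf{v}) = \mathbf{0}$ can have only one solution: $\mathbf{v} = \mathbf{0}$ (due to the fact that T(0) = 0 in Thm. 6.1)

i.e. $\ker(T) = \{\mathbf{0}\}$

($\Leftarrow$) Suppose $\ker(T) = \{\mathbf{0}\}$ and $T(\mathbf{u}) = T(\mathbf{v})$

$T(\mathbf{u} - \mathbf{v}) = T(\mathbf{u}) - T(\mathbf{v}) = \mathbf{0}$ (T is a linear transformation, see Property 3 in Thm. 6.1)

$\Rightarrow \mathbf{u} - \mathbf{v} \in \ker(T) \Rightarrow \mathbf{u} - \mathbf{v} = \mathbf{0} \Rightarrow \mathbf{u} = \mathbf{v}$

$\Rightarrow$ T is one-to-one (because $T(\mathbf{u}) = T(\mathbf{v})$ implies that $\mathbf{u} = \mathbf{v}$)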


Ex 10: One-to-one and not one-to-one linear transformation

(a) The linear transformation $T: M_{m \times n} \to M_{n \times m}$ given by $T(A) = A^T$ is one-to-one because its kernel consists of only the m×n zero matrix

(b) The zero transformation $T: R^3 \to R^3$ is not one-to-one because its kernel is all of $R^3$


Onto (映成):

A function $T: V \to W$ is said to be onto if every element in W has a preimage in V

(T is onto W when W is equal to the range of T)

Theorem 6.7: Onto linear transformations

Let T: V → W be a linear transformation, where W is finite dimensional. Then T is onto if and only if the rank of T is equal to the dimension of W

rank(T) = dim(range of T) = dim(W)

(the first equality is the definition of the rank of a linear transformation; the second equality is the definition of onto linear transformations)


Theorem 6.8: One-to-one and onto linear transformations

Let $T: V \to W$ be a linear transformation with vector spaces V and W both of dimension n. Then T is one-to-one if and only if it is onto

Pf:

($\Rightarrow$) If T is one-to-one, then $\ker(T) = \{\mathbf{0}\}$ and dim(ker(T)) = 0 (according to the definition of dimension (on p.227), if a vector space consists of the zero vector alone, its dimension is defined as zero)

By Thm. 6.5, dim(range(T)) = n - dim(ker(T)) = n = dim(W)

Consequently, T is onto

($\Leftarrow$) If T is onto, then dim(range of T) = dim(W) = n

By Thm. 6.5, dim(ker(T)) = n - dim(range of T) = n - n = 0 $\Rightarrow \ker(T) = \{\mathbf{0}\}$

Therefore, T is one-to-one


Ex 11:

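The linear transformation $T: R^n \to R^m$ is given by $T(\mathbf{x}) = A\mathbf{x}$. Find the nullity and rank of T and determine whether T is one-to-one, onto, or neither

(a) $A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & 0 & 1 \end{bmatrix}$ (b) $A = \begin{bmatrix} 1 & 2 \\ 0 & 1 \\ 0 & 0 \end{bmatrix}$ (c) $A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & -1 \end{bmatrix}$ (d) $A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & 0 & 0 \end{bmatrix}$

Sol:

| T: R^n → R^m | (1) dim(domain of T) = dim(R^n) = n | (2) rank(T) = dim(range of T) = # of leading 1's | nullity(T) = dim(ker(T)) = (1) - (2) | 1-1 (if nullity(T) = dim(ker(T)) = 0) | onto (if rank(T) = dim(R^m) = m) |
|---|---|---|---|---|---|
| (a) T: R^3 → R^3 | 3 | 3 | 0 | Yes | Yes |
| (b) T: R^2 → R^3 | 2 | 2 | 0 | Yes | No |
| (c) T: R^3 → R^2 | 3 | 2 | 1 | No | Yes |
| (d) T: R^3 → R^3 | 3 | 2 | 1 | No | No |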
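The classification in Ex 11 follows mechanically from the rank: nullity = n - rank (Theorem 6.5), one-to-one iff nullity = 0 (Theorem 6.6), and onto iff rank = m (Theorem 6.7). A sketch under these rules is given below; the function names `rank` and `classify` are illustrative, and the sign of the last entry of matrix (c) is an assumption that does not affect the rank.

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots found by forward elimination (exact arithmetic)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        if r == len(m):
            break
        pivot_row = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot_row is None:
            continue
        m[r], m[pivot_row] = m[pivot_row], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

def classify(rows):
    """Rank, nullity, one-to-one and onto for T(x) = Ax with A of size m x n."""
    m_dim, n_dim = len(rows), len(rows[0])   # T: R^n -> R^m
    rk = rank(rows)
    nullity = n_dim - rk                     # Theorem 6.5
    return {"rank": rk, "nullity": nullity,
            "one_to_one": nullity == 0,      # Theorem 6.6
            "onto": rk == m_dim}             # Theorem 6.7

examples = {
    "a": [[1, 2, 0], [0, 1, 1], [0, 0, 1]],
    "b": [[1, 2], [0, 1], [0, 0]],
    "c": [[1, 2, 0], [0, 1, -1]],
    "d": [[1, 2, 0], [0, 1, 1], [0, 0, 0]],
}
results = {name: classify(A) for name, A in examples.items()}
for name, info in results.items():
    print(name, info)
```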


Isomorphism (同構):

A linear transformation $T: V \to W$ that is one-to-one and onto is called an isomorphism. Moreover, if V and W are vector spaces such that there exists an isomorphism from V to W, then V and W are said to be isomorphic to each other

Theorem 6.9: Isomorphic spaces (同構空間) and dimension

Two finite-dimensional vector spaces V and W are isomorphic if and only if they are of the same dimension

Pf:

($\Rightarrow$) Assume that V is isomorphic to W, where V has dimension n

$\Rightarrow$ There exists a L.T. $T: V \to W$ that is one-to-one and onto

T is one-to-one $\Rightarrow$ dim(ker(T)) = 0

$\Rightarrow$ dim(range of T) = dim(domain of T) - dim(ker(T)) = n - 0 = n (since dim(V) = n)

T is onto $\Rightarrow$ dim(range of T) = dim(W) = n

Thus dim(V) = dim(W) = n

($\Leftarrow$) Assume that V and W both have dimension n

Let $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ be a basis of V and $B' = \{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ be a basis of W

Then an arbitrary vector in V can be represented as

$\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$

and you can define a L.T. $T: V \to W$ as follows

$\mathbf{w} = T(\mathbf{v}) = c_1\mathbf{w}_1 + c_2\mathbf{w}_2 + \cdots + c_n\mathbf{w}_n$ (by defining $T(\mathbf{v}_i) = \mathbf{w}_i$)

Since B' is a basis for W, $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ is linearly independent, and the only solution for w = 0 is $c_1 = c_2 = \cdots = c_n = 0$

So with w = 0, the corresponding v is 0, i.e., ker(T) = {0} $\Rightarrow$ T is one-to-one

By Theorem 6.5, we can derive that dim(range of T) = dim(domain of T) - dim(ker(T)) = n - 0 = n = dim(W) $\Rightarrow$ T is onto

Since this linear transformation is both one-to-one and onto, V and W are isomorphic


Note

Theorem 6.9 tells us that every vector space with dimension

n is isomorphic to Rn

Ex 12: (Isomorphic vector spaces)

The following vector spaces are isomorphic to each other

(a) $R^4$ = 4-space

(b) $M_{4 \times 1}$ = space of all 4×1 matrices

(c) $M_{2 \times 2}$ = space of all 2×2 matrices

(d) $P_3(x)$ = space of all polynomials of degree 3 or less

(e) $V = \{(x_1, x_2, x_3, x_4, 0) \mid x_i \text{ are real numbers}\}$ (a subspace of $R^5$)


Keywords in Section 6.2:

kernel of a linear transformation T: 線性轉換T的核空間

range of a linear transformation T: 線性轉換T的值域

rank of a linear transformation T: 線性轉換T的秩

nullity of a linear transformation T: 線性轉換T的核次數

one-to-one: 一對一

onto: 映成

Isomorphism (one-to-one and onto): 同構

isomorphic space: 同構空間


6.3 Matrices for Linear Transformations

Two representations of the linear transformation T:R3→R3 :

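(1) $T(x_1, x_2, x_3) = (2x_1 + x_2 - x_3,\ -x_1 + 3x_2 - 2x_3,\ 3x_2 + 4x_3)$

(2) $T(\mathbf{x}) = A\mathbf{x} = \begin{bmatrix} 2 & 1 & -1 \\ -1 & 3 & -2 \\ 0 & 3 & 4 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$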

Three reasons for matrix representation of a linear transformation:

It is simpler to write

It is simpler to read

It is more easily adapted for computer use


Theorem 6.10: Standard matrix for a linear transformation

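Let $T: R^n \to R^m$ be a linear transformation such that

$T(\mathbf{e}_1) = \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix},\ T(\mathbf{e}_2) = \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix},\ \ldots,\ T(\mathbf{e}_n) = \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix},$

where $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\}$ is the standard basis for $R^n$. Then the m×n matrix whose n columns correspond to $T(\mathbf{e}_i)$,

$A = [T(\mathbf{e}_1) \mid T(\mathbf{e}_2) \mid \cdots \mid T(\mathbf{e}_n)] = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix},$

is such that $T(\mathbf{v}) = A\mathbf{v}$ for every v in $R^n$. A is called the standard matrix for T (T的標準矩陣)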


Pf:

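$\mathbf{v} = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = v_1 \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix} + v_2 \begin{bmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{bmatrix} + \cdots + v_n \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix} = v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + \cdots + v_n\mathbf{e}_n$

T is a linear transformation $\Rightarrow T(\mathbf{v}) = T(v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + \cdots + v_n\mathbf{e}_n)$

$= T(v_1\mathbf{e}_1) + T(v_2\mathbf{e}_2) + \cdots + T(v_n\mathbf{e}_n)$

$= v_1T(\mathbf{e}_1) + v_2T(\mathbf{e}_2) + \cdots + v_nT(\mathbf{e}_n)$

If $A = [T(\mathbf{e}_1) \mid T(\mathbf{e}_2) \mid \cdots \mid T(\mathbf{e}_n)]$, then

$A\mathbf{v} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = \begin{bmatrix} a_{11}v_1 + a_{12}v_2 + \cdots + a_{1n}v_n \\ a_{21}v_1 + a_{22}v_2 + \cdots + a_{2n}v_n \\ \vdots \\ a_{m1}v_1 + a_{m2}v_2 + \cdots + a_{mn}v_n \end{bmatrix}$

$= v_1 \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix} + v_2 \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix} + \cdots + v_n \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}$

$= v_1T(\mathbf{e}_1) + v_2T(\mathbf{e}_2) + \cdots + v_nT(\mathbf{e}_n)$

Therefore, $T(\mathbf{v}) = A\mathbf{v}$ for each v in $R^n$

Note

Theorem 6.10 tells us that once we know the image of every vector in the standard basis (that is, $T(\mathbf{e}_i)$), we can use the properties of linear transformations to determine T(v) for any v in $R^n$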


Ex 1: Finding the standard matrix of a linear transformation

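Find the standard matrix for the L.T. $T: R^3 \to R^2$ defined by

$T(x, y, z) = (x - 2y,\ 2x + y)$

Sol:

Vector notation:

$T(\mathbf{e}_1) = T(1, 0, 0) = (1, 2)$

$T(\mathbf{e}_2) = T(0, 1, 0) = (-2, 1)$

$T(\mathbf{e}_3) = T(0, 0, 1) = (0, 0)$

Matrix notation:

$T(\mathbf{e}_1) = T\left(\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}\right) = \begin{bmatrix} 1 \\ 2 \end{bmatrix},\quad T(\mathbf{e}_2) = T\left(\begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}\right) = \begin{bmatrix} -2 \\ 1 \end{bmatrix},\quad T(\mathbf{e}_3) = T\left(\begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}\right) = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$

$A = [T(\mathbf{e}_1) \mid T(\mathbf{e}_2) \mid T(\mathbf{e}_3)] = \begin{bmatrix} 1 & -2 & 0 \\ 2 & 1 & 0 \end{bmatrix}$

Check:

$A \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 1 & -2 & 0 \\ 2 & 1 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x - 2y \\ 2x + y \end{bmatrix}$

i.e., $T(x, y, z) = (x - 2y,\ 2x + y)$

Note: a more direct way to construct the standard matrix

$A = \begin{bmatrix} 1 & -2 & 0 \\ 2 & 1 & 0 \end{bmatrix} \begin{matrix} \leftarrow 1x - 2y + 0z \\ \leftarrow 2x + 1y + 0z \end{matrix}$

※ The first (second) row actually represents the linear transformation function to generate the first (second) component of the target vector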
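Theorem 6.10 translates directly into a construction: apply T to each standard basis vector and use the results as columns. The sketch below does this for the map of Ex 1; the helper names `standard_matrix` and `mat_vec` are illustrative assumptions.

```python
def T(v):
    """The map of Ex 1: T(x, y, z) = (x - 2y, 2x + y)."""
    x, y, z = v
    return (x - 2 * y, 2 * x + y)

def standard_matrix(transform, n):
    """Matrix whose j-th column is transform(e_j); returned as a list of rows."""
    columns = [transform(tuple(1 if i == j else 0 for i in range(n)))
               for j in range(n)]
    return [list(row) for row in zip(*columns)]   # transpose columns into rows

def mat_vec(A, v):
    """Matrix-vector product Av."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in A)

A = standard_matrix(T, 3)
sample = (3, 4, 5)                      # arbitrary test vector
print(A, mat_vec(A, sample), T(sample))
```

This construction only reproduces T when T is actually linear; for a nonlinear function the resulting matrix would agree with the function on the basis vectors but not in general.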


Ex 2: Finding the standard matrix of a linear transformation

The linear transformation $T: R^2 \to R^2$ is given by projecting each point in $R^2$ onto the x-axis. Find the standard matrix for T

Sol:

$T(x, y) = (x, 0)$

$A = [T(\mathbf{e}_1) \mid T(\mathbf{e}_2)] = [T(1, 0) \mid T(0, 1)] = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}$

Notes:

(1) The standard matrix for the zero transformation from $R^n$ into $R^m$ is the m×n zero matrix

(2) The standard matrix for the identity transformation from $R^n$ into $R^n$ is the n×n identity matrix $I_n$


Composition of $T_1: R^n \to R^m$ with $T_2: R^m \to R^p$:

$T(\mathbf{v}) = T_2(T_1(\mathbf{v})), \quad \mathbf{v} \in R^n$

This composition is denoted by $T = T_2 \circ T_1$

Theorem 6.11: Composition of linear transformations (線性轉換的合成)

Let $T_1: R^n \to R^m$ and $T_2: R^m \to R^p$ be linear transformations with standard matrices $A_1$ and $A_2$. Then

(1) The composition $T: R^n \to R^p$, defined by $T(\mathbf{v}) = T_2(T_1(\mathbf{v}))$, is still a linear transformation

(2) The standard matrix A for T is given by the matrix product $A = A_2A_1$


Pf:

(1) (T is a linear transformation)

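Let u and v be vectors in $R^n$ and let c be any scalar. Then

$T(\mathbf{u} + \mathbf{v}) = T_2(T_1(\mathbf{u} + \mathbf{v})) = T_2(T_1(\mathbf{u}) + T_1(\mathbf{v})) = T_2(T_1(\mathbf{u})) + T_2(T_1(\mathbf{v})) = T(\mathbf{u}) + T(\mathbf{v})$

$T(c\mathbf{v}) = T_2(T_1(c\mathbf{v})) = T_2(cT_1(\mathbf{v})) = cT_2(T_1(\mathbf{v})) = cT(\mathbf{v})$

(2) ($A_2A_1$ is the standard matrix for T)

$T(\mathbf{v}) = T_2(T_1(\mathbf{v})) = T_2(A_1\mathbf{v}) = A_2A_1\mathbf{v} = (A_2A_1)\mathbf{v}$

Note:

$T_1 \circ T_2 \neq T_2 \circ T_1$ in general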


Ex 3: The standard matrix of a composition

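Let $T_1$ and $T_2$ be linear transformations from $R^3$ into $R^3$ s.t.

$T_1(x, y, z) = (2x + y,\ 0,\ x + z)$

$T_2(x, y, z) = (x - y,\ z,\ y)$

Find the standard matrices for the compositions $T = T_2 \circ T_1$ and $T' = T_1 \circ T_2$

Sol:

$A_1 = \begin{bmatrix} 2 & 1 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 1 \end{bmatrix}$ (standard matrix for $T_1$)

$A_2 = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{bmatrix}$ (standard matrix for $T_2$)

The standard matrix for $T = T_2 \circ T_1$

$A = A_2A_1 = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} 2 & 1 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 2 & 1 & 0 \\ 1 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}$

The standard matrix for $T' = T_1 \circ T_2$

$A' = A_1A_2 = \begin{bmatrix} 2 & 1 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & -1 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{bmatrix} = \begin{bmatrix} 2 & -2 & 1 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}$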
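The two products in Ex 3 can be reproduced, and compared against applying the functions one after the other, with a small script. The helper names `mat_mul` and `mat_vec` and the test vector (1, 2, 3) are illustrative assumptions.

```python
def mat_mul(A, B):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_vec(A, v):
    """Matrix-vector product Av."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in A)

def T1(v):
    x, y, z = v
    return (2 * x + y, 0, x + z)

def T2(v):
    x, y, z = v
    return (x - y, z, y)

A1 = [[2, 1, 0], [0, 0, 0], [1, 0, 1]]     # standard matrix for T1
A2 = [[1, -1, 0], [0, 0, 1], [0, 1, 0]]    # standard matrix for T2

A = mat_mul(A2, A1)          # standard matrix for T2 o T1 (apply T1 first)
A_prime = mat_mul(A1, A2)    # standard matrix for T1 o T2 (apply T2 first)

v = (1, 2, 3)                # arbitrary test vector
print(A, A_prime, mat_vec(A, v), T2(T1(v)))
```

The order of the factors mirrors the order of application: the matrix of the transformation applied first stands on the right.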


Inverse linear transformation (反線性轉換):

If $T_1: R^n \to R^n$ and $T_2: R^n \to R^n$ are L.T. s.t. for every v in $R^n$

$T_2(T_1(\mathbf{v})) = \mathbf{v}$ and $T_1(T_2(\mathbf{v})) = \mathbf{v}$

Then $T_2$ is called the inverse of $T_1$ and $T_1$ is said to be invertible

Note:

If the transformation T is invertible, then the inverse is unique and denoted by $T^{-1}$


Theorem 6.12: Existence of an inverse transformation

Let $T: R^n \to R^n$ be a linear transformation with standard matrix A. Then the following conditions are equivalent

(1) T is invertible

(2) T is an isomorphism

(3) A is invertible

※ For (2) $\Rightarrow$ (1), you can imagine that since T is one-to-one and onto, for every w in the codomain of T, there is only one preimage v, which implies that $T^{-1}(\mathbf{w}) = \mathbf{v}$ can be a L.T. and well-defined, so we can infer that T is invertible

※ On the contrary, if there are many preimages for each w, it is impossible to find a L.T. to represent $T^{-1}$ (because for a L.T., each input has exactly one output), so T cannot be invertible

Note:

If T is invertible with standard matrix A, then the standard matrix for $T^{-1}$ is $A^{-1}$


Ex 4: Finding the inverse of a linear transformation

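The linear transformation $T: R^3 \to R^3$ is defined by

$T(x_1, x_2, x_3) = (2x_1 + 3x_2 + x_3,\ 3x_1 + 3x_2 + x_3,\ 2x_1 + 4x_2 + x_3)$

Show that T is invertible, and find its inverse

Sol:

The standard matrix for T

$A = \begin{bmatrix} 2 & 3 & 1 \\ 3 & 3 & 1 \\ 2 & 4 & 1 \end{bmatrix} \begin{matrix} \leftarrow 2x_1 + 3x_2 + x_3 \\ \leftarrow 3x_1 + 3x_2 + x_3 \\ \leftarrow 2x_1 + 4x_2 + x_3 \end{matrix}$

$[A \mid I_3] = \left[\begin{array}{ccc|ccc} 2 & 3 & 1 & 1 & 0 & 0 \\ 3 & 3 & 1 & 0 & 1 & 0 \\ 2 & 4 & 1 & 0 & 0 & 1 \end{array}\right] \xrightarrow{\text{G.-J. E.}} \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & -1 & 1 & 0 \\ 0 & 1 & 0 & -1 & 0 & 1 \\ 0 & 0 & 1 & 6 & -2 & -3 \end{array}\right] = [I \mid A^{-1}]$

Therefore T is invertible and the standard matrix for $T^{-1}$ is $A^{-1}$

$A^{-1} = \begin{bmatrix} -1 & 1 & 0 \\ -1 & 0 & 1 \\ 6 & -2 & -3 \end{bmatrix}$

$T^{-1}(\mathbf{v}) = A^{-1}\mathbf{v} = \begin{bmatrix} -1 & 1 & 0 \\ -1 & 0 & 1 \\ 6 & -2 & -3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} -x_1 + x_2 \\ -x_1 + x_3 \\ 6x_1 - 2x_2 - 3x_3 \end{bmatrix}$

In other words,

$T^{-1}(x_1, x_2, x_3) = (-x_1 + x_2,\ -x_1 + x_3,\ 6x_1 - 2x_2 - 3x_3)$

※ Check: $T^{-1}(T(2, 3, 4)) = T^{-1}(17, 19, 20) = (2, 3, 4)$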
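The Gauss-Jordan step on the augmented matrix [A | I] can be sketched in code with exact rational arithmetic. The function name `inverse` is an illustrative assumption; it raises an error when no pivot can be found, which corresponds to A (and hence T) not being invertible.

```python
from fractions import Fraction

def inverse(rows):
    """Invert a square matrix by Gauss-Jordan elimination on [A | I]."""
    n = len(rows)
    m = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(rows)]
    for c in range(n):
        pivot_row = next((i for i in range(c, n) if m[i][c] != 0), None)
        if pivot_row is None:
            raise ValueError("matrix is not invertible")
        m[c], m[pivot_row] = m[pivot_row], m[c]
        m[c] = [x / m[c][c] for x in m[c]]
        for i in range(n):
            if i != c:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[c])]
    return [row[n:] for row in m]   # right half is the inverse

def mat_vec(A, v):
    """Matrix-vector product Av."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in A)

A = [[2, 3, 1], [3, 3, 1], [2, 4, 1]]
A_inv = inverse(A)

image = mat_vec(A, (2, 3, 4))        # T(2, 3, 4)
recovered = mat_vec(A_inv, image)    # T^{-1}(T(2, 3, 4))
print(A_inv, image, recovered)
```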


The matrix of T relative to the bases B and B‘:

$T: V \to W$ (a linear transformation)

$B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ (a nonstandard basis for V)

The coordinate matrix of any v relative to B is denoted by $[\mathbf{v}]_B$:

if v can be represented as $c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$, then $[\mathbf{v}]_B = \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{bmatrix}$

A matrix A can represent T if the result of A multiplied by the coordinate matrix of v relative to B is the coordinate matrix of T(v) relative to B', where B' is a basis for W. That is,

$[T(\mathbf{v})]_{B'} = A[\mathbf{v}]_B,$

where A is called the matrix of T relative to the bases B and B' (T對應於基底B到B'的矩陣)


Transformation matrix for nonstandard bases (the

generalization of Theorem 6.10, in which standard bases are

considered) :

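Let V and W be finite-dimensional vector spaces with bases B and B', respectively, where $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$

If $T: V \to W$ is a linear transformation s.t.

$[T(\mathbf{v}_1)]_{B'} = \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix},\ [T(\mathbf{v}_2)]_{B'} = \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix},\ \ldots,\ [T(\mathbf{v}_n)]_{B'} = \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}$

then the m×n matrix whose n columns correspond to $[T(\mathbf{v}_i)]_{B'}$,

$A = \big[[T(\mathbf{v}_1)]_{B'} \mid [T(\mathbf{v}_2)]_{B'} \mid \cdots \mid [T(\mathbf{v}_n)]_{B'}\big] = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$

is such that $[T(\mathbf{v})]_{B'} = A[\mathbf{v}]_B$ for every v in V

※ The above result states that the coordinate matrix of T(v) relative to the basis B' equals the product of A defined above and the coordinate matrix of v relative to the basis B.

※ Comparing with the result in Thm. 6.10 (T(v) = Av), we can infer that the linear transformation and the basis change can be achieved in one step through multiplying by the matrix A defined above (see the figure on slide 6.74 for illustration)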


Ex 5: Finding a matrix relative to nonstandard bases

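Let $T: R^2 \to R^2$ be a linear transformation defined by

$T(x_1, x_2) = (x_1 + x_2,\ 2x_1 - x_2)$

Find the matrix of T relative to the bases $B = \{(1, 2), (-1, 1)\}$ and $B' = \{(1, 0), (0, 1)\}$

Sol:

$T(1, 2) = (3, 0) = 3(1, 0) + 0(0, 1)$

$T(-1, 1) = (0, -3) = 0(1, 0) - 3(0, 1)$

$[T(1, 2)]_{B'} = \begin{bmatrix} 3 \\ 0 \end{bmatrix},\quad [T(-1, 1)]_{B'} = \begin{bmatrix} 0 \\ -3 \end{bmatrix}$

the matrix for T relative to B and B'

$A = \big[[T(1, 2)]_{B'} \mid [T(-1, 1)]_{B'}\big] = \begin{bmatrix} 3 & 0 \\ 0 & -3 \end{bmatrix}$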


Ex 6:

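For the L.T. $T: R^2 \to R^2$ given in Example 5, use the matrix A to find T(v), where v = (2, 1)

Sol:

$\mathbf{v} = (2, 1) = 1(1, 2) - 1(-1, 1)$ (with $B = \{(1, 2), (-1, 1)\}$)

$\Rightarrow [\mathbf{v}]_B = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$

$\Rightarrow [T(\mathbf{v})]_{B'} = A[\mathbf{v}]_B = \begin{bmatrix} 3 & 0 \\ 0 & -3 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 3 \\ 3 \end{bmatrix}$

$\Rightarrow T(\mathbf{v}) = 3(1, 0) + 3(0, 1) = (3, 3)$ (with $B' = \{(1, 0), (0, 1)\}$)

Check:

$T(2, 1) = (2 + 1,\ 2(2) - 1) = (3, 3)$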
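Examples 5 and 6 can be reproduced step by step: build A column by column from the B'-coordinates of T applied to the vectors of B, find the B-coordinates of v, and multiply. The helper `coords_2d` (Cramer's rule for a 2-vector basis) is an illustrative assumption and only covers the 2×2 case.

```python
from fractions import Fraction

def T(v):
    """The map of Example 5: T(x1, x2) = (x1 + x2, 2*x1 - x2)."""
    x1, x2 = v
    return (x1 + x2, 2 * x1 - x2)

def coords_2d(basis, v):
    """Coordinates (c1, c2) with c1*b1 + c2*b2 = v, by Cramer's rule."""
    (a, c), (b, d) = basis               # b1 = (a, c), b2 = (b, d)
    det = Fraction(a * d - b * c)
    return ((v[0] * d - b * v[1]) / det, (a * v[1] - v[0] * c) / det)

B = [(1, 2), (-1, 1)]
B_prime = [(1, 0), (0, 1)]

# Columns of A are the B'-coordinates of T(b) for each b in B
columns = [coords_2d(B_prime, T(b)) for b in B]
A = [[columns[0][0], columns[1][0]],
     [columns[0][1], columns[1][1]]]

v = (2, 1)
v_B = coords_2d(B, v)                                  # [v]_B
Tv_Bp = (A[0][0] * v_B[0] + A[0][1] * v_B[1],
         A[1][0] * v_B[0] + A[1][1] * v_B[1])          # [T(v)]_B' = A [v]_B
print(A, v_B, Tv_Bp, T(v))
```

Because B' is the standard basis here, the coordinates of T(v) relative to B' coincide with T(v) itself.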


Notes:

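(1) In the special case where V = W (i.e., $T: V \to V$) and B = B', the matrix A is called the matrix of T relative to the basis B (T 對應於基底B的矩陣)

(2) If $T: V \to V$ is the identity transformation and $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is a basis for V, then the matrix of T relative to the basis B is

$A = \big[[T(\mathbf{v}_1)]_B \mid [T(\mathbf{v}_2)]_B \mid \cdots \mid [T(\mathbf{v}_n)]_B\big] = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix} = I_n$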


Keywords in Section 6.3:

standard matrix for T: T 的標準矩陣

composition of linear transformations: 線性轉換的合成

inverse linear transformation: 反線性轉換

matrix of T relative to the bases B and B' : T對應於基底B到

B'的矩陣

matrix of T relative to the basis B: T對應於基底B的矩陣


6.4 Transition Matrices and Similarity

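$T: V \to V$ (a linear transformation)

$B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ (a basis of V)

$B' = \{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ (a basis of V)

$A = \big[[T(\mathbf{v}_1)]_B \mid [T(\mathbf{v}_2)]_B \mid \cdots \mid [T(\mathbf{v}_n)]_B\big]$ (matrix of T relative to B) (T相對於B的矩陣)

$A' = \big[[T(\mathbf{w}_1)]_{B'} \mid [T(\mathbf{w}_2)]_{B'} \mid \cdots \mid [T(\mathbf{w}_n)]_{B'}\big]$ (matrix of T relative to B') (T相對於B'的矩陣)

$\Rightarrow [T(\mathbf{v})]_B = A[\mathbf{v}]_B$, and $[T(\mathbf{v})]_{B'} = A'[\mathbf{v}]_{B'}$

$P = \big[[\mathbf{w}_1]_B \mid [\mathbf{w}_2]_B \mid \cdots \mid [\mathbf{w}_n]_B\big]$ (transition matrix from B' to B) (從B'到B的轉移矩陣)

$P^{-1} = \big[[\mathbf{v}_1]_{B'} \mid [\mathbf{v}_2]_{B'} \mid \cdots \mid [\mathbf{v}_n]_{B'}\big]$ (transition matrix from B to B') (從B到B'的轉移矩陣)

(from the definition of the transition matrix on p.254 in the textbook or on Slides 4.108 and 4.109 in the lecture notes)

$\Rightarrow [\mathbf{v}]_B = P[\mathbf{v}]_{B'}$, and $[\mathbf{v}]_{B'} = P^{-1}[\mathbf{v}]_B$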


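Two ways to get from $[\mathbf{v}]_{B'}$ to $[T(\mathbf{v})]_{B'}$:

(1) (direct): $A'[\mathbf{v}]_{B'} = [T(\mathbf{v})]_{B'}$

(2) (indirect): $P^{-1}AP[\mathbf{v}]_{B'} = [T(\mathbf{v})]_{B'}$ $\Rightarrow A' = P^{-1}AP$

(Figure: diagram of the direct and indirect routes)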

6.74

Ex 1: (Finding a matrix for a linear transformation)

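Find the matrix A' for $T: R^2 \to R^2$

$T(x_1, x_2) = (2x_1 - 2x_2,\ -x_1 + 3x_2)$

relative to the basis $B' = \{(1, 0), (1, 1)\}$

Sol:

(1) $A' = \big[[T(1, 0)]_{B'} \mid [T(1, 1)]_{B'}\big]$

$T(1, 0) = (2, -1) = 3(1, 0) - 1(1, 1) \Rightarrow [T(1, 0)]_{B'} = \begin{bmatrix} 3 \\ -1 \end{bmatrix}$

$T(1, 1) = (0, 2) = -2(1, 0) + 2(1, 1) \Rightarrow [T(1, 1)]_{B'} = \begin{bmatrix} -2 \\ 2 \end{bmatrix}$

$\Rightarrow A' = \big[[T(1, 0)]_{B'} \mid [T(1, 1)]_{B'}\big] = \begin{bmatrix} 3 & -2 \\ -1 & 2 \end{bmatrix}$

(2) standard matrix for T (matrix of T relative to $B = \{(1, 0), (0, 1)\}$)

$A = [T(1, 0) \mid T(0, 1)] = \begin{bmatrix} 2 & -2 \\ -1 & 3 \end{bmatrix}$

transition matrix from B' to B

$P = \big[[(1, 0)]_B \mid [(1, 1)]_B\big] = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}$

※ Solve a(1, 0) + b(0, 1) = (1, 0) $\Rightarrow$ (a, b) = (1, 0)

※ Solve c(1, 0) + d(0, 1) = (1, 1) $\Rightarrow$ (c, d) = (1, 1)

transition matrix from B to B'

$P^{-1} = \big[[(1, 0)]_{B'} \mid [(0, 1)]_{B'}\big] = \begin{bmatrix} 1 & -1 \\ 0 & 1 \end{bmatrix}$

※ Solve a(1, 0) + b(1, 1) = (1, 0) $\Rightarrow$ (a, b) = (1, 0)

※ Solve c(1, 0) + d(1, 1) = (0, 1) $\Rightarrow$ (c, d) = (-1, 1)

matrix of T relative to B'

$A' = P^{-1}AP = \begin{bmatrix} 1 & -1 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} 2 & -2 \\ -1 & 3 \end{bmatrix} \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 3 & -2 \\ -1 & 2 \end{bmatrix}$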


Ex 2: (Finding a matrix for a linear transformation)

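Let $B = \{(-3, 2), (4, -2)\}$ and $B' = \{(-1, 2), (2, -2)\}$ be bases for $R^2$, and let $A = \begin{bmatrix} -2 & 7 \\ -3 & 7 \end{bmatrix}$ be the matrix for $T: R^2 \to R^2$ relative to B. Find the matrix of T relative to B'

Sol:

Because the specific function is unknown, it is difficult to apply the direct method to derive A', so we resort to the indirect method where $A' = P^{-1}AP$

transition matrix from B' to B: $P = \big[[(-1, 2)]_B \mid [(2, -2)]_B\big] = \begin{bmatrix} 3 & -2 \\ 2 & -1 \end{bmatrix}$

transition matrix from B to B': $P^{-1} = \big[[(-3, 2)]_{B'} \mid [(4, -2)]_{B'}\big] = \begin{bmatrix} -1 & 2 \\ -2 & 3 \end{bmatrix}$

matrix of T relative to B':

$A' = P^{-1}AP = \begin{bmatrix} -1 & 2 \\ -2 & 3 \end{bmatrix} \begin{bmatrix} -2 & 7 \\ -3 & 7 \end{bmatrix} \begin{bmatrix} 3 & -2 \\ 2 & -1 \end{bmatrix} = \begin{bmatrix} 2 & 1 \\ -1 & 3 \end{bmatrix}$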


Ex 3: (Finding a matrix for a linear transformation)

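For the linear transformation $T: R^2 \to R^2$ given in Ex. 2, find $[\mathbf{v}]_B$, $[T(\mathbf{v})]_B$, and $[T(\mathbf{v})]_{B'}$, for the vector v whose coordinate matrix is $[\mathbf{v}]_{B'} = \begin{bmatrix} -3 \\ -1 \end{bmatrix}$

Sol:

$[\mathbf{v}]_B = P[\mathbf{v}]_{B'} = \begin{bmatrix} 3 & -2 \\ 2 & -1 \end{bmatrix} \begin{bmatrix} -3 \\ -1 \end{bmatrix} = \begin{bmatrix} -7 \\ -5 \end{bmatrix}$

$[T(\mathbf{v})]_B = A[\mathbf{v}]_B = \begin{bmatrix} -2 & 7 \\ -3 & 7 \end{bmatrix} \begin{bmatrix} -7 \\ -5 \end{bmatrix} = \begin{bmatrix} -21 \\ -14 \end{bmatrix}$

$[T(\mathbf{v})]_{B'} = P^{-1}[T(\mathbf{v})]_B = \begin{bmatrix} -1 & 2 \\ -2 & 3 \end{bmatrix} \begin{bmatrix} -21 \\ -14 \end{bmatrix} = \begin{bmatrix} -7 \\ 0 \end{bmatrix}$

or $[T(\mathbf{v})]_{B'} = A'[\mathbf{v}]_{B'} = \begin{bmatrix} 2 & 1 \\ -1 & 3 \end{bmatrix} \begin{bmatrix} -3 \\ -1 \end{bmatrix} = \begin{bmatrix} -7 \\ 0 \end{bmatrix}$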
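The direct and indirect routes from the coordinates of v relative to B' to the coordinates of T(v) relative to B' can be compared numerically with the matrices of Ex 2 and Ex 3. The helper names `mat_mul` and `mat_vec` are illustrative assumptions; the matrices A, P, and the inverse of P are taken from the examples rather than recomputed.

```python
def mat_mul(A, B):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_vec(A, v):
    """Matrix-vector product Av."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[-2, 7], [-3, 7]]        # matrix of T relative to B
P = [[3, -2], [2, -1]]        # transition matrix from B' to B
P_inv = [[-1, 2], [-2, 3]]    # transition matrix from B to B'
v_Bp = [-3, -1]               # [v]_B'

# Direct route: A' [v]_B' with A' = P^{-1} A P
A_prime = mat_mul(mat_mul(P_inv, A), P)
direct = mat_vec(A_prime, v_Bp)

# Indirect route: B' -> B, apply A, then B -> B'
v_B = mat_vec(P, v_Bp)
Tv_B = mat_vec(A, v_B)
indirect = mat_vec(P_inv, Tv_B)

print(A_prime, v_B, Tv_B, direct, indirect)
```

Both routes end at the same coordinate matrix, which is the content of the identity A' = P^{-1}AP.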


Similar matrix (相似矩陣):

For square matrices A and A' of order n, A' is said to be similar to A if there exists an invertible matrix P s.t. $A' = P^{-1}AP$

Theorem 6.13: (Properties of similar matrices)

Let A, B, and C be square matrices of order n.

Then the following properties are true.

(1) A is similar to A

(2) If A is similar to B, then B is similar to A

(3) If A is similar to B and B is similar to C, then A is similar to C

Pf for (1) and (2) (the proof of (3) is left as Exercise 23):

(1) $A = I_n A I_n$ (the transition matrix from A to A is $I_n$, and $I_n^{-1} = I_n$)

(2) $A = P^{-1}BP \Rightarrow PAP^{-1} = P(P^{-1}BP)P^{-1} \Rightarrow PAP^{-1} = B$

$\Rightarrow Q^{-1}AQ = B$ (by defining $Q = P^{-1}$), thus B is similar to A


Ex 4: (Similar matrices)

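(a) $A = \begin{bmatrix} 2 & -2 \\ -1 & 3 \end{bmatrix}$ and $A' = \begin{bmatrix} 3 & -2 \\ -1 & 2 \end{bmatrix}$ are similar

because $A' = P^{-1}AP$, where $P = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}$

(b) $A = \begin{bmatrix} -2 & 7 \\ -3 & 7 \end{bmatrix}$ and $A' = \begin{bmatrix} 2 & 1 \\ -1 & 3 \end{bmatrix}$ are similar

because $A' = P^{-1}AP$, where $P = \begin{bmatrix} 3 & -2 \\ 2 & -1 \end{bmatrix}$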


Ex 5: (A comparison of two matrices for a linear transformation)

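Suppose $A = \begin{bmatrix} 1 & 3 & 0 \\ 3 & 1 & 0 \\ 0 & 0 & -2 \end{bmatrix}$ is the matrix for $T: R^3 \to R^3$ relative to the standard basis. Find the matrix for T relative to the basis

$B' = \{(1, 1, 0), (1, -1, 0), (0, 0, 1)\}$

Sol:

The transition matrix from B' to the standard basis B

$P = \big[[(1, 1, 0)]_B \mid [(1, -1, 0)]_B \mid [(0, 0, 1)]_B\big] = \begin{bmatrix} 1 & 1 & 0 \\ 1 & -1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$

$\Rightarrow P^{-1} = \begin{bmatrix} \frac{1}{2} & \frac{1}{2} & 0 \\ \frac{1}{2} & -\frac{1}{2} & 0 \\ 0 & 0 & 1 \end{bmatrix}$

matrix of T relative to B':

$A' = P^{-1}AP = \begin{bmatrix} \frac{1}{2} & \frac{1}{2} & 0 \\ \frac{1}{2} & -\frac{1}{2} & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 3 & 0 \\ 3 & 1 & 0 \\ 0 & 0 & -2 \end{bmatrix} \begin{bmatrix} 1 & 1 & 0 \\ 1 & -1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 4 & 0 & 0 \\ 0 & -2 & 0 \\ 0 & 0 & -2 \end{bmatrix}$

(A' is a diagonal matrix, which is simple and has some computational advantages)

※ You have seen that the matrix for a linear transformation T: V → V depends on the basis used for V. What choice of basis will make the matrix for T as simple as possible? This case shows that it is not always the standard basis.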


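Notes: Diagonal matrices have many computational advantages over nondiagonal ones (they will be used in the next chapter)

for $D = \begin{bmatrix} d_1 & 0 & \cdots & 0 \\ 0 & d_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & d_n \end{bmatrix}$

(1) $D^k = \begin{bmatrix} d_1^k & 0 & \cdots & 0 \\ 0 & d_2^k & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & d_n^k \end{bmatrix}$

(2) $D^T = D$

(3) $D^{-1} = \begin{bmatrix} \frac{1}{d_1} & 0 & \cdots & 0 \\ 0 & \frac{1}{d_2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \frac{1}{d_n} \end{bmatrix}, \quad d_i \neq 0$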
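Properties (1) and (3) can be checked against plain matrix multiplication. The sketch below uses the diagonal entries 4, -2, -2 from the matrix A' of Ex 5 and the exponent k = 5, both chosen only for illustration; the helper names `mat_mul` and `diag` are assumptions.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def diag(entries):
    """Diagonal matrix with the given diagonal entries."""
    n = len(entries)
    return [[entries[i] if i == j else 0 for j in range(n)] for i in range(n)]

d = [4, -2, -2]
D = diag(d)
k = 5

# Property (1): D^k by repeated multiplication
D_power = D
for _ in range(k - 1):
    D_power = mat_mul(D_power, D)

# Property (3): invert entrywise (valid because every d_i is nonzero)
D_inverse = diag([Fraction(1, x) for x in d])

print(D_power, mat_mul(D, D_inverse))
```

Computing the k-th power entrywise needs n scalar powers instead of k - 1 full matrix products, which is the computational advantage the note refers to.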


Keywords in Section 6.4:

matrix of T relative to B: T 相對於B的矩陣

matrix of T relative to B' : T 相對於B'的矩陣

transition matrix from B' to B : 從B'到B的轉移矩陣

transition matrix from B to B' : 從B到B'的轉移矩陣

similar matrix: 相似矩陣


6.5 Applications of Linear Transformation

The geometry of linear transformation in the plane (p.407-p.110)

Reflection in x-axis, y-axis, and y=x

Horizontal and vertical expansion and contraction

Horizontal and vertical shear

Computer graphics (to produce any desired angle of view of a 3D figure)
