Item Writing Workshop

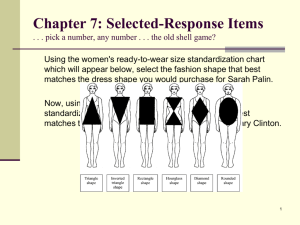

advertisement

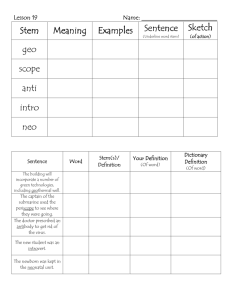

Chris Orem
Jerusha Gerstner
Christine DeMars

- Presentation and Discussion
- Item writing guidelines
- Examples
- Develop, Evaluate, and Revise Items

Test blueprint

A test blueprint is a table of specifications that:
- weights each objective according to how important it is or how much time is spent covering that objective
- links objectives to test items
- summarizes information

Essential for competency testing.

BLUEPRINT TIPS
- List objectives in a table
- Identify length of test
- Designate number of items per objective
- Evaluate the importance of each objective and assign items accordingly
- Often assign one point per item

| Objective | Weighting | Items |
|---|---|---|
| Objective 1 | 25% | 10 |
| Objective 2 | 25% | 10 |
| Objective 3 | 25% | 10 |
| Objective 4 | 25% | 10 |

Writing Items

| Type of Item | Construction | Scoring |
|---|---|---|
| True/False | Difficult | Easy |
| Matching | Easy | Easy |
| Completion | Easy | Difficult |
| Multiple Choice | Difficult | Easy |
| Essay | Easy | Difficult |

Some items are more appropriate when testing different kinds of knowledge, or when tapping into different kinds of cognitive processes. We'll focus on multiple choice items because recruiting faculty to score open-ended items would be difficult.

http://testing.byu.edu/info/handbooks/betteritems.pdf

- Content
- Style/format
- Writing the stem
- Writing the distracters

Content
- Focus on a single problem when writing an item
- Use new situations to assess application
  - Avoids memorization exercises
  - Allows for synthesis and evaluation
- Keep content of items independent
  - Students shouldn't be able to use one item to answer another, although a set of items may tap into a shared scenario
- Avoid opinion-based items
- Address a mix of higher-order and lower-order thinking skills

Listed from higher-order skills to lower-order skills:
- Bloom: Evaluation, Synthesis, Analysis, Application, Comprehension, Knowledge
- Haladyna: Problem Solving, Evaluating, Predicting, Defining, Recalling

- Try item stems such as "If . . ., then what happens?", "What is the consequence of . . .?", or "What would happen if . . .?" (predicting)
- Ask students to make a decision based on predetermined criteria, or to choose criteria to use in making a decision, or both (evaluating)
- Require the student to use combinations of recalling, summarizing, predicting, and evaluating to solve problems

Style/format
- Avoid excess words; be succinct
- Use specific, appropriate vocabulary
- Avoid bias (age, ethnicity, gender, disabilities)
- Write stems and options in third person
- Underline or bold negative or other important words
- Have others review your items

Writing the stem
- The stem should clearly state the problem
- Place the main idea of the question in the stem, not the item options
- Keep the stem as short as possible
- Don't provide clues to the correct answer in the stem (e.g., grammatical clues)
- If the stem is a complete sentence, end with a period and begin all response options with upper-case letters. If the stem is an incomplete sentence, begin all response options with lower-case letters.
- Use negative stems rarely

Writing the distracters
- Make sure there is only one correct answer for each item
- Develop as many effective plausible options as possible, but three are sufficient (Rodriguez, 2005)
  - It is better to have fewer options than to write BAD options to meet some quota!
- Vary the location of the correct answer when feasible (flip a coin), or put options in logical order (e.g., chronological, numerical)
- Avoid excessive use of negatives or double negatives
- Keep options independent
- Keep options similar (in format)
  - Length and wording

DO use as distracters:
- common student misconceptions (perhaps from open-ended responses from previous work)
- words that "ring a bell" or "sound official"
- responses that fool the student who has not mastered the objective

DO NOT use as distracters:
- responses that are just as correct as the right answer
- implausible or silly distracters

- Use "all of the above" and "none of the above" sparingly
- Don't use "always" or "never"
- Don't give clues to the right answer

The best way to increase the reliability of a test is to:
A. increase the test length
B. removing poor quality items
C. Tests should be readable for all test takers.

What's wrong with this item?

Stem should state the problem

California:
A). Contains the tallest mountain in the United States
B). Has an eagle on its state flag.
C). Is the second largest state in terms of area.
*D). Was the location of the Gold Rush of 1849.

What is the main reason so many people moved to California in 1849?
A). California land was fertile, plentiful, and inexpensive.
*B). Gold was discovered in central California
C). The east was preparing for a civil war.
D). They wanted to establish religious settlements.

Bleeding of the gums is associated with gingivitis, which can be cured by the sufferer himself by brushing his teeth daily.
A. true
B. false

What's wrong with this item?

More than One Possible Answer

The United States should adopt a foreign policy based on:
A). A strong army and control of the North American continent.
B). Achieving the best interest of all nations.
C). Isolation from international affairs.
*D). Naval supremacy and undisputed control of the world's sea lanes.

According to Alfred T. Mahan, the United States should adopt a foreign policy based on:
A). A strong army and control of the North American continent.
B). Achieving the best interest of all nations.
C). Isolation from international affairs.
*D). Naval supremacy and undisputed control of the world's sea lanes.

Following these rules does not guarantee that items will perform well empirically.
- Testing companies, using paid item-writers and detailed writing guidelines, ultimately only use 1/3 (or fewer) of the items operationally.
- It is normal and expected to have to revise items after pilot-testing.

ITEM ANALYSIS STEPS:
- Item difficulty
  - proportion of people who answered the item correctly
  - an item should not be too easy or too difficult
  - problems arise from poor wording, trick questions, or speediness
- Item discrimination
  - correlation of item and total test, should be higher than 0.2
  - item as an indicator of the overall test score
  - the higher the better
  - can be (but shouldn't be) negative
- Distractor analysis
  - frequency of response selections
  - item is problematic if people with a high overall score are selecting incorrect responses frequently

Ultimately, you want to know whether students are achieving your objectives.
- Tests are used to indirectly measure the relevant knowledge, skills, attitudes, etc.
- Items you write are a sample of all possible items to measure that objective
  - the more you write (and the better your items are!) the more reliably you can measure the objective
- You want to be sure that the items you create are measuring achievement of the objective, and NOT test-wiseness, reading ability, or other factors

Haladyna, T. M. (1999). Developing and validating multiple-choice test items. Mahwah, NJ: Lawrence Erlbaum Associates.
Rodriguez, M. C. (2005). Three options are optimal for multiple-choice items: A meta-analysis of 80 years of research. Educational Measurement: Issues and Practice, 24, 3-13.
Downing & Haladyna (2006). Handbook of test development. Mahwah, NJ: Lawrence Erlbaum Associates.
Burton, S. J., et al. (1991). How to prepare multiple-choice test items: Guidelines for university faculty. Brigham Young University Testing Services.
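
The blueprint tips in this workshop (pick a test length, weight each objective, assign items accordingly) come down to simple arithmetic. The sketch below is one possible way to do it, not a method prescribed by the workshop. The function name `allocate_items` and the largest-remainder rounding rule are assumptions made for illustration; the four equal weights and the 40-item length come from the example blueprint table.

```python
def allocate_items(weights, test_length):
    """Split test_length items across objectives in proportion to their weights.

    Hypothetical helper: uses largest-remainder rounding (an assumption, not
    part of the workshop) so the counts always sum to test_length.
    """
    total = sum(weights.values())
    # Exact (possibly fractional) share of the test for each objective.
    exact = {name: test_length * w / total for name, w in weights.items()}
    # Start from the whole-number part of each share.
    counts = {name: int(share) for name, share in exact.items()}
    leftover = test_length - sum(counts.values())
    # Give the remaining items to the objectives with the largest fractions.
    by_remainder = sorted(exact, key=lambda n: exact[n] - counts[n], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


# The example blueprint: four objectives weighted 25% each, 40 items in total.
blueprint = allocate_items(
    {"Objective 1": 25, "Objective 2": 25, "Objective 3": 25, "Objective 4": 25},
    test_length=40,
)
for objective, n_items in blueprint.items():
    print(objective, n_items)
```

With unequal weights the shares rarely come out as whole numbers, which is why some rounding rule is needed. Any rule that keeps the total equal to the planned test length would serve.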
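
The item analysis steps in this workshop (difficulty, discrimination, distractor analysis) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the presenters' procedure. The response data is made up, the names `item_analysis` and `pearson` are hypothetical, and the "high scorers" group is defined as students at or above the median total score, which is a choice made here for simplicity.

```python
from collections import Counter
from math import sqrt
from statistics import median


def pearson(x, y):
    """Pearson correlation of two equal-length lists (nan if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / sqrt(sxx * syy)


def item_analysis(responses, key):
    """responses: one list of chosen options per student; key: correct options."""
    # Score each response 1 (correct) or 0 (incorrect).
    scored = [[int(ans == k) for ans, k in zip(student, key)] for student in responses]
    totals = [sum(row) for row in scored]
    cutoff = median(totals)  # assumption: high scorers are at or above the median
    report = []
    for i in range(len(key)):
        item_scores = [row[i] for row in scored]
        report.append({
            # Difficulty: proportion of students answering correctly.
            "difficulty": sum(item_scores) / len(item_scores),
            # Discrimination: correlation of the item score with the total score.
            "discrimination": pearson(item_scores, totals),
            # Distractor analysis: how often each option was chosen.
            "option_counts": Counter(student[i] for student in responses),
            "upper_group_counts": Counter(
                student[i] for student, t in zip(responses, totals) if t >= cutoff
            ),
        })
    return report


# Made-up data: six students, three items.
key = ["A", "B", "D"]
responses = [
    ["A", "B", "D"],
    ["A", "B", "C"],
    ["A", "C", "D"],
    ["B", "B", "C"],
    ["A", "C", "C"],
    ["C", "A", "C"],
]
report = item_analysis(responses, key)
for number, stats in enumerate(report, start=1):
    print(number, round(stats["difficulty"], 2), round(stats["discrimination"], 2),
          dict(stats["option_counts"]), dict(stats["upper_group_counts"]))
```

An item whose discrimination falls below 0.2, or whose `upper_group_counts` shows high scorers clustering on a wrong option, would be a candidate for revision. Note that the total score here includes the item itself; on short tests this inflates the correlation, and some analysts correlate each item with the total of the remaining items instead.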