Ch 7. Selected-Response Items


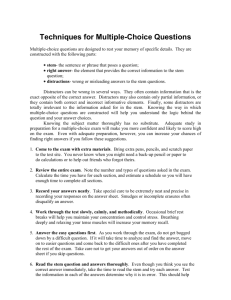

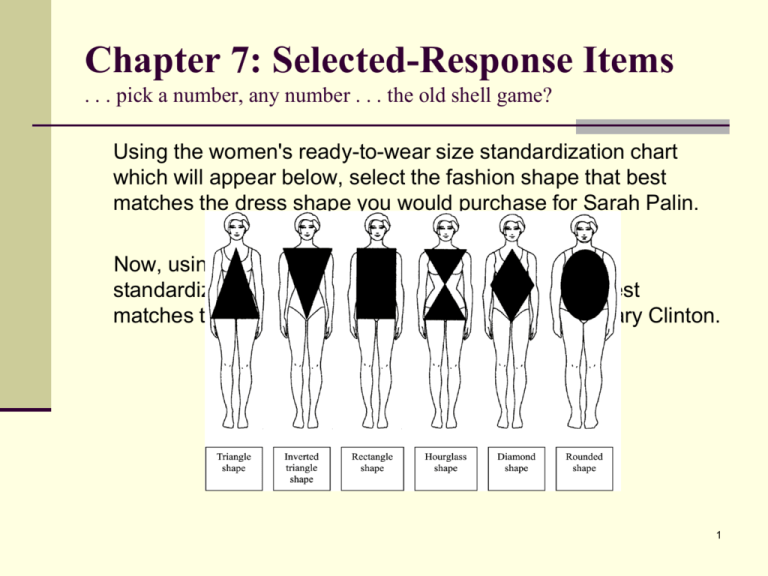

. . . pick a number, any number . . . the old shell game?

Using the women's ready-to-wear size standardization chart, select the fashion shape that best matches the dress shape you would purchase for Sarah Palin. Now, using the same chart, select the fashion shape that best matches the dress shape you would purchase for Hillary Clinton.

A Bit of History . . .
The earliest known selected-response exam: "Multi-Choice-Cave" art. (Source: uncyclopedia.wikia.com)

And More Recent History . . .
Slumdog Millionaire

Topics
- Types of test items: SR and CR
- Comparison of SR and CR items
- This chapter is about SR items:
  - Recommendations for multiple-choice items
  - Recommendations for true-false items
  - Recommendations for matching items
- Effect of guessing

Types of Test Items
Selected-response (SR) test item: the student selects from several alternatives. Also called an objective item. Examples: multiple-choice, true-false, matching.
Constructed-response (CR) test item: the student must produce a response from scratch, but within a context. Also called a free-response item. Examples: fill-in-the-blank (completion), short answer, essay, performance assessment (some educators put this type of "item" in a separate category).

Relative Merits of SR and CR Items (1 of 3)
Reliability: advantage SR. (1) Scorer reliability is a big problem for CR items. (2) In a given time period, a student can complete more SR items than CR items, and from our discussion of reliability factors we know that, other things being equal, the more items a test has, the higher its reliability.
Time to Prepare: advantage CR. A test bank created by the textbook company can help with an SR test, but a CR item is still quicker to write.

Relative Merits of SR and CR Items (2 of 3)
Time to Score: advantage SR. Scoring an SR test takes minimal time; scoring a CR test requires a great deal of time.
Flexibility: advantage CR. It is easy to conduct a quick CR assessment when information is wanted NOW.
Cost: advantage SR. (1) External testing companies charge more for human scoring. (2) If you score tests yourself and consider that "time is money," CR items cost you more over time.

Relative Merits of SR and CR Items (3 of 3)
Student Reactions: mixed. The research here is not definitive. Some students like SR items and some like CR items. Do students study harder when preparing for one type or the other? Do students do better on one or the other? The research is mixed, so beware broad pronouncements advocating one type over the other.
Validity: equal. When CR and SR items are written to measure the same cognitive behavior, CR and SR scores are almost perfectly correlated.

So What . . . should we take away from this comparison?
- Teachers need to know how to construct both item types.
- Teachers need to be aware that each item type has strengths and weaknesses, and use this information to decide when to use each type.
- Teachers need to guard against gullibly accepting the "common sense" rhetoric that one type of item is better at assessing important mental processes.

Multiple-Choice Items
There are many variations; common ones are:
- a simple question followed by a number of alternative choices, typically four;
- paragraph(s) followed by the alternatives;
- either of the two above, but with alternatives that are combinations of conditions (I & II, I & III, I & IV, III & IV) (AVOID making these types of items on your own tests);
- item sets (i.e., multiple questions based on a single map, chart, table, or reading).
For structure and terms, see the next slide.

Anatomy of a Multiple-Choice Item (i.e., structure and terms)

Multiple-Choice Items: Suggestions for Preparing
1. See Haladyna's comprehensive list of rules.
2. Content is king: when writing items, get the content right above all.
3. Don't give clues to the correct answer (either wittingly or unwittingly).
4. Don't be too rigid in rule application. Good items reflect finesse and ingenuity, not just rule application.
Let's look at a few of McEwing's Fave Rules for Multiple-Choice Items, a short list selected from Haladyna and similar lists.

A Selection of Multiple-Choice Item-Writing Guidelines
My Fave Guidelines, Sets I & II
I. General item-writing (procedure)
1. Format the item responses vertically, not horizontally.
2. Keep the vocabulary as simple as possible.
3. Minimize student reading time while maintaining meaning.
4. Avoid potentially insensitive content or language.
II. General item-writing (content)
1. Base all items on your previously stated learning objectives.
2. Avoid cuing one item with another; keep items independent of one another.
3. Avoid verbatim textbook phrasing when developing the item.
4. Base items on important aspects of the content area; avoid trivial material.

My Fave Guidelines, Set III
III. Stem development
1. State the stem in either question form or completion form.
2. When using the completion format, don't place the missing concept at the beginning or middle of the stem.
3. Include only the material needed to make the problem clear. Don't add extraneous information unless identifying it is part of what you ask the students to do.
4. Word the stem positively; avoid negative phrasing. If an item must be stated negatively, underline or capitalize the negative word.
5. Include the central idea and most of the phrasing in the stem.

My Fave Guidelines, Set IV
IV. General option development
1. Place options in a logical order, if one exists (e.g., numerical, alphabetical, chronological).
2. Keep the length of options fairly consistent.
3. Avoid, or use sparingly, the phrase "all of the above."
4. Avoid, or use sparingly, the phrase "none of the above."
5. Avoid distracters that can clue test-wise examinees; for example, grammatical inconsistencies involving "a" or "an" give clues to the correct answer.
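A few of the Set IV guidelines are mechanical enough to screen for before a colleague reviews the items. The Python sketch below is an illustration only: `check_options` is a hypothetical helper, not part of Haladyna's guidelines or any existing tool, and its length threshold (longest option more than twice the length of the shortest) is an arbitrary assumption. The a/an check is a crude first-letter heuristic that misfires on words such as "hour" or "unicorn".

```python
VOWELS = "aeiou"


def check_options(stem: str, options: list[str], max_length_ratio: float = 2.0) -> list[str]:
    """Heuristically flag a few Set IV option-development problems in one MC item.

    Hypothetical helper for illustration; thresholds are assumptions, not rules.
    """
    if not options:
        return ["item has no options"]
    warnings: list[str] = []

    # IV.2: option lengths should be fairly consistent (compared in characters).
    lengths = [len(opt.strip()) for opt in options]
    if max(lengths) > max_length_ratio * min(lengths):
        warnings.append("option lengths vary widely")

    # IV.3 and IV.4: flag "all of the above" and "none of the above".
    for opt in options:
        text = opt.strip().lower().rstrip(".")
        if text in ("all of the above", "none of the above"):
            warnings.append(f"uses '{text}'")

    # IV.5: a completion stem ending in "a" or "an" can rule options in or out.
    words = stem.lower().split()
    article = words[-1] if words else ""
    if article in ("a", "an"):
        for opt in options:
            first = opt.strip()[:1].lower()
            starts_with_vowel = first in VOWELS  # crude: ignores "hour", "unit", etc.
            if first and (article == "an") != starts_with_vowel:
                warnings.append(f"'{article}' does not agree with option '{opt.strip()}'")
    return warnings
```

A screen like this catches only surface cues; the content and stem guidelines (Sets II and III) and the "finesse and ingenuity" of good item writing still need a human reader.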
My Fave Guidelines, Sets V & VI
V. Correct option development
1. Position the correct option so that it appears about the same number of times in each possible position across a set of items.
2. Make sure there is one and only one correct, or clearly best, answer on which experts would agree.
VI. Distracter development (distracters are sometimes called foils)
1. Use plausible distracters; avoid illogical distracters.
2. Incorporate common student errors into distracters.
3. Use familiar yet incorrect phrases as distracters.
4. Use true statements that do not correctly answer the item.
5. Shun/Court humor when developing options.

True-False Items . . . or any binary-choice items, such as "will work or won't work," "nonfiction or fiction," "equal to or not equal to," or "fact or opinion."
Advantages:
- Much of the practical knowledge on which the real world operates reduces to true-or-false propositions.
- They allow greater coverage of topics in a given testing time.
Disadvantages:
- The format invites trivial content.
- Students love to argue these items with counterexamples demonstrating that the item isn't "always" true or "always" false.
- Poor public image: they reinforce the public view that tests are just guessing games, a matter of chance. (In point of fact, it is NOT easy to get a good score on true-false items by guessing if there are enough items in the test.)

Beware the clues, glorious clues . . .

T/F: Suggestions for Preparing
Suggestions for writing traditional T/F items:
1. Include only one concept in each statement.
2. Phrase items so that a superficial analysis by the student suggests a wrong answer (is this trickery or a mirror of life?).
3. Create approximately equal numbers of true and false items.
4. Avoid negative statements and the word NOT.
5. Never use double negatives.
6. Use a sufficient number of items to counteract guessing.
Suggestions for a not-so-traditional T/F item style:
1. If a statement is false, the student revises it so that it is true.
2. Target the revision by underlining a word or phrase.
3. Decide how you will award points on these types of items.

Multiple Binary Choice . . . a more complex approach within the T/F item family
In this approach, the student is presented with a paragraph of information, called the stem or stimulus. For example, the stem could be a new scenario related to a class discussion. The stem is the base for the binary-choice questions that follow, which lets you cluster questions. Make sure that:
- the questions in the cluster mesh well with one another;
- the questions are similar in length;
- each question stays on topic with the stem.
Some experts assert that this approach assesses a higher level of cognition than traditional binary-choice items. What do you think . . . agree or disagree?

Using Matching Items . . . the pluses and minuses
Pluses:
- Much of our knowledge involves associations in our memory banks, and making connections and distinctions is important.
- Matching items are generally quite brief and are especially suitable for "who, what, when, and where" questions.
- They permit efficient use of space when several similar types of information are to be tested.
- They are easy to score accurately and quickly.
Minuses:
- They are difficult to use to measure learning beyond recognition of basic factual knowledge.
- They are usually poor for diagnosing student strengths and weaknesses.
- They are difficult to construct well, since parallel information is required.

Matching: Suggestions for Preparing
1. Matching is not appropriate for unrelated ideas; all items in a matching section should be related.
2. Use brief lists (no more than 10 items).
3. Introduce the matching section by describing the relationship you want the student to find.
4. Place the section on a single page.
5. Place the stem list on the left and the response-option list on the right. Sometimes, when the stems are lengthy, the response-option list is placed above and the stem list below.
6. Arrange at least one list in a natural order (e.g., chronologically, alphabetically).
7. Have at least one more response option than stem items.
8. Tell the student when the response options may be used more than once to match the stem list.

Additional Topics
Should we allow or encourage student comments on items (e.g., students explain their thinking about their choices)?
- This is useful only on your own tests, not external ones.
- During or after the test? Both? To what end?
- Do all students know they can do this and how the comments will be used?
- Does this favor some students?
Should we worry about the effects of student guessing?
- How to analyze: the binomial formula.
- Conclusion: it is not easy to get a good score by sheer guessing if the number of items is sufficient. For example, the probability of scoring 90% or better (at least 18 of 20) by guessing alone is about 2 in 10,000 on a 20-item true-false test, and far smaller on a 20-item four-option multiple-choice test.

Practical Advice
1. Become familiar with the recommendations for writing various types of items.
2. Gain experience in writing items.
3. Have other people critique your items.
4. Focus more on what you want to test than on exactly how to test it.

Terms and Concepts to Review and Study on Your Own
- alternatives
- constructed-response (CR) item
- distracters
- essay item
- foils
- free-response item
- item
- item stem
- multiple-choice item
- objective item
- performance assessment
- selected-response (SR) item
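The guessing calculation mentioned under Additional Topics can be checked with the binomial formula. If each of n items is answered by an independent blind guess among m equally likely options, each guess is right with probability p = 1/m, and the chance of getting at least k items right is P(X >= k) = sum over j from k to n of C(n, j) p^j (1 - p)^(n - j). The Python sketch below assumes exactly those conditions (real students rarely guess blindly on every item), and `prob_at_least` is a hypothetical name chosen for illustration.

```python
from math import comb


def prob_at_least(n_items: int, n_correct: int, n_options: int) -> float:
    """Probability of at least n_correct right answers out of n_items by pure guessing.

    Assumes every item is answered by an independent blind guess among
    n_options equally likely choices, so each guess is right with p = 1/n_options.
    """
    p = 1 / n_options
    # Binomial upper tail: sum the probabilities of exactly k correct, k = n_correct..n_items.
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(n_correct, n_items + 1)
    )


if __name__ == "__main__":
    n, cutoff = 20, 18  # 90% of a 20-item test
    for label, options in [("true-false", 2), ("four-option multiple-choice", 4)]:
        print(f"{label}: P(score >= {cutoff}/{n}) = {prob_at_least(n, cutoff, options):.3g}")
```

For the true-false case the exact value is 211/1,048,576, about 0.0002 or 2 in 10,000; for four-option items it is 1771/4^20, roughly 1.6 in a billion. Test length matters: on a 10-item true-false test, reaching 9 of 10 by guessing has probability 11/1024, about 1 in 100.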