Exam2-ans

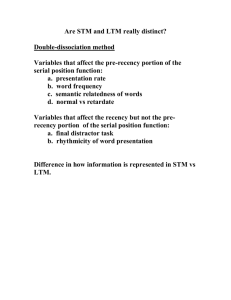

1. There are two visual systems, one rod-based and one cone-based, that have different functional characteristics. For range of light, the rods are sensitive from very low light levels up to fairly high ones, while the cones are sensitive from fairly high to very high levels. The eye/brain also adapts to light level, changing its sensitivity in relation to the level of illumination, which widens the range a great deal (to 10^13 to 1). For acuity, the center of the eye (fovea) has small cones with one-to-one connections to retinal ganglion cells, so it can report fine detail to the brain (like our fingertip vs. back demo in class). For color, we have three different kinds of cones (responsive to different wavelengths of light) that connect in an opposing way to ganglion cells in reporting what color is activating them. For distance, the major factors are the amount of lens bulging needed to bring things to a focus on the retina and the convergence of the two eyes; other cues may also be mentioned (texture, known size, interposition, etc.).

2. a) Lateral inhibition involves a line or sheet of neurons having inhibitory connections to the neurons on either side of them (in addition to their main signaling function onward to ganglion cells). The effect is to enhance or sharpen the spatial distribution of the responses to a pattern of stimulation.
b) In audition, the basilar membrane vibrates across a wide area of hair cells, but they have lateral inhibitory connections so they "sharpen" the profile of the vibration in reporting to the brain. The effect is as if only a narrow band of the membrane were vibrating, which makes place theory a possible account of how we hear many different frequencies rather than a few.
c) In vision, it enhances contrasts (places where the level of illumination changes), as in the work with Limulus (the horseshoe crab), allowing us to detect borders or areas of spatial change in illumination.

3.
a) Top-down perceptual processes help us perceive by using context and expectations to interpret the stimulus. Bottom-up processes are those that respond directly to the stimulus, “taking in” the sensory data.
b) In reading, the bottom-up processes are what enable us to see dark/light areas, the stimulus features out of which letters and words are constructed. Top-down processes enable us to read more easily by building up contextual expectations as some words are read and sentence structure emerges, so the next words are read much faster and more easily. This can lead to overlooking a one-letter error because the reader already has a strong expectation of what the word is likely to be before taking in every part of it.
c) The incident involved top-down processing in that many people in the class “saw” the wrong woman toss the envelope, because it makes more sense for it to be tossed by the person who says “Here’s your damn money…” than by the other woman; it fits our expectations, or our schema, for how this situation would play out.
d) In the Loftus and Palmer work, the word used to ask about a video of an automobile collision (“contacted,” “smashed,” etc.; a smashup!) implies a smaller or larger impact, so people adopted speed estimates (and consequences, such as broken glass) that fit their expectations, another example of top-down processing.

4. a) Sensory storage (iconic): preserves the whole image, with vast capacity, but is very brief (under a second for vision). Backward masking occurs.
b) STM: very limited in capacity (Miller’s magic number 7), but chunking can increase that. Also, STM is short-lasting unless maintained via rehearsal (the articulatory loop), with material either fading or being displaced by new info.
c) LTM: takes a while (½ to 1 hour or more) to consolidate/make permanent and lasts a very long time (the rate of loss for Spanish was 5% a year or less). LTM is seemingly unlimited in size.
d) The STM view treats that store as a temporary, limited-size buffer for material coming in via the sensory store, that is, from the outside world. The WM view treats that “store” as the activated part of LTM as well as new material coming in from the outside, and also as the “current contents of consciousness,” the stuff we’re aware of and thinking about at any time. Material is either lost (STM view) or returns to an unactivated state in LTM (working memory view) when no longer maintained in STM/WM.

5. ACT posits that our declarative memory consists of propositions stored in nodes connected to other propositions via links of some type over which activation can spread. Thus when we think about one thing, related things are raised to consciousness (activated). The Godden & Baddeley experiment showed that context helps in retrieval and thus reinforces the idea that things stored together are indeed tied together and that raising awareness of one has an effect on our awareness of the other (activates it and thus makes it more retrievable). The idea of encoding specificity is that we encode, along with the information we are learning, features of the context in which it is learned. When we try to recall info, we do better if the context is similar to the one in which the info was originally encoded. Presumably, being in the same context at retrieval time as at encoding time allows us to use the nodes representing the context as retrieval cues to get to the info we are trying to recall. The fan effect experiment (“the doctor is in the bank”) showed that if more links radiate off a node, the activation has more ways to spread and thus the amount along each pathway is reduced, adding a small amount of time to the time needed to verify statements. In our life, when trying to remember (retrieve) something, we often can help the process by thinking about related things.
Thus if I try to remember the name of my third grade teacher and have difficulty, I can try to think of kids in the class with me or of the name of my second grade teacher, etc., so as to activate nodes surrounding (and hopefully connected to) the desired one.

6. a) The condition is called anterograde amnesia.
b) He was unable to form new long-term declarative memories. The damage to the hippocampus did not allow him to transfer info from STM into LTM (illustrated by one of the two encoding-into-LTM arrows being blocked).
c) He had his old (pre-operation) memories and could still acquire skilled or procedural memory. The remainder of his memory functions were preserved: sensory storage and readout into STM, loss or forgetting from sensory store or LTM (or other stores), etc.

7. c and e support verbal memory; a, b and d support spatial memory.
a) Shepard and Metzler had people look at pairs of block figures in various stages of rotation and judge whether one could be rotated in a plane to be identical to the other. The time to decide was linear with the angle of rotation. This showed that the mental operation (“rotation”) was analogous to an action in the real world.
b) Kosslyn showed that in scanning from one location to another in the island experiment, people took an amount of time proportional to the actual distance between the two places in the diagram of the island, thus supporting the idea that there is a spatial quality in the memory process involved in doing this task.
c) Meyer and Schvaneveldt showed that priming exists: you are faster at reading two related words than two unrelated words. This demonstrated a linkage between semantically related words in LTM, consistent with the ACT (node, link, spreading activation) theory.
d) Brooks: in the spatial task (scanning a block letter), the spatial response mode (pointing at Y's and N's on a board) interfered, and in the verbal task (identifying nouns in a sentence), the verbal response mode (saying yes/no) interfered. This shows that we have at least two codes in memory, a spatial and a verbal. If there were not dual codes then the interference pattern obtained by Brooks (spatial task interferes with spatial response mode, verbal task with verbal response mode) would not have been obtained. The type of response mode would not have interacted with the type of task if they were all being done by one type of process or "code".
e) Anderson showed that propositions (verbal memory code) sharing a common concept (lawyer in bank + lawyer in park) were slower to verify than those whose concept appeared in only one proposition. The conclusion was that activation spreads over connected pathways and that the more pathways there are, the more it is split, thus slowing the activation of the connected node. Less activation spreads over any given link if there are more links splitting it up.

8. a) The finding (Godden & Baddeley’s deep sea diver experiment) showed encoding specificity: we encode specific information about the learning environment and surroundings that, if reproduced at retrieval time, results in better/easier retrieval.
b) Broadbent’s theory that we choose one out of multiple input channels to attend to and as a result ignore the other channels, focusing on one at a time and thus not noticing voice changes, language changes, etc. in the unattended channel.
c) Treisman’s explanation for the finding that we do notice some things in the unattended channel in attention experiments involving multiple inputs (for example, our own name). The explanation is that while we focus on one channel we reduce but do not eliminate info flowing in from other channels.
d) The theory that holds that we process multiple channels of input to a great degree and only choose one to process further late in the process, after the meaning is extracted. (It explains the pennies at the bank experiment, as well as 1B3 in one ear and A2C in the other being heard as ABC or 123 when the subject is focusing on one channel.) Meaningful material from the unattended channel gets in, and it is its meaning that gets it in, therefore late selection. The spotlight model is based on experiments showing that, without moving our eyes, we can direct our attention to a particular part of the visual world, and that we’re faster at seeing things in the focused-upon area.

9. Problem isomorphs are variations of a problem that have different cover stories or other features of their representation but identical problem spaces. The link is via memory limitations (STM or working memory) that limit the amount of material and the amount of planning of move sequences, or simply subgoal formation and holding on to subgoals, as well as the ability to visualize problem states. Some representations (isomorphs) of a problem may impose less of a memory load than others and thus make the problem much easier to solve. Another major source of difficulty is the size of the problem space (number of possible pathways or terminal nodes). Another possible answer would be the amount of specialized knowledge required on knowledge-rich problems.

10. c, b, n, I, d, k, j, m, a, r

11. d, a, I, e, f, j, g, m, l, b

12. c, d, a, e, g, j, h, b, k, L

E.C. Refer to the Sternberg memory scanning figure in the memory lecture. The axes are “reaction time” or RT on the ordinate and “set size” or “remembered set size” on the abscissa.
A) Serial processing is evidenced by the fact that the graph is a straight line sloping up to the right, intersecting the Y axis at some point above zero. Exhaustive search is evidenced by the finding that the slope is the same for positive and negative items.
B) In the figure, the slope for positive items would be ½ of the normal (part A) one, indicating self-terminating search. For negative items (“no’s”) it would be the same as in part A.
C) The lines would be horizontal, showing that scanning time did not vary with set size.
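
The three patterns in the extra-credit answer (same slope for "yes" and "no" items, half slope for "yes" items, and flat lines) can be sketched numerically. This is only a rough illustration, not the lecture's figure: the function names `predicted_rt` and `slope`, the 400 ms intercept, and the 38 ms per-item scan time are all assumptions made up for this example, and the "parallel" case assumes comparisons cost the same no matter how many items are held.

```python
def predicted_rt(set_size, model, probe_present, intercept_ms=400.0, scan_ms=38.0):
    """Predicted mean RT (ms) for one trial type under a given search model.

    intercept_ms and scan_ms are illustrative assumptions, not lecture values.
    """
    if model == "serial_exhaustive":
        # Every remembered item is checked, whether or not a match is found.
        comparisons = set_size
    elif model == "serial_self_terminating":
        # "Yes" trials stop at the match, on average after (n + 1) / 2 items;
        # "no" trials still have to check all n items.
        comparisons = (set_size + 1) / 2 if probe_present else set_size
    elif model == "parallel":
        # All items are compared at once, so set size adds no time.
        comparisons = 1
    else:
        raise ValueError(f"unknown model: {model}")
    return intercept_ms + scan_ms * comparisons


def slope(model, probe_present, small=2, large=6):
    """Slope of the RT-by-set-size line (ms per item) between two set sizes."""
    rise = predicted_rt(large, model, probe_present) - predicted_rt(small, model, probe_present)
    return rise / (large - small)


for model in ("serial_exhaustive", "serial_self_terminating", "parallel"):
    print(model, "yes:", slope(model, True), "no:", slope(model, False))
```

Under these assumed values, the exhaustive model gives equal slopes for positive and negative items (part A), the self-terminating model gives a positive-item slope half the size of the negative-item slope (part B), and the parallel model gives a slope of zero (part C).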