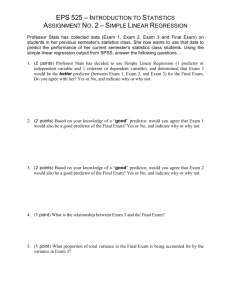

Handout Linear Regression

Handout Linear Regression – Spring 2016
Consuelo Arbona Ph.D.
EPSY 8334
University of Houston

Definition: Multiple linear regression allows one to examine the individual and collective contribution of more than one independent variable (or predictor variable) to a dependent variable (also called the criterion variable). For example, regression could be used to examine the contribution of gender, ethnicity, ethnic identity, and self-esteem to depression scores. Typically, one would say that depression scores are regressed onto gender, ethnicity, self-esteem, and ethnic identity scores. Regression assumes that the relations between the independent and dependent variables are linear (positive or negative).

Regression line: the best-fitting line that describes the relation of one or more independent variables (X1, X2, X3, X4) to a dependent variable Y: Y = b1 X1 + b2 X2 + b3 X3 + b4 X4 + a (constant). The bs are the regression coefficients (often loosely called beta coefficients), or weights, of each variable. When the coefficients are standardized, the larger the coefficient in absolute value, the stronger the unique association of the predictor variable with the criterion.

Characteristics: Both criterion and predictor variables in regression analyses should be continuous. Categorical variables can be included as predictors only if they have two levels (e.g., gender: 1 = F; 2 = M). Categorical variables with more than two levels (e.g., ethnicity: White, Black, and Hispanic) need to be dummy-coded. One way to do this is to create two dummy variables that use one group, say White, as the reference group. Var 1, Hisp, is coded Hispanic = 1, White = 2, and Black = 2. Var 2, Black, is coded Black = 1, White = 2, and Hispanic = 2. Thus three ethnic groups are coded with two variables: Hisp, where 1 stands for Hispanic and 2 for everyone else, and Black, where 1 stands for Black and 2 for everyone else. Both Hisp (coded 1, 2) and Black (coded 1, 2) are entered as predictors to account for the three ethnic groups.
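The dummy-coding scheme just described can be sketched in a few lines of Python. This is only an illustration: the function name `dummy_code` and the six-person sample are hypothetical, and the 1/2 codes follow the handout's scheme (many textbooks and software packages use 1/0 instead, which the optional arguments allow).

```python
def dummy_code(values, target, member_code=1, other_code=2):
    """Return one dummy variable: member_code where the value equals
    target, other_code for everyone else (the handout's 1/2 scheme)."""
    return [member_code if v == target else other_code for v in values]

# Hypothetical sample of six participants (illustrative, not real data).
ethnicity = ["White", "Hispanic", "Black", "Hispanic", "White", "Black"]

# White is the reference group, so it gets no variable of its own:
# a White participant is identified by scoring 2 on both dummies.
hisp = dummy_code(ethnicity, "Hispanic")
black = dummy_code(ethnicity, "Black")

for group, h, b in zip(ethnicity, hisp, black):
    print(f"{group:<9} Hisp={h} Black={b}")
```

Calling `dummy_code(ethnicity, "Hispanic", member_code=1, other_code=0)` would produce the 1/0 version of the same variable; the choice of codes shifts the constant but not the fit of the model.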
Predictor variables should be highly related to the criterion variable, and the correlations of the predictor variables among themselves should be low (to avoid multicollinearity problems).

Three types of linear regression analyses: Simultaneous, Stepwise, and Hierarchical

Simultaneous – all predictors are entered in the equation at once.

Stepwise – the computer uses an algorithm to decide which predictor variables to enter. For example, in forward stepwise regression, the variable with the highest correlation with the dependent variable is entered first, followed by the variable that has the highest correlation with the dependent variable after controlling for the first variable entered, and so on. Stepwise methods (there is also backward stepwise) are used strictly for prediction purposes (not to test conceptual models) and with large sample sizes (about 40 cases per predictor). Results typically are very sample specific and do not generalize to other samples.

Hierarchical – the researcher chooses the order in which variables are entered in the equation, according to (1) causal priority: variables presumed to cause other predictor variables are entered first (e.g., parental SES is entered before offspring’s academic attainment); (2) research relevance/theory: variables that have been studied before are entered first, and variables that theory predicts should antecede other variables are entered first; (3) main effect variables are always entered before interaction effect variables. Hierarchical regression typically is used to test psychological models.

Sample size – depends on the level of power one wants, the expected value of R², and the number of predictors.
Some authors give the following formula to calculate the minimum sample size for linear regression (not considering interaction effects): SS = 50 + 8k (SS = minimum sample size; k = number of predictors). For example, with four predictors the minimum is 50 + 8(4) = 82 cases.

Interpretation of Regression Table:

R²: Equals the proportion of variance in the criterion variable accounted for, or “explained,” by the linear combination of the predictor variables. It is considered a measure of effect size. (Comparing the size of R² across studies is tricky because determining the relative magnitude of R² requires taking into consideration the range of scores in the independent variables, the number of independent variables in the analyses, and the sample size of each study.)

ΔR²: In hierarchical regression, ΔR² equals the proportion of variance accounted for by a predictor variable (or a set of predictor variables) entered in one step over and above the proportion of variance accounted for by all the predictor variables entered in the previous steps (in other words, the incremental value of R²).

B (unstandardized coefficient): The partial regression coefficients (the column labeled B in the SPSS regression table) are the bs in the equation Y = b1 X1 + b2 X2 + b3 X3 + a. An unstandardized B indicates how much Y (the value of the criterion variable, or DV) will change for a one-unit change in the predictor variable (IV) when all the other variables are controlled for. Each B is expressed in the original metrics of the variables, that is, DV units per one unit of that predictor (e.g., scores on one predictor may range from 1 to 5 and on another from 1 to 10); therefore the values of the unstandardized Bs cannot be compared with each other.
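To make R² and ΔR² concrete, here is a minimal sketch for the simplest hierarchical case: X1 entered in Step 1 and X2 added in Step 2. It uses the standard two-predictor formulas that express the standardized coefficients and R² in terms of Pearson correlations. The function name and the correlation values are hypothetical and chosen only for illustration.

```python
def two_predictor_summary(r_y1, r_y2, r_12):
    """Standardized coefficients, R-squared, and Delta R-squared when
    X1 is entered in Step 1 and X2 is added in Step 2.

    r_y1 = corr(Y, X1), r_y2 = corr(Y, X2), r_12 = corr(X1, X2).
    Requires |r_12| < 1 (the predictors are not perfectly collinear).
    """
    denom = 1.0 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom   # standardized coefficient of X1
    beta2 = (r_y2 - r_y1 * r_12) / denom   # standardized coefficient of X2
    r2_step1 = r_y1 ** 2                   # X1 alone
    r2_step2 = beta1 * r_y1 + beta2 * r_y2 # X1 and X2 together
    return {
        "beta1": beta1,
        "beta2": beta2,
        "r2_step1": r2_step1,
        "r2_step2": r2_step2,
        "delta_r2": r2_step2 - r2_step1,   # increment due to X2
    }

# Hypothetical correlations (not taken from any study in this handout).
result = two_predictor_summary(r_y1=0.50, r_y2=0.40, r_12=0.30)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

With these inputs, Step 1 explains 25% of the variance and adding X2 raises that to about 32%, so ΔR² is about .07. The increment is smaller than X2's squared correlation with Y (.16) because X2 overlaps with X1.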
β (standardized Beta, or Beta coefficients): the B values standardized, that is, expressed in standard deviation units (the change in the criterion, in SDs, for a one-SD change in the predictor), so within one regression analysis they can be compared with each other. (However, without a test of significance one cannot determine whether the differences observed among coefficients in a regression output are statistically significant.)

Table 2 from the Pina-Watson et al. (2013) study. Interpretation of Hierarchical Regression Table

DV – Life Satisfaction (entries are the β for each predictor, followed by ΔR² and R² for the step)

Step 1: SES = .08; Generation = -.09; ΔR² = .02; R² = .02
Step 2: SES = .01; Generation = -.03; Indep. Self-C = .45***; ΔR² = .19***; R² = .21***
Step 3: SES = -.01; Generation = -.02; Indep. Self-C = .26***; Barriers = -.18***; CDSE = .29***; ΔR² = .10; R² = .31***
Step 4: (interaction terms would be entered here) .06** .52***

**p < .01, ***p < .001

Step 1: R² indicates that the combination of SES and Generation shares 2% of variance with Life Satisfaction (LS). The R² is not statistically significant, which means that this combination of variables is not associated with LS. The βs for SES and Generation are not statistically significant either.

Step 2: The ΔR² from Step 1 to Step 2 = .19 and is statistically significant, which indicates that the addition of Indep. Self-C increased the proportion of variance explained in LS by .19 (19% of the variance) above and beyond the control variables. The β for Indep. Self-C is statistically significant, which means that, when controlling for the other two variables, Indep. Self-C contributes unique variance to LS.

Step 3: The ΔR² is statistically significant. This means that, controlling for all other variables in the model (those entered in Step 1 and Step 2), Barriers and CDSE, as a set, contributed additional variance to LS. Examination of the β coefficients in Step 3 shows that, when controlling for all variables in the model, Indep. Self-C, Barriers, and CDSE are statistically significant, which means that each one contributed unique variance to LS, in the expected direction.

Step 4: If the study had examined moderation effects, the interaction terms would have been entered in this step. If the ΔR² is statistically significant in the step where the interaction terms are entered, then the interaction effect may be statistically significant. But is it strong enough? The β for the interaction term is examined next. If the β for the interaction term is statistically significant, it means that the relation of the predictor to the criterion is moderated by the moderator. Therefore, in order to claim an interaction effect, both the ΔR² in the step where the interaction term is entered and the β for the interaction term must be statistically significant. This is explained further below, based on the Wei et al. (2012) article.

Wei et al. (2012) Moderation Analyses: Class 4

Moderation analysis is used to examine to what extent the strength and direction of the relation of a predictor variable (perceived racial discrimination, PRD) to a criterion variable (PTSD) differs for people who vary on a third variable, the moderator (Ethnic Social Connectedness, ESC). This refers to interaction effects, or the multiplicative effect of the predictor and the moderator on the outcome of interest (in this case, PRD X ESC).

To test the interaction effect, a new variable, called the interaction term, is computed by multiplying each participant's scores on the predictor and the moderator (PRD X ESC, in this case). The interaction term is entered in the last step of the hierarchical regression analysis. If the ΔR² for that step is statistically significant (indicating that the interaction term explains an additional amount of variance), this is the first indication that there may be an interaction effect. The predictor and moderator variables must be entered in earlier steps than the interaction term (so that the ΔR² can be examined).
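Computing the interaction term described above can be sketched as follows. The example standardizes the predictor and moderator first and then multiplies them; standardizing first is an assumption made here, chosen to match the handout's later note that the interaction term should be based on the standardized values of the IV and moderator and not be standardized again afterward. The function names and the five pairs of scores are hypothetical.

```python
from statistics import mean, stdev

def standardize(scores):
    """Convert raw scores to z-scores (mean 0, sample SD 1)."""
    center, spread = mean(scores), stdev(scores)
    return [(x - center) / spread for x in scores]

def interaction_term(predictor, moderator):
    """Product of the standardized predictor and standardized moderator,
    computed participant by participant. The product itself is left
    as is (it is not standardized again)."""
    z_pred = standardize(predictor)
    z_mod = standardize(moderator)
    return [p * m for p, m in zip(z_pred, z_mod)]

# Hypothetical scores for five participants (illustrative only).
prd = [1.0, 2.0, 3.0, 4.0, 5.0]   # predictor, e.g. perceived discrimination
esc = [2.0, 4.0, 4.0, 6.0, 9.0]   # moderator, e.g. ethnic social connectedness

prd_x_esc = interaction_term(prd, esc)
for p, e, term in zip(prd, esc, prd_x_esc):
    print(f"PRD={p} ESC={e} PRD x ESC={term:.3f}")
```

In a hierarchical analysis, the standardized predictor and moderator would be entered in an earlier step and `prd_x_esc` in the last step.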
In the Wei et al. (2012) regression table, the ΔR² = .02* from Step 3 to Step 4 is statistically significant; therefore, the interaction effect may be statistically significant. In Step 4, the β for one of the two interaction terms is also statistically significant (PRD X Ethnic SC; β = -.12*). Therefore, the relation of perceived racial discrimination (PRD) to PTSD is moderated by Ethnic Social Connectedness. But how? Is the moderation effect consistent with the researchers’ hypotheses? The nature (strength and direction) of the interaction is revealed by plotting the relation of PRD to PTSD separately for those who scored high (1 SD above the mean) or low (1 SD below the mean) on Ethnic SC. What do the graphs show? How does one plot such a graph?

Interaction Effect: plotting the interaction effect (Class 5)

Plot the interaction for the Wei et al. (2012) article using the Excel file provided at http://www.jeremydawson.co.uk/slopes.htm

1. Read the Analyses section of Wei et al. (2012) to determine whether they standardized the variables before conducting the regression.
2. Determine whether the article reports results of a two-way or a three-way interaction.
3. Select the appropriate Excel file on the web site and enter the names of the predictor and moderator variables.
4. Consult Table 2 in the Wei et al. (2012) article to obtain the unstandardized (B) values for the interaction effect (the table does not include the intercept/constant, so do not change that value).
5. Describe what the graph shows regarding the relation of discrimination to PTSD symptoms for participants high versus low in social connectedness with their ethnic group.

If you have two standardized variables, you can plot your interaction effect by entering the unstandardized regression coefficients (B, including the intercept/constant) in the following worksheet.
If you have control variables in your regression, the values of the dependent variable displayed on the plot will be inaccurate unless you also standardize (or center) all control variables first (although the pattern, and therefore the interpretation, will be correct). Note that the interaction term should not be standardized after calculation, but should be based on the standardized values of the IV and moderator.

2-way_standardised.xls
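The arithmetic behind a two-way interaction plot with standardized variables can be sketched as below. This is not a reproduction of the Excel worksheet, only the kind of calculation such a plot rests on: the regression equation Y = b0 + b1(X) + b2(M) + b3(X x M) is evaluated at low (-1 SD) and high (+1 SD) values of the predictor X and the moderator M. The function names and the coefficients are hypothetical and are not the values reported by Wei et al. (2012).

```python
def predicted(b0, b_pred, b_mod, b_int, x, m):
    """Predicted criterion score for predictor x and moderator m."""
    return b0 + b_pred * x + b_mod * m + b_int * x * m

def simple_slope(b_pred, b_int, m):
    """Slope of the criterion on the predictor at moderator value m."""
    return b_pred + b_int * m

# Hypothetical unstandardized coefficients from a regression on
# standardized variables (illustrative values only).
b0, b_pred, b_mod, b_int = 0.0, 0.30, -0.20, -0.15

# With standardized variables, -1 and +1 are 1 SD below and above the mean.
for label, m in (("Low moderator (-1 SD)", -1.0), ("High moderator (+1 SD)", 1.0)):
    y_low_x = predicted(b0, b_pred, b_mod, b_int, -1.0, m)
    y_high_x = predicted(b0, b_pred, b_mod, b_int, 1.0, m)
    slope = simple_slope(b_pred, b_int, m)
    print(f"{label}: Y at low X = {y_low_x:.2f}, "
          f"Y at high X = {y_high_x:.2f}, slope = {slope:.2f}")
```

Each moderator level gives one line on the plot, drawn from its low-X point to its high-X point. With a negative interaction coefficient, as in this made-up example, the line for the high-moderator group is flatter than the line for the low-moderator group, which is the pattern one would describe in step 5.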