universidad de chile facultad de ciencias físicas y matem´aticas

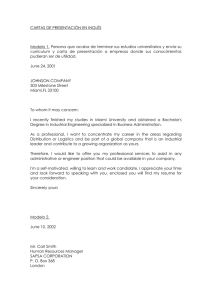

advertisement

UNIVERSIDAD DE CHILE

FACULTAD DE CIENCIAS FÍSICAS Y MATEMÁTICAS

DEPARTAMENTO DE INGENIERÍA MATEMÁTICA

APPROXIMATION ALGORITHMS FOR ORDER SCHEDULING PROBLEMS ON PARALLEL MACHINES

THESIS SUBMITTED FOR THE DEGREE OF MATHEMATICAL CIVIL ENGINEER

JOSÉ CLAUDIO VERSCHAE TANNENBAUM

ADVISOR:

JOSÉ RAFAEL CORREA HAEUSSLER

COMMITTEE MEMBERS:

MARCOS ABRAHAM KIWI KRAUSKOPF

ROBERTO MARIO COMINETTI COTTI-COMETTI

SANTIAGO DE CHILE

AUGUST 2008

SUMMARY OF THE THESIS

SUBMITTED FOR THE DEGREE OF

MATHEMATICAL CIVIL ENGINEER

BY: JOSÉ C. VERSCHAE T.

DATE: 06/08/2008

ADVISOR: Mr. JOSÉ R. CORREA

“APPROXIMATION ALGORITHMS FOR ORDER SCHEDULING PROBLEMS ON PARALLEL MACHINES”

The goal of this thesis was to study order scheduling problems on machines. In this problem, a producer has a number of machines on which a set of jobs must be processed. Each job belongs to an order, which corresponds to a request from some client. Each job has a processing time, which may depend on the machine on which it is processed, and a release date from which on the job can be scheduled. Finally, each order has a weight that reflects how important the order is to the producer. The completion time of an order is the time at which all of its jobs have been processed. The producer must decide when and on which machine each job is processed, so as to minimize the weighted sum of the completion times of the orders.

This model generalizes several classical scheduling problems. On the one hand, the objective function of our model includes as special cases minimizing the makespan (the time at which the last job finishes) and minimizing the weighted sum of the completion times of the jobs. On the other hand, this thesis shows that our model also generalizes the problem of minimizing the weighted sum of completion times of jobs on a single machine subject to precedence constraints.

Since these problems are NP-hard, their apparent intractability suggests looking for efficient algorithms that deliver a solution whose cost is close to the optimum. With this goal, and based on time-indexed linear relaxations, we propose a 27/2-approximation algorithm for the most general version of the problem described above. This is the first algorithm with a constant approximation guarantee for this problem, and it improves on the result of Leung, Li and Pinedo (2007). Using similar techniques, for the case in which jobs can be preempted we also obtain an algorithm whose approximation guarantee is arbitrarily close to 4.

In addition, we give a polynomial time approximation scheme (PTAS) for the case in which the orders are disjoint and a constant number of identical machines is available. Moreover, a variant of this approximation scheme applies when the number of machines is part of the input but either the number of jobs per order or the number of orders is constant.

Finally, we study the problem of minimizing the makespan on unrelated machines. We propose an algorithm that transforms a solution in which jobs may be preempted into one in which no job is preempted, increasing the makespan by at most a factor of 4. Moreover, we show that no algorithm can do the same while increasing the makespan by a smaller factor.


Acknowledgments

First of all, I want to thank my parents and brothers for instilling in me the love of thinking. Their constant support helped me throughout my career. I thank my brother Rodrigo for always listening to me and discussing my writing.

To my loving wife Natalia, who with her help, love, patience and unconditional support helped me finish this thesis.

I especially thank my advisor José R. Correa, who through long hours of discussions introduced me to the world of research. More than just helping me with my work, he gave me friendship and support in general. Without his constant support this thesis could not have been carried out successfully.

To all the students of the mathematics department of the University of Chile, for always being willing to talk and cheer me up.

I also thank Martin Skutella, who hosted me during my stay in Germany in September and October 2007. His collaboration and important contributions made Chapter 5 of this thesis possible. I also thank his whole group at TU Berlin for making my stay in Berlin pleasant. I also thank Nicole Megow for offering me her friendship and support.

Contents

1 Summary in Spanish

1.1 Machine scheduling problems

1.2 Approximation algorithms

1.3 Polynomial time approximation schemes

1.4 Problem definition

1.5 Previous work

1.5.1 Single machine

1.5.2 Parallel machines

1.5.3 Unrelated machines

1.6 Contributions of this work

1.6.1 Chapter 3: The power of preemption for $R||C_{\max}$

1.6.2 Chapter 4: Approximation algorithms for minimizing $\sum w_L C_L$ on unrelated machines

1.6.3 Chapter 5: A PTAS for minimizing $\sum w_L C_L$ on parallel machines

1.7 Conclusions

2 Introduction

2.1 Machine scheduling problems

2.2 Approximation algorithms

2.3 Polynomial time approximation schemes

2.4 Problem definition

2.5 Previous work

2.5.1 Single machine

2.5.2 Parallel machines

2.5.3 Unrelated machines

2.6 Contributions of this work

3 On the power of preemption on $R||C_{\max}$

3.1 $R|\mathrm{pmtn}|C_{\max}$ is polynomially solvable

3.2 A new rounding technique for $R||C_{\max}$

3.3 Power of preemption of $R||C_{\max}$

3.3.1 Base case

3.3.2 Iterative procedure

4 Approximation algorithms for minimizing $\sum w_L C_L$ on unrelated machines

4.1 A $(4 + \varepsilon)$-approximation algorithm for $R|r_{ij}, \mathrm{pmtn}|\sum w_L C_L$

4.2 A constant factor approximation for $R|r_{ij}|\sum w_L C_L$

5 A PTAS for minimizing $\sum w_L C_L$ on parallel machines

5.1 Algorithm overview

5.2 Localization

5.3 Polynomial Representation of Order's Subsets

5.4 Polynomial Representation of Frontiers

5.5 A PTAS for a specific block

5.6 Variations

6 Concluding remarks and open problems

Chapter 1

Summary in Spanish

1.1 Machine scheduling problems

Machine scheduling problems deal with the allocation of scarce resources over time. They arise in many settings: for example, a construction site where the foreman must assign jobs to each worker, a CPU that must process tasks requested by several users, or the production lines of a factory that must process products for its clients.

In general, an instance of a machine scheduling problem consists of a set $J$ of $n$ jobs and a set $M$ of $m$ machines. A solution of the problem, i.e., a schedule, is an assignment that specifies on which machine $i \in M$ and at what time each job is processed.

To classify scheduling problems we look at the characteristics, or attributes, of the machines and the jobs, as well as at the objective function to be minimized. One of these is the “machine environment”, which describes the configuration of the machines in our model. For example, we can consider “identical” or “parallel” machines, where each machine is an identical copy of all the others. In this case each job $j \in J$ takes $p_j$ time units to be processed, independently of the machine to which it is assigned. Alternatively, we can consider a more general situation in which each machine $i \in M$ has an associated speed $s_i$, so that the time a job $j$ takes to be processed is inversely proportional to the speed of the machine.

Scheduling problems can also be classified according to the characteristics of the jobs. For example, our model could consider “non-preemptive” jobs, i.e., jobs that, once started, cannot be interrupted until they have been completely processed. Alternatively, we could consider “preemptive” jobs, where we are free to interrupt a job that has started processing and later resume it on the same machine or on a different one.

Finally, problems can be classified by their objective function. One of the most natural objectives is to minimize the makespan, defined as the time at which the last job finishes processing. More precisely, if for a given schedule we define the “completion time” $C_j$ of a job $j \in J$ as the time at which $j$ finishes processing, then minimizing the makespan corresponds to minimizing $C_{\max} := \max_{j \in J} C_j$. Another classical objective is to minimize the number of late jobs. In this case each job $j \in J$ has an associated “due date” (deadline) $d_j$, and the goal is to minimize the number of jobs that finish processing after their due date. Besides these, many other objective functions can be considered.
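As a small illustration of these two objectives, the following Python sketch evaluates $C_{\max}$ and the number of late jobs from a given vector of completion times $C_j$ and due dates $d_j$. The function names and the three-job instance are hypothetical and only make the definitions concrete. The code evaluates a schedule that is assumed to be given; it does not construct one.

```python
from typing import Sequence


def makespan(completion: Sequence[float]) -> float:
    """C_max := max_j C_j, the time at which the last job finishes."""
    return max(completion)


def number_of_late_jobs(completion: Sequence[float], due: Sequence[float]) -> int:
    """Number of jobs j with C_j > d_j (finishing strictly after the due date)."""
    return sum(1 for c, d in zip(completion, due) if c > d)


# Hypothetical instance: jobs with p = (2, 1, 3) processed back to back on one machine,
# so the completion times are C = (2, 3, 6); the due dates are d = (2, 2, 5).
C = [2, 3, 6]
d = [2, 2, 5]
print(makespan(C), number_of_late_jobs(C, d))  # 6 2
```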

Many scheduling problems can be formed by combining the characteristics just mentioned. It is therefore convenient to introduce a standard notation that describes each of them. Graham, Lawler, Lenstra and Rinnooy Kan [20] proposed the “three-field notation”. In this notation a scheduling problem is written as an expression of the form $\alpha|\beta|\gamma$, where $\alpha$ denotes the machine environment, $\beta$ contains extra constraints or characteristics of the problem, and the last field $\gamma$ denotes the objective function. Below we describe some common values of the fields $\alpha$, $\beta$ and $\gamma$.

1. Values of $\alpha$.

• $\alpha = 1$: Single machine. There is a single machine to process the jobs. Each job $j \in J$ takes a given time $p_j$ to be processed.

• $\alpha = P$: Parallel machines. There are $m$ identical, or parallel, machines on which to process the jobs. Hence the processing time of a job $j$ is given by $p_j$, which does not depend on the machine to which $j$ is assigned.

• $\alpha = Q$: Related machines. In this case each machine $i \in M$ has an associated speed $s_i$. The processing time of job $j \in J$ on machine $i$ is then $p_j/s_i$, where $p_j$ is the time job $j$ takes to be processed on a machine of speed 1.

• $\alpha = R$: Unrelated machines. In this most general case there is no a priori relation between the processing times of a job on different machines, so the time needed to process job $j$ on machine $i$ is an arbitrary number $p_{ij}$.

Additionally, when $\alpha = P$, $Q$ or $R$, we can append the letter $m$ to the field to indicate that the number of machines is constant. For example, if the parallel machine model has a fixed number $m$ of machines, we write $\alpha = Pm$. The value of $m$ can also be specified. For example, $\alpha = P2$ means that two parallel machines are available to process the jobs.

2. Values of $\beta$.

• $\beta = \mathrm{pmpt}$: Preemptive jobs. In this case jobs may be interrupted (one or more times) before they are finished, and must be completed later on the same machine or on a different one.

• $\beta = r_j$: Release dates. Each job $j$ has an associated time $r_j$ from which on it may start processing.

• $\beta = \mathrm{prec}$: Precedence constraints. Consider a partial order $(J, \prec)$ on the jobs. If $j \prec k$ for some pair of jobs $j$ and $k$, then $k$ must start processing after the completion time of job $j$.

3. Values of $\gamma$.

• $\gamma = C_{\max}$: Makespan. The goal is to minimize the makespan $C_{\max} := \max_{j \in J} C_j$, where $C_j$ is the time at which job $j$ finishes processing, i.e., its completion time.

• $\gamma = \sum C_j$: Average completion time. The goal is to minimize the average completion time or, equivalently, the sum of completion times $\sum_{j \in J} C_j$.

• $\gamma = \sum w_j C_j$: Weighted sum of completion times. Each job $j \in J$ has an associated weight $w_j$ that describes how important the job is. The goal is then to minimize the weighted sum of completion times, $\sum_{j \in J} w_j C_j$.

Note that, by convention, jobs are non-preemptive by default. That is, an empty $\beta$ field means that jobs cannot be interrupted until they are completed. For example, $R||\sum w_j C_j$ denotes the problem of scheduling the jobs in $J$ on the set of machines $M$ without interrupting any job. Each job $j \in J$ takes $p_{ij}$ time units to be processed on machine $i \in M$, and the goal is to minimize the weighted sum of completion times $\sum w_j C_j$. In contrast, $R|\mathrm{pmpt}|\sum w_j C_j$ denotes the same problem except that jobs may be preempted. Moreover, the $\beta$ field may take more than one value. For example, $R|\mathrm{pmpt}, r_j|\sum w_j C_j$ is the same as the previous problem, with the additional constraint that each job $j$ must start processing after time $r_j$.

Many scheduling problems are NP-hard. Therefore they admit no polynomial time algorithm that solves them to optimality unless P = NP. In particular, it is easy to show that one of the fundamental problems in the area, $P2||C_{\max}$, is NP-complete. Below we describe some general techniques for tackling NP-hard optimization problems, together with some of their applications to scheduling.

1.2 Approximation algorithms

The class of NP-complete problems was introduced by Cook [11], Karp [24] and, independently, Levin [31]. Given the apparent intractability of these problems, their introduction left open major challenges about how to tackle them. One option that has been studied in depth is to use algorithms that solve the problem to optimality but have no polynomial upper bound on their running time. Such algorithms can be useful on small or medium-sized instances, or on instances with some special structure for which the running time is acceptable in practice. However, on other instances the algorithm may take exponential time to terminate, which limits its practical usefulness. The most common of these approaches are Branch and Bound, Branch and Cut, and Integer Programming techniques.

For NP-hard optimization problems, another alternative is to use algorithms that run in polynomial time but do not necessarily solve the problem to optimality. Among these, a particularly interesting class is that of “approximation algorithms”, whose output is guaranteed to have a cost close to the optimum. More precisely, consider a minimization problem $P$ with cost function $c$. For some $\alpha \ge 1$, we say that a solution $S$ of $P$ is an “$\alpha$-approximation” if its cost $c(S)$ is within a factor $\alpha$ of the optimal cost $OPT$, i.e., if

$$c(S) \le \alpha \cdot OPT. \tag{1.1}$$

Now consider an algorithm $A$ whose output on an instance $I$ is $A(I)$. We say that $A$ is an “$\alpha$-approximation algorithm” if $A(I)$ is an $\alpha$-approximation for every instance $I$. The number $\alpha$ is called the “approximation factor” of $A$. If $\alpha$ does not depend on the input of $A$, we say that $A$ is a constant factor approximation algorithm.

Analogously, if $P$ is a maximization problem with objective function $c$, a solution $S$ is an $\alpha$-approximation, for some $\alpha \le 1$, if

$$c(S) \ge \alpha \cdot OPT.$$

As before, an algorithm $A$ is an $\alpha$-approximation algorithm if $A(I)$ is an $\alpha$-approximation for every instance $I$ of $P$. In what follows we only study minimization problems, so this last definition will not be used.

One of the first approximation algorithms was given by R. L. Graham [19] in 1966, even before the notion of NP-completeness had been formally introduced. Graham studied the problem of minimizing the makespan on parallel machines, $P||C_{\max}$, and proposed the following greedy algorithm:

(1) Order the jobs arbitrarily, $(j_1, \dots, j_n)$.

(2) For each $k = 1, \dots, n$, process job $j_k$ on the machine where it would finish first.

A procedure of this kind is called a list-scheduling algorithm.
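The greedy rule above can be sketched in Python as follows. This is an illustrative implementation, not code from the thesis. It assumes there are no release dates, so that "the machine where the job would finish first" is simply the machine with the smallest current load, which a min-heap keeps track of. The function name `list_scheduling` is hypothetical.

```python
import heapq
from typing import List, Sequence, Tuple


def list_scheduling(p: Sequence[float], m: int) -> Tuple[float, List[int], List[float]]:
    """Graham's list scheduling for P||C_max, taking the jobs in the given order.

    Returns (makespan, machine assigned to each job, completion time of each job).
    """
    # Min-heap of (current finishing time, machine index): its top is the machine
    # on which the next job would finish first.
    loads = [(0.0, i) for i in range(m)]
    heapq.heapify(loads)
    machine = [0] * len(p)
    completion = [0.0] * len(p)
    for j, pj in enumerate(p):
        load, i = heapq.heappop(loads)
        machine[j] = i
        completion[j] = load + pj  # job j starts as soon as machine i is free
        heapq.heappush(loads, (completion[j], i))
    return max(completion, default=0.0), machine, completion


cmax, machines, _ = list_scheduling([3, 3, 2, 2, 2], m=2)
print(cmax, machines)  # 7.0 [0, 1, 0, 1, 0]
```

On this hypothetical instance the sketch returns makespan 7. The optimum is 6, obtained by processing the two jobs of length 3 on one machine and the three jobs of length 2 on the other. The ratio 7/6 is within the factor $2 - 1/m = 3/2$ of Lemma 1.1.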

Lemma 1.1 (Graham 1966 [19]). List-scheduling is a $(2 - 1/m)$-approximation algorithm for $P||C_{\max}$.

Proof. First note that if $OPT$ denotes the makespan of an optimal solution, then

$$OPT \ge \frac{1}{m} \sum_{j \in J} p_j, \tag{1.2}$$

since the right-hand side is the average processing load of the $m$ machines, which cannot exceed the optimal makespan. Now let $\ell \in \{1, \dots, n\}$ be such that $C_{j_\ell} = C_{\max}$, and let $S_j = C_j - p_j$ denote the time at which job $j$ starts processing. Since job $j_\ell$ was assigned to the machine on which it would finish first, every machine is busy with jobs among $j_1, \dots, j_{\ell-1}$ throughout $[0, S_{j_\ell})$. Hence

$$S_{j_\ell} \le \frac{1}{m} \sum_{k=1}^{\ell-1} p_{j_k},$$

and therefore

$$C_{\max} = S_{j_\ell} + p_{j_\ell} \le \frac{1}{m} \sum_{k=1}^{\ell} p_{j_k} + \left(1 - \frac{1}{m}\right) p_{j_\ell} \le \left(2 - \frac{1}{m}\right) OPT, \tag{1.3}$$

where the last inequality follows from (1.2) and from the fact that $p_{j_\ell} \le OPT$. The latter holds because no schedule can finish before time $p_j$, for any $j \in J$.
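The two ingredients of the proof can be checked numerically: the lower bound $OPT \ge \max\{\frac{1}{m}\sum_j p_j, \max_j p_j\}$ and inequality (1.3). The sketch below uses hypothetical helper names and random integer instances. It re-implements list scheduling with a plain load vector and verifies $C_{\max} \le \frac{1}{m}\sum_j p_j + (1 - \frac{1}{m})\max_j p_j \le (2 - \frac{1}{m}) \cdot LB$, where the lower bound $LB$ stands in for the unknown $OPT$. The last lines run a standard worst-case instance: $m(m-1)$ unit jobs followed by one job of length $m$. On this instance list scheduling reaches $2m - 1$ while the optimum is $m$, so the factor $2 - 1/m$ cannot be improved.

```python
import random
from typing import Sequence


def graham_makespan(p: Sequence[float], m: int) -> float:
    """List scheduling: each job goes to the currently least-loaded machine."""
    loads = [0.0] * m
    for pj in p:
        i = loads.index(min(loads))  # machine on which the job would finish first
        loads[i] += pj
    return max(loads)


def check_lemma(trials: int = 1000, seed: int = 0) -> bool:
    """Verify the chain of inequalities from the proof on random instances."""
    rng = random.Random(seed)
    for _ in range(trials):
        m = rng.randint(1, 5)
        p = [rng.randint(1, 20) for _ in range(rng.randint(1, 15))]
        lower = max(sum(p) / m, max(p))  # OPT >= sum(p)/m by (1.2), and OPT >= max p
        cmax = graham_makespan(p, m)
        if cmax > sum(p) / m + (1 - 1 / m) * max(p) + 1e-9:  # middle term of (1.3)
            return False
        if cmax > (2 - 1 / m) * lower + 1e-9:  # lower <= OPT, so this is stronger than (1.3)
            return False
    return True


m = 3
print(check_lemma(), graham_makespan([1] * (m * (m - 1)) + [m], m))  # True 5.0
```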

As the proof shows, a crucial step in the analysis is to obtain a “good” lower bound on the optimal solution, such as Equation (1.2) in the previous lemma. This bound is then used to bound from above the cost of the solution returned by the algorithm, as in Equation (1.3). Most techniques for finding lower bounds on the optimum are problem dependent, so it is hard to give general rules for finding them. One of the few exceptions that has proven useful in a variety of contexts is to formulate the problem as an integer linear program and then relax the integrality constraints. Clearly, the optimal value of the relaxed problem is a lower bound on that of the original problem. An algorithm that uses this technique to obtain lower bounds is commonly called an “LP-based approximation algorithm”. To illustrate this idea we consider the following problem.

Minimum Cost Vertex-Cover:

Input: A graph $G = (V, E)$ and a nonnegative cost function $c : V \to \mathbb{Q}_{\ge 0}$ on the vertices.

Objective: Find a vertex cover, i.e., a set $B \subseteq V$ such that every edge in $E$ is incident to some vertex in $B$, minimizing the cost $c(B) = \sum_{v \in B} c(v)$.

It is easy to see that this problem is equivalent to the following integer program:

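$$
\begin{aligned}
\text{[PL]} \quad \min\ & \sum_{v \in V} y_v\, c(v) && (1.4)\\
\text{s.t.}\ & y_v + y_w \ge 1 && \text{for all } vw \in E, \quad (1.5)\\
& y_v \in \{0, 1\} && \text{for all } v \in V. \quad (1.6)
\end{aligned}
$$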

Replacing Equation (1.6) by $y_v \ge 0$, we obtain a linear program whose optimal value is a lower bound for the Minimum Cost Vertex-Cover problem. To obtain a constant factor approximation algorithm we proceed as follows. First we solve this linear relaxation of [PL] (using, for example, the ellipsoid method) and call its solution $y^*$. To round this fractional solution, note that Equation (1.5) implies that for every edge $vw \in E$, either $y^*_v \ge 1/2$ or $y^*_w \ge 1/2$. Hence the set $B = \{v \in V : y^*_v \ge 1/2\}$ is a vertex cover. Moreover, since costs are nonnegative, we can bound its cost as follows:

$$c(B) = \sum_{v : y^*_v \ge 1/2} c(v) \le 2 \sum_{v \in V} y^*_v\, c(v) \le 2\,OPT_{PL} \le 2\,OPT, \tag{1.7}$$

where $OPT$ is the optimal value of the Minimum Cost Vertex-Cover problem and $OPT_{PL}$ is the optimal value of the linear relaxation of [PL]. Therefore the algorithm described is a 2-approximation.
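The rounding step can be sketched in Python. Solving the linear relaxation itself is not shown: the sketch assumes that a feasible fractional solution $y^*$ is already available (for instance from any LP solver). It only performs the threshold rounding and the checks behind (1.7). Note that $c(B) \le 2\sum_v y^*_v c(v)$ holds for every feasible $y^*$ when costs are nonnegative; optimality of $y^*$ is only needed for the step $\le 2\,OPT_{PL}$. All function names are hypothetical.

```python
from collections.abc import Hashable, Sequence
from typing import Dict, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def round_half(y: Dict[Hashable, float]) -> Set[Hashable]:
    """Threshold rounding: B = {v in V : y*_v >= 1/2}."""
    return {v for v, yv in y.items() if yv >= 0.5}


def is_vertex_cover(B: Set[Hashable], edges: Sequence[Edge]) -> bool:
    """Every edge vw must have at least one endpoint in B."""
    return all(v in B or w in B for v, w in edges)


def round_and_bound(y: Dict[Hashable, float], edges: Sequence[Edge],
                    cost: Dict[Hashable, float]) -> Tuple[Set[Hashable], float, float]:
    """Return (B, c(B), sum_v y_v c(v)) after checking feasibility of y and of B."""
    assert all(y[v] + y[w] >= 1 - 1e-9 for v, w in edges), "y violates (1.5)"
    assert all(yv >= 0 for yv in y.values()), "y violates y_v >= 0"
    B = round_half(y)
    assert is_vertex_cover(B, edges)
    return B, sum(cost[v] for v in B), sum(y[v] * cost[v] for v in y)


# Hypothetical example: a triangle with unit costs and y*_v = 1/2 on every vertex,
# which is optimal for the relaxation (summing (1.5) over the 3 edges gives sum y >= 3/2).
triangle = [(0, 1), (1, 2), (0, 2)]
B, cost_B, lp_value = round_and_bound({0: 0.5, 1: 0.5, 2: 0.5}, triangle, {0: 1, 1: 1, 2: 1})
print(sorted(B), cost_B, lp_value)  # [0, 1, 2] 3 1.5
```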

Since $OPT \le c(B)$, Equation (1.7) implies that

$$\frac{OPT}{OPT_{PL}} \le 2$$

for every instance $I$ of Minimum Cost Vertex-Cover. More generally, any $\alpha$-approximation algorithm that uses $OPT_{PL}$ as a lower bound must satisfy

$$\max_I \frac{OPT}{OPT_{PL}} \le \alpha.$$

The left-hand side of this last inequality is called the “integrality gap” of the linear program. Finding a lower bound on the integrality gap of a linear program is a standard technique for determining the best factor achievable by an approximation algorithm that uses the linear program as a lower bound. To do this, it suffices to find an instance whose ratio $OPT/OPT_{PL}$ is as large as possible. For example, it is easy to see that the algorithm just described for Minimum Cost Vertex-Cover is the best possible among those using [PL] as a lower bound. Indeed, if $G$ is the complete graph on $n$ vertices and $c \equiv 1$, then $OPT = n - 1$ and $OPT_{PL} = n/2$, so $OPT/OPT_{PL} \to 2$ as $n \to \infty$.
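The complete-graph instance can be checked for small $n$ with a brute-force search. The sketch below uses hypothetical helper names. It computes $OPT$ on $K_n$ exhaustively and uses $OPT_{PL} = n/2$. This value follows from summing (1.5) over all $n(n-1)/2$ edges, which gives $(n-1)\sum_v y_v \ge n(n-1)/2$, i.e., $\sum_v y_v \ge n/2$, and $y \equiv 1/2$ attains it. The brute force takes exponential time and is only meant for tiny $n$.

```python
from itertools import combinations
from typing import List, Tuple


def min_vertex_cover_size(n: int, edges: List[Tuple[int, int]]) -> int:
    """Exhaustive search for a minimum (unit-cost) vertex cover on vertices 0..n-1."""
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            B = set(subset)
            if all(v in B or w in B for v, w in edges):
                return size
    return n  # not reached: V itself is always a cover


def complete_graph_ratio(n: int) -> float:
    """OPT / OPT_PL on K_n with c = 1, using OPT_PL = n / 2."""
    edges = list(combinations(range(n), 2))
    return min_vertex_cover_size(n, edges) / (n / 2)


for n in (2, 4, 8, 12):
    print(n, complete_graph_ratio(n))  # ratio 2(n-1)/n, approaching 2
```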

1.3 Polynomial time approximation schemes

Given an NP-hard optimization problem, it is natural to ask which polynomial time approximation algorithm has the best approximation factor. Clearly, this depends on the problem. On the one hand, some problems admit no approximation algorithm at all unless P = NP. For example, the traveling salesman problem with binary costs cannot be approximated within any factor. Indeed, if there were an $\alpha$-approximation algorithm for this problem, we could decide whether there exists a Hamiltonian cycle of cost zero. If the optimal value of the traveling salesman instance is zero, the approximation algorithm must return a solution of cost zero by (1.1), independently of the value of $\alpha$. If the optimal value is greater than zero, so is the value of the solution returned by the algorithm. Since deciding whether a graph has a Hamiltonian cycle is NP-complete (give the edges of the graph cost 0 and all other pairs cost 1), this would imply P = NP.

On the other hand, some problems admit algorithms with arbitrarily good approximation factors. To formalize this idea we define a “polynomial time approximation scheme” (PTAS). A PTAS is a family of algorithms $\{A_\varepsilon\}_{\varepsilon > 0}$ such that each $A_\varepsilon$ is a $(1+\varepsilon)$-approximation algorithm that runs in polynomial time. Note that $\varepsilon$ is not considered part of the input, so the running time of the algorithm may depend exponentially on $1/\varepsilon$. A PTAS in which the running time of each $A_\varepsilon$ is polynomial in both the input size and $1/\varepsilon$ is called a “fully polynomial time approximation scheme” (FPTAS).

1.4 Problem definition

In this thesis we study a scheduling problem that arises naturally in industry. Consider a situation in which clients place orders, each consisting of several products, with a manufacturer who has a set of machines to process them. Each product must be processed on one of the $m$ available machines, and the time needed to process each job may depend on the machine on which it is scheduled. The producer must decide on a schedule with the goal of providing the best possible service to its clients.

In its most general version, the problem is as follows. We are given a set of jobs $J$ and a set of orders $O \subseteq \mathcal{P}(J)$ such that $\bigcup_{L \in O} L = J$. Each job $j \in J$ has an associated value $p_{ij}$ representing its processing time on machine $i \in M$. Moreover, each order $L \in O$ has a weight $w_L$ that depends on how important the order is to the producer. Each job $j$ also has a machine-dependent release date $r_{ij}$: job $j$ cannot start processing on machine $i$ before $r_{ij}$. An order is completed when all of its jobs have been completely processed. Hence, if $C_j$ denotes the time at which job $j$ finishes processing, then $C_L = \max\{C_j : j \in L\}$ is the completion time of order $L \in O$. The producer's goal is to find a non-preemptive schedule on the $m$ machines that minimizes the “weighted sum of completion times of the orders”, i.e.,

$$\min \sum_{L \in O} w_L C_L.$$

Note that in this description a job may belong to more than one order simultaneously, i.e., the orders in $O$ need not be pairwise disjoint.
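To make the objective concrete, the sketch below evaluates $\sum_{L \in O} w_L C_L$ for given job completion times and allows a job to belong to several orders. It only evaluates a schedule that is assumed to be given. It does not check machine capacities or release dates, and the names and data are hypothetical.

```python
from typing import Dict, List, Sequence, Set


def order_objective(C: Dict[str, float], orders: List[Set[str]], w: Sequence[float]) -> float:
    """Sum over orders of w_L * C_L, where C_L = max{C_j : j in L}."""
    assert set().union(*orders) == set(C), "the orders must cover J"
    return sum(wL * max(C[j] for j in L) for L, wL in zip(orders, w))


# Hypothetical instance: job "b" belongs to two orders (orders need not be disjoint).
C = {"a": 2, "b": 5, "c": 3}
orders = [{"a", "b"}, {"b", "c"}, {"c"}]
print(order_objective(C, orders, [1, 2, 3]))  # 1*5 + 2*5 + 3*3 = 24
```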

Following the three-field notation of Graham et al., we denote this problem by $R|r_{ij}|\sum w_L C_L$, or by $R||\sum w_L C_L$ when all release dates are 0. When the processing times $p_{ij}$ do not depend on the machine, we replace “$R$” by “$P$” to indicate that we are in the parallel machine case. Moreover, when we impose the extra condition that the orders form a partition of $J$, we add $\mathrm{part}$ to the second field $\beta$.

As we show later, our problem generalizes several classical scheduling problems. These include $R||C_{\max}$, $R|r_{ij}|\sum w_j C_j$ and $1|\mathrm{prec}|\sum w_j C_j$. Since all of these problems are strongly NP-hard (see, for example, [17]), so is our more general problem.

Surprisingly, the best known approximation factor for each of these problems is 2 [4, 35, 37]. In our more general setting, however, no constant factor approximation algorithm is known. The best result, due to Leung, Li, Pinedo and Zhang [29], is an algorithm for the special case of related machines (i.e., $p_{ij} = p_j/s_i$, where $s_i$ denotes the speed of machine $i$) in which all release dates are zero. The approximation factor of this algorithm is $1 + \rho(m-1)/(\rho + m - 1)$, where $\rho$ is the ratio between the speeds of the fastest and the slowest machine. In general this bound is not constant, and it can be as bad as $m/2$.

1.5 Previous work

To illustrate the flexibility of our model, we present several models that our problem generalizes and review the most important known results about them.

1.5.1 Single machine

We begin by considering the problem of minimizing the weighted sum of completion times of orders on a single machine. First we study the case in which no job belongs to more than one order, $1|\mathrm{part}|\sum w_L C_L$, and show that it is equivalent to $1||\sum w_j C_j$. Smith [41] showed that the latter problem can be solved in polynomial time by a list-scheduling algorithm that orders the jobs nonincreasingly by $w_j/p_j$. This greedy algorithm is known as “Smith's rule”.
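Smith's rule can be sketched in a few lines of Python. The sketch assumes all $p_j > 0$ and that jobs are processed back to back from time 0. The brute-force comparison over all permutations is only a sanity check on a small hypothetical instance and is not part of the algorithm.

```python
from itertools import permutations
from typing import List, Sequence


def weighted_completion(order: Sequence[int], p: Sequence[float], w: Sequence[float]) -> float:
    """sum_j w_j C_j when the jobs are processed back to back in the given order."""
    t, total = 0.0, 0.0
    for j in order:
        t += p[j]          # completion time C_j of job j
        total += w[j] * t
    return total


def smith_rule(p: Sequence[float], w: Sequence[float]) -> List[int]:
    """Order the jobs by nonincreasing ratio w_j / p_j (assumes p_j > 0)."""
    return sorted(range(len(p)), key=lambda j: w[j] / p[j], reverse=True)


p, w = [3, 1, 2, 4], [1, 2, 2, 3]
order = smith_rule(p, w)
best = min(weighted_completion(perm, p, w) for perm in permutations(range(len(p))))
print(order, weighted_completion(order, p, w), best)  # [1, 2, 3, 0] 39.0 39.0
```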

To see that the two problems are indeed equivalent, we first show that $1|\mathrm{part}|\sum w_L C_L$ has an optimal solution in which all jobs of each order $L \in O$ are processed consecutively. Consider an optimal solution in which this does not happen. Then there exist jobs $j, \ell \in L$ and $k \in L' \neq L$ such that $k$ starts processing at time $C_j$ and $\ell$ is processed after $k$. Swapping jobs $j$ and $k$, i.e., moving $k$ earlier by $p_j$ time units and delaying $j$ by $p_k$ time units, does not increase the cost of the solution. Indeed, the completion time of job $k$ decreases, so $C_{L'}$ does not increase. The completion time of order $L$ does not increase either, since job $\ell \in L$, which is still processed after $j$, is not moved. Iterating this argument, we end up with a schedule in which all jobs of each order are processed consecutively. Therefore each order can be seen as a larger job with processing time $\sum_{j \in L} p_j$, and hence $1|\mathrm{part}|\sum w_L C_L$ reduces to $1||\sum w_j C_j$.
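The reduction can be sketched as follows. Each order is merged into a single job with processing time $\sum_{j \in L} p_j$ and weight $w_L$. Smith's rule then orders the merged jobs, and the jobs of each order are laid out consecutively. This is an illustrative sketch with hypothetical names. The brute force over all job sequences on a tiny instance only checks that, as argued above, nothing is lost by keeping each order's jobs together.

```python
from itertools import permutations
from typing import List, Sequence


def schedule_partitioned_orders(orders: Sequence[Sequence[int]], p: Sequence[float],
                                w_order: Sequence[float]) -> List[int]:
    """1|part|sum w_L C_L: merge each order into one job, apply Smith's rule, then expand."""
    size = [sum(p[j] for j in L) for L in orders]  # processing time of each merged job
    ranked = sorted(range(len(orders)), key=lambda k: w_order[k] / size[k], reverse=True)
    return [j for k in ranked for j in orders[k]]  # jobs of each order kept consecutive


def order_cost(seq: Sequence[int], orders: Sequence[Sequence[int]], p: Sequence[float],
               w_order: Sequence[float]) -> float:
    """sum_L w_L C_L for a single-machine job sequence with no idle time."""
    C, t = {}, 0.0
    for j in seq:
        t += p[j]
        C[j] = t
    return sum(wL * max(C[j] for j in L) for L, wL in zip(orders, w_order))


orders, p, w_order = [[0, 1], [2], [3, 4]], [2, 1, 2, 1, 3], [1, 3, 2]
seq = schedule_partitioned_orders(orders, p, w_order)
best = min(order_cost(s, orders, p, w_order) for s in permutations(range(len(p))))
print(seq, order_cost(seq, orders, p, w_order), best)  # [2, 3, 4, 0, 1] 27.0 27.0
```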

We now consider the more general problem $1||\sum w_L C_L$, where a job may belong to more than one order simultaneously. It can be shown that this problem is equivalent to $1|prec|\sum w_j C_j$ (see Section 2.5.1). We summarize this in the following theorem.

Theorem 1.2. There exists an α-approximation algorithm for $1|prec|\sum w_j C_j$ if and only if there exists one for $1||\sum w_L C_L$.

The problem with precedence constraints, $1|prec|\sum w_j C_j$, has received much attention since the 1960s. Lenstra and Rinnooy Kan [26] proved that this problem is strongly NP-hard, even when all weights or all processing times are unit.

On the other hand, several 2-approximation algorithms have been proposed. Hall, Schulz, Shmoys and Wein [21] gave an algorithm based on a linear relaxation, while Chudak and Hochbaum [6] proposed another 2-approximation algorithm based on a half-integral linear relaxation. In addition, Chekuri and Motwani [4], and Margot, Queyranne and Wang [32], independently developed a simple combinatorial 2-approximation algorithm. Furthermore, the results in [2, 12] imply that $1|prec|\sum w_j C_j$ is a special case of vertex cover.

Nothing was known about the hardness of approximating this problem until recently. Ambühl, Mastrolilli and Svensson [3] then showed that it admits no PTAS unless NP-hard problems can be solved in randomized subexponential time.

1.5.2

Parallel machines

In this section we discuss scheduling problems on parallel machines. Here the processing time of each job $j$ is given by $p_{ij} = p_j$, so it does not depend on the machine on which $j$ is processed.

Recall the previously defined problem of minimizing the makespan on parallel machines, $P||C_{\max}$. It consists of finding a schedule of a set of jobs $J$ on a set $M$ of $m$ parallel machines that minimizes the maximum completion time. Note that if the set $O$ in our problem $P||\sum w_L C_L$ contains only one order, the objective function becomes $\max_{j\in J} C_j = C_{\max}$. Therefore $P||C_{\max}$ is a special case of $P||\sum w_L C_L$, which in turn is a special case of our most general model, $R|r_{ij}|\sum w_L C_L$.

$P||C_{\max}$ is a classical problem in scheduling. It is easy to show that it is NP-hard even for $m = 2$, since the 2-partition problem can be reduced to it. On the other hand, as shown in Lemma 1.1, list scheduling is a 2-approximation algorithm. Moreover, Hochbaum and Shmoys [22] presented a PTAS for this problem (see also [42, Chapter 10]).
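The list-scheduling algorithm referred to above can be sketched as follows. Jobs are taken in the given order, and each one is placed on a currently least-loaded machine, kept in a heap. The function names are illustrative. The brute-force optimum only illustrates the factor-2 guarantee of Lemma 1.1 on one small instance.

```python
import heapq
from itertools import product


def list_scheduling(processing_times, m):
    """Greedy list scheduling: each job goes to a machine with the smallest current load."""
    loads = [(0, i) for i in range(m)]  # min-heap of (load, machine index)
    heapq.heapify(loads)
    for p in processing_times:
        load, i = heapq.heappop(loads)
        heapq.heappush(loads, (load + p, i))
    return max(load for load, _ in loads)


def optimal_makespan(processing_times, m):
    """Exact makespan by trying every assignment of jobs to machines (tiny instances only)."""
    best = float("inf")
    for assignment in product(range(m), repeat=len(processing_times)):
        loads = [0] * m
        for p, i in zip(processing_times, assignment):
            loads[i] += p
        best = min(best, max(loads))
    return best


jobs = [2, 3, 4, 6, 2, 2]
greedy, opt = list_scheduling(jobs, 3), optimal_makespan(jobs, 3)
print(greedy, opt, greedy <= 2 * opt)
```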


On the other hand, when each order in our model contains a single job, the problem becomes equivalent to minimizing the weighted sum of completion times, $\sum_{j\in J} w_j C_j$. Hence our problem also generalizes $P||\sum w_j C_j$. The study of this problem also goes back to the 1960s (see for example [9]). As with $P||C_{\max}$, the problem is NP-hard even when there are only two machines. A sequence of approximation algorithms was proposed for it, until Skutella and Woeginger [40] found a PTAS. Later, Afrati et al. [1] extended this result to the case with non-trivial release dates.

This raises the question of whether there exists a PTAS for $P|part|\sum w_L C_L$. Recall that, as discussed in Section 1.5.1, the slightly more general problem $P||\sum w_L C_L$ admits no PTAS unless NP-hard problems can be solved in randomized subexponential time. Although we do not know whether such a PTAS exists, Leung, Li, and Pinedo [28] (see also Yang and Posner [44]) presented a 2-approximation algorithm for this problem, which is the best known result to date.

1.5.3

Unrelated machines

In the most general case of unrelated machines, our problem also generalizes several classical scheduling problems. As before, if there is a single order and $r_{ij} = 0$, our problem becomes equivalent to $R||C_{\max}$. Lenstra, Shmoys and Tardos [27] gave a 2-approximation algorithm for $R||C_{\max}$. They also showed that no algorithm can guarantee a factor better than 3/2 unless P = NP. Hence the same hardness result holds for our more general problem $R||\sum w_L C_L$.

On the other hand, if orders are singletons and release dates are trivial, i.e. $r_{ij} = 0$, our problem becomes $R||\sum w_j C_j$. As in the makespan case, this problem is APX-hard [23] and therefore admits no PTAS unless P = NP. However, Schulz and Skutella [35] used a linear relaxation to design two algorithms: a $(3/2+\varepsilon)$-approximation when all release dates are zero, and a $(2+\varepsilon)$-approximation when release dates are non-trivial. Moreover, Skutella [38] refined this result using convex quadratic programming. He obtained a 3/2-approximation algorithm when $r_{ij} = 0$ and a 2-approximation algorithm with arbitrary release dates.

Finally, it is worth mentioning that our problem also generalizes the assembly line problem, $A||\sum w_j C_j$, which has received considerable attention recently (see e.g. [7, 8, 30]). An instance of this problem consists of a set $M$ of $m$ machines and a set of jobs $J$ with associated weights $w_j$. Each job $j \in J$ consists of $m$ parts. The $i$-th part of $j$ must be processed on the $i$-th machine, where it takes $p_{ij}$ units of time. The objective is to minimize the weighted sum of completion times, $\sum w_j C_j$. In this context, the completion time of a job is the time at which the last of its parts finishes processing.

To see that our problem generalizes the assembly line problem, it suffices to map each assembly-line job to an order of our problem, and its parts to the jobs belonging to that order. To ensure that the jobs of each order can only be processed on their respective machines, we assign them an infinite (or sufficiently large) processing time on all other machines.
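The correspondence can be written out directly. The following sketch assumes an assembly-line instance given as a matrix `p[j][i]` of part lengths and a list of weights `w`. It creates one order per assembly-line job and one order job per part, with processing time `p[j][i]` on machine `i` and a large value elsewhere. The concrete choice of that large value is our own assumption: we use $\sum_j w_j \cdot \sum_{j,i} p_{ij} + 1$. Provided all weights are at least 1, this exceeds the cost of the schedule that processes every part on its own machine back to back.

```python
def assembly_to_orders(p, w):
    """Map an assembly-line instance A||sum w_j C_j to an order-scheduling instance.

    p[j][i]: length of the i-th part of assembly job j (must run on machine i).
    w[j]:    weight of assembly job j.
    Returns (proc, orders, weights): proc[(j, i)][k] is the processing time of
    order job (j, i) on machine k, and orders[j] lists the jobs of order j.
    """
    m = len(p[0])
    # Hypothetical "sufficiently large" value (assumes every weight is >= 1).
    big = sum(w) * sum(sum(row) for row in p) + 1
    proc, orders = {}, {}
    for j, row in enumerate(p):
        orders[j] = []
        for i, length in enumerate(row):
            job = (j, i)  # the i-th part of assembly job j
            proc[job] = [length if k == i else big for k in range(m)]
            orders[j].append(job)
    return proc, orders, list(w)


proc, orders, weights = assembly_to_orders([[2, 1], [1, 3]], [1, 2])
print(orders[1], proc[(1, 1)])
```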

Besides proving that the assembly line problem is NP-hard, Chen and Hall [7] and Leung, Li, and Pinedo [30] independently gave a 2-approximation algorithm. It uses a linear program based on the so-called parallel inequalities (see also [33]).

1.6

Contributions of this work

In this thesis we develop approximation algorithms for $R|r_{ij}|\sum w_L C_L$ and some of its special cases. We now summarize each of the chapters.

1.6.1

Chapter 3: The power of preemption for $R||C_{\max}$

In this chapter we study the problem of minimizing the makespan on unrelated machines, $R||C_{\max}$. It is a special case of our more general problem $R||\sum w_L C_L$. The techniques developed in this chapter lay the groundwork for the approximation algorithms for the more general cases $R|r_{ij}|\sum w_L C_L$ and $R|r_{ij}, pmtn|\sum w_L C_L$.

We first review the result of Lawler and Labetoulle [25], which shows that $R|pmtn|C_{\max}$ can be solved in polynomial time. This result is based on a correspondence between preemptive schedules and solutions of a linear program. This linear program, which we call [LL] and describe below, uses assignment variables $x_{ij}$. Each $x_{ij}$ denotes the fraction of job $j$ that is processed on machine $i \in M$.


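[LL]

$$
\begin{aligned}
\min\quad & C\\
\text{s.t.}\quad & \sum_{i\in M} x_{ij} = 1 && \text{for all } j\in J, && (1.8)\\
& \sum_{j\in J} p_{ij}\,x_{ij} \le C && \text{for all } i\in M, && (1.9)\\
& \sum_{i\in M} p_{ij}\,x_{ij} \le C && \text{for all } j\in J, && (1.10)\\
& x_{ij} \ge 0 && \text{for all } i, j. && (1.11)
\end{aligned}
$$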

Clearly, every preemptive schedule induces a feasible solution of [LL]. Indeed, given a preemptive schedule, let $C$ be its makespan and let $x_{ij}$ be the fraction of job $j$ processed on machine $i$. Then Equation (1.8) holds since each job is completely processed. Equation (1.9) also holds, since no machine $i \in M$ can finish processing its jobs before time $\sum_j p_{ij} x_{ij}$. Similarly, Equation (1.10) is valid because no job $j$ can be processed on two machines simultaneously. Hence the left-hand side of this equation is a lower bound on the completion time of job $j$. The converse states that every solution of [LL] induces a preemptive schedule with makespan $C$. Proving it requires more work and uses bipartite matching techniques.
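Take a fractional assignment $x$ that satisfies (1.8) and (1.11). The smallest $C$ making $(x, C)$ feasible for [LL] is the maximum of the machine loads in (1.9) and the per-job totals in (1.10). The sketch below computes this value. The list-of-lists layout `p[i][j]`, `x[i][j]` is an assumption. Solving [LL] itself requires an LP solver and is not shown.

```python
def ll_value(p, x, tol=1e-9):
    """Smallest C such that (x, C) satisfies constraints (1.8)-(1.11) of [LL].

    p[i][j]: processing time of job j on machine i.
    x[i][j]: fraction of job j assigned to machine i.
    """
    m, n = len(p), len(p[0])
    for j in range(n):
        column = [x[i][j] for i in range(m)]
        # (1.8): job j is fully assigned; (1.11): fractions are nonnegative.
        if abs(sum(column) - 1) > tol or min(column) < -tol:
            raise ValueError(f"job {j} violates (1.8) or (1.11)")
    loads = [sum(p[i][j] * x[i][j] for j in range(n)) for i in range(m)]    # (1.9)
    lengths = [sum(p[i][j] * x[i][j] for i in range(m)) for j in range(n)]  # (1.10)
    return max(loads + lengths)


p = [[2, 4], [4, 2]]
print(ll_value(p, [[0.5, 0.5], [0.5, 0.5]]), ll_value(p, [[1, 0], [0, 1]]))
```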

Next we show how to round any solution of [LL] to a non-preemptive schedule, increasing the makespan by a factor of at most 4. [LL] gives a lower bound for $R||C_{\max}$, so combining the two yields a 4-approximation algorithm for $R||C_{\max}$. This does not improve on the 2-approximation given by Lenstra, Shmoys and Tardos [27] for this problem. Its advantage is that the rounding technique used is easy to generalize to our general problem $R|r_{ij}|\sum w_L C_L$. Given a solution $x$, $C$ of [LL], the rounding proceeds as follows.

1. We start by setting to zero the variables that assign part of a job to a machine on which it takes too long. More precisely, we define

$$
y_{ij} = \begin{cases} 0 & \text{if } p_{ij} > 2C,\\ x_{ij} & \text{otherwise.} \end{cases}
$$

Thus no job is partially assigned to a machine on which it would take more than $2C$ time units to process. However, jobs are no longer completely processed in the fractional solution $y$. To fix this, we rescale the variables by setting $x'_{ij} = y_{ij}/\sum_{k\in M} y_{kj}$, so that the new solution $x'$ satisfies (1.8). Thanks to Equation (1.10), no variable more than doubles in doing so. Indeed, if some fraction of job $j$ is discarded, then $2C\sum_{i:\,p_{ij}>2C} x_{ij} < \sum_{i\in M} p_{ij}x_{ij} \le C$. Hence less than half of each job is discarded.

2. Finally, we apply a well-known result of Shmoys and Tardos [37] to the solution $x'$. It is summarized in the following theorem.

Theorem 1.3 (Shmoys and Tardos [37]). Suppose we are given a non-negative fractional solution of the system

$$
\begin{aligned}
& \sum_{j\in J}\sum_{i\in M} c_{ij}\,x_{ij} \le C, && && (1.12)\\
& \sum_{i\in M} x_{ij} = 1 && \text{for all } j\in J. && (1.13)
\end{aligned}
$$

Then there exists an integral solution $\hat x_{ij}\in\{0,1\}$ that satisfies (1.12) and (1.13), and moreover

$$
\begin{aligned}
& x_{ij} = 0 \implies \hat x_{ij} = 0 && \text{for all } i\in M,\ j\in J, && (1.14)\\
& \sum_{j\in J} p_{ij}\,\hat x_{ij} \le \sum_{j\in J} p_{ij}\,x_{ij} + \max\{p_{ij} : x_{ij} > 0\} && \text{for all } i\in M.
\end{aligned}
$$

Furthermore, such an integral solution can be found in polynomial time.

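The algorithm just described yields a non-preemptive schedule with makespan at most $4C$, where $C$ is the makespan of the fractional solution given by $x$. Indeed, after step 1 every machine has fractional load at most $2C$, and every job assigned to it satisfies $p_{ij} \le 2C$. By Theorem 1.3, the final load of each machine is therefore at most $2C + 2C = 4C$.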
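Step 1 of the rounding can be sketched as follows. It assumes the same `p[i][j]`, `x[i][j]` layout and a pair $(x, C)$ that is feasible for [LL]. Step 2, the Shmoys-Tardos rounding of Theorem 1.3, relies on bipartite matching and is not implemented here. The example checks that no variable more than doubles, as argued in step 1.

```python
def filter_and_rescale(p, x, C):
    """Step 1: drop assignments with p[i][j] > 2C, then rescale so (1.8) holds again."""
    m, n = len(p), len(p[0])
    y = [[0.0 if p[i][j] > 2 * C else x[i][j] for j in range(n)] for i in range(m)]
    x_new = [[0.0] * n for _ in range(m)]
    for j in range(n):
        kept = sum(y[i][j] for i in range(m))  # exceeds 1/2 whenever (1.10) holds
        for i in range(m):
            x_new[i][j] = y[i][j] / kept
    return x_new


p = [[1, 10], [9, 2]]
x = [[0.8, 0.1], [0.2, 0.9]]
C = 4  # machine loads and job lengths under x are at most 3.6, so (x, C) is feasible
x_new = filter_and_rescale(p, x, C)
print(x_new)
```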

We conclude Chapter 3 by showing that the integrality gap of [LL] is exactly 4. This implies that the rounding technique just described is best possible for this relaxation. To this end, we construct a family of instances of $R||C_{\max}$, $\{I_\beta\}_{\beta<4}$. Let $C_\beta^{INT}$ denote the optimal makespan with non-preemptive jobs and $C_\beta$ the optimal value of [LL]. Then $C_\beta^{INT}/C_\beta \ge \beta$ for all $\beta < 4$.


1.6.2

Chapter 4: Approximation algorithms for minimizing $\sum w_L C_L$ on unrelated machines

In this chapter we present approximation algorithms for the general case $R|r_{ij}|\sum w_L C_L$, as well as for its preemptive version $R|r_{ij}, pmtn|\sum w_L C_L$. Most of the techniques presented in this chapter are generalizations of the methods shown in Chapter 3.

First we present a $(4+\varepsilon)$-approximation algorithm for $R|r_{ij}, pmtn|\sum w_L C_L$. To this end we consider a time-indexed linear program whose variables represent the fraction of each job processed in each (discrete) time interval on each machine. Dyer and Wolsey [13] originally introduced this type of linear relaxation for $1|r_j|\sum_j w_j C_j$. It was later extended by Schulz and Skutella [35], who used it to obtain a $(3/2+\varepsilon)$-approximation algorithm for $R||\sum w_j C_j$ and a $(2+\varepsilon)$-approximation algorithm for $R|r_{ij}|\sum w_j C_j$.

The linear relaxation considers a time horizon $T$ that is large enough to upper-bound the makespan of any reasonable schedule, for example $T = \max_{i\in M,\,k\in J}\{r_{ik} + \sum_{j\in J} p_{ij}\}$. We then divide the time horizon into exponentially growing intervals, so that there are only $O(\log_{1+\varepsilon} T)$ intervals, a number polynomial in the input size. To this end, let $\varepsilon$ be a fixed parameter and let $q$ be the smallest integer such that $(1+\varepsilon)^{q-1} \ge T$. We then consider the intervals

$$
[0, 1],\ (1, 1+\varepsilon],\ (1+\varepsilon, (1+\varepsilon)^2],\ \ldots,\ ((1+\varepsilon)^{q-2}, (1+\varepsilon)^{q-1}].
$$

To simplify notation, we define $\tau_0 = 0$ and $\tau_\ell = (1+\varepsilon)^{\ell-1}$ for each $\ell = 1,\ldots,q$. Thus the $\ell$-th interval is $(\tau_{\ell-1}, \tau_\ell]$.
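The interval endpoints can be generated as below. This sketch follows the definitions above: $\tau_0 = 0$, $\tau_\ell = (1+\varepsilon)^{\ell-1}$, and $q$ is the smallest integer such that $(1+\varepsilon)^{q-1} \ge T$. The function name is ours. Each endpoint is computed as a power rather than by repeated multiplication, to limit rounding drift.

```python
def interval_endpoints(T, eps):
    """Return [tau_0, ..., tau_q] with tau_0 = 0 and tau_l = (1 + eps)**(l - 1),
    where q is the smallest integer such that (1 + eps)**(q - 1) >= T."""
    taus = [0.0, 1.0]  # tau_0 = 0 and tau_1 = 1, so the first interval is [0, 1]
    while taus[-1] < T:
        taus.append((1 + eps) ** (len(taus) - 1))  # next index l = len(taus)
    return taus


taus = interval_endpoints(10, 1.0)
intervals = list(zip(taus, taus[1:]))  # the l-th interval is (tau_{l-1}, tau_l]
print(taus, len(intervals))
```

With $T = 10$ and $\varepsilon = 1$ this gives $q = 5$ intervals. In general, the number of intervals grows like $\log_{1+\varepsilon} T$.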

Given a preemptive schedule, let $y_{ji\ell}$ be the fraction of job $j$ processed on machine $i$ in the $\ell$-th interval. Then $p_{ij}\,y_{ji\ell}$ is the amount of time job $j$ uses on machine $i$ in the $\ell$-th interval. Consider the following linear program.


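[DW]

$$
\begin{aligned}
\min\quad & \sum_{L\in O} w_L C_L\\
\text{s.t.}\quad & \sum_{i\in M}\sum_{\ell=1}^{q} y_{ji\ell} = 1 && \text{for all } j\in J, && (1.15)\\
& \sum_{j\in J} p_{ij}\,y_{ji\ell} \le \tau_\ell - \tau_{\ell-1} && \text{for all } \ell = 1,\ldots,q \text{ and } i\in M, && (1.16)\\
& \sum_{i\in M} p_{ij}\,y_{ji\ell} \le \tau_\ell - \tau_{\ell-1} && \text{for all } \ell = 1,\ldots,q \text{ and } j\in J, && (1.17)\\
& \sum_{i\in M}\Big(y_{ji1} + \sum_{\ell=2}^{q}\tau_{\ell-1}\,y_{ji\ell}\Big) \le C_L && \text{for all } L\in O,\ j\in L, && (1.18)\\
& y_{ji\ell} = 0 && \text{for all } j, i, \ell \text{ such that } r_{ij} > \tau_\ell, && (1.19)\\
& y_{ji\ell} \ge 0 && \text{for all } i, j, \ell. && (1.20)
\end{aligned}
$$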

It is easy to verify that this linear program gives a lower bound for our problem $R|r_{ij}, pmtn|\sum w_L C_L$. Indeed, Equation (1.15) ensures that each job is completely processed. Equation (1.16) must also hold, since in each interval $\ell$ and on each machine $i$ the total available time is at most $\tau_\ell - \tau_{\ell-1}$. Similarly, Equation (1.17) holds because no job can be processed on two machines simultaneously. Hence, in each interval $\ell$, the total time that can be used to process a job is at most the length of the interval.

To see that Equation (1.18) is valid, note that $p_{ij} \ge 1$, and therefore $C_L \ge 1$ for all $L \in O$. Also note that $C_L \ge \tau_{\ell-1}$ for all $L$, $j \in L$, $i$, $\ell$ such that $y_{ji\ell} > 0$. Hence the left-hand side of Equation (1.18) is a convex combination of values no larger than $C_L$. Finally, Equation (1.19) is valid since no part of a job can be assigned, on any machine, to an interval that ends before its release date.
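To make constraint (1.18) concrete, the following sketch evaluates its left-hand side for a single job $j$. Fractions in the first interval are charged 1, and fractions in interval $\ell \ge 2$ are charged $\tau_{\ell-1}$. The dictionary `y_job[(i, l)]`, holding $y_{ji\ell}$ for one fixed job, is a hypothetical layout.

```python
def dw_lhs(y_job, taus):
    """Left-hand side of constraint (1.18) of [DW] for one job j.

    y_job: dict (machine i, interval l) -> y_{j i l}, with intervals numbered 1..q.
    taus:  [tau_0, ..., tau_q].
    """
    total = 0.0
    for (i, l), frac in y_job.items():
        # Interval 1 contributes y_{j i 1}; interval l >= 2 contributes tau_{l-1} * y_{j i l}.
        total += frac if l == 1 else taus[l - 1] * frac
    return total


taus = [0, 1, 2, 4, 8, 16]
y_job = {(0, 1): 0.25, (1, 3): 0.5, (0, 4): 0.25}
print(dw_lhs(y_job, taus))  # any feasible C_L for an order containing j is at least this
```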

To obtain a $(4+\varepsilon)$-approximation, we first solve [DW], obtaining a solution $y^*, \{C_L^*\}_{L\in O}$. We then proceed as in the rounding of Chapter 3, setting to zero all variables that assign a job $j \in L$ to an interval starting after $2C_L^*$. Next, we rescale the variables to ensure that all jobs are completely processed. It is easy to see that in doing so each variable at most doubles. Finally, Equations (1.16) and (1.17) allow us to apply the technique of Lawler and Labetoulle [25] on each time interval $(\tau_{\ell-1}, \tau_\ell]$, which ensures that no job is processed on two machines at the same time. This yields a schedule in which no job $j \in L$ is processed after time $4(1+\varepsilon)C_L^*$. With this, we can prove the following theorem.
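The truncation step can be sketched for one job $j \in L$ as follows. It uses the same hypothetical dictionary layout `y_job[(i, l)]` and assumes $C_L^*$ satisfies (1.18) for this job. Every dropped interval has $\tau_{\ell-1} > 2C_L^*$, so the dropped mass $\delta$ satisfies $2C_L^*\,\delta < C_L^*$, i.e. $\delta < 1/2$. This is why each remaining variable at most doubles after rescaling.

```python
def truncate_and_rescale(y_job, taus, C_star):
    """Drop fractions of job j in intervals (tau_{l-1}, tau_l] with tau_{l-1} > 2 * C_star,
    then rescale the rest so that the job is fully processed again, as in (1.15)."""
    kept = {key: frac for key, frac in y_job.items() if taus[key[1] - 1] <= 2 * C_star}
    mass = sum(kept.values())  # exceeds 1/2 when (1.18) holds with C_L = C_star
    return {key: frac / mass for key, frac in kept.items()}


taus = [0, 1, 2, 4, 8, 16]
y_job = {(0, 1): 0.5, (1, 2): 0.25, (0, 5): 0.25}
# Left-hand side of (1.18): 0.5 + 1 * 0.25 + 8 * 0.25 = 2.75, so C_star = 2.75 is feasible.
rounded = truncate_and_rescale(y_job, taus, 2.75)
print(rounded)
```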

Theorem 1.4. For every $\varepsilon > 0$ there exists a $(4+\varepsilon)$-approximation algorithm for $R|r_{ij}, pmtn|\sum w_L C_L$.

Next we present the first constant-factor approximation algorithm for the non-preemptive case $R|r_{ij}|\sum w_L C_L$. Our algorithm is based on a time-indexed linear program proposed by Hall, Schulz, Shmoys, and Wein [21], combined with the rounding technique developed in Chapter 3. The program's variables indicate on which machine and in which interval each job finishes processing.

As before, we consider a time horizon $T$ larger than the makespan of any reasonable schedule, for example $T = \max_{i\in M,\,k\in J}\{r_{ik} + \sum_{j\in J} p_{ij}\}$. We also divide the time horizon into intervals that grow exponentially by a factor of 3/2:

$$
[1, 1],\ (1, 3/2],\ (3/2, (3/2)^2],\ \ldots,\ ((3/2)^{q-2}, (3/2)^{q-1}].
$$

To simplify notation, we define $\tau_0 = 1$ and $\tau_\ell = (3/2)^{\ell-1}$ for all $\ell = 1,\ldots,q$. Thus the $\ell$-th interval is $(\tau_{\ell-1}, \tau_\ell]$.

Given a schedule, we define $y_{ji\ell}$ to be one if and only if job $j$ finishes processing on machine $i$ in the $\ell$-th interval. With this in mind, we consider the following linear program.


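[HSSW]

$$
\begin{aligned}
\min\quad & \sum_{L\in O} w_L C_L\\
\text{s.t.}\quad & \sum_{i\in M}\sum_{\ell=1}^{q} y_{ji\ell} = 1 && \text{for all } j\in J, && (1.21)\\
& \sum_{s=1}^{\ell}\sum_{j\in J} p_{ij}\,y_{jis} \le \tau_\ell && \text{for all } i\in M \text{ and } \ell = 1,\ldots,q, && (1.22)\\
& \sum_{i\in M}\sum_{\ell=1}^{q} \tau_{\ell-1}\,y_{ji\ell} \le C_L && \text{for all } L\in O,\ j\in L, && (1.23)\\
& y_{ji\ell} = 0 && \text{for all } i, \ell, j \text{ such that } p_{ij} + r_{ij} > \tau_\ell, && (1.24)\\
& y_{ji\ell} \ge 0 && \text{for all } i, \ell, j. && (1.25)
\end{aligned}
$$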

To see that [HSSW] is a relaxation of our problem, consider an arbitrary non-preemptive schedule. Define $y_{ji\ell} = 1$ if and only if job $j$ finishes processing on machine $i$ in the $\ell$-th interval. Then Equation (1.21) holds, since each job finishes in exactly one interval and on exactly one machine. The left-hand side of (1.22) is the total processing time, on machine $i$, of the jobs that finish within $[0, \tau_\ell]$, and therefore the inequality holds. The double sum in inequality (1.23) equals $\tau_{\ell-1}$, where $\ell$ is the interval in which job $j$ completes. It is thus at most $C_j$, and hence bounded above by $C_L$ if $j \in L$.

Rule (1.24) fixes some variables to zero before solving the LP. This is valid because if $p_{ij} + r_{ij} > \tau_\ell$, then job $j$ cannot finish processing on machine $i$ by time $\tau_\ell$. Hence $y_{ji\ell}$ is zero in any schedule.

To obtain an approximate solution of our problem, we first solve [HSSW] to optimality, calling the solution $y^*, \{C_L^*\}_{L\in O}$. Next, we set to zero all variables that assign a job $j \in L$ to an interval later than $\tfrac{3}{2}C_L^*$.¹ We then rescale so that the fractional solution fully assigns every job. One can show that in doing so no variable increases by more than a factor of 3. We then apply Theorem 1.3, treating each interval-machine pair of our variables as a machine of the theorem. This gives an integral assignment of jobs to interval-machine pairs. Thanks to Equations (1.24) and (1.14), applying the rounding of Theorem 1.3 worsens the solution by at most a constant factor.

We conclude the algorithm by scheduling the jobs greedily as follows. On each machine, for $\ell = 1,\ldots,q$, we process all jobs assigned to the $\ell$-th interval as early as possible. If more than one job is assigned to the same interval, they are processed in arbitrary order. One can prove that with this algorithm every job $j \in L$ finishes before $\tfrac{27}{2}C_L^*$. We obtain the following result.

¹ The number 3/2 is chosen so that the final algorithm attains the best possible approximation factor.
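The final greedy step can be sketched as follows. It assumes the rounded assignment is a list of `(job, machine, interval)` triples, together with processing times `p[(job, machine)]` and release dates `r[(job, machine)]`; these data structures are hypothetical. On each machine, jobs are taken interval by interval, with ties broken here by job name. Each job starts as early as possible, i.e. at the later of the machine's current finishing time and the job's release date.

```python
from collections import defaultdict


def greedy_schedule(assignment, p, r):
    """Process jobs on each machine in order of their assigned interval, as early as possible.

    assignment: list of (job, machine, interval) triples from the integral rounding.
    p, r: dicts (job, machine) -> processing time / release date.
    Returns a dict job -> completion time.
    """
    per_machine = defaultdict(list)
    for job, machine, interval in assignment:
        per_machine[machine].append((interval, job))
    completion = {}
    for machine, items in per_machine.items():
        t = 0  # time at which the machine becomes free
        for interval, job in sorted(items):  # by interval, ties broken by job name
            start = max(t, r[(job, machine)])
            t = start + p[(job, machine)]
            completion[job] = t
    return completion


assignment = [("a", 0, 2), ("b", 0, 1), ("c", 1, 3)]
p = {("a", 0): 2, ("b", 0): 1, ("c", 1): 3}
r = {("a", 0): 0, ("b", 0): 2, ("c", 1): 1}
print(greedy_schedule(assignment, p, r))
```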

Theorem 1.5. There exists a 27/2-approximation algorithm for $R|r_{ij}|\sum w_L C_L$.

1.6.3

Chapter 5: A PTAS for minimizing $\sum w_L C_L$ on parallel machines

In this chapter we design a PTAS for some restricted versions of $P|part|\sum w_L C_L$. We assume a constant number of machines, a constant number of jobs per order, or a constant number of orders. We first describe the case where the number of jobs per order is bounded by a constant $K$. We then explain why this implies the existence of PTASes for the other cases. The results in this chapter closely follow the PTAS for $P|r_j|\sum w_j C_j$ developed by Afrati et al. [1].

As is usual in the design of a PTAS, the general idea is to add structure to the solution. We modify the instance so that the cost of the optimal solution worsens by at most a factor $(1+\varepsilon)$. Moreover, by applying several modifications to the optimal solution of this new instance, we prove that there exists a near-optimal solution satisfying several additional properties. The structure given by these properties allows us to find this solution by exhaustive search or dynamic programming. Each modification we apply to the optimal solution loses only a factor $(1+\varepsilon)$ in cost, so we can apply a constant number of them. This yields a solution within a factor $(1+\varepsilon)^{O(1)}$ of the optimal cost. Hence, by choosing $\varepsilon$ small enough, we can approximate within a factor arbitrarily close to 1.

As in Chapters 3 and 4, we divide the time horizon into exponentially growing intervals. For each integer $t$, we denote by $I_t$ the interval $[(1+\varepsilon)^t, (1+\varepsilon)^{t+1})$, and call $|I_t|$ its size, i.e. $|I_t| = \varepsilon(1+\varepsilon)^t$.
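The following sketch locates the interval $I_t$ that contains a time $x > 0$ and computes $|I_t| = \varepsilon(1+\varepsilon)^t$. The function names are ours. Because the index is computed with logarithms, times extremely close to an endpoint could be misclassified by floating-point rounding.

```python
import math


def interval_of(x, eps):
    """Index t such that x lies in I_t = [(1+eps)**t, (1+eps)**(t+1)), for x > 0."""
    return math.floor(math.log(x) / math.log(1 + eps))


def interval_size(t, eps):
    """|I_t| = (1+eps)**(t+1) - (1+eps)**t = eps * (1+eps)**t."""
    return eps * (1 + eps) ** t


eps = 0.5
t = interval_of(3.0, eps)  # 3.0 lies in [2.25, 3.375)
growth = interval_size(t + 1, eps) - interval_size(t, eps)  # equals eps * |I_t|
print(t, interval_size(t, eps), growth)
```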

One of the main techniques we use is “stretching”, which consists of stretching the time axis by a factor $(1+\varepsilon)$. Clearly, this worsens the solution by at most a factor $(1+\varepsilon)$. The two basic stretching techniques are:

1. Stretch Completion Times: This procedure delays each job so that the completion time of job $j$ becomes $C_j' = (1+\varepsilon)C_j$ in the new schedule. It is easy to verify that this procedure creates $\varepsilon p_j$ units of idle time before each job $j$.

2. Stretch Intervals: The goal of this procedure is to create idle time in every interval, except in those completely covered by a single job. As before, it consists of shifting jobs to the next interval. More precisely, suppose job $j$ finishes in $I_t$ and occupies $d_j$ units of time in $I_t$. We move $j$ to $I_{t+1}$ by shifting it by exactly $|I_t|$ units of time, so that it occupies $d_j$ units of time in $I_{t+1}$. Since $C_j \ge (1+\varepsilon)^t$, the new completion time is $C_j + |I_t| \le (1+\varepsilon)C_j$. Therefore the total cost of the solution increases by at most a factor $(1+\varepsilon)$.

Note that if $j$ is initially processed in $I_t$ for $d_j$ units of time, then after the shift it is processed in $I_{t+1}$ for at most $d_j$ units of time. $I_{t+1}$ has $\varepsilon|I_t| = \varepsilon^2(1+\varepsilon)^t$ more units of time than $I_t$, so at least that much idle time is created in $I_{t+1}$. Furthermore, we can assume that this idle time is consecutive within each interval. Indeed, this can be achieved by moving every job scheduled entirely within an interval as far left as possible.
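Here is a small numerical check of the first stretching procedure. It assumes a single machine whose schedule is a list of `(p_j, C_j)` pairs in processing order, a hypothetical layout. After setting $C_j' = (1+\varepsilon)C_j$, the gap between job $j$ and its predecessor $k$ is at least $\varepsilon p_j$. The reason is that $C_k \le C_j - p_j$ implies $(1+\varepsilon)C_k \le (1+\varepsilon)C_j - (1+\varepsilon)p_j$.

```python
def stretch_completion_times(schedule, eps):
    """Return the stretched schedule [(p_j, (1+eps)*C_j)] and the idle time before each job."""
    stretched = [(p, (1 + eps) * c) for p, c in schedule]
    idle, prev_end = [], 0.0
    for p, c in stretched:
        start = c - p
        idle.append(start - prev_end)  # idle time right before this job
        prev_end = c
    return stretched, idle


schedule = [(2, 2), (3, 5), (1, 7)]  # (p_j, C_j); the third job already had one idle unit
eps = 0.5
stretched, idle = stretch_completion_times(schedule, eps)
print(idle)
```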

Before giving a general description of the algorithm, we present a result (Lemma 1.7) that ensures the existence of a $(1+\varepsilon)$-approximate solution in which no order spans more than $O(1)$ intervals. To this end, we first show the following basic property, stated for the more general case of unrelated machines.

Lemma 1.6. For every instance of $R|part|\sum w_L C_L$ there exists an optimal solution such that:

1. For each order $L \in O$ and each machine $i = 1,\ldots,m$, all jobs of $L$ assigned to machine $i$ are processed consecutively.

2. The sequence in which the orders are processed is the same on every machine.

Lemma 1.7. Let $s := \lceil\log(1+1/\varepsilon)\rceil$. Then there exists a $(1+\varepsilon)$-approximate schedule in which each order is completely processed within at most $s+1$ consecutive intervals.

We now describe the general idea of the PTAS. We divide the time horizon into blocks of $s+1 = \lceil\log(1+1/\varepsilon)\rceil+1$ intervals, and denote by $B_\ell$ the block $[(1+\varepsilon)^{\ell(s+1)}, (1+\varepsilon)^{(\ell+1)(s+1)})$. Lemma 1.7 suggests optimizing each block separately and then combining the solutions of the blocks into a global solution.

Some orders may cross from one block to the next, so it will be necessary to perturb the “shape” of the blocks. To this end we introduce the concept of a “frontier”. The “outgoing frontier” of a block $B_\ell$ is a vector with $m$ entries. Its $i$-th coordinate is the completion time of the last job processed on machine $i$ among the jobs of orders that start processing in $B_\ell$. The “incoming frontier” of a block is the outgoing frontier of the previous block. Given a block, an incoming frontier and an outgoing frontier, we say that an order is processed within block $B_\ell$ if two conditions hold on every machine: all jobs of that order start after the incoming frontier, and all of them finish before the outgoing frontier.

Assume for the moment that we know how to compute a $(1+\varepsilon)$-approximate solution for a given subset of orders $V \subseteq O$ within a block $B_\ell$, with incoming frontier $F'$ and outgoing frontier $F$. Let $W(\ell, F', F, V)$ be the cost of this solution, i.e. its weighted sum of order completion times.

Let $\mathcal F_\ell$ be the set of possible incoming frontiers of block $B_\ell$. Using dynamic programming, we can fill a table $T(\ell, F, U)$. Each entry contains the cost of a near-optimal solution for the subset of orders $U \subseteq O$ scheduled in block $B_\ell$ or earlier, respecting the outgoing frontier $F$ of $B_\ell$. To compute this quantity we can use the following recursive formula:

$$
T(\ell+1, F, U) = \min_{F'\in\mathcal F_{\ell+1},\ V\subseteq U} \big\{T(\ell, F', V) + W(\ell+1, F', F, U\setminus V)\big\}.
$$

Unfortunately, the table $T$ does not have a polynomial number of entries; it is not even finite. Hence it is necessary to reduce its size in the same way as in [1]. With this in mind, the algorithm is as follows.

Algorithm: PTAS-DP

1. Localization: In this step we bound the time period in which each order can be processed. We add extra structure to the instance by defining a release date $r_L$ for each order $L$. The release dates are chosen so that there exists a $(1+\varepsilon)$-approximate solution in which each order starts after $r_L$ and finishes within a constant number of intervals after $r_L$. This plays a crucial role in the next step.

2. Polynomial representation of order subsets: The goal of this step is to reduce the number of order subsets that the dynamic program needs to consider. To this end, for every $\ell$ we define a polynomial-size collection $\Theta_\ell \subseteq 2^O$ of subsets of orders. It contains the candidates for the set of orders processed in $B_\ell$ or earlier in some near-optimal solution.

3. Polynomial representation of frontiers: In this step we reduce the number of frontiers that the dynamic program needs to consider. For each $\ell$, we find a polynomial-size set $\hat{\mathcal F}_\ell \subset \mathcal F_\ell$ such that, in a near-optimal solution, the frontier between blocks $B_{\ell-1}$ and $B_\ell$ belongs to $\hat{\mathcal F}_\ell$.

4. Dynamic programming: For all $\ell$, $F \in \hat{\mathcal F}_{\ell+1}$ and $U \in \Theta_\ell$, we compute

$$
T(\ell, F, U) = \min_{F'\in\hat{\mathcal F}_\ell,\ V\subseteq U,\ V\in\Theta_{\ell-1}} \big\{T(\ell-1, F', V) + W(\ell, F', F, U\setminus V)\big\}.
$$

Clearly, it is not necessary to compute $W(\ell, F', F, U\setminus V)$ exactly. A $(1+\varepsilon)$-approximation of this value that moves the frontier by at most a factor $(1+\varepsilon)$ suffices. To compute it, we partition the orders into small and large ones. For large orders we use enumeration, essentially trying every possible schedule, while small orders are scheduled greedily.
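Step 4 can be illustrated with a toy version of its recursion. In this sketch, the collections $\hat{\mathcal F}_\ell$ and $\Theta_\ell$ are replaced by small explicit lists (all subsets of orders are used in place of $\Theta_\ell$), and $W$ is supplied as a black-box function. A fixed initial frontier for block 0 is also assumed. All of these are hypothetical stand-ins for the constructions of steps 1 to 3. The code fills $T(\ell, F, U)$ block by block as in the formula; it makes no claim about how the actual algorithm computes $W$. Enumerating all subsets is exponential, which is exactly what steps 2 and 3 are meant to avoid.

```python
from itertools import combinations


def subsets(orders):
    """All subsets of a collection of orders, as frozensets."""
    items = sorted(orders)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def fill_table(num_blocks, frontiers, orders, W, initial_frontier):
    """Fill T[(l, F, U)] following the recursion of step 4.

    frontiers[l]: candidate incoming frontiers of block l (outgoing ones of block l-1),
                  for l = 1, ..., num_blocks.
    W(l, F_in, F_out, V): cost of scheduling the orders V inside block l (black box).
    """
    T = {}
    for F in frontiers[1]:  # block 0 starts from a fixed initial frontier
        for U in subsets(orders):
            T[(0, F, U)] = W(0, initial_frontier, F, U)
    for l in range(1, num_blocks):
        for F in frontiers[l + 1]:
            for U in subsets(orders):
                T[(l, F, U)] = min(
                    T[(l - 1, F_prev, V)] + W(l, F_prev, F, U - V)
                    for F_prev in frontiers[l]
                    for V in subsets(U)
                )
    return T


weight = {"A": 2, "B": 1}
toy_W = lambda l, F_in, F_out, V: (l + 1) * sum(weight[o] for o in V)  # later blocks cost more
T = fill_table(3, {1: [0], 2: [0], 3: [0]}, weight, toy_W, 0)
print(T[(2, 0, frozenset(weight))])
```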

One of the main difficulties of this approach is that every modification applied to the optimal solution must preserve the properties given by Lemma 1.6. This is necessary in order to describe the interaction between consecutive blocks using only the concept of frontier. Otherwise, some job of an order that starts in block $B_\ell$ could be processed after a job of an order that starts in block $B_{\ell+1}$. This would increase the complexity of the algorithm, since the dynamic program would have to take this interaction into account. This is the main reason why our result does not directly generalize to non-trivial release dates: in that case Lemma 1.6 does not hold.

By applying these ideas carefully, one can derive the following theorems.

Theorem 1.8. Algorithm PTAS-DP is a polynomial-time approximation scheme for $P|part|\sum w_L C_L$ when the number of jobs per order is bounded by a constant $K$.

Theorem 1.9. Algorithm PTAS-DP is a polynomial-time approximation scheme for $Pm|part|\sum w_L C_L$.

Theorem 1.10. Algorithm PTAS-DP is a polynomial-time approximation scheme for $P|part|\sum w_L C_L$ when the number of orders is constant.

1.7

Conclusions

In this thesis we studied scheduling problems whose objective is to minimize the weighted sum of order completion times. In Chapter 3 we began with the special case of minimizing the makespan on unrelated machines. We showed how a simple rounding technique transforms a preemptive solution into one in which no job is preempted, while increasing the makespan by at most a factor of 4. We then proved that this result is best possible by constructing a family of instances that come arbitrarily close to this bound.

In Chapter 4 we presented approximation algorithms for $R|r_{ij}|\sum w_L C_L$ and its preemptive version $R|r_{ij}, pmtn|\sum w_L C_L$. Both algorithms are based on a rounding technique very similar to the one developed in Chapter 3 for minimizing the makespan. Moreover, each algorithm is the first constant-factor approximation for its problem. However, several questions remain open. First, we can ask whether the rounding techniques used in each algorithm can be improved. At first sight, the step that seems most amenable to improvement is the one that sets to zero the values of $y$ assigning a job to a very late interval. This is not a proof, but in Chapter 3 we showed that a very similar technique yields a rounding that cannot be improved.

Recall that the best known hardness result for approximating $R||\sum w_L C_L$ comes from the fact that it is NP-hard to approximate $R||C_{\max}$ within a factor better than 3/2. The algorithm given in this thesis guarantees a factor of 27/2, so it would be interesting to narrow this gap. Given the generality of our model, this may be easier with a reduction designed specifically for our problem, showing that it is NP-hard to approximate within some factor $\alpha > 3/2$.

In Chapter 5 we gave a PTAS for $P|part|\sum w_L C_L$ when the number of jobs per order, the number of orders, or the number of machines is constant. This generalizes several previously known PTASes, such as those for $P||C_{\max}$ and $P||\sum w_j C_j$. However, it would be interesting to determine whether the more general case $P|part|\sum w_L C_L$ is APX-hard.

Finally, another possible direction for continuing this research is to consider the online version of our problem. In this variant orders arrive over time, and no information about an order is known before its release date. In online problems we are interested in comparing the cost of our solution with that of the optimal solution for the case in which all the information is known from time 0. With this goal, the notion of $\alpha$-points (see for example [18, 5, 35, 10]) has proven useful for the problem of minimizing the sum of weighted completion times of jobs, and therefore it would be interesting to study this technique for our more general setting in the presence of orders.
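For readers unfamiliar with the notion, here is a minimal sketch under the standard definition: in a preemptive schedule, the $\alpha$-point of a job $j$ is the first time at which an $\alpha$-fraction of its processing time has been completed, so that $\alpha = 1$ recovers the completion time $C_j$. The schedule representation (a list of uninterrupted pieces) and the function name `alpha_points` are illustrative assumptions, not taken from the works cited above.

```python
from typing import Dict, List, Tuple

# A preemptive schedule as a list of pieces (job, start, end): the job is
# processed without interruption during [start, end). Pieces of the same job
# never overlap in time, whether they run on one machine or on several.
Piece = Tuple[str, float, float]


def alpha_points(pieces: List[Piece], p: Dict[str, float],
                 alpha: float) -> Dict[str, float]:
    """For 0 < alpha <= 1, return for each job the first time at which an
    alpha-fraction of its processing time p[j] has been completed."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    done = {j: 0.0 for j in p}      # processing received by each job so far
    points: Dict[str, float] = {}
    for job, start, end in sorted(pieces, key=lambda piece: piece[1]):
        target = alpha * p[job]
        if job not in points and done[job] + (end - start) >= target:
            # The alpha-fraction is reached inside this piece.
            points[job] = start + (target - done[job])
        done[job] += end - start
    return points


pieces = [("a", 0, 1), ("b", 1, 3), ("a", 3, 6)]
p = {"a": 4, "b": 2}
print(alpha_points(pieces, p, 0.5))  # {'b': 2.0, 'a': 4.0}
print(alpha_points(pieces, p, 1.0))  # {'b': 3.0, 'a': 6.0}, the completion times
```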


Chapter 2

Introduction

2.1 Machine scheduling problems

Machine scheduling problems deal with the allocation of scarce resources over time. They arise in many very different situations, for example, a construction site where the boss has to assign jobs to each worker, a CPU that must process tasks requested by several users, or a factory’s production lines that must manufacture products for its clients.

In general, an instance of a scheduling problem contains a set $J$ of $n$ jobs and a set $M$ of $m$ machines on which the jobs in $J$ must be processed. A solution of the problem is a schedule, i.e., an assignment that specifies when, and on which machines $i \in M$, each job $j \in J$ is executed.

To classify scheduling problems we have to look at the different characteristics or attributes of the machines and jobs, as well as at the objective function to be optimized.

One of these is the machine environment, i.e., the characteristics of the machines in our model.

For example, we can consider identical or parallel machines, where each machine is an identical copy of all the others. In this setting each job $j \in J$ takes a time $p_j$ to be processed, independently of the machine on which it is scheduled. On the other hand, we can consider a more general situation where each machine $i \in M$ has a different speed $s_i$, so that the time it takes to process job $j$ on it is inversely proportional to the speed of the machine.
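The difference between the two environments can be made concrete by writing out the processing time of every job on every machine. The sketch below assumes jobs and machines are indexed from 0 and uses hypothetical helper names: with identical machines all rows of the matrix coincide, while with speeds each row is the base vector scaled by $1/s_i$. An arbitrary matrix, with no relation between rows, corresponds to the unrelated machines described later in this section.

```python
from typing import List

# Processing times are returned as a matrix indexed [i][j]:
# row i is machine i, column j is job j.


def identical_machines(p: List[float], m: int) -> List[List[float]]:
    """Identical machines: job j takes p[j] on every one of the m machines."""
    return [list(p) for _ in range(m)]


def related_machines(p: List[float], speeds: List[float]) -> List[List[float]]:
    """Related machines: job j takes p[j] / s_i on machine i with speed s_i."""
    return [[pj / s for pj in p] for s in speeds]


# Two jobs of length 2 and 4 on two machines, the second one twice as fast.
print(identical_machines([2, 4], 2))     # [[2, 4], [2, 4]]
print(related_machines([2, 4], [1, 2]))  # [[2.0, 4.0], [1.0, 2.0]]
```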

Additionally, scheduling problems can be classified according to the characteristics of the jobs.

To name a few, our model may consider nonpreemptive jobs, i.e., jobs that cannot be interrupted until they are completed, or preemptive jobs, i.e., jobs that can be interrupted at any time and later resumed on the same or on a different machine.


Also, we can classify problems according to the objective function. One of the most natural objective functions is to minimize the makespan, i.e., to minimize the point in time at which the last job finishes. More precisely, if for a given schedule we define the completion time of a job $j \in J$, denoted $C_j$, as the time at which job $j$ finishes processing, then the objective is to minimize $C_{\max} := \max_{j \in J} C_j$. Another classical example consists of minimizing the number of late jobs. In this setting, each job $j \in J$ has a deadline $d_j$, and the objective is to minimize the number of jobs that finish processing after their deadlines. Besides these, there are many other objective functions that can be considered.
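Both objectives can be evaluated directly from the completion times. The sketch below assumes a simple nonpreemptive schedule on identical machines in which each machine runs its jobs back to back from time 0 in a given order; the data layout and function names are hypothetical.

```python
from typing import Dict, List


def completion_times(sequences: Dict[int, List[str]],
                     p: Dict[str, float]) -> Dict[str, float]:
    """Completion times C_j when each machine runs its jobs back to back,
    in the given order, starting at time 0 (identical machines, no idle time)."""
    C: Dict[str, float] = {}
    for jobs in sequences.values():
        t = 0.0
        for j in jobs:
            t += p[j]
            C[j] = t
    return C


def makespan(C: Dict[str, float]) -> float:
    """C_max = max_j C_j: the time at which the last job finishes."""
    return max(C.values())


def number_of_late_jobs(C: Dict[str, float], d: Dict[str, float]) -> int:
    """Number of jobs that finish after their deadline d_j."""
    return sum(1 for j, Cj in C.items() if Cj > d[j])


p = {"a": 3, "b": 1, "c": 2}
C = completion_times({0: ["a"], 1: ["b", "c"]}, p)       # {'a': 3.0, 'b': 1.0, 'c': 3.0}
print(makespan(C))                                        # 3.0
print(number_of_late_jobs(C, {"a": 2, "b": 1, "c": 4}))   # 1 (only job a is late)
```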

A large number of scheduling problems can be obtained by combining the characteristics just mentioned. Therefore, it becomes necessary to introduce a standard notation for all these different problems. For this purpose, Graham, Lawler, Lenstra and Rinnooy Kan [20] introduced the “three-field notation”, where a scheduling problem is represented by an expression of the form $\alpha|\beta|\gamma$. Here, the first field $\alpha$ denotes the machine environment, the second field $\beta$ contains extra constraints or characteristics of the problem, and the last field $\gamma$ denotes the objective function. In the following we describe the most common values for $\alpha$, $\beta$ and $\gamma$.

1. Values of $\alpha$.

• $\alpha = 1$: Single Machine. There is only one machine at our disposal to process the jobs. Each job $j \in J$ takes a given time $p_j$ to be processed.

• $\alpha = P$: Parallel Machines. We have a number $m$ of identical or parallel machines to process the jobs. Then, the processing time of job $j$ is given by $p_j$, independently of the machine on which job $j$ is processed.

• $\alpha = Q$: Related Machines. In this setting each machine $i \in M$ has an associated speed $s_i$. Then, the processing time of job $j \in J$ on machine $i \in M$ equals $p_j/s_i$, where $p_j$ is the time it takes to process $j$ on a machine of speed 1.

• $\alpha = R$: Unrelated Machines. In this more general setting there is no a priori relation between the processing times of jobs on different machines, i.e., the processing time of job $j \in J$ on machine $i \in M$ is an arbitrary number denoted by $p_{ij}$.

Additionally, in the case that $\alpha = P$, $Q$ or $R$, we can add the letter $m$ at the end of the field to indicate that the number of machines $m$ is constant. For example, if under a parallel machine environment the number of machines is constant, then $\alpha = Pm$. The value of $m$ can also be specified, e.g., $\alpha = P2$ means that there are exactly 2 parallel machines to process the jobs.


2. Values of $\beta$.

• $\beta = pmtn$: Preemptive Jobs. In this setting we consider jobs that can be preempted, i.e., jobs that can be interrupted and resumed later on the same or on a different machine.

• $\beta = r_j$: Release Dates. Each job $j \in J$ has an associated release date $r_j$, such that $j$ cannot start processing before that time.

• $\beta = prec$: Precedence Constraints. Consider a partial order relation $(J, \prec)$ over the jobs. If for some pair of jobs $j$ and $k$ we have $j \prec k$, then $k$ must start processing after the completion time of job $j$.

3. Values of $\gamma$.

• $\gamma = C_{\max}$: Makespan. The objective is to minimize the makespan $C_{\max} := \max_{j \in J} C_j$.

• $\gamma = \sum C_j$: Average Completion Times. We must minimize the average of the completion times, or equivalently $\sum_{j \in J} C_j$, since the two differ only by the constant factor $1/n$.

• $\gamma = \sum w_j C_j$: Sum of Weighted Completion Times. Consider a weight $w_j$ for each $j \in J$. Then, the objective is to minimize the sum of weighted completion times $\sum_{j \in J} w_j C_j$.
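A minimal sketch of the last two objectives, assuming a single machine ($\alpha = 1$) that processes the jobs back to back from time 0 in a given order; the function name is hypothetical. Setting all weights to 1 recovers $\sum_j C_j$ from $\sum_j w_j C_j$, and dividing by the fixed number $n$ of jobs gives the average, so both rank schedules in the same way.

```python
from typing import Dict, List


def sum_weighted_completion(order: List[str], p: Dict[str, float],
                            w: Dict[str, float]) -> float:
    """sum_j w_j C_j for one machine processing `order` back to back from time 0."""
    t, total = 0.0, 0.0
    for j in order:
        t += p[j]          # t is now C_j, the time at which job j finishes
        total += w[j] * t
    return total


p = {"a": 3, "b": 1}
w = {"a": 1, "b": 2}
unit = {j: 1 for j in p}

print(sum_weighted_completion(["a", "b"], p, w))  # 3*1 + 4*2 = 11.0
print(sum_weighted_completion(["b", "a"], p, w))  # 1*2 + 4*1 = 6.0
# With unit weights we get sum_j C_j; the average is that sum divided by n = 2.
print(sum_weighted_completion(["b", "a"], p, unit) / len(p))  # (1 + 4) / 2 = 2.5
```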

It is worth noting that by default we consider nonpreemptive jobs. In other words, if the field $\beta$ is empty, then jobs cannot be preempted. For example, $R||\sum w_j C_j$ denotes the problem of finding a nonpreemptive schedule of a set of jobs $J$ on a set of machines $M$, where each job $j \in J$ takes $p_{ij}$ units of time to process on machine $i \in M$, minimizing $\sum_{j \in J} w_j C_j$. As a second example, $R|r_j|\sum w_j C_j$ denotes the same problem as before, with the only difference that each job $j$ can only start processing after $r_j$. Also, note that the field $\beta$ can take more than one value. For example, $R|prec, r_j|\sum w_j C_j$ is the same as the last problem, but with precedence constraints added.
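To see how the fields combine, the sketch below checks whether a given nonpreemptive schedule is feasible for $R|prec, r_j|\sum w_j C_j$ and, if so, returns its objective value. The data layout (processing times keyed by (machine, job) pairs, a schedule mapping each job to a machine and a start time) and the name `evaluate` are assumptions for illustration only. Passing an empty precedence list and zero release dates turns it into a checker for $R||\sum w_j C_j$.

```python
from typing import Dict, List, Tuple


def evaluate(schedule: Dict[str, Tuple[int, float]],
             p: Dict[Tuple[int, str], float],
             r: Dict[str, float],
             w: Dict[str, float],
             prec: List[Tuple[str, str]]) -> float:
    """Check a nonpreemptive schedule for R|prec, r_j|sum w_j C_j and return
    its objective value. schedule[j] = (i, S_j) means job j runs on machine i
    during [S_j, S_j + p[i, j]). Raises ValueError if the schedule is infeasible."""
    C = {j: S + p[i, j] for j, (i, S) in schedule.items()}
    # Release dates: job j cannot start before r_j.
    for j, (_, S) in schedule.items():
        if S < r[j]:
            raise ValueError(f"job {j} starts before its release date")
    # Precedence constraints: if j precedes k, then k starts after C_j.
    for j, k in prec:
        if schedule[k][1] < C[j]:
            raise ValueError(f"job {k} starts before job {j} completes")
    # Each machine processes at most one job at a time.
    by_machine: Dict[int, List[str]] = {}
    for j, (i, _) in schedule.items():
        by_machine.setdefault(i, []).append(j)
    for jobs in by_machine.values():
        jobs.sort(key=lambda job: schedule[job][1])
        for a, b in zip(jobs, jobs[1:]):
            if schedule[b][1] < C[a]:
                raise ValueError(f"jobs {a} and {b} overlap on the same machine")
    return sum(w[j] * C[j] for j in schedule)


p = {(0, "a"): 2, (1, "a"): 5, (0, "b"): 3, (1, "b"): 1, (0, "c"): 1, (1, "c"): 4}
r = {"a": 0, "b": 1, "c": 0}
w = {"a": 1, "b": 1, "c": 2}
schedule = {"a": (0, 0), "b": (1, 1), "c": (0, 2)}
print(evaluate(schedule, p, r, w, prec=[("a", "c")]))  # 1*2 + 1*2 + 2*3 = 10
```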

Among all scheduling problems, most nontrivial ones are NP-hard, and therefore there is no polynomial-time algorithm to solve them unless P = NP. In particular, as we will show later, one of the fundamental problems in scheduling, $P2||C_{\max}$, can easily be proven NP-hard. In the following section we describe some general techniques to address NP-hard optimization problems and some basic applications to scheduling.


2.2 Approximation algorithms

The introduction of the class of NP-complete problems by Cook [11] and Karp [24], and independently by Levin [31], raised major challenges about how these problems could be tackled, given their apparent intractability. One option that has been widely studied is the use of algorithms that solve the problem exactly but have no polynomial upper bound on their running time. This kind of algorithm can be useful for small to medium instances, or for instances with some special structure where the algorithm runs fast enough in practice. Nevertheless, there may be other instances where the algorithm takes exponential time to finish, becoming impractical. The most common of these approaches are Branch & Bound, Branch & Cut and Integer Programming techniques.
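As a deliberately naive illustration of an exact method with no polynomial bound on its running time, the sketch below solves $P2||C_{\max}$ (the problem mentioned at the end of the previous section) by plain enumeration, not by Branch & Bound or Integer Programming. It tries all $2^n$ assignments of jobs to the two machines, so it is only practical for small $n$; the function name is hypothetical.

```python
from itertools import product
from typing import List, Tuple


def p2_cmax_exact(p: List[float]) -> Tuple[float, Tuple[int, ...]]:
    """Solve P2||C_max exactly by trying all 2^n assignments of jobs to the
    two machines. Without release dates, a machine's jobs can run back to back,
    so the makespan of an assignment is simply its larger machine load."""
    best, best_assignment = float("inf"), ()
    for assignment in product((0, 1), repeat=len(p)):
        loads = [0.0, 0.0]
        for pj, i in zip(p, assignment):
            loads[i] += pj
        if max(loads) < best:
            best, best_assignment = max(loads), assignment
    return best, best_assignment


print(p2_cmax_exact([3, 1, 1, 2, 2, 1])[0])  # 5.0: the total load 10 splits evenly
```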

For the special case of NP-hard optimization problems, another alternative is to use algorithms that run in polynomial time but may not solve the problem to optimality. Among this kind of algorithms, a particularly interesting class is that of “approximation algorithms”, i.e., algorithms whose solution is guaranteed to be, in some sense, close to the optimal solution.

More formally, let us consider a minimization problem $P$ with cost function $c$. For $\alpha \ge 1$, we say that a solution $S$ to $P$ is an $\alpha$-approximation if its cost $c(S)$ is within a factor $\alpha$ of the optimal cost $OPT$, i.e., if

$$c(S) \le \alpha \cdot OPT. \qquad (2.1)$$
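As an illustration of definition (2.1), the sketch below uses Graham's list scheduling for $P||C_{\max}$, a classical greedy algorithm known to always return a $(2 - 1/m)$-approximation; it is chosen here only as an example, and the helper names are hypothetical. The optimum is computed by brute force, so the check is feasible only for tiny instances. On the instance shown, the ratio $c(S)/OPT = 3/2$ exactly attains the bound $2 - 1/m$ for $m = 2$.

```python
import heapq
from itertools import product
from typing import List


def list_scheduling(p: List[float], m: int) -> float:
    """Graham's list scheduling for P||C_max: take the jobs in the given order and
    put each one on a currently least-loaded machine. Returns the makespan."""
    loads = [0.0] * m                            # a valid min-heap of machine loads
    for pj in p:
        heapq.heapreplace(loads, loads[0] + pj)  # add p_j to the smallest load
    return max(loads)


def optimum(p: List[float], m: int) -> float:
    """OPT for P||C_max by brute force over all m^n assignments (tiny instances only)."""
    best = float("inf")
    for assignment in product(range(m), repeat=len(p)):
        loads = [0.0] * m
        for pj, i in zip(p, assignment):
            loads[i] += pj
        best = min(best, max(loads))
    return best


def is_alpha_approximation(cost: float, opt: float, alpha: float) -> bool:
    """Condition (2.1): c(S) <= alpha * OPT."""
    return cost <= alpha * opt


p, m = [1, 1, 2], 2
cost, opt = list_scheduling(p, m), optimum(p, m)
print(cost, opt)                                     # 3.0 2.0
print(is_alpha_approximation(cost, opt, 2 - 1 / m))  # True (3.0 <= 1.5 * 2.0)
```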

Now, consider a polynomial-time algorithm $A$ whose output on instance $I$ is $A(I)$. Then,