Chapter 4 Internal Memory - Computer Systems & Reliable SOC LAB

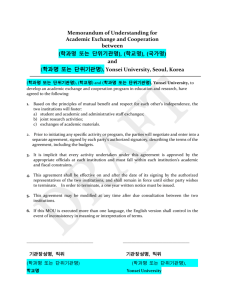

Chapter 4 Internal Memory
Yonsei University

Contents
• Computer Memory System Overview
• Semiconductor Main Memory
• Cache Memory
• Pentium II and PowerPC
• Advanced DRAM Organization

Computer Memory System Overview

Key Characteristics
• Location: processor, internal (main), external (secondary)
• Capacity: word size, number of words
• Unit of transfer: word, block
• Access method: sequential, direct, random, associative
• Performance: access time, cycle time, transfer rate
• Physical type: semiconductor, magnetic, optical, magneto-optical
• Physical characteristics: volatile/nonvolatile, erasable/nonerasable
• Organization

Characteristics of Memory Systems
• Location
  – Processor
  – Internal (main)
  – External (secondary)

Capacity
• Internal memory capacity is expressed in bytes or words
• External memory capacity is expressed in bytes

Unit of Transfer
• Internal memory
  – The unit of transfer equals the number of data lines into and out of the memory module
  – It is often equal to the word length
• Word
  – The natural unit of organization of memory
  – Its size is typically equal to the number of bits used to represent a number and to the instruction length
• Addressable unit
  – In many systems, the addressable unit is the word
  – Some systems allow addressing at the byte level
• Unit of transfer
  – The number of bits read out of or written into memory at a time

Methods of Accessing
• Sequential access
  – Start at the beginning and read through in order
  – Access time depends on the location of the data and the previous location
  – e.g. tape
• Direct access
  – Individual blocks have a unique address
  – Access is by jumping to the vicinity plus a sequential search
  – Access time depends on the location and the previous location
  – e.g. disk
• Random access
  – Individual addresses identify locations exactly
  – Access time is independent of location or previous access
  – e.g. RAM
• Associative access
  – Data is located by a comparison with the contents of a portion of the store
  – Access time is independent of location or previous access
  – e.g. cache

Performance
• Access time
  – For random-access memory: the time it takes to perform a read or write operation
  – For non-random-access memory: the time it takes to position the read-write mechanism at the desired location
• Memory cycle time
  – Applies to random-access memory
  – Consists of the access time plus any additional time required before the next access can start
• Transfer rate
  – The rate at which data can be transferred into or out of a memory unit; for random-access memory it equals 1/(cycle time)
  – For non-random-access memory:

$$T_N = T_A + \frac{N}{R}$$

where $T_N$ = average time to read or write $N$ bits, $T_A$ = average access time, $N$ = number of bits, and $R$ = transfer rate in bits per second (bps).

Physical Types
• Semiconductor: RAM
• Magnetic: disk and tape
• Optical: CD and DVD
• Others: bubble, hologram

Physical Characteristics
• Decay
• Volatility
• Erasability
• Power consumption

Organization
• The physical arrangement of bits to form words
• Not always obvious
• e.g. interleaved

The Bottom Line
• How much? (capacity)
• How fast? (time is money)
• How expensive?
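As a quick numeric check of the transfer-time relation $T_N = T_A + N/R$ from the Performance slide, here is a minimal Python sketch. The device parameters in the usage block (10 ms average access time, 1 Mbit/s transfer rate, a 4096-byte read) are hypothetical values chosen only for illustration and do not come from the chapter.

```python
def transfer_time(n_bits: float, avg_access_time_s: float, rate_bps: float) -> float:
    """Average time to read or write n_bits on non-random-access memory.

    Implements T_N = T_A + N / R, where
      T_A = average access time (seconds)
      N   = number of bits
      R   = transfer rate (bits per second)
    """
    if rate_bps <= 0:
        raise ValueError("transfer rate must be positive")
    if n_bits < 0 or avg_access_time_s < 0:
        raise ValueError("bit count and access time must be non-negative")
    return avg_access_time_s + n_bits / rate_bps


if __name__ == "__main__":
    # Hypothetical device: 10 ms average access time, 1 Mbit/s transfer rate.
    n = 4096 * 8  # one 4096-byte record, expressed in bits
    t = transfer_time(n, avg_access_time_s=10e-3, rate_bps=1e6)
    print(f"T_N = {t * 1e3:.3f} ms")
```

For this transfer size the $N/R$ term (about 32.8 ms) outweighs the 10 ms access time. For much smaller transfers, the fixed access time $T_A$ dominates.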

The Memory Hierarchy
• Relationships
  – Faster access time, greater cost per bit
  – Greater capacity, smaller cost per bit
  – Greater capacity, slower access time
• As one goes down the hierarchy, the following occur:
  – Decreasing cost per bit
  – Increasing capacity
  – Increasing access time
  – Decreasing frequency of access of the memory by the processor

[Figure: The Memory Hierarchy]

The Memory Hierarchy
• Registers
  – In the CPU
• Internal or main memory
  – May include one or more levels of cache
  – RAM
• External memory
  – Backing store

[Figure: Performance of a Two-Level Memory]

Hierarchy List
• Registers
• L1 cache
• L2 cache
• Main memory
• Disk cache
• Disk
• Optical
• Tape

Memory Hierarchy
• Locality of reference
  – During the course of the execution of a program, memory references tend to cluster

Memory Hierarchy
• Additional levels can be effectively added to the hierarchy in software
• A portion of main memory can be used as a buffer to hold data that is to be read out to disk
• Such a technique is sometimes referred to as a disk cache

So You Want Fast?
• It is possible to build a computer that uses only static RAM (see later)
• This would be very fast
• This would need no cache
  – How can you cache cache?
• This would cost a very large amount

Semiconductor Main Memory

Semiconductor Memory Types

| Memory type | Category | Erasure | Write mechanism | Volatility |
|---|---|---|---|---|
| Random-access memory (RAM) | Read-write memory | Electrically, byte level | Electrically | Volatile |
| Read-only memory (ROM) | Read-only memory | Not possible | Masks | Nonvolatile |
| Programmable ROM (PROM) | Read-only memory | Not possible | Electrically | Nonvolatile |
| Erasable PROM (EPROM) | Read-mostly memory | UV light, chip level | Electrically | Nonvolatile |
| Electrically Erasable PROM (EEPROM) | Read-mostly memory | Electrically, byte level | Electrically | Nonvolatile |
| Flash memory | Read-mostly memory | Electrically, block level | Electrically | Nonvolatile |

RAM
• Misnamed, since all semiconductor memory is random access
• Read/write
• Volatile
• Temporary storage
• Static or dynamic
  – DRAM requires periodic charge refreshing to maintain data storage

DRAM
• Bits stored as charge in capacitors
• Charges leak
• Needs refreshing even when powered
• Simpler construction
• Smaller per bit
• Less expensive
• Needs refresh circuits
• Slower
• Used for main memory

SRAM
• Bits stored as on/off switches
• No charges to leak
• No refreshing needed while powered
• More complex construction
• Larger per bit
• More expensive
• Does not need refresh circuits
• Faster
• Used for cache

Read-Only Memory (ROM)
• Permanent storage
• Applications
  – Microprogramming
  – Library subroutines
  – Systems programs (BIOS)
  – Function tables
• Problems
  – The data insertion step includes a relatively large fixed cost
  – There is no room for error: if one bit is wrong, the whole batch must be thrown out
• Written during manufacture
  – Very expensive for small runs

Programmable ROM (PROM)
• Nonvolatile and can be written only once
  – Writing is performed electrically and may be performed by a supplier or customer at a time later than the original chip fabrication
  – Special equipment is needed to program it

Read-"Mostly" Memory (RMM)
• Used where read operations are far more frequent than write operations but nonvolatile storage is required
• EPROM
• EEPROM
• Flash

EPROM (Erasable Programmable ROM)
• Read and written electrically
• Before a write operation, all storage cells must be erased by exposing the chip to ultraviolet (UV) light
• More expensive than PROM
• Has the advantage of multiple update capability

Electrically Erasable PROM (EEPROM)
• A read-mostly memory that can be written into at any time without erasing prior contents; only the byte or bytes addressed are updated
• Takes much longer to write than to read
• Advantage
  – Combines nonvolatility with the flexibility of being updatable in place using ordinary bus control, address, and data lines

Flash Memory
• Erased electrically
• The entire flash memory can be erased in one or a few seconds, which is faster than EPROM
• Possible to erase just blocks of memory
• Byte-level erasure is not possible
• Uses only one transistor per bit
• Achieves high density

[Figure: Memory Cell Operation]

Chip Logic
• A 16-Mbit chip can be organized as 1M words of 16 bits
• A bit-per-chip system has 16 1-Mbit chips, with bit 1 of each word in chip 1, and so on
• A 16-Mbit chip can be organized as a 2048 × 2048 × 4-bit array
  – Reduces the number of address pins
    • Multiplex the row address and the column address
    • 11 pins to address ($2^{11} = 2048$)
    • Adding one more pin doubles the range of both the row and the column addresses
    • So the memory size grows by a factor of 4

Refreshing
• Refresh circuit included on chip
• Disable chip
• Count through rows
• Read and write back
• Takes time
• Slows down apparent performance

[Figure: Typical 16-Mb DRAM (4M × 4)]

Chip Packaging
• Pins support the following signal lines
  – Address of the word being accessed: for 1M words, a total of 20 ($2^{20}$ = 1M) pins are needed (A0–A19)
  – Data to be read out, consisting of 8 lines (D0–D7)
  – Power supply to the chip (Vcc)
  – Ground pin (Vss)
  – Chip enable (CE) pin
  – Program voltage (Vpp), supplied during programming (write operations)

[Figure: Typical Memory Package]

Module Organization
• How a memory module consisting of 256K 8-bit words could be organized
  – For 256K words, an 18-bit address is needed, supplied to the module from some external source

Module Organization
• Possible organization of a memory consisting of 1M words of 8 bits each
  – Needs four columns of chips, each column containing 256K words

[Figure: 1-Mbyte Memory Organization]

Error Correction
• Hard failure
  – Permanent physical defect
  – The affected memory cell or cells cannot reliably store data: they become stuck at 0 or 1 or switch erratically between 0 and 1
  – Caused by harsh environmental abuse, manufacturing defects, and wear
• Soft error
  – Random, nondestructive event
  – No permanent damage to memory
  – Caused by power supply problems or alpha particles
• Errors of either kind can be detected, and corrected within the code's limits, using a Hamming error-correcting code

Error-Correcting Code
• When data are to be written into memory, a function f is performed on the data to produce a code
• Both the data and the code are stored
• If the data word is M bits and the code is K bits, the stored word is M + K bits

Error Correction
• When a stored word is read out, a new set of K code bits is generated from the M fetched data bits and compared with the fetched code bits
• The comparison yields one of three results
  – No errors are detected
    • The fetched data bits are sent out
  – An error is detected, and it is possible to correct it
    • The data bits plus the error-correction bits are fed into a corrector, which produces a corrected set of M bits to be sent out
  – An error is detected, but it is not possible to correct it
    • This condition is reported

[Figure: Hamming Error-Correcting Code]

Hamming Code
• Parity bits are added so that the total number of 1s in each circle (A, B, C) of the Venn diagram is even
• By checking the parity bits, discrepancies are found in circles A and C but not in circle B
• Only one of the seven compartments is in A and C but not B
• The error can therefore be corrected by changing that bit
• Syndrome word: the result of a bit-by-bit comparison of the newly generated and the fetched check bits
  – Each bit of the syndrome is 0 or 1 according to whether there is or is not a match in that bit position for the two inputs
• The syndrome word is K bits wide and has a range between 0 and $2^K - 1$. The value 0 means "no error", which leaves $2^K - 1$ values to identify the position of a single-bit error among the M + K bits:

$$2^K - 1 \ge M + K$$

Increase in Word Length with Error Correction

| Data bits | SEC check bits | SEC % increase | SEC-DED check bits | SEC-DED % increase |
|---|---|---|---|---|
| 8 | 4 | 50 | 5 | 62.5 |
| 16 | 5 | 31.25 | 6 | 37.5 |
| 32 | 6 | 18.75 | 7 | 21.875 |
| 64 | 7 | 10.94 | 8 | 12.5 |
| 128 | 8 | 6.25 | 9 | 7.03 |
| 256 | 9 | 3.52 | 10 | 3.91 |

Error Correction (8-bit data, 4-bit syndrome)
• Generate the 4-bit syndrome
  – If the syndrome contains all 0s, no error has been detected
  – If the syndrome contains one and only one bit set to 1, an error has occurred in one of the 4 check bits
    • No correction is needed
  – If the syndrome contains more than one bit set to 1, the numerical value of the syndrome indicates the position of the data bit in error
    • This data bit is inverted for correction

[Figure: Layout of Data Bits and Check Bits]

Layout of Data Bits and Check Bits
• To achieve these characteristics, the data and check bits are arranged into a 12-bit word as depicted
• The bit positions whose position numbers are powers of 2 (1, 2, 4, 8) are designated as check bits. Data bits M1–M8 occupy positions 3, 5, 6, 7, 9, 10, 11, 12

Error Correction

$$
\begin{aligned}
C_1 &= M_1 \oplus M_2 \oplus M_4 \oplus M_5 \oplus M_7 \\
C_2 &= M_1 \oplus M_3 \oplus M_4 \oplus M_6 \oplus M_7 \\
C_4 &= M_2 \oplus M_3 \oplus M_4 \oplus M_8 \\
C_8 &= M_5 \oplus M_6 \oplus M_7 \oplus M_8
\end{aligned}
$$

• Each check bit operates on every data bit whose position number contains a 1 in the same bit position as the position number of that check bit

Check Bit Generation
• The data and check bits are positioned properly in the 12-bit word
• When the position number of each data bit is written out in binary in columns, the 1s in each row indicate the data bits checked by the check bit for that row

[Figure: Check Bit Generation]

Error Correction
• Single-error-correcting (SEC) code
• Single-error-correcting, double-error-detecting (SEC-DED) code

[Figure: Hamming SEC-DED Code]

Error Correction Overhead
• IBM 30xx implementations use an 8-bit SEC-DED code for each 64 bits of data in main memory
  – Main memory is therefore about 12% larger than is apparent to the user (8/64 = 12.5%)
• The VAX computers use a 7-bit SEC-DED code for each 32 bits of memory, for a 22% overhead
• A number of contemporary DRAMs use 9 check bits for each 128 bits of data, for a 7% overhead

Cache Memory

Cache and Main Memory
• Small amount of fast memory
• Sits between normal main memory and the CPU
• May be located on the CPU chip or module

Cache Principle
• The CPU requests the contents of a memory location
• The cache is checked for this data
• If present, the word is delivered from the cache (fast)
• If not present, the block of main memory containing the word is read into the cache, and the word is then delivered to the processor
• A whole block is read in, not just one word, because of locality of reference: other words in the same block are likely to be referenced soon
• The cache includes tags to identify which block of main memory is in each cache slot

Cache Principle
• For mapping purposes, main memory ($2^n$ addressable words) is considered to consist of a number of fixed-length blocks of K words each
  – Number of blocks: $M = 2^n / K$
• The cache consists of C lines of K words each, and the number of lines is considerably less than the number of main memory blocks ($C \ll M$)
• Each line includes a tag that identifies which particular block is currently being stored

[Figure: Cache/Main-Memory Structure]

Cache Principle
• When a cache hit occurs
  – The data and address buffers are disabled, and communication is only between the processor and the cache, with no system bus traffic
• When a cache miss occurs
  – The desired address is loaded onto the system bus, and the data are returned through the data buffer to both the cache and the processor

[Figure: Cache Read Operation]

Elements of Cache Design
• Cache size
• Mapping function: direct, associative, set associative
• Replacement algorithm: least recently used (LRU), first in first out (FIFO), least frequently used (LFU), random
• Write policy: write through, write back, write once
• Line size
• Number of caches: single or two level; unified or split

Cache Size
• Small enough that the overall average cost per bit is close to that of main memory alone
• Large enough that the overall average access time is close to that of the cache alone
• The larger the cache, the larger the number of gates involved in addressing the cache
• As a result, large caches tend to be slightly slower than small ones
• Cache size is also limited by the available chip and board area
• It is impossible to arrive at a single "optimum" size because cache performance is very sensitive to the nature of the workload

Factors for Cache Size
• Cost
  – More cache is expensive
• Speed
  – More cache is faster (up to a point)
  – Checking the cache for data takes time

[Figure: Typical Cache Organization]

Mapping Function
• Needed to determine which main memory block currently occupies a cache line
• Three techniques: direct, associative, and set associative
• Running example used for all three
  – Cache of 64 KB
  – Cache block of 4 bytes, i.e. the cache is 16K ($2^{14}$) lines of 4 bytes
  – 16-MB main memory
  – 24-bit address ($2^{24}$ = 16M)

Direct Mapping
• Maps each block of main memory into only one possible cache line:

$$i = j \bmod m$$

where i = cache line number, j = main memory block number, and m = number of lines in the cache

[Figure: Direct Mapping Cache Organization]

Direct Mapping Cache Line Table

| Cache line | Main memory blocks assigned |
|---|---|
| 0 | 0, m, 2m, …, $2^s - m$ |
| 1 | 1, m + 1, 2m + 1, …, $2^s - m + 1$ |
| ⋮ | ⋮ |
| m − 1 | m − 1, 2m − 1, 3m − 1, …, $2^s - 1$ |

• The least significant w bits of an address identify a unique word or byte within a block
• The most significant s bits specify one of the $2^s$ memory blocks
• These s bits are split into a cache line field of r bits and a tag of s − r bits (most significant)
• The line field identifies one of the $m = 2^r$ lines of the cache

[Figure: Direct Mapping Example]

Direct Mapping Address Structure

| Tag (s − r) | Line or slot (r) | Word (w) |
|---|---|---|
| 8 bits | 14 bits | 2 bits |

• 24-bit address
• 2-bit word identifier (4-byte block)
• 22-bit block identifier
  – 8-bit tag (= 22 − 14)
  – 14-bit slot or line
• No two blocks that map to the same line have the same tag field
• The cache is checked by finding the line and comparing the tag

Direct Mapping
• Advantage
  – Simple and inexpensive to implement
• Disadvantage
  – There is a fixed cache location for any given block
  – If a program repeatedly references words from two different blocks that map into the same line, the blocks are continually swapped in the cache, and the hit ratio will be low

Associative Mapping
• A main memory block can be loaded into any line of the cache
• The tag field uniquely identifies a block of main memory
• To determine whether a block is in the cache, the cache control logic must simultaneously examine every line's tag for a match
• Cache searching gets expensive

[Figure: Fully Associative Cache Organization]

[Figure: Associative Mapping Example]

Associative Mapping Address Structure

| Tag | Word |
|---|---|
| 22 bits | 2 bits |

• A 22-bit tag is stored with each 32-bit block of data
• The tag field of the address is compared with the tag entries in the cache to check for a hit
• The least significant 2 bits of the address identify which byte is required from the 32-bit (4-byte) data block
• e.g.
  – Address FFFFFC: tag FFFFFC (shown in address form; as a 22-bit field its value is 3FFFFF), data 24682468, stored in cache line 3FFF

Associative Mapping
• The replacement algorithm is designed to maximize the hit ratio
• Advantage
  – Flexibility as to which block to replace when a new block is read into the cache
• Disadvantage
  – Complex circuitry is required to examine the tags of all cache lines in parallel

Set Associative Mapping
• A compromise that reduces the disadvantages of the direct and associative approaches

$$m = v \times k, \qquad i = j \bmod v$$

where i = cache set number, j = main memory block number, m = number of lines in the cache, v = number of sets, and k = number of lines in each set
• This is called k-way set associative mapping
  – With v = m and k = 1, the set associative technique reduces to direct mapping
  – With v = 1 and k = m, it reduces to associative mapping

Set Associative Mapping
• The cache is divided into a number of sets
• Each set contains a number of lines
• A given block maps to any line in a given set
  – e.g. block B can be in any line of set i
• e.g. 2 lines per set
  – 2-way associative mapping
  – A given block can be in one of 2 lines in only one set

[Figure: k-Way Set Associative Cache Organization]

Set Associative Mapping Address Structure

| Tag | Set | Word |
|---|---|---|
| 9 bits | 13 bits | 2 bits |

• Use the set field to determine which cache set to look in
• Compare the tag field to see whether there is a hit
• e.g. (each address is written as its 9-bit tag followed by its 15-bit set + word field)

| Address | Tag | Data | Set number |
|---|---|---|---|
| 1FF 7FFC | 1FF | 12345678 | 1FFF |
| 001 7FFC | 001 | 11223344 | 1FFF |

Set Associative Mapping Example
• 13-bit set number
• Blocks whose block numbers are equal modulo $2^{13}$ map to the same set
• Addresses 000000, 008000, …, FF8000 map to the same set

[Figure: Two-Way Set Associative Mapping Example]

Replacement Algorithms
• When a new block is brought into the cache, one of the existing blocks must be replaced
• Direct mapping
  – Because there is only one possible line for any particular block, there is no choice: the block in that line must be replaced
• Associative and set associative techniques
  – Need a replacement algorithm
• Least recently used (LRU)
  – Most effective
  – Replace the block in the set that has been in the cache longest with no reference to it
  – For a two-way set associative cache: when a line is referenced, its USE bit is set to 1 and the USE bit of the other line in that set is set to 0. The line whose USE bit is 0 is the one replaced

Replacement Algorithms
• First in first out (FIFO)
  – Replace the block that has been in the cache longest
• Least frequently used (LFU)
  – Replace the block that has experienced the fewest references
• Random
  – Pick a line at random, not based on usage

Write Policy
• A cache block must not be overwritten unless main memory is up to date
• If the old block in the cache has not been updated, it may be overwritten without first being written back
• When multiple processors are attached to the same bus and each processor has its own local cache
  – A word altered in one cache invalidates that word in the other caches
• Multiple CPUs may have individual caches
• I/O may address main memory directly
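The address structures above (direct 8/14/2, associative 22/2, two-way set associative 9/13/2) all assume the running example of a 24-bit address and 4-byte blocks. The minimal Python sketch below works under exactly those assumptions. It splits an address into its fields for each mapping and models one set of the two-way cache using the single-USE-bit LRU scheme from the Replacement Algorithms slide. The names `split_direct`, `split_associative`, `split_two_way`, and `TwoWaySet` are hypothetical. This is an illustration only, not a description of real cache hardware.

```python
from typing import List, Optional, Tuple

WORD_BITS = 2   # 4-byte blocks
LINE_BITS = 14  # direct mapping: 16K lines
SET_BITS = 13   # two-way set associative: 8K sets of 2 lines


def split_direct(addr: int) -> Tuple[int, int, int]:
    """Direct mapping: tag (8) | line (14) | word (2)."""
    word = addr & ((1 << WORD_BITS) - 1)
    line = (addr >> WORD_BITS) & ((1 << LINE_BITS) - 1)
    tag = addr >> (WORD_BITS + LINE_BITS)
    return tag, line, word


def split_associative(addr: int) -> Tuple[int, int]:
    """Associative mapping: tag (22) | word (2)."""
    return addr >> WORD_BITS, addr & ((1 << WORD_BITS) - 1)


def split_two_way(addr: int) -> Tuple[int, int, int]:
    """Two-way set associative mapping: tag (9) | set (13) | word (2)."""
    word = addr & ((1 << WORD_BITS) - 1)
    set_no = (addr >> WORD_BITS) & ((1 << SET_BITS) - 1)
    tag = addr >> (WORD_BITS + SET_BITS)
    return tag, set_no, word


class TwoWaySet:
    """One set of a two-way cache; one USE bit per line implements LRU."""

    def __init__(self) -> None:
        self.tags: List[Optional[int]] = [None, None]
        self.use = [0, 0]

    def _touch(self, i: int) -> None:
        # The referenced line gets USE = 1; the other line in the set gets USE = 0.
        self.use[i] = 1
        self.use[1 - i] = 0

    def access(self, tag: int) -> bool:
        """Return True on a hit; on a miss, load the tag and return False."""
        for i in (0, 1):
            if self.tags[i] == tag:
                self._touch(i)
                return True
        # Miss: fill an empty line if there is one, else replace the line with USE = 0.
        victim = self.tags.index(None) if None in self.tags else self.use.index(0)
        self.tags[victim] = tag
        self._touch(victim)
        return False


if __name__ == "__main__":
    # The two addresses from the set-associative example: 9-bit tag, then 15-bit set+word.
    a = (0x1FF << 15) | 0x7FFC  # 0xFFFFFC
    b = (0x001 << 15) | 0x7FFC  # 0x00FFFC
    print([hex(x) for x in split_two_way(a)], [hex(x) for x in split_two_way(b)])
    print([hex(x) for x in split_direct(a)], [hex(x) for x in split_direct(b)])
    s = TwoWaySet()
    print([s.access(t) for t in (0x1FF, 0x001, 0x1FF, 0x0AB, 0x001)])
```

Both example addresses land in set 1FFF with tags 1FF and 001, so one two-way set can hold both blocks at once. Under direct mapping the same two addresses both fall on line 3FFF (tags FF and 00) and would evict each other. That is the thrashing case described under the direct-mapping disadvantages.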

Write Through
• All writes go to main memory as well as to the cache
• Multiple CPUs can monitor main memory traffic to keep their local caches up to date
• Disadvantage
  – Generates substantial memory traffic and may create a bottleneck

Write Back
• Minimizes memory writes
• Updates are made only in the cache
• An UPDATE bit for the cache slot is set when an update occurs
• If a block is to be replaced, it is written back to main memory if and only if its UPDATE bit is set
• Other caches get out of sync
• I/O must access main memory through the cache
  – Requires complex circuitry and creates a potential bottleneck
• Roughly 15% of memory references are writes

Cache Coherency
• If data in one cache is altered, this invalidates the corresponding word in main memory and the same word in other caches
• Even if a write-through policy is used, the other caches may contain invalid data
• A system that prevents this problem is said to maintain cache coherency. Approaches include
  – Bus watching with write through
  – Hardware transparency
  – Noncachable memory

Bus Watching with Write Through
• Each cache controller monitors the address lines to detect write operations to memory by other bus masters
• If another master writes to a location in shared memory that also resides in its cache, the cache controller invalidates that cache entry
• This strategy depends on the use of a write-through policy by all cache controllers

Hardware Transparency
• Additional hardware is used to ensure that all updates to main memory via a cache are reflected in all caches
• If one processor modifies a word in its cache, this update is written to main memory
• Any matching words in other caches are similarly updated

Noncachable Memory
• Only a portion of main memory is shared by more than one processor, and this portion is designated as noncachable
• All accesses to shared memory are cache misses, because the shared memory is never copied into the cache
• Noncachable memory can be identified using chip-select logic or high-address bits

Line Size
• When a block of data is retrieved and placed in the cache, the desired word and some number of adjacent words are retrieved
• Two specific effects come into play
  – Larger blocks reduce the number of blocks that fit into a cache. Because each block fetch overwrites older cache contents, a small number of blocks results in data being overwritten shortly after it is fetched
  – As a block becomes larger, each additional word is farther from the requested word and therefore less likely to be needed in the near future

Line Size
• The relationship between block size and hit ratio is complex, depending on the locality characteristics of a particular program
• No definitive optimum value has been found
• A size of 2 to 8 words seems reasonably close to optimum

Number of Caches
• On-chip cache
  – Possible to have a cache on the same chip as the processor
  – Reduces the processor's external bus activity, speeds up execution times, and increases overall system performance
• Most contemporary designs include both on-chip and external caches
• Two-level cache
  – The internal cache is designated level 1 (L1) and the external cache level 2 (L2)

Number of Caches
• Reason for including an L2 cache
  – If there is no L2 cache and the processor makes an access request for a memory location not in the L1 cache, the processor must access DRAM or ROM memory across the bus
    • Poor performance because of slow bus speed and slow memory access time
  – If an L2 SRAM cache is used, the missing information can be retrieved quickly
  – The effect of using L2 depends on the hit rates in both the L1 and L2 caches

Number of Caches
• The cache can be split into two: one dedicated to instructions and one dedicated to data
• Potential advantages of a unified cache
  – For a given cache size, a unified cache has a higher hit rate than split caches because it automatically balances the load between instruction and data fetches
  – Only one cache needs to be designed and implemented

Number of Caches
• The trend toward split caches
  – Superscalar machines (Pentium II, PowerPC) emphasize parallel instruction execution and the prefetching of predicted future instructions
  – The key advantage of the split cache design is that it eliminates contention for the cache between the instruction fetch/decode unit and the execution unit
  – This is important in any design that relies on the pipelining of instructions

Pentium II and PowerPC

[Figure: Pentium II Block Diagram]

Structure of Pentium II Data Cache
• LRU replacement algorithm
• Write-back policy

Data Cache Consistency
• To provide cache consistency, the data cache supports the MESI protocol (modified/exclusive/shared/invalid)
• The data cache includes two status bits per tag, so that each line can be in one of four states
  – Modified
  – Exclusive
  – Shared
  – Invalid

MESI Cache Line States

| | M (Modified) | E (Exclusive) | S (Shared) | I (Invalid) |
|---|---|---|---|---|
| This cache line valid? | Yes | Yes | Yes | No |
| The memory copy is… | Out of date | Valid | Valid | – |
| Copies exist in other caches? | No | No | Maybe | Maybe |
| A write to this line… | Does not go to bus | Does not go to bus | Goes to bus and updates cache | Goes directly to bus |

Cache Control
• The internal cache is controlled by two bits
  – CD (cache disable)
  – NW (not write-through)
• Two Pentium II instructions are used to control the cache
  – INVD invalidates (flushes) the internal cache memory and signals the external cache to invalidate
  – WBINVD writes back and invalidates the internal cache, then writes back and invalidates the external cache

Pentium II Cache Operating Modes

| CD | NW | Cache fills | Write throughs | Invalidates |
|---|---|---|---|---|
| 0 | 0 | Enabled | Enabled | Enabled |
| 1 | 0 | Disabled | Enabled | Enabled |
| 1 | 1 | Disabled | Disabled | Disabled |

PowerPC Internal Caches

| Model | Size | Bytes/line | Organization |
|---|---|---|---|
| PowerPC 601 | 1 × 32 KB | 32 | 8-way set associative |
| PowerPC 603 | 2 × 8 KB | 32 | 2-way set associative |
| PowerPC 604 | 2 × 16 KB | 32 | 4-way set associative |
| PowerPC 620 | 2 × 32 KB | 64 | 8-way set associative |

[Figure: PowerPC G3 Block Diagram]

PowerPC Cache Organization
• The L1 caches are eight-way set associative and use a version of the MESI cache coherency protocol
• The L2 cache is a two-way set associative cache with 256 KB, 512 KB, or 1 MB of memory

Advanced DRAM Organization

Enhanced DRAM (EDRAM)
• Integrates a small SRAM cache onto a generic DRAM chip
• Refresh operations can be conducted in parallel with cache read operations, minimizing the time that the chip is unavailable due to refresh
• The read path from the row cache to the output port is independent of the write path from the I/O module to the sense amplifiers
• This enables a subsequent read access to the cache to be satisfied in parallel with the completion of the write operation

[Figure: EDRAM]

Cache DRAM (CDRAM)
• Includes a larger SRAM cache than EDRAM
• The SRAM on the CDRAM can be used in two ways
  – As a true cache, consisting of a number of 64-bit lines
    • The cache mode is effective for ordinary random access to memory
  – As a buffer to support the serial access of a block of data

Synchronous DRAM (SDRAM)
• Exchanges data with the processor synchronized to an external clock signal, running at the full speed of the processor/memory bus without imposing wait states
• With synchronous access, the DRAM moves data in and out under control of the system clock
• Employs burst mode
  – Eliminates the address setup time and the row and column line precharge time after the first access
  – In burst mode, a series of data bits can be clocked out rapidly after the first bit has been accessed
  – Useful when all the bits to be accessed are in sequence and in the same row of the array as the initial access

SDRAM
• Dual-bank architecture that improves opportunities for on-chip parallelism
• The mode register and its associated control logic are another key feature differentiating SDRAMs from conventional DRAMs
  – They provide a mechanism to customize the SDRAM to suit specific system needs
  – The mode register specifies the burst length
• SDRAM performs best when it is transferring large blocks of data serially

[Figure: SDRAM]

Rambus DRAM (RDRAM)
• A more revolutionary approach to the memory-bandwidth problem
• The chip exchanges data with the processor over 28 wires no more than 12 cm long
• The bus can address up to 320 RDRAM chips and is rated at 500 Mbps
• The special RDRAM bus delivers address and control information using an asynchronous block-oriented protocol
• An RDRAM gets a memory request over the high-speed bus

RamLink
• A memory interface with point-to-point connections arranged in a ring
  – Traffic on the ring is managed by a memory controller that sends messages to the DRAM chips
• Data is exchanged in the form of packets
• Request packets initiate memory transactions
• Strengths
  – Provides a scalable architecture that supports a small or large number of DRAMs
  – Does not dictate the internal DRAM structure

[Figure: RamLink Architecture]

[Figure: RamLink Packet Format]

Appendix 4A

Characteristics of Two-Level Memories

| | Main memory cache | Virtual memory (paging) | Disk cache |
|---|---|---|---|
| Typical access time ratios | 5/1 | 1000/1 | 1000/1 |
| Memory management system | Implemented by special hardware | Combination of hardware and system software | System software |
| Typical block size | 4 to 128 bytes | 64 to 4096 bytes | 64 to 4096 bytes |
| Access of processor to second level | Direct access | Indirect access | Indirect access |

Relative Dynamic Frequency

| Study | [HUCK83] | [KNUT71] | [PATT82] | [PATT82] | [TANE78] |
|---|---|---|---|---|---|
| Language | Pascal | FORTRAN | Pascal | C | SAL |
| Workload | Scientific | Student | System | System | System |
| Assign | 74 | 67 | 45 | 38 | 42 |
| Loop | 4 | 3 | 5 | 3 | 4 |
| Call | 1 | 3 | 15 | 12 | 12 |
| IF | 20 | 11 | 29 | 43 | 36 |
| GoTo | 2 | 9 | – | 3 | – |
| Other | – | 7 | 6 | 1 | 6 |

Locality
• Each call is represented by the line moving down and to the right
• Each return is represented by the line moving up and to the right
• A window with depth equal to 5 is defined
  – Only a sequence of calls and returns with a net movement of 6 in either direction causes the window to move

[Figure: The Call/Return Behavior of Programs]

Locality
• Spatial locality
  – The tendency of execution to involve a number of memory locations that are clustered
• Temporal locality
  – The tendency for a processor to access memory locations that have been used recently
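The window rule on the first Locality slide can be made concrete with a small simulation. This Python sketch assumes one reading of that rule: the window covers nesting levels `low` through `low + depth` and shifts only when the current nesting level leaves that range. With depth 5, a net run of 6 calls (or 6 returns) from the window's edge is what forces a move. The function name `count_window_moves` and the call/return traces are hypothetical, chosen only for illustration.

```python
from typing import Iterable


def count_window_moves(events: Iterable[str], depth: int = 5) -> int:
    """Count how often a fixed-depth window over call-nesting levels must move.

    Assumption: the window spans levels [low, low + depth]. A 'call' raises the
    nesting level by one and a 'return' lowers it by one. The window moves
    only when the level leaves the spanned range.
    """
    level = 0
    low = 0
    moves = 0
    for ev in events:
        if ev == "call":
            level += 1
        elif ev == "return":
            level -= 1
        else:
            raise ValueError(f"unknown event: {ev!r}")
        if level > low + depth:   # walked off the bottom edge (deeper nesting)
            low = level - depth
            moves += 1
        elif level < low:         # walked off the top edge (shallower nesting)
            low = level
            moves += 1
    return moves


if __name__ == "__main__":
    shallow = ["call", "call", "return", "call", "return", "return"] * 50
    deep = ["call"] * 20 + ["return"] * 20
    print(count_window_moves(shallow), count_window_moves(deep))
```

A long trace that oscillates within a few nesting levels never moves the window. This is the temporal locality that lets a small, fast store hold the active part of the call stack. Deep recursion forces repeated moves.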

[Figure: Locality of Reference for Web Pages]

Operation of Two-Level Memory

$$T_s = H \times T_1 + (1 - H) \times (T_1 + T_2) = T_1 + (1 - H) \times T_2$$

where
• $T_s$ = average (system) access time
• $T_1$ = access time of M1 (e.g., cache, disk cache)
• $T_2$ = access time of M2 (e.g., main memory, disk)
• H = hit ratio (fraction of time a reference is found in M1)

Performance

$$C_s = \frac{C_1 S_1 + C_2 S_2}{S_1 + S_2}$$

where
• $C_s$ = average cost per bit for the combined two-level memory
• $C_1$ = average cost per bit of upper-level memory M1
• $C_2$ = average cost per bit of lower-level memory M2
• $S_1$ = size of M1
• $S_2$ = size of M2

We would like $C_s \approx C_2$. Given $C_1 \gg C_2$, this requires $S_1 \ll S_2$.

[Figure: Memory Cost vs. Memory Size]

Performance
• Consider the quantity $T_1 / T_s$, which is referred to as the access efficiency:

$$\frac{T_1}{T_s} = \frac{1}{1 + (1 - H)\dfrac{T_2}{T_1}}$$

[Figure: Access Efficiency vs. Hit Ratio (curves for several values of T2/T1)]

[Figure: Hit Ratio vs. Memory Size]

Performance
• If there is strong locality, it is possible to achieve high values of hit ratio even with a relatively small upper-level memory
  – Small cache sizes will yield a hit ratio above 0.75 regardless of the size of main memory
  – A cache in the range of 1K to 128K words is generally adequate, whereas main memory is now typically in the multiple-megabyte range
• If only a relatively small upper-level memory is needed to achieve good performance, the average cost per bit of the two levels of memory will approach that of the cheaper memory
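The three relations above can be evaluated together with a short Python sketch: average access time $T_s$, average cost per bit $C_s$, and access efficiency $T_1/T_s$. The parameter values in the usage block are hypothetical, chosen only to show the trends plotted in the figures, and are not taken from the chapter: $T_1$ = 0.01 µs, $T_2$ = 0.1 µs, a 100:1 cost ratio, and a 1:1000 size ratio.

```python
def _check_hit_ratio(h: float) -> None:
    if not 0.0 <= h <= 1.0:
        raise ValueError("hit ratio must lie in [0, 1]")


def average_access_time(h: float, t1: float, t2: float) -> float:
    """T_s = H*T1 + (1 - H)*(T1 + T2); a miss pays for both levels."""
    _check_hit_ratio(h)
    return h * t1 + (1.0 - h) * (t1 + t2)


def average_cost_per_bit(c1: float, s1: float, c2: float, s2: float) -> float:
    """C_s = (C1*S1 + C2*S2) / (S1 + S2)."""
    return (c1 * s1 + c2 * s2) / (s1 + s2)


def access_efficiency(h: float, t2_over_t1: float) -> float:
    """T1/T_s = 1 / (1 + (1 - H) * T2/T1)."""
    _check_hit_ratio(h)
    return 1.0 / (1.0 + (1.0 - h) * t2_over_t1)


if __name__ == "__main__":
    t1, t2 = 0.01, 0.1  # hypothetical access times in microseconds
    for h in (0.5, 0.9, 0.95, 0.99):
        ts = average_access_time(h, t1, t2)
        eff = access_efficiency(h, t2 / t1)
        print(f"H={h:.2f}  T_s={ts:.4f} us  T1/T_s={eff:.3f}")
    # A small, expensive M1 on top of a large, cheap M2 keeps C_s close to C2.
    print(f"C_s = {average_cost_per_bit(c1=100.0, s1=1.0, c2=1.0, s2=1000.0):.4f}")
```

The efficiency function is simply $T_1$ divided by the result of `average_access_time`, rewritten in terms of the ratio $T_2/T_1$. As a result, the access-efficiency curves depend only on H and $T_2/T_1$, not on the absolute speeds of the two levels.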