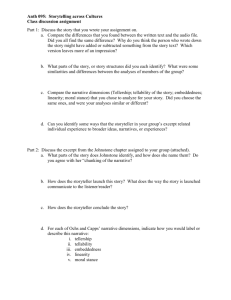

Generating natural narrative speech for the Virtual Storyteller
