GENI

Global Environment for Network Innovations

Spiral 1 Substrate Catalog

(DRAFT)

Document ID: GENI-INF-PRO-S1-CAT-01.5

February 22, 2009

Prepared by:

The GENI Project Office

BBN Technologies

10 Moulton Street

Cambridge, MA 02138 USA

Issued under NSF Cooperative Agreement CNS-0737890

(DRAFT) Spiral 1 Substrate Catalog

GENI-INF-PRO-S1-CAT-01.5

January 20, 2009

TABLE OF CONTENTS

1 DOCUMENT SCOPE
  1.1 PURPOSE OF THIS DOCUMENT
  1.2 CONTEXT FOR THIS DOCUMENT
  1.3 RELATED DOCUMENTS
    1.3.1 National Science Foundation (NSF) Documents
    1.3.2 GENI Documents
    1.3.3 Standards Documents
    1.3.4 Other Documents
  1.4 DOCUMENT REVISION HISTORY

2 GENI OVERVIEW

3 MID ATLANTIC NETWORK
  3.1 SUBSTRATE OVERVIEW
  3.2 GENI RESOURCES
  3.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  3.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  3.5 MEASUREMENT AND INSTRUMENTATION
  3.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

4 GPENI
  4.1 SUBSTRATE OVERVIEW
  4.2 GENI RESOURCES
  4.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  4.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  4.5 MEASUREMENT AND INSTRUMENTATION
  4.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

5 BEN
  5.1 SUBSTRATE OVERVIEW
  5.2 GENI RESOURCES
  5.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  5.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  5.5 MEASUREMENT AND INSTRUMENTATION
  5.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

6 CMU TESTBEDS
  6.1 SUBSTRATE OVERVIEW
  6.2 GENI RESOURCES
  6.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  6.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  6.5 MEASUREMENT AND INSTRUMENTATION
  6.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

7 DOME
  7.1 SUBSTRATE OVERVIEW
  7.2 GENI RESOURCES
  7.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  7.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  7.5 MEASUREMENT AND INSTRUMENTATION
  7.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

8 ENTERPRISE GENI
  8.1 SUBSTRATE OVERVIEW
  8.2 GENI RESOURCES
  8.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  8.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  8.5 MEASUREMENT AND INSTRUMENTATION
  8.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

9 SPP OVERLAY
  9.1 SUBSTRATE OVERVIEW
  9.2 GENI RESOURCES
  9.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  9.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  9.5 MEASUREMENT AND INSTRUMENTATION
  9.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

10 PROTO-GENI
  10.1 SUBSTRATE OVERVIEW
  10.2 GENI RESOURCES
  10.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  10.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  10.5 MEASUREMENT AND INSTRUMENTATION
  10.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

11 PROGRAMMABLE EDGE NODE
  11.1 SUBSTRATE OVERVIEW
  11.2 GENI RESOURCES
  11.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  11.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  11.5 MEASUREMENT AND INSTRUMENTATION
  11.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

12 MEASUREMENT SYSTEM
  12.1 SUBSTRATE OVERVIEW
  12.2 GENI RESOURCES
  12.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  12.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  12.5 MEASUREMENT AND INSTRUMENTATION
  12.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

13 VISE
  13.1 SUBSTRATE OVERVIEW
  13.2 GENI RESOURCES
  13.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  13.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  13.5 MEASUREMENT AND INSTRUMENTATION
  13.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

14 WIMAX
  14.1 SUBSTRATE OVERVIEW
  14.2 GENI RESOURCES
  14.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  14.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  14.5 MEASUREMENT AND INSTRUMENTATION
  14.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

15 TIED
  15.1 SUBSTRATE OVERVIEW
  15.2 GENI RESOURCES
  15.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  15.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  15.5 MEASUREMENT AND INSTRUMENTATION
  15.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

16 PLANETLAB
  16.1 SUBSTRATE OVERVIEW
  16.2 GENI RESOURCES
  16.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  16.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  16.5 MEASUREMENT AND INSTRUMENTATION
  16.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

17 KANSEI SENSOR NETWORKS
  17.1 SUBSTRATE OVERVIEW
  17.2 GENI RESOURCES
  17.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  17.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  17.5 MEASUREMENT AND INSTRUMENTATION
  17.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

18 ORBIT
  18.1 SUBSTRATE OVERVIEW
  18.2 GENI RESOURCES
  18.3 AGGREGATE PHYSICAL NETWORK CONNECTIONS (HORIZONTAL INTEGRATION)
  18.4 AGGREGATE MANAGER INTEGRATION (VERTICAL INTEGRATION)
  18.5 MEASUREMENT AND INSTRUMENTATION
  18.6 AGGREGATE SPECIFIC TOOLS AND SERVICES

19 ACRONYMS

1 Document Scope

This section describes this document’s purpose, its context within the overall GENI document tree, the set of related documents, and this document’s revision history.

1.1 Purpose of this Document

Spiral-1 of GENI prototyping activities will provide a diverse set of substrate technologies in the form of components or aggregates, with resources that can be discovered, shared, reserved and manipulated by GENI researchers. The purpose of this document is to catalog substrate technologies in reference to a set of topic areas regarding the capabilities and integration of the spiral-1 prototype aggregates and components. These topic areas¹ were sent to the PIs of projects providing substrate technologies. This document presents the information gathered in these areas from the PIs’ responses and from subsequent discussions with the GPO. Information in some of the topic areas will be determined over the duration of the spiral-1 integration activities, and thus this document will evolve with time.

There are several reasons for requesting the information forming this document. A detailed description of substrate technologies and their relevance to GENI will assist in the analysis of spiral-1 capabilities to support early research experiments and to identify substrate technology gaps. Presently in spiral-1, two small projects are funded to provide this type of analysis to the GPO:

- Data Plane Measurements http://groups.geni.net/geni/wiki/Data%20Plane%20Measurements
- GENI at Four Year Colleges http://groups.geni.net/geni/wiki/GeniFourYearColleges

In addition, having a well-documented set of aggregate integration solutions, as expected for the diverse set of substrate technologies in spiral-1, will provide a valuable resource as GENI system, operations and management requirements are developed.

¹ For more information see http://groups.geni.net/geni/wiki/ReqInf

1.2 Context for this Document

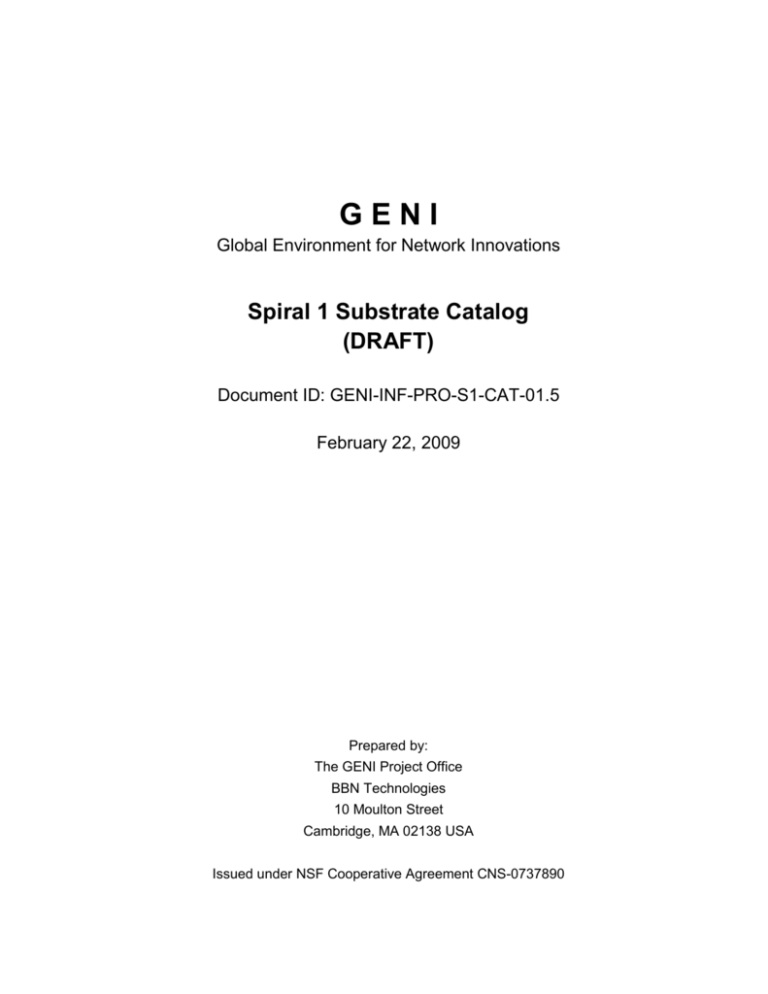

Figure 1-1 below shows the context for this document within GENI’s overall document tree.

GPO

Project

Management

Legal & Contract

Management

Architecture

System

Engineering

Infrastructure

Planning

Outreach

Infrastructure

Prototyping

Spiral 1

Substrate Catalog

Figure 1-1. Spiral-1 Substrate Catalog within the GENI Document Tree.

1.3 Related Documents

The following documents are related to this document, and provide background information, requirements, etc., that are important for this document.

1.3.1 National Science Foundation (NSF) Documents

| Document ID | Document Title and Issue Date |
| --- | --- |
| N/A | |

1.3.2 GENI Documents

| Document ID | Document Title and Issue Date |
| --- | --- |
| GENI-SE-SY-SO02.0 | GENI System Overview |
| GENI-FAC-PRO-S1OV-1.12 | GENI Spiral 1 Overview |
| GENI-SE-AS-TD01.1 | GENI Aggregate Subsystem Technical Description |

1.3.3 Standards Documents

| Document ID | Document Title and Issue Date |
| --- | --- |
| N/A | |

1.3.4 Other Documents

| Document ID | Document Title and Issue Date |
| --- | --- |
| N/A | |

1.4 Document Revision History

The following table provides the revision history for this document, summarizing the date at which it was revised, who revised it, and a brief summary of the changes. This list is maintained in chronological order so the earliest version comes first in the list.

| Revision | Date | Revised By | Summary of Changes |
| --- | --- | --- | --- |
| -01.0 | 01Oct08 | J. Jacob | Initial draft to set scope of document |
| -01.1 | 08Dec08 | J. Jacob | Compiled input from substrate providers |
| -01.2 | 10Dec08 | J. Jacob | Edit of sections 3,4; addition of WiMAX content |
| -01.3 | 5Jan09 | J. Jacob | Include comments from A. Falk and H. Mussman |
| -01.4 | 20Jan09 | J. Jacob | Revised PI input to reflect discussions to date. Initial editing for uniformity across projects. Addition of Kansei Sensor Networks |
| -01.5 | 22Feb09 | J. Jacob | Incorporated additional PI input as well as SE comments and diagrams |

2 GENI Overview

The Global Environment for Network Innovations (GENI) is a novel suite of infrastructure now being designed to support experimental research in network science and engineering (NetSE).

This new research challenges us to understand networks broadly and at multiple layers of abstraction from the physical substrates through the architecture and protocols to networks of people, organizations, and societies. The intellectual space surrounding this challenge is highly interdisciplinary, ranging from new research in network and distributed system design to the theoretical underpinnings of network science, network policy and economics, societal values, and the dynamic interactions of the physical and social spheres with communications networks. Such research holds great promise for new knowledge about the structure, behavior, and dynamics of our most complex systems – networks of networks – with potentially huge social and economic impact.

As a concurrent activity, community planning for the suite of infrastructure that will support NetSE experiments has been underway since 2005. This suite is termed the Global Environment for Network Innovations (GENI). Although its specific requirements will evolve in response to the evolving NetSE research agenda, the infrastructure’s conceptual design is now clear enough to support a first spiral of planning and prototyping. The core concepts for the suite of GENI infrastructure are as follows.

- Programmability – researchers may download software into GENI-compatible nodes to control how those nodes behave;
- Virtualization and Other Forms of Resource Sharing – whenever feasible, nodes implement virtual machines, which allow multiple researchers to simultaneously share the infrastructure; and each experiment runs within its own, isolated slice created end-to-end across the experiment’s GENI resources;
- Federation – different parts of the GENI suite are owned and/or operated by different organizations, and the NSF portion of the GENI suite forms only a part of the overall ‘ecosystem’; and
- Slice-based Experimentation – GENI experiments will be an interconnected set of reserved resources on platforms in diverse locations. Researchers will remotely discover, reserve, configure, program, debug, operate, manage, and tear down distributed systems established across parts of the GENI suite.

As envisioned in these community plans, the GENI suite will support a wide range of experimental protocols and data dissemination techniques running over facilities such as fiber optics with next-generation optical switches, novel high-speed routers, city-wide experimental urban radio networks, high-end computational clusters, and sensor grids. The GENI suite is envisioned to be shared among a large number of individual, simultaneous experiments with extensive instrumentation that makes it easy to collect, analyze, and share real measurements.

The remainder of this document presents the substrate catalog descriptions covering the topic areas suggested by the GPO. In response to the initial input, the GPO has provided a list of comments and questions to the PIs. These will be embedded within the discussion to serve as placeholders where additional information is requested, and will be updated as the information is provided. This is a public document and all within the GENI community are encouraged to contribute (when applicable) either directly within this document or through the substrate working group mailing list².

3 Mid Atlantic Network

Substrate Technologies: Regional optical network, 10 Gbps DWDM optical nodes, 10 Gbps Ethernet switches, compute servers

Cluster: B

Project wiki: http://groups.geni.net/geni/wiki/Mid-Atlantic%20Crossroads

3.1 Substrate Overview

Mid-Atlantic Crossroads owns and operates the DRAGON network deployed within the Washington, D.C. beltway, connecting ten regional participating institutions and interconnecting with both the Internet2 national backbone network and the National LambdaRail network. This research infrastructure consists of approximately 100 miles of high-grade fiber connecting five core switching locations within the Washington metropolitan area. Each of these switching locations includes optical add/drop multiplexers and state-of-the-art MEMS-based wavelength selectable switches (Adva Optical Networking). These nodes also include 10 Gbps-capable Ethernet switches (Raptor Networks). The network currently connects approximately a dozen research facilities within the region that are either involved in the development of control plane technologies, or are applying the light path capabilities to e-science applications (note: these are not GENI projects). Figure 3-1 depicts the network configuration at the time of this writing.

² substrate-wg@geni.net

Figure 3-1 Research Infrastructure

The Mid-Atlantic Network PoP locations include:

- University of Maryland, College Park
- USC ISI-East, Arlington, VA
- Qwest, Eckington Place NE, Washington, DC
- George Washington University
- Level 3, Old Meadow Rd., McLean, VA
- 6 St Paul Street, Baltimore
- 660 Redwood Street, Baltimore
- Equinix, 21715 Filigree Court, Ashburn, VA

The dedicated research network supporting the GENI spiral-1 prototyping project includes the following locations:

- University of Maryland, College Park
- USC ISI-East, Arlington, VA
- Qwest, Eckington Place NE, Washington, DC
- George Washington University
- Level 3, Old Meadow Rd., McLean, VA

It is possible for MAX to support connections for additional GENI prototyping projects at sites from either list of PoP locations.

As shown in Figure 3-2, a typical core node includes either a three- or four-degree ROADM, an OADM, one or more Layer 2 Ethernet switches and several PCs for control, virtualization and performance verification.

[Figure 3-2 diagram: "Core DRAGON Node – Typical Configuration". Labeled elements: PC (AM); control plane switch; PCs for the GMPLS stack, virtualization (VM), and performance testing; Raptor Ethernet data plane switch (GigE and 10GigE); Adva Optical add/drop shelf and multi-degree ROADM (ADVA DWDM); dark fiber to other metro-area ROADMs or add/drop shelves; links to the public Internet, MAX Layer 3 IP service, MAX Ops and Mgmt, and inter-domain data plane connections.]

Figure 3-2 Typical core node

3.2 GENI Resources

The following resources will be offered by this aggregate:

- Ethernet VLANs
- Optical measurements

These resources fall into the categories of component resources, aggregate resources, and measurement resources,³ respectively.

The PCs designated by (VM) in Figure 3-2 are configured as PlanetLab servers, which are deployed across the DRAGON infrastructure. The resources available from the PlanetLab servers are CPU slices and memory. Circuits can be provisioned to these PlanetLab nodes through one of the APIs discussed in section 3.4.

Layer 2 Ethernet VLANs (802.1Q tagged MAC frames) provide the ability to provision or schedule circuits (based on Ethernet VLANs) across the optical network with the following restrictions and limitations:

- maximum of 250 VLANs in use at any one time
- maximum of 1 Gbps per circuit provisioned, with bandwidth granularity of 0.1 Mbps
- VLAN ID must be in the range of 100-3965
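The limits listed above can be expressed as a simple pre-provisioning check. The following is a minimal sketch and not part of the DRAGON software: the names `CircuitRequest` and `validate_request` are hypothetical, and the rule that a VLAN ID may not be reused while it is active is an assumption added for illustration.

```python
from dataclasses import dataclass

MAX_ACTIVE_VLANS = 250            # at most 250 VLANs in use at any one time
MAX_CIRCUIT_MBPS = 1000.0         # 1 Gbps ceiling per provisioned circuit
VLAN_ID_RANGE = range(100, 3966)  # VLAN IDs 100-3965 inclusive


@dataclass(frozen=True)
class CircuitRequest:
    """Hypothetical request for one VLAN-based circuit."""
    vlan_id: int
    bandwidth_mbps: float


def validate_request(req, active_vlans):
    """Return a list of violated limits; an empty list means acceptable."""
    problems = []
    if req.vlan_id not in VLAN_ID_RANGE:
        problems.append("VLAN ID outside 100-3965")
    if req.vlan_id in active_vlans:
        # Assumption for this sketch: an active VLAN ID cannot be reused.
        problems.append("VLAN ID already in use")
    if len(active_vlans) >= MAX_ACTIVE_VLANS:
        problems.append("250 VLANs already in use")
    if not 0 < req.bandwidth_mbps <= MAX_CIRCUIT_MBPS:
        problems.append("bandwidth must be > 0 and <= 1 Gbps")
    # Check the 0.1 Mbps granularity in tenths of a Mbps, with a small
    # tolerance for floating-point rounding.
    tenths = req.bandwidth_mbps * 10
    if abs(tenths - round(tenths)) > 1e-6:
        problems.append("bandwidth not a multiple of 0.1 Mbps")
    return problems
```

A request such as `CircuitRequest(vlan_id=200, bandwidth_mbps=100.05)` would be rejected on granularity alone, since 100.05 Mbps is not a multiple of 0.1 Mbps.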

Ethernet VLANs enable the physical infrastructure to be shared, while traffic is logically isolated into multiple broadcast domains. This effectively allows multiple users to share the underlying physical resources without allowing access to each other’s traffic.
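To make the isolation mechanism concrete, the sketch below builds and parses the 4-byte IEEE 802.1Q tag that carries the VLAN ID in each tagged frame. It is an illustration only: the function names are hypothetical, and the equality test on VLAN IDs is a simplification of how a switch assigns frames to broadcast domains.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_vlan_tag(vlan_id, priority=0, dei=0):
    """Pack the 4-byte 802.1Q tag: 16-bit TPID, then 3-bit PCP, 1-bit DEI, 12-bit VID."""
    if not 0 <= vlan_id <= 0x0FFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority is 3 bits and DEI is 1 bit")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def vlan_id_of(tag):
    """Extract the 12-bit VLAN ID from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF


def same_broadcast_domain(tag_a, tag_b):
    """Simplified isolation rule: frames share a domain only if VLAN IDs match."""
    return vlan_id_of(tag_a) == vlan_id_of(tag_b)
```

Two slices assigned VLAN IDs 100 and 101 would carry different tags on the same physical link, so `same_broadcast_domain` returns `False` for their frames.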

The DWDM optical layer will provide optical performance measurements. Measurements are made at the wavelength level, and each wavelength carries multiple slice VLANs. While the DRAGON infrastructure does provide Layer 2 and Layer 1 programmable connectivity within the Washington DC metro area, and peering points with national infrastructures such as Internet2 and NLR, the complexities associated with optical wavelength provisioning and configurations on a shared optical network infrastructure prohibit offering alien wavelengths or direct access to the photonic layer as a GENI resource.

³ See GENI Aggregate Subsystem Technical Description for a detailed discussion of these resources.

3.3 Aggregate Physical Network Connections (Horizontal Integration)

Physical connectivity within the substrate aggregate is illustrated in Figures 3-1 and 3-2. Core nodes are connected via dark fiber, which is connected to DWDM equipment. Connectivity to the network edge may be a single wavelength at GigE or 10GigE or, in some cases, a multi-wavelength DWDM connection.

Connectivity to the GENI backbone (provided by Internet2 and NLR) will be accomplished via existing tie fibers from Mid-Atlantic Crossroads’ suite in the Level 3 co-location facility at McLean, Virginia. The DRAGON network already has connections to both NLR and Internet2 in McLean.

The DRAGON infrastructure primarily uses Gigabit Ethernet and 10 Gigabit Ethernet for physical connectivity – in particular, 1000BASE-LX, 1000BASE-SX, 1000BASE-T, 10GBASE-LR, and 10GBASE-SR.

3.4 Aggregate Manager Integration (Vertical Integration)

The control switch provides physical interfaces (all at 1GbE) between the control framework and the aggregate resources. The GENI-facing side of the aggregate manager communicates with the control framework (CF) clearinghouse over a link to the public Internet. The substrate-facing side of the aggregate manager communicates with each substrate component over intra-node Ethernet links.

A high-level view of the aggregate manager and its software interfaces is illustrated in Figure 3-3. With respect to resource discovery and resource management (reservation, manipulation), the large GENI (PlanetLab) block represents a native GENI subsystem within the aggregate manager. The arrows represent interfaces from this subsystem to specific aggregate substrate technologies. The GENI-facing interfaces between the aggregate manager and the clearinghouse and/or researcher are not illustrated. Under the assumption that optical measurements are resources to be discovered, an interface must exist for the ADVA DWDM equipment. The measurement configuration and retrieval will be realized using TL1 over the craft (Ethernet) interface for the ADVA equipment. The VLAN resource requires an interface to the DRAGON software system. The DRAGON software interfaces currently include a Web Services (SOAP/XML) API, a binary API (based on GMPLS standards), as well as command-line interfaces (CLI) and web-based user interfaces. A GENI-compliant interface will be provided by developing a wrapper to the existing Web Services API. VLAN configuration will be realized using SNMP over the craft (Ethernet) interface for Raptor switches. The PlanetLab hosts will use typical PLC control and management per GENI wrapper developments for these hosts.

[Figure 3-3 diagram: a GENI (PlanetLab) block with interfaces to DRAGON (API/CLI), ADVA (SNMP/TL1), Raptor (SNMP), and MyPLC.]

Figure 3-3

3.5

Measurement and Instrumentation

Identify any measurement capabilities either embedded in your substrate components or as dedicated

external test equipment.

Layer 1 equipment (ADVA Optical Networking) provides access to optical performance monitoring

data, FEC counters (only for 10G transponders), and SNMP traps for alarm monitoring. Layer 2

Ethernet switches provide access to packet counters and error counters.

The DRAGON network also uses Nagios for monitoring general network health, round-trip time

between nodes, etc. Cricket is used for graphing network performance (interface speeds) as well as

graphing optical performance monitoring data (light levels, FEC counters, etc). Cricket and Nagios

monitoring is depicted in Figures 3-4 and 3-5, respectively.

The DRAGON network also has a perfSONAR active measurement point deployed at

https://perfsonar.dragon.maxgigapop.net.

Figure 3-4 Cricket monitoring optical receive power on a 10G transponder (last 24 hours)

Figure 3-5 Nagios monitoring PING service for a network switch

3.6

Aggregate Specific Tools and Services

Describe any tools or services which may be available to users and unique to your substrate

contribution.

The DRAGON software interfaces to the aggregate manager as an aggregate specific tool as well as

for resource discovery and management. The latter interface was discussed in the vertical integration

section above. This discussion focuses on how researchers will use DRAGON as a tool to configure the

VLANs that have been discovered and reserved. Researchers will be able to allocate dedicated Layer

2 circuits (provisioned using Ethernet VLANs) using the DRAGON API integrated into the GENI

framework. Researchers must specify end points (source/destination interfaces), desired bandwidth,

starting time and duration. Circuits may be scheduled in advance (book-ahead reservations) or set to

signal immediately upon receiving a reservation request.
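
The reservation parameters named above (end points, bandwidth, starting time, duration) can be pictured as a small request record. The following is only a minimal sketch: the `CircuitRequest` class, its field names and its helper methods are hypothetical illustrations and are not part of the DRAGON API, whose actual request format this document does not specify.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class CircuitRequest:
    """Hypothetical record of the parameters a researcher must supply."""
    source_interface: str
    destination_interface: str
    bandwidth_mbps: float
    duration: timedelta
    # None is used here to mean "signal immediately on receipt of the request".
    start_time: Optional[datetime] = None

    def __post_init__(self) -> None:
        # Basic sanity checks; these rules are assumptions for illustration.
        if self.source_interface == self.destination_interface:
            raise ValueError("source and destination must differ")
        if self.bandwidth_mbps <= 0:
            raise ValueError("bandwidth must be positive")
        if self.duration <= timedelta(0):
            raise ValueError("duration must be positive")

    def is_book_ahead(self, now: datetime) -> bool:
        """True when the circuit is scheduled for a later start time."""
        return self.start_time is not None and self.start_time > now

    def end_time(self, now: datetime) -> datetime:
        """End of the reservation, counted from start_time or from now."""
        return (self.start_time or now) + self.duration
```

A request created without `start_time` models the "signal immediately" case, while one with a future `start_time` models a book-ahead reservation.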

4

GpENI

Substrate Technologies: Regional optical network, 10Gbps optical switches, 10 Gbps Ethernet switches,

Programmable Routers, Site-specific experimental nodes

Cluster: B

Project wiki: http://groups.geni.net/geni/wiki/GpENI

4.1

Substrate Overview

Provide an overview of the hardware systems in your contribution.

GpENI is built upon a multi-wavelength fiber interconnection between four GpENI universities within

the GPN (Great Plains Network), with direct connection to the Internet2 backbone. At a high level,

Qwest dark fiber IRUed to the state of Kansas interconnects KSU to KU through the Qwest POP to the

Internet2 POP in Kansas City. UMKC is connected to the Internet2 POP over MOREnet IRU fiber, and

UNL is connected to the Internet2 POP by its own IRU fiber. Administration of the GpENI infrastructure

is performed by staff at each university, assisted by GPN, KanREN (Kansas Research and Education

Network) and MOREnet (Missouri Research and Education Network).

In the first year deployment, each university has a GpENI node cluster interconnected to one another and to the rest of GENI by Ethernet VLANs. In later deployments, each university site will include a Ciena optical switch for layer-1 interconnections among GpENI institutions. The node cluster is designed to be as flexible as possible at every layer of the protocol stack.

Figure 4-1 GpENI Participating Universities

Each GpENI node cluster (figure 4-2) consists of several components, physically interconnected by a Gigabit Ethernet switch to allow arbitrary and flexible experiments. Multiple PCs in the node cluster will be designated for use as programmable routers such as XORP or Click, or designated as PlanetLab nodes. The specific number, n, per site is to be determined. One of the PCs in the KSU cluster will be designated as a MyPLC controller, and one of the PCs in the KU cluster will be designated as a backup MyPLC controller. At each site an additional processor is reserved to host the GpENI management and control. The exact functions of this machine and its accessibility to GENI researchers are still being determined. This is a potential processor to host the GpENI aggregate manager to provide the interface between the GpENI substrate and the PlanetLab clearinghouse. All of the PCs in the node cluster have GbE connections to the Ethernet switch. The Ethernet switch will enable VLANs between the host PCs spanning the GpENI topology as well as VLANs spanning the GENI backbone and connecting to other host PCs within cluster B. The Ethernet switch also enables the physical connections between GpENI control and management with all substrate components in the node cluster. Site specific nodes could include experimental nodes, including software defined radios (such as the KUAR), optical communication laboratories, and sensor testbeds, as well as nodes from other GENI projects. These site specific nodes will connect to the Ethernet switch for control and management interfaces. Experimental plane connections could be through either the Ethernet switch or the Ciena optical switch. At this time, no site-specific nodes have been committed to the GpENI aggregate. The Ciena optical switch forms the high speed optical interconnections between GpENI cluster nodes. Currently only one Ciena optical switch is deployed in GpENI and is located at UNL. This deployment is a CoreDirector configured with a single 10GbE Ethernet Service Line Module (ESLM) on the client side, and a single OC-192 module on the line side. The ESLM client side can support ten 1GbE connections or one 10GbE connection. As drawn in figure 4-2, multiple 1GbE connections are illustrated.

Figure 4-2 GpENI Node Cluster (diagram labels: Campus Internet; n PCs prog routers; n PCs MyPLC; GpENI mgmt & control; Site-specific (tbd); Ethernet SW; Ciena Optical Switch; links: 1 GbE, n x 1 GbE, OC192)

Per site decomposition of GpENI node clusters

The University of Kansas

Role | Model | HW Specs | SW Specs | Status
Optical Switch | Ciena (4200 or CD) | TBD | TBD | Planned
Ethernet Switch | Netgear JGS524 | 24-port 10/100/1000 Enet | | temporary
Ethernet Switch | Netgear GSM7224 | 24-port 10/100/1000 Enet with VLAN mgt | SNMP traffic monitoring | ordered
GpENI master control | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
GpENI control backup | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
MyPLC KSU backup | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
PL node | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
PL node | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
prog. router | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
prog. router | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
spare node | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
spare node | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed
spare node | Dell Precision 470 | Intel Xeon 2.8 GHz, 1GB | Linux | installed

Kansas State University

Role | Model | HW Specs | SW Specs | Status
Optical Switch | Ciena (4200 or CD) | TBD | TBD | future
Ethernet Switch | Netgear GSM7224 | 24-port 10/100/1000 Enet with VLAN mgt | SNMP traffic monitoring | planned
GpENI KSU control | ? | ? | Linux | planned
MyPLC | Dell Precision 470 | Intel Xeon 2.8 GHz, 2GB | Linux | installed
PL node | Custom | 2.8 GHz Pentium 4, 1 GB | Linux | installed
PL node | Custom | 2.8 GHz Pentium 4, 1 GB | Linux | installed
PL node | VMware Server 2.0 | 1.8 GHz Pentium Dual-Core, 1 GB | Linux | installed
PL node | (Dylan)? | ? | Linux | future
PL node | ? | ? | Linux | future
prog. router | ? | ? | Linux | planned
prog. router | ? | ? | Linux | planned

University of Missouri – Kansas City

Role | Model | HW Specs | SW Specs | Status
Optical Switch | Ciena CD | TBD | TBD | planned
Ethernet Switch | Netgear GSM7224 | 24-port 10/100/1000 Enet with VLAN mgt | SNMP traffic monitoring | planned
GpENI UMKC control | ? | ? | Linux | planned
PL node | ? | ? | Linux | planned
PL node | ? | ? | Linux | planned
prog. router | ? | ? | Linux | planned
prog. router | ? | ? | Linux | planned

University of Nebraska – Lincoln

Role | Model | HW Specs | SW Specs | Status
Optical Switch | Ciena CDCI | 160 Gbps cross connect, 1 STM64/OC192 optical interface | Core Director version 5.2.1 | installed
Ethernet Switch | Netgear GSM7224 | 24-port 10/100/1000 Enet with VLAN mgt | SNMP traffic monitoring | planned
GpENI UNL control | ? | ? | Linux | planned
PL node | ? | ? | Linux | planned
PL node | ? | ? | Linux | planned
prog. router | ? | ? | Linux | planned
prog. router | ? | ? | Linux | planned

4.2

GENI Resources

Discuss the GENI resources offered by your substrate contribution and how they are shared,

programmed, and/or configured. Include in this discussion to what extent you believe the shared

resources can be isolated.

The following is a tentative list of resources available from the GpENI aggregate.

PlanetLab hosts provide CPU slices and memory.

Programmable routers provide CPU cycles, memory, disk space, queues, addresses and

ports.

Ethernet switches provide VLANs.

Optical switch resource is tbd.

Layer 2 Ethernet VLANs (802.1Q tagged MAC frames) provide the ability to provision or schedule

circuits (based on Ethernet VLANs) across the optical network with the following restrictions and

limitations:

maximum of 250 VLANs in use at any one time

maximum of 1 Gbps per circuit provisioned with bandwidth granularity of 0.1 Mbps

VLAN ID must be in the range of 100-3965
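
The three restrictions above lend themselves to a simple admission check. The sketch below is illustrative only: the function name, the use of kbps integers (to make the 0.1 Mbps granularity an exact multiple-of-100 test), and the extra "VLAN ID already in use" rule are assumptions, not behavior described for GpENI or DRAGON.

```python
MAX_ACTIVE_VLANS = 250             # at most 250 VLANs in use at any one time
MAX_BANDWIDTH_KBPS = 1_000_000     # 1 Gbps per circuit
GRANULARITY_KBPS = 100             # 0.1 Mbps bandwidth granularity
VLAN_ID_RANGE = range(100, 3966)   # VLAN IDs 100..3965 inclusive


def check_circuit(vlan_id: int, bandwidth_kbps: int, active_vlans: set) -> list:
    """Return the list of violated restrictions (empty if the request fits)."""
    problems = []
    if vlan_id not in VLAN_ID_RANGE:
        problems.append("VLAN ID outside 100-3965")
    if vlan_id in active_vlans:
        # Assumption: an ID cannot be reused while it is active.
        problems.append("VLAN ID already in use")
    if len(active_vlans) >= MAX_ACTIVE_VLANS:
        problems.append("250 VLANs already in use")
    if not 0 < bandwidth_kbps <= MAX_BANDWIDTH_KBPS:
        problems.append("bandwidth outside (0, 1 Gbps]")
    elif bandwidth_kbps % GRANULARITY_KBPS != 0:
        problems.append("bandwidth not a multiple of 0.1 Mbps")
    return problems
```

For example, `check_circuit(200, 150, set())` reports a granularity problem because 150 kbps is not a multiple of 0.1 Mbps.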

Ethernet VLANs enable the physical infrastructure to be shared, creating researcher-defined topologies.

Traffic is logically isolated into multiple broadcast domains. This effectively allows multiple users to

share the underlying physical resources without allowing access to each other’s traffic.

4.3

Aggregate Physical Network Connections (Horizontal Integration)

Provide an overview of the physical connections within the substrate aggregate, as well as between the

aggregate and the GENI backbones. Identify to the extent possible, non-GENI equipment, services and

networks involved in these connections.

The GpENI Infrastructure is still in early phases of deployment. Detailed network maps will be updated

on the GpENI wiki (https://wiki.ittc.ku.edu/gpeni_wiki) as the infrastructure is deployed. The physical

topology consists of fiber interconnection between the four GpENI universities, and is currently being

deployed, as shown by the white blocks in figure 4-3. GpENI-specific infrastructure is depicted by blue

blocks.

Each of the four university node clusters will interface into the GpENI backbone via a Ciena CN4200

or CoreDirector switch. Currently only a CoreDirector switch has been deployed at UNL. The CN4200

switches shown in figure 4-3 represent the initial plans, which are being modified in response to the

phased acquisition alignment with GENI funding levels. The rest of the GpENI node infrastructure

(PC’s and Ethernet switch) for each site is labeled "GpENI node cluster". It should be noted that both

blue boxes at each site form the GpENI node cluster illustrated in figure 4-2.

The main fiber run between KSU, KU, and Kansas City is Qwest Fiber IRUed (leased) to KU,

proceeding through the Qwest POP at 711 E. 19th St. in Kansas City, and continuing to the Internet2

(Level3) POP at 1100 Walnut St., which will provide access to GpENI from Internet2. A chunk of C-band spectrum is planned, providing multiple wavelengths at KU and KSU. UMKC is connected over

MOREnet (Missouri Research and Education Network) fiber to the Internet2 POP, with four

wavelengths anticipated. UNL is also connected to the Internet2 POP over fiber IRUed from Level3 with

two wavelengths committed. Local fiber in Manhattan and Lawrence is leased from Wamego

Telephone (WTC) and Sunflower Broadband (SFBB), respectively. There is abundant dark fiber

already in place on the KU, KSU, UMKC, and UNL campuses to connect the GpENI nodes to the

switches (existing or under deployment) on the GPN fiber backbone. For reference, the UNL link is

terminated by Ekinops switches, the UMKC link is terminated by ADVA switches, and the KU/KSU

link is terminated by Ciena switches.

Figure 4-3 GpENI Network Connections

4.4

Aggregate Manager Integration (Vertical Integration)

Identify the physical connections and software interfaces for integration into your cluster's assigned

control framework

Vertical integration of GpENI into the PlanetLab CF is currently not understood. The following text

describes a potential host for the aggregate manager and interfaces into the GpENI node cluster

components (PC’s, Ethernet Switch, and Optical Switch). The GpENI control and management node

seems like a logical place to consider hosting the PlanetLab aggregate manager. The physical

connection from this node to the campus Internet enables connectivity to both the GENI (PlanetLab)

clearinghouse and researchers. The GpENI control and management node also interfaces to all

components within the node cluster via 1GbE connections through the Ethernet switch. The 1GbE

connection between Ethernet switch and the optical switch is a special case where the control and

management interfaces to a craft port on the optical switch, i.e. the control and experiment planes have

physically separate connections. All other components share the same connection between control and

experiment planes.

The control and management interfaces to the GpENI node cluster components, which define the

aggregate specific interfaces to integrate into the PlanetLab CF, are illustrated in figure 4-4. GpENI is

currently investigating the use of DRAGON software from the Mid-Atlantic Network project for

dynamic VLANs to be part of the GpENI resources. As such, this appears as an aggregate specific tool

with interfaces between GENI and the Ethernet switch.

Figure 4-4 Aggregate specific SW interfaces (diagram labels: GENI (PlanetLab); MyPLC; Xorp/Click; DRAGON (Web Svc API); PL nodes (Linux); PL nodes (Linux); NetGear (SNMP); Ciena CD (http))

4.5

Measurement and Instrumentation

Identify any measurement capabilities either embedded in your substrate components or as dedicated

external test equipment.

4.6

Aggregate Specific Tools and Services

Describe any tools or services which may be available to users and unique to your substrate

contribution.

5

BEN

Substrate Technologies: Regional optical network, Optical fiber switch, 10Gbps Optical Transport, 10

Gbps Routers/Switches, Site-specific experimental nodes

Cluster: D

Project wiki: http://groups.geni.net/geni/wiki/ORCABEN

5.1

Substrate Overview

Provide an overview of the hardware systems in your contribution.

BEN (Breakable Experimental Network, https://ben.renci.org) is the primary platform for RENCI network research. It is a dark fiber-based, time-sharing research facility created for researchers and scientists in order to promote scientific discovery by providing the three Triangle Universities with world-class infrastructure and resources. RENCI engagement sites at the three university campuses (UNC, NCSU and Duke) in the RTP area, as well as the RENCI Europa anchor site in Chapel Hill, NC, contain BEN PoPs (Points of Presence) that form a research test bed. Fig. 5-1 illustrates RENCI’s BEN dark fiber network that is used to connect the engagement sites.

Figure 5-1 The diagram illustrates the BEN fiber footprint over the NCNI ring. Its touchpoints

include the Duke, NCSU and UNC Engagement Sites and RENCI’s anchor site, the Europa

Center.

BEN consists of two planes: a management plane, run over a mesh of secure tunnels provisioned over

commodity IP, and a data plane, run over the dark fiber infrastructure. Access to the management plane is

granted over a secure VPN. Management plane address space is private and non-routable.

Each RENCI PoP shares the same network architecture that enables the following capabilities in

support of regional network research:

Reconfigurable fiber switch (layer 0) provides researcher access to fiber ports for dynamically

assigning new connections, which enables different physical topologies to be generated and

connect different experiments to BEN fiber

Power and Space to place equipment for performing experiments on BEN

RENCI additionally provides equipment to support collaborative research efforts:

o Reconfigurable DWDM (Dense Wavelength Division Multiplexing) (layer 1) which

provides access to wavelengths to configure new network connections

o Reconfigurable switch/router (layers 2 and 3) provide packet services

o Programmable compute platforms (3-4 server blades at each PoP)

Figure 5-2 Fig. (a) provides a functional diagram of a RENCI BEN PoP. The nodal architecture

indicates various types of equipment and power and space for researchers to collocate their

experimental equipment. Fig (b) provides a systems level network architecture perspective that

illustrates network connectivity between PoPs.

As shown in figure 5-2 each BEN PoP is capable of accommodating equipment for several

experiments that can use the dark fiber exclusively in a time-shared fashion, or, at higher layers, run

concurrently.

Further details of a BEN PoP are shown in figure 5-3 with detailed equipment and per-site

configuration provided in the following text.

Polatis 32 fiber Reconfigurable Optical Switch

The Polatis Fiber Switch enables connectivity between individual fibers in an automated fashion.

The optical switch permits various scenarios for connecting the different pieces of equipment in a BEN

PoP. In a traditional implementation, layer 2-3 devices will connect to layer 1 WDM equipment that

connects to the optical switch. Alternatively, a layer 2-3 switch/router or experimental equipment can

connect directly to the fiber switch. With the appropriate optics, it’s entirely possible to directly connect

end systems into the optical fiber switch for network connectivity to end systems located in other BEN

PoPs.

Polatis implements beam-steering technology to collimate opposite interfaces from an input array to

an output array of optical ports

Voltage signals control the alignment of the optical interfaces on both sides of the array

Fully reconfigurable switch allows connectivity from any port to any other port on the switch

Optical power monitors are inserted to accurately measure the strength of optical signals through the

switch

32 fibers

Fully non-blocking and completely reconfigurable: e.g. any fiber may be connected to another fiber

without needing to reconfigure existing optical fiber connections

Switch unit is optically transparent and fully bidirectional

Switch unit front panel comprising 32 LC/UPC type connectors

Switch unit height 1RU

Switch unit to fit into a standard 19” rack

Ethernet interface and a serial RS-232 interface

Support for SNMP and TL1

See fiber switch data sheet for detailed specification of optical performance: http://www.polatis.com/datasheets/vst%20303-07-0-s%20aug%202007.pdf

o Base loss through switch core < 1.4 dB (See Performance Category H on OST data

sheet; excludes additional loss from optical power monitors, OPM)

o Additional loss for a single OPM in connected path < 0.2 dB (See Performance

Category K-300 on fiber switch data sheet)

o Additional loss for two OPMs in connected path < 0.3 dB (See Performance Category

K-500 on fiber switch data sheet)

o Additional loss for three OPMs in connected path < 0.5 dB

o Additional loss for four OPMs in connection path < 0.6 dB
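
The loss figures above combine additively: the base loss excludes OPM loss, and the OPM figures are stated as additional loss. A worst-case path budget can therefore be sketched as below. The function name and the zero-OPM entry are assumptions made for illustration; the values are the upper bounds quoted in the list, stored in tenths of a dB so the arithmetic stays exact.

```python
# Upper bounds from the list above, in tenths of a dB.
BASE_LOSS_TENTHS_DB = 14                       # switch core: < 1.4 dB
OPM_LOSS_TENTHS_DB = {0: 0, 1: 2, 2: 3, 3: 5, 4: 6}


def worst_case_loss_db(opm_count: int) -> float:
    """Upper bound on insertion loss for a path crossing `opm_count` OPMs."""
    if opm_count not in OPM_LOSS_TENTHS_DB:
        raise ValueError("only 0 to 4 OPMs per connected path are tabulated")
    return (BASE_LOSS_TENTHS_DB + OPM_LOSS_TENTHS_DB[opm_count]) / 10


if __name__ == "__main__":
    for count in range(5):
        print(count, "OPM(s): loss below", worst_case_loss_db(count), "dB")
```

Under these bounds, a connection that passes through four OPMs stays below 1.4 + 0.6 = 2.0 dB.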

Infinera Digital Transport Node (DTN)

The Infinera DTN (Digital Transport Node) delivers advanced WDM infrastructure capabilities for

the purpose of supporting network and application research. Dedicated to promote experimentation in

BEN, the DTN is fully reconfigurable to aid investigators in advancing network science. Within a BEN

node, the DTN can accept a variety of client side signals through the fiber switch to enable direct

connectivity to experimental systems or other commercial network equipment to facilitate new proof-of-concept demonstrations. Reconfigurability at layer 1 in a non-production, pro-research testbed

facility encourages fresh looks at network architectures by incorporating dynamic signaling of the DTN

in new networking paradigms and integrating its functionality in novel approaches to exploring

network phenomena.

Cisco Catalyst 6509-E

The Cisco 6509 provides a well-understood platform to support the high performance networking

needs and network research requirements at RENCI. Its support for nearly all standardized routing and

switching protocols makes it advantageous for implementing different testbed scenarios and for

customized experimentation over BEN. The 6509 enables collaboration between RENCI’s engagement

sites by integrating various computing, visualization and storage technologies.

Support for Cisco’s Internetwork Operating System (IOS)

Each 6509 equipped with multiple 10 gigabit and 1 gigabit Ethernet interfaces

Redundant Supervisor Engine 720 (3BXL)

Catalyst 6500 Distributed Forwarding Card (3BXL)

Specifications URL

http://www.cisco.com/en/US/prod/collateral/modules/ps2797/ps5138/product_data_sheet09186a00800ff916_ps708_Products_Data_Sheet.html

Juniper 24-port EX 3200 Series Switch

The Juniper EX switch provides a programmatic API that can be used to externally communicate

with and control the switch through the use of XML. The advantage of having this capability is it

enables another device, that’s separate from the switch, to control its behavior for separation of the

control and forwarding planes.

Support for the Juniper Operating System (JUNOS)

XML application to facilitate programmatic API for the switch

24-port 10/100/1000 Ethernet interfaces

Specifications URL

http://www.juniper.net/products_and_services/ex_series/index.html
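
To give a feel for what controlling the switch "through the use of XML" looks like, the sketch below builds a request document of the general shape used by the Junos XML interface: an `rpc` element wrapping one operation tag. It is an illustration under assumptions: `build_rpc` is a hypothetical helper, `get-interface-information` is chosen only as an example operation, and session setup, authentication and transport are omitted because this document does not describe how BEN's controller connects to the switch.

```python
import xml.etree.ElementTree as ET


def build_rpc(operation: str) -> str:
    """Wrap a single operation tag in an <rpc> element and serialize it."""
    rpc = ET.Element("rpc")
    ET.SubElement(rpc, operation)
    return ET.tostring(rpc, encoding="unicode")


if __name__ == "__main__":
    # Example operation tag; other operations would be built the same way.
    print(build_rpc("get-interface-information"))
```

Because the request is plain XML, any external device able to produce such documents can act as the controller, which is the separation of control and forwarding planes described above.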

Node/Site configuration

Duke (status: operational)

Polatis 32 Fiber Reconfigurable Optical Switch

Non-blocking, optically transparent and fully bi-directional, LC connectors

Supports optical power measurement

Infinera Digital Transport Node (DTN) – 23” chassis

Band Multiplexing Module - C-band Only, OCG 1/3/5/7, Type 1 (Qty: 2)

Digital Line Module - C-band, OCG 1, Type 2 (Qty: 2)

Tributary Adaptor Module - 2-port, 10GR with Ethernet PM (Qty: 2)

Tributary Optical Module - 10G SR-1/I64.1 & 10GBase-LR/LW (Qty: 3)

Cisco Catalyst 6509 Enhanced 9-slot chassis, 15RU

Supervisor 720 Fabric MSFC3 PFC3BXL (Qty: 2)

48-port 10/100/1000 Ethernet Module, RJ-45, DFC-3BXL (Qty: 1)

8-port 10 Gigabit Ethernet Module, X2, DFC-3BXL (Qty: 1)

Juniper EX Switch, 1U

24-port 10/100/1000 Ethernet interface, RJ-45

Dell PowerEdge 860 Server (Qty: 3)

2.8GHz/256K Cache,Celeron533MHz

2GB DDR2, 533MHZ, 2x1G, Dual Ranked DIMMs

80GB, SATA, 3.5-inch 7.2K RPM Hard Drive

DRAC 4 Dell Remote Management PCI Card; 8X DVD

NCSU (status: under construction, due on-line 01/09)

Polatis 32 Fiber Reconfigurable Optical Switch

Non-blocking, optically transparent and fully bi-directional, LC connectors

Supports optical power measurement

Infinera Digital Transport Node (DTN) – 23” chassis

Band Multiplexing Module - C-band Only, OCG 1/3/5/7 (Qty: 2)

Digital Line Module - C-band, OCG 1 (Qty: 2)

Tributary Adaptor Module - 2-port, 10GR with Ethernet PM (Qty: 2)

Tributary Optical Module - 10G SR-1/I64.1 & 10GBase-LR/LW (Qty: 3)

Cisco Catalyst 6509 Enhanced 9-slot chassis, 15RU

Supervisor 720 Fabric MSFC3 PFC3BXL (Qty: 2)

48-port 10/100/1000 Ethernet Module, RJ-45, DFC-3BXL (Qty: 1)

8-port 10 Gigabit Ethernet Module, X2, DFC-3BXL (Qty: 1)

4-port 10 Gigabit Ethernet Module, XENPAK, DFC-3BXL (Qty:1)

Juniper EX Switch, 1U

24-port 10/100/1000 Ethernet interface, RJ-45

Dell PowerEdge 860 Server (Qty: 3)

2.8GHz/256K Cache,Celeron533MHz

2GB DDR2, 533MHZ, 2x1G, Dual Ranked DIMMs

80GB, SATA, 3.5-inch 7.2K RPM Hard Drive

DRAC 4 Dell Remote Management PCI Card; 8X DVD

UNC (status: operational)

Polatis 32 Fiber Reconfigurable Optical Switch

Non-blocking, optically transparent and fully bi-directional, LC connectors

Supports optical power measurement

Infinera Digital Transport Node (DTN) – 23” chassis

Band Multiplexing Module - C-band Only, OCG 1/3/5/7 (Qty: 2)

Digital Line Module - C-band, OCG 1 (Qty: 2)

Tributary Adaptor Module - 2-port, 10GR with Ethernet PM (Qty: 2)

Tributary Optical Module - 10G SR-1/I64.1 & 10GBase-LR/LW (Qty: 4)

Cisco Catalyst 6509 Enhanced 9-slot chassis, 15RU

Supervisor 720 Fabric MSFC3 PFC3BXL (Qty: 2)

48-port 10/100/1000 Ethernet Module, RJ-45, DFC-3BXL (Qty: 2)

8-port 10 Gigabit Ethernet Module, X2, DFC-3BXL (Qty: 2)

Juniper EX Switch, 1U

24-port 10/100/1000 Ethernet interface, RJ-45

Dell PowerEdge 860 Server (Qty: 3)

2.8GHz/256K Cache,Celeron533MHz

2GB DDR2, 533MHZ, 2x1G, Dual Ranked DIMMs

80GB, SATA, 3.5-inch 7.2K RPM Hard Drive

DRAC 4 Dell Remote Management PCI Card; 8X DVD

Renci (status: operational)

Polatis 32 Fiber Reconfigurable Optical Switch

Non-blocking, optically transparent and fully bi-directional, LC connectors

Supports optical power measurement

Infinera Digital Transport Node (DTN) – 23” chassis

Band Multiplexing Module - C-band Only, OCG 1/3/5/7 (Qty: 2)

Digital Line Module - C-band, OCG 1 (Qty: 2)

Tributary Adaptor Module - 2-port, 10GR with Ethernet PM (Qty: 4)

Tributary Optical Module - 10G SR-1/I64.1 & 10GBase-LR/LW (Qty: 8)

Cisco Catalyst 6509 Enhanced 9-slot chassis, 15RU

Supervisor 720 Fabric MSFC3 PFC3BXL (Qty: 2)

48-port 10/100/1000 Ethernet Module, RJ-45, DFC-3BXL (Qty: 2)

8-port 10 Gigabit Ethernet Module, X2, DFC-3BXL (Qty: 2)

Juniper EX Switch, 1U

24-port 10/100/1000 Ethernet interface, RJ-45

Dell PowerEdge 860 Server (Qty: 3)

2.8GHz/256K Cache,Celeron533MHz

2GB DDR2, 533MHZ, 2x1G, Dual Ranked DIMMs

80GB, SATA, 3.5-inch 7.2K RPM Hard Drive

DRAC 4 Dell Remote Management PCI Card; 8X DVD

BEN is represented in the GENI effort by RENCI. Following the established operational model,

GENI will become a project on BEN and RENCI personnel will serve as an interface between BEN and

the external research community for the purposes of setup, operational support and scheduling. This

will allow for the use of RENCI owned resources on BEN, such as Infinera’s DTN platforms, Cisco

6509 and Juniper routers etc. If other groups within the NC research community desire to contribute

equipment to GENI, their equipment can be accommodated at BEN PoPs and connected to BEN using

the interfaces described in the above list.

5.2

GENI Resources

Discuss the GENI resources offered by your substrate contribution and how they are shared,

programmed, and/or configured. Include in this discussion to what extent you believe the shared

resources can be isolated.

BEN is a regional networking resource that is shared by the NC research community. BEN operates

in a time-shared fashion with individual experiments scheduling time on BEN exclusively, or, when

possible, concurrently. Currently BEN scheduling is manual. Our plans for automatic scheduling and

provisioning are centered on adapting Duke’s ORCA framework to BEN to become its de facto

scheduling solution. Researchers are represented in BEN by their projects and allocation of time on

BEN is done on a per-project basis. Each project has a representative in the BEN Experimenter Group

(BEN EG), and the EG is the body that is responsible for managing BEN time allocations and resolving

conflicts.
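
The time-sharing rule described above (experiments run either exclusively or, when possible, concurrently) can be illustrated with a small conflict check of the kind an automated scheduler would need. This is a sketch only; the `Slot` record and `conflicts` function are hypothetical and do not represent ORCA or the BEN EG's actual procedure.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Slot:
    """Hypothetical per-project time allocation on BEN."""
    project: str
    start: datetime
    end: datetime
    exclusive: bool  # True when the experiment needs BEN to itself


def overlaps(a: Slot, b: Slot) -> bool:
    """Half-open interval overlap: back-to-back slots do not overlap."""
    return a.start < b.end and b.start < a.end


def conflicts(new: Slot, booked: list) -> list:
    """Booked slots that clash with `new`.

    Two overlapping slots clash only if at least one of them is exclusive;
    overlapping non-exclusive slots are allowed to run concurrently.
    """
    return [s for s in booked
            if overlaps(new, s) and (new.exclusive or s.exclusive)]
```

Any non-empty result would be the kind of case that, in the manual process, is referred to the BEN EG for resolution.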

5.3

Aggregate Physical Network Connections (Horizontal Integration)

Provide an overview of the physical connections within the substrate aggregate, as well as between the

aggregate and the GENI backbones. Identify to the extent possible, non-GENI equipment, services and

networks involved in these connections.

BEN is an experimental testbed that is designed to allow running networking experiments in

isolation. However some experiments may require external connectivity to national backbones or other

resources. BEN external connectivity is achieved through an interface between BEN’s Cisco 6509 and a

production Cisco 7609 router at RENCI’s Europa anchor site. This router allows interconnecting BEN’s

data plane with either a regional provider (NCREN) or national backbones (NLR or, via NCREN, I2).

Due to the production nature of the Cisco 7609, which also serves to support RENCI’s global

connectivity, as well as due to security concerns, connections from BEN to the outside world are

currently done by special arrangements.

Infinera utilizes Photonic Integrated Circuits (PICs) implemented in Indium Phosphide as a

substrate to integrate opto-electronic functions

Each DTN node introduces an OEO operation, which provides signal clean-up, digital power

monitoring, sub-wavelength grooming, muxing and add/drop

The DTN architecture simplifies link engineering and service provisioning

GMPLS control plane enables end-to-end services and topology auto-discovery across an Infinera

DTN network

[Figure: BEN PoP node diagram. Labels: BEN Ops and Mgmt, Additional Components, PC Node Control (AM), n PC (VM), Control Switch, Cisco Router, Juniper Router, Infinera Transport, Polatis Fiber Switch, Patch Panel, Internet; links labeled GbE, 10 GbE, 1/10GbE and OTU 2.]

Figure 5-3: BEN external connectivity diagram – access to BEN occurs directly through Renci’s 10

GigE NLR FrameNet connection and through Renci’s production router that’s used for commodity

Internet, Internet2 and NLR connectivity (via NCREN).

Figure 5-3 and Table 7-1 summarize the possibilities for external connections to BEN data plane.

Table 7-1: BEN External connectivity

Connection | Type | Interface | Purpose
BEN | IP | 10 GigE | Enables remote access to BEN through directly connected IP interface on Renci’s 7609 router
NCREN | IP/BGP | 10 GigE | Primary connectivity to commodity Internet and R&E networks (Internet2 and NLR PacketNet); can be used for layer 2 connectivity to Internet2’s Dynamic Circuit Network (DCN)
NLR FrameNet | Ethernet | 10 GigE | Experimental connectivity to national layer 2 switch fabric - FrameNet; it can also be used to implement layer 2 point-to-point connections through NLR, as well as connectivity to C-Wave

5.4 Aggregate Manager Integration (Vertical Integration)

Identify the physical connections and software interfaces for integration into your cluster's assigned

control framework.

[Diagram: GENI AM (ORCA) control interfaces to the BEN components: Polatis (SNMP, TL1); Infinera (SNMP, TL1, proprietary XML, GMPLS UNI); Juniper (CLI-TL1?, published XML); Cisco (CLI-TL1?, SNMP); PC (Linux); Additional Components (ORCA?).]

Polatis - TL1 and SNMP for configuration and status.

Infinera - TL1, SNMP and proprietary XML for configuration and status. GMPLS UNI support for service provisioning.

Cisco - CLI and SNMP for configuration and status.

Juniper - CLI and published XML interface for configuration and status.
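
As an illustration only, the interface list above can be captured in a small lookup table that an aggregate manager might consult when choosing how to reach a device. This is a minimal sketch: the names `MGMT_INTERFACES` and `components_supporting` are hypothetical and not part of ORCA or any vendor software, and the table contains only what the list above states.

```python
# Management interfaces per BEN component, transcribed from the list above.
# GMPLS UNI is listed for Infinera service provisioning rather than for
# configuration and status, but is kept here for completeness.
MGMT_INTERFACES = {
    "Polatis": {"TL1", "SNMP"},
    "Infinera": {"TL1", "SNMP", "proprietary XML", "GMPLS UNI"},
    "Cisco": {"CLI", "SNMP"},
    "Juniper": {"CLI", "published XML"},
}


def components_supporting(interface):
    """Return the sorted names of components that expose `interface`."""
    return sorted(
        name for name, ifaces in MGMT_INTERFACES.items() if interface in ifaces
    )


if __name__ == "__main__":
    for iface in ("SNMP", "TL1", "CLI"):
        print(iface, "->", components_supporting(iface))
```

A lookup of this kind makes it easy to see, for example, that SNMP covers every component except the Juniper router, so a single protocol driver would not be enough.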

5.5 Measurement and Instrumentation

Identify any measurement capabilities either embedded in your substrate components or as dedicated

external test equipment.

Polatis - 20 directional optical power monitors (OPM) as specified in the table below

Fiber # | Optical power monitors (OPM)

1-2 | Input: Yes; Output: Yes

3-8 | Yes (one monitor per fiber; direction not indicated)

9-16 | None

17-18 | Input: Yes; Output: Yes

19-24 | Yes (one monitor per fiber; direction not indicated)

25-32 | None

Input optical power monitors measure light entering the switch

Output optical power monitors measure light exiting the switch

No optical power monitors on fibers 9-16 and 25-32
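
The monitor count can be checked against the table with a few lines of arithmetic. The sketch below assumes a 32-fiber switch and the per-fiber counts read from the table (two monitors on fibers 1-2 and 17-18, one on fibers 3-8 and 19-24, none elsewhere); the function name `monitors_on_fiber` is hypothetical.

```python
def monitors_on_fiber(fiber):
    """Return the number of optical power monitors on a fiber (1-32)."""
    if not 1 <= fiber <= 32:
        raise ValueError("fiber number must be between 1 and 32")
    if fiber in (1, 2, 17, 18):
        return 2  # both input and output monitors
    if 3 <= fiber <= 8 or 19 <= fiber <= 24:
        return 1  # a single monitor
    return 0  # fibers 9-16 and 25-32 are unmonitored


total_monitors = sum(monitors_on_fiber(f) for f in range(1, 33))
unmonitored = [f for f in range(1, 33) if monitors_on_fiber(f) == 0]

if __name__ == "__main__":
    print("total monitors:", total_monitors)
    print("unmonitored fibers:", unmonitored)
```

The total agrees with the 20 directional monitors stated above.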

5.6 Aggregate Specific Tools and Services

Describe any tools or services which may be available to users and unique to your substrate

contribution.

Renci is developing a graphical user interface with multiple views (based on credentials) to operate the

Polatis optical switch.

6 CMU Testbeds

Substrate Technologies: Small scale Emulab testbed, Residential small form-factor machines, Wireless

emulator

Cluster: C

Project wiki: http://groups.geni.net/geni/wiki/CmuLab

6.1 Substrate Overview

Provide an overview of the hardware systems in your contribution.

Three distinct testbeds and hardware systems:

CMULab is basically a small version of the Emulab testbed. It has 10 experimental nodes, and two

control nodes. Each experimental node has two ethernet ports, a control network port and an

experimental network port. The machines are connected to a reconfigurable ethernet switch, just like in

Emulab.

Homenet is a distributed testbed of small form-factor machines. We're still in the process of negotiating

the deployment, but at this point, it looks like the initial major deployment will be in a residential

apartment building in Pittsburgh. The nodes will be connected to cable modem connections in

individual apartments. Each node has 3 wireless network interfaces.

The CMU Wireless Emulator is a DSP-based physical wireless emulator. It has a rack of laptops connected to the emulator. The emulator can provide, within a range of capabilities, arbitrary physical-layer effects (attenuation, rooms of different shapes for multipath, interference, etc.). The wireless emulator is physically connected to the CMULab rack over gigabit Ethernet.

6.2 GENI Resources

Discuss the GENI resources offered by your substrate contribution and how they are shared,

programmed, and/or configured. Include in this discussion to what extent you believe the shared

resources can be isolated.

All nodes can be programmed by users. The Homenet nodes are like PlanetLab or RON testbed nodes:

Users can get accounts and run user-level programs or interface with the raw network. They probably

can NOT change the kernel. The emulator and CMULab nodes are fully controllable by experimenters,

including different OS support, etc. All three testbeds can be shared using space sharing.

The wireless emulator testbed also supports wireless channels that can be fully controlled by the user. For an N-node experiment, the user has N*(N-1)/2 channels that can be controlled, one for each pair of nodes. Control is typically specified through a Java program, although control through a GUI or script is also supported. Channels belonging to different experiments are fully isolated from each other.
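
The N*(N-1)/2 figure is the number of unordered node pairs. The following sketch enumerates those pairs for a small experiment; it only illustrates the count and is not the emulator's control interface, and the function names and node labels are hypothetical.

```python
from itertools import combinations


def channel_count(n):
    """Number of controllable channels for an n-node experiment."""
    if n < 0:
        raise ValueError("node count must be non-negative")
    return n * (n - 1) // 2


def channel_pairs(nodes):
    """List every unordered pair of nodes; each pair is one channel."""
    return list(combinations(nodes, 2))


if __name__ == "__main__":
    nodes = ["laptop1", "laptop2", "laptop3", "laptop4"]
    pairs = channel_pairs(nodes)
    print(channel_count(len(nodes)), "channels")
    for a, b in pairs:
        print(a, "<->", b)
```

For four nodes this gives six channels, and the count grows quadratically with experiment size.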

6.3 Aggregate Physical Network Connections (Horizontal Integration)

Provide an overview of the physical connections within the substrate aggregate, as well as between the

aggregate and the GENI backbones. Identify to the extent possible, non-GENI equipment, services and

networks involved in these connections.


The Homenet nodes are located in houses and require a commodity DSL or cable connection. The CMULab and emulator testbeds are connected to our campus network, which connects to I2 and from there to ProtoGENI.

6.4 Aggregate Manager Integration (Vertical Integration)

Identify the physical connections and software interfaces for integration into your cluster's assigned

control framework

Resource allocation for all three testbeds is done using CMUlab, which uses the ProtoGENI software from Utah. Users use an ns-2-like script to specify how many nodes they need.

To a first order, CMUlab views the emulator as a pool of special laptops and users can specify how

many they need. Some laptops have slightly different capabilities (multi-path, software radios, etc.),

and users can specify these in the script they give to CMUlab. Users can also specify the OS, software,

etc. that they need on the laptops.

Swapping in an experiment currently requires a temporary manual procedure. After the CMUlab swap-in completes (meaning that CMUlab has set up the laptops with the right software, etc.), users log into the emulation controller and run a control program that syncs up with CMUlab and sets up the emulator hardware to be consistent with the experiment. Users can then run experiments; they need to run the control program again after the CMUlab swap-out. An autonomous solution, i.e., one requiring no user intervention, is planned for this project.

6.5 Measurement and Instrumentation

Identify any measurement capabilities either embedded in your substrate components or as dedicated

external test equipment.

Standard machine monitoring only - interface monitoring, tcpdump/pcap, nagios-style monitoring of

machine availability, etc.

6.6 Aggregate Specific Tools and Services

Describe any tools or services which may be available to users and unique to your substrate

contribution.

7 DOME

Substrate Technologies: Metro mobile network, compute nodes, variety of wireless interfaces, GPS

devices, wireless access points

Cluster: D

Project wiki: http://groups.geni.net/geni/wiki/DOME

7.1 Substrate Overview

Provide an overview of the hardware systems in your contribution.

The DOME test bed consists of 40 buses, each equipped with: