
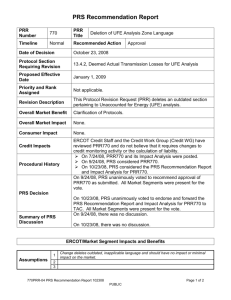

advertisement

An Honours Program Thesis Proposal

The E-Net Neuro Controller

Author: Joel Thomson
Adviser: Denis Doorly
April 16, 2004

Goal

To develop a robust controller capable of handling multiple flight regimes.

Purpose

Over the past decade, there has been increasing interest in Unmanned Aerial Vehicles (UAVs), aided primarily by the fact that the computing power required for capable and robust controllers has become much more readily available. For civilian purposes, they offer the possibility of an aircraft that can’t be hijacked. For military purposes, they offer increased maneuverability as human constraints are removed, and they remove the human pilot from immediate danger. While there are already many UAVs flying today, most of them still have an operator on the ground controlling the UAV by remote control, thus relying on a constant and secure communications link. The next step is to remove this leash and allow UAVs to fly autonomously; however, there are still many challenges to overcome.

A human pilot has the capability to think creatively, and to adjust and compensate for his surroundings, even if he hasn’t seen them before. Traditional controllers are designed to do very specific tasks under a specific set of conditions, and tend to be based on analytical analyses and stability criteria; these are otherwise known as ‘hard’ computing techniques, in that provable solutions are always used. This means that their performance tends to suffer when applied across many flight regimes or when subject to turbulence. Another approach to the solution must be found if an autonomous vehicle is to be constructed.

‘Soft’ computing techniques, such as neural networks, genetic algorithms, fuzzy logic, and probabilistic methods, have become the alternative of choice, as the large amount of computing power required to run them has become more easily available. The task now becomes finding the right soft technique that will yield an empirically stable controller.
In much the same manner that genetic algorithms combine two solutions in an attempt to obtain a better solution, this paper combines the Neuro-Adaptive Predictive Controller (NAPC) and the E-Net. The E-Net [1] is a classifier that uses a pair of genetic algorithms (GAs) to synthesize detectors and Pattern Recognition Systems (PRSs), which in turn are made up of different types of neural networks (NNs). The NAPC [2] uses a single NN as a plant model to predictively control an aircraft; however, the single NN proved to be limiting. The proposed approach involves training multiple NNs, each one to a different set of flight conditions, while implementing an E-Net to classify the flight condition and choose the correct predictive NN. The E-Net was chosen because it allows the set of flight conditions to which each NN is trained to evolve along with the E-Net itself. This proposed approach is called an E-Net Neuro-Controller (ENC).

The bulk of this paper is broken up into two main parts. The first presents the background knowledge that led to the development of the ENC; this includes brief discussions on feed-forward NNs, the NAPC, GAs, the E-Net, the GA used in the E-Net, and the aircraft model used to evaluate the ENC. The second part presents the details of the ENC. The paper concludes with preliminary results and a timetable for the completion of the research.

Background

These sections are intended as brief overviews of each topic and do not go into very much detail. Where appropriate, the reader is referred to further reading to gain a more comprehensive understanding of the subject.

Feed-Forward NN

[Figure: A neuron, which multiplies its inputs by weights (i1·w1, i2·w2, i3·w3), sums the products (Σ), and applies an activation function (φ)]

The first layer is known as the input layer, and simply holds the place for all the inputs. The intermediate layers, between the input and output layers, are known as hidden layers; the neurons in the hidden layers often have hyperbolic tangent activation functions, $\varphi(x) = \tanh(x)$.
The last layer is known as the output layer, and the outputs of these neurons represent the output of the NN; the neurons in the output layer often have linear activation functions, $\varphi(x) = x$.

A feed-forward NN consists of several layers of neurons, where each neuron in a given layer is connected to every neuron in the preceding and subsequent layers. A neuron multiplies each of its inputs by some weight, adds those products together, and applies an activation function; every neuron is identical in structure, though their weights and activation functions differ.

[Figure: A typical NN, showing the input layer, hidden layers, and output layer]

The weights of the neurons within a network are what make each NN unique. Offline, the weights are determined using complex training algorithms; common examples include Levenberg-Marquardt back propagation and gradient descent. Online, the weights of a NN can be adapted by making small adjustments to the weights on an ongoing basis. The exact details of these algorithms are beyond the scope of this paper; the reader is referred to [3] for further information.

A Linear Neural Classifier (LNC), which is part of an E-Net and is discussed later, is a feed-forward NN that has only an input layer and a linear output layer.

NAPC

The NAPC, as shown in [2], uses an adaptive feed-forward NN as a plant model to predict the behaviour of an aircraft. The input to the NN includes the aircraft state and control input, along with selected time-delays thereof that essentially give the NN a short-term memory. By varying the current control input, a performance index made up of two terms is optimized. The first term is the difference between the NN’s predicted state (based on the current control input) and the desired state of the aircraft (known beforehand); the second term represents the required control effort, or the difference between the current control input and the previous control input.
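The two-term performance index just described can be illustrated with a minimal sketch. This is not the NAPC implementation from [2]: the squared terms, the `effort_weight` value, the grid of candidate inputs, and the linear `toy_plant_model` standing in for the NN plant model are all assumptions made only for illustration.

```python
def performance_index(y_pred, y_des, u, u_prev, effort_weight=0.1):
    """Tracking error plus control-effort penalty (squaring is an assumption)."""
    tracking = (y_pred - y_des) ** 2   # predicted state vs. desired state
    effort = (u - u_prev) ** 2         # current vs. previous control input
    return tracking + effort_weight * effort


def toy_plant_model(y, u):
    """Hypothetical stand-in for the NN plant model (not a trained network)."""
    return 0.9 * y + 0.5 * u


def best_control(y, y_des, u_prev, candidates):
    """Return the candidate input with the smallest performance index."""
    return min(
        candidates,
        key=lambda u: performance_index(toy_plant_model(y, u), y_des, u, u_prev),
    )


# Candidate control inputs on a coarse grid in [-1, 1] (illustrative only).
candidates = [i / 100.0 for i in range(-100, 101)]
u_star = best_control(y=0.0, y_des=0.2, u_prev=0.0, candidates=candidates)
```

With the effort term removed, the toy model would call for an input of 0.4 to reach the desired state in one step; the effort penalty pulls the chosen input back toward the previous one.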
The control input that minimizes this performance index is then fed to the plant. The error between the NN’s prediction and the plant’s actual response is used to adapt the NN. As shown in [2], while the NAPC demonstrated a good ability to generalize even in the presence of considerable noise, the NN had difficulty dealing with the entire flight regime with which it was presented.

[Figure: Simplified NAPC diagram, showing the plant (aircraft) with output y, the performance index optimization with desired state yd and control input u, and the neural network (model of plant) with prediction yp and error e]

GAs

GAs use evolutionary strategies, based on what has been observed in genetic processes, to find the best solution to a problem. A GA evolves a population of potential solutions by altering and combining them in such a way as to encourage the emergence of a better solution. The first task is to devise a manner by which a solution to the problem can be encoded into a gene; this is known as the genetic structure. Each element, or allele, in the genetic structure represents a specific portion of a solution to a problem. A gene is often just a set of real numbers, or even just 1s and 0s.

The initial population of genes is created randomly. By decoding a member’s gene into a solution to the problem at hand, that member can be evaluated and assigned a fitness value. Genetic operators are then applied to the population, according to user-defined probabilities; some common operators include crossover, mutation, and elitism. Crossover is a method by which two parent genes in a given population are combined, or spliced together, to form a child in the new generation; the likelihood that a member of the population is chosen as a parent is based on its fitness. Mutation is a process by which the gene of a given member of the population is randomly perturbed; mutation is extremely important as it introduces variety to the population.
Elitism is where one or more of the fittest individuals in the population automatically become members of the new population. Once a new population has been formed, the cycle begins again by evaluating the new population. This is only a basic overview of GAs; a more specific look at the GAs used in the E-Net is covered later. For further reading, the reader is referred to [4].

The E-Net

An E-Net [1] is built using a pair of cooperating GAs, and its overall performance is judged by how well it classifies a set of inputs. Once during every outer evolutionary cycle, or iteration, after each GA has gone through a certain number of inner evolutionary cycles, or generations, information is exchanged between the GAs. After many iterations, an E-Net is produced that is capable of classifying an input.

[Figure: E-Net evolution diagram. The detector generator passes new detectors to the PRS generator; the PRS generator passes subnets from used detectors and detector training data to the detector generator]

One GA, known as the PRS generator, evolves a population of PRSs. Within the GA, a PRS is a set of GA-selected detectors, but in application, a PRS also includes an LNC whose inputs are taken from those detectors and whose outputs are the likelihood that the input belongs to a given class. The other GA, known as the detector generator, evolves a population of detectors, which are meant to extract features from the input. A detector is made from a set of subnets that form a NN of arbitrary structure with a single output; its structure is arbitrary in that it is not organized into layers, but instead into connections and their associated weights. All neurons in a detector have hyperbolic tangent activation functions. A formed detector can be split up into as many subnets as it has neurons; each subnet consists of a given neuron and all the neurons preceding it. Figure 1 shows the breakdown of a detector into its subnets; note that the first subnet is also the original detector.
[Figure: Division of a 5 neuron detector into 5 subnets; subnet 1 is the original detector]

Each subnet, detector, and PRS has an associated complexity, which, in the context of the E-Net, is defined as its total number of neural connections. For example, the original detector shown above would have a complexity of 12, while subnets 1 through 5 have respective complexities of 12, 4, 7, 2, and 3. The complexity of a PRS is the sum of the complexities of its detectors.

At each iteration, the PRS generator lets the detector generator know which inputs have been causing difficulty, so that during the next iteration the detector generator can find a feature common to those particular inputs. The detector generator’s mutation pool is updated based on the subnets extracted from the detectors that have been used in the PRS population. The PRS generator’s mutation pool is updated to include the new detectors that have been formed. This exchange of data between the populations is often referred to as the outer evolutionary cycle.

The E-Net GA

The pair of GAs used in the E-Net are different from conventional GAs, though they are identical to each other in every way except that the meaning of their alleles varies, and thus so does their evaluation method. In the detector generator, an allele represents a subnet, while in the PRS generator, an allele represents a detector. A gene is thus just a set of subnets or detectors, and the gene may vary in length, as different numbers of subnets or detectors may be used to make up detectors or PRSs (respectively). Detectors are evaluated according to how well their subnets allow them to fire when presented with an input that the PRS generator was having difficulty classifying. PRSs are evaluated based on how well their detectors allow them to properly classify the given input. Each member of the population thus has an associated fitness.
In order to prevent overly complex and overfitted solutions from developing, populations are constructed such that members are evenly distributed across equally sized complexity bins. The minimum complexity of the lowest bin is always 0, while the maximum complexity of the highest bin starts at the complexity of the most complex member of the initially random population, and adjusts to match the complexity of the most accurate member of the population.

In order to maintain the initial diversity of the population, multi-tiered tournament selection is used. Each member is matched up against a random subset of the population in the same complexity bin, and is awarded a victory if:

$$f_{member} > U(0,1)\,\left(f_{member} + f_{random}\right)$$

where $U(0,1)$ is a uniformly generated random number between 0 and 1, $f_{member}$ represents the fitness of the member, and $f_{random}$ represents the fitness of one of the members of the subset. The members of the population are ranked according to how many victories they accumulate; the more victories that a member accumulates, the larger its role in creating the new population.

Once two parents have been chosen, crossover involves taking random alleles from a randomly chosen parent until the child has the same length as the shortest parent. For every additional allele that the longer parent has, there is a 50% chance that a random allele from that parent will be added to the child. The child is then mutated by adding a random allele from the mutation pool. This type of crossover is similar to that found in genetic programming and, as pointed out in [5], in contrast to genetic algorithms, two parents can produce children that are quite different both from the parents and from each other, even if the two parents are similar in the first place.
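The crossover and mutation steps described above can be sketched as follows. This is an illustrative reading of the prose rather than the E-Net authors’ code: genes are represented as plain Python lists of allele labels, duplicate alleles in a child are allowed, and the function name `crossover` and the example labels are hypothetical.

```python
import random


def crossover(parent_a, parent_b, mutation_pool, rng):
    """Variable-length crossover followed by add-one mutation."""
    shorter, longer = sorted((parent_a, parent_b), key=len)
    child = []
    # Take random alleles from a randomly chosen parent until the child
    # is as long as the shorter parent.
    while len(child) < len(shorter):
        child.append(rng.choice(rng.choice((parent_a, parent_b))))
    # Each additional allele in the longer parent gives a 50% chance of
    # adding one random allele from that parent.
    for _ in range(len(longer) - len(shorter)):
        if rng.random() < 0.5:
            child.append(rng.choice(longer))
    # Mutation: add one random allele from the mutation pool.
    child.append(rng.choice(mutation_pool))
    return child


rng = random.Random(0)  # fixed seed so the sketch is repeatable
child = crossover(["s1", "s2"], ["s3", "s4", "s5", "s6"], ["m1"], rng)
```

Because alleles are drawn at random rather than spliced at a fixed point, two runs with the same parents can give children of different lengths and contents, which is the property noted in the comparison with genetic programming.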
The size of the population depends on how well the population is performing overall, and is given by:

$$S = \max\left(s_E\,\frac{f - f_{min}}{f_{max} - f_{min}},\ 1\right)$$

where $s_E$ is the maximum expanded population size, and $f_{max}$ and $f_{min}$ represent respectively the maximum and minimum obtainable fitness values. Thus, as the population develops better solutions, it grows by allowing more members in the next generation.

The Aircraft Model

In order to evaluate the performance of the ENC, a longitudinal aircraft model with ground reaction was built. The model is based on the equations presented in [6], but with landing gear equations added so that take-off and landing can be considered. Gravity is constant (9.80665 m/s²). The density of air is constant (1.225 kg/m³). The modified equations of motion are:

$$\dot{U} = \frac{X_A + X_T - m g \sin\theta + (X_{Gmain} + X_{Gnose})}{m}$$

$$\dot{W} = \frac{Z_A + m g \cos\theta + Z_{Gmain} + Z_{Gnose}}{m}$$

$$\dot{Q} = \frac{M_A + M_T - Z_{Gmain}\,x_{main} + X_{Gmain}\,z_{main} - Z_{Gnose}\,x_{nose} + X_{Gnose}\,z_{nose}}{I_{yy}}$$

$$\dot{\theta} = Q$$

$$\dot{X}_E = U \cos\theta + W \sin\theta$$

$$\dot{Z}_E = -U \sin\theta + W \cos\theta$$

where subscripts A, T, Gmain, and Gnose represent the aerodynamic, thrust, main ground, and nose ground (respectively) forces and moments acting on the aircraft. The lowercase letters are the positions of the landing gear relative to the CG. The equations for the aerodynamic forces are easily obtainable, and the coefficients are calculated as follows:

$$C_D = C_{D_0} + C_{D_\alpha}\,\alpha$$

$$C_L = C_{L_0} + C_{L_\alpha}\,\alpha + C_{L_{\delta_e}}\,\delta_e$$

$$C_M = C_{M_0} + C_{M_\alpha}\,\alpha + C_{M_{\delta_e}}\,\delta_e + C_{M_q}\,\hat{q}$$

The aerodynamic effects of the flaps and landing gear are as follows [2]:

$$\Delta C_{L,flaps} = 0.6, \qquad \Delta C_{D,flaps} = 0.02, \qquad \Delta C_{M,flaps} = 0.05, \qquad \Delta C_{D,gear} = 0.015$$

The thrust is a force pointing in the body-fixed x-direction with a z-direction offset that creates moments about the CG. Altitude (variations in density or temperature) is not taken into account in terms of available engine power.

There are two points of contact with the ground: the nose gear and the main gear. Thus, if there are any ground forces, then the nose gear, the main gear, or both are touching the ground.
Since the vertical and horizontal elements of the ground reaction of each landing gear are proportional to each other, the ground reaction was reduced to a magnitude and an angle of $\arctan(\mu)$ from the earth-fixed horizontal (note that the nose and main gear may have different $\mu$ values). The following three cases show what happens when different combinations of landing gear are on the ground. When both the main and nose gear are on the ground, there are two new forces, and thus two new pieces of information must be added to the system: $Q = 0$ and $Z_E = 0$. When only the main gear is on the ground, only one new piece of information is needed: $Z_{E_{main}} = 0$. When only the nose gear is on the ground, only one new piece of information is needed: $Z_{E_{nose}} = 0$.

Unfortunately, difficulties are encountered during integration when ‘if’ statements are used to choose the correct ground reaction force, due to the non-linear nature of an ‘if’ statement. To remedy this, a logsig function is employed to create a model that can be more easily integrated. At each time step, all three of the above cases are calculated, it is verified that the resulting forces aren’t negative (landing gear can’t hold the aircraft to the ground), and they are combined as follows:

$$F_{Gmain} = \Gamma_{main}\,\Gamma_{nose}\,F_{Gmain}^{both} + \Gamma_{main}\,(1 - \Gamma_{nose})\,F_{Gmain}^{main}$$

$$F_{Gnose} = \Gamma_{main}\,\Gamma_{nose}\,F_{Gnose}^{both} + (1 - \Gamma_{main})\,\Gamma_{nose}\,F_{Gnose}^{nose}$$

$\Gamma_{main}$ and $\Gamma_{nose}$ are calculated as follows:

$$\Gamma_{main} = \mathrm{logsig}\left(b\,Z_{E_{main}}\right), \qquad \Gamma_{nose} = \mathrm{logsig}\left(b\,Z_{E_{nose}}\right)$$

where $b$ is a scaling factor (typically a large integer) to ensure that the logsig doesn’t affect the dynamics of the aircraft.

Proposed work

E-Net Neuro-Controller

Online, the ENC algorithm is similar to that of the NAPC; however, instead of a single NN as a plant model, there is a set of NNs. An E-Net decides, based on the flight condition of the aircraft, which NN from the set will make the best prediction.
Flight condition, in the context of this paper, is defined as the state of the aircraft, its objective, and any other relevant data; the first two are considered in this work, while the last may include such concerns as damage, terrain, and targets. Flight regime refers to a common portion of an aircraft’s flight (such as take-off, climb, or cruise), and can be thought of as a range of flight conditions.

The NAPC had shown a good ability to robustly control an aircraft during takeoff; however, difficulty was encountered in training a large single NN to handle the entire flight regime. In order to implement such a controller across multiple flight regimes, the NN would need to be enormous. It would have to be able to make accurate predictions across a large range of flight conditions that may only vary by a single input. It would also need to be able to adapt in one flight condition without affecting its accuracy in other flight conditions, which would be difficult to accomplish. The task of training a NN of this size and complexity would be a daunting and computationally intensive process, as training time often increases exponentially with network size [1]. To overcome these issues, the ENC is proposed as an alternative.

[Figure: ENC diagram. As in the NAPC diagram, but the single plant model is replaced by a set of NNs (NN 1, NN 2, NN…), with an E-Net selecting which NN provides the prediction yp]

The ENC uses a set of NNs, and each NN in the set is trained to perform well under one set of flight conditions. This has three main advantages. First, each NN has to learn less; thus not only is it trained on less data, but it can also contain fewer neurons. Less data and fewer neurons decrease the time required for offline training, while fewer neurons also decrease the amount of time required for online computation. Second, the adaptive feedback is only applied to one of the NNs in the set, leaving the rest of the NNs in the set unchanged.
Third, since NNs are initialized with random weights, each NN will have a natural disposition to certain flight conditions in comparison to the other NNs in the set; the ENC exploits this fact, as will be addressed shortly.

There are two main drawbacks. First, the ENC requires the training of multiple NNs, though this is more than offset by the fact that the NNs and training sets are both smaller. Second, having multiple NNs implies that one of them has to be chosen to be applied to the current flight condition, which is where the E-Net is implemented.

The E-Net essentially classifies the flight condition in order to determine which NN should be used at a given point in time. It has one output neuron for each class of flight condition or, in the present context, one output neuron for each NN in the set; the NN whose output neuron (in the E-Net) fires the strongest is the NN that is applied to the current flight condition.

The E-Net was chosen for two principal reasons. The first is the fact that it is an evolving network; this allows the training process for the set of NNs and the evolutionary process for the E-Net to occur simultaneously, with each one adjusting and compensating for strengths and weaknesses in the other. The second reason is that the E-Net algorithm is designed to determine the necessary set of features on its own, rather than requiring a manual attempt to determine what will distinguish different flight conditions.

Offline, the E-Net algorithm forms the basis for the ENC. During each iteration in the E-Net, there are two populations that are updated, and then they exchange information. The ENC algorithm adds a third population, the set of NNs. While the first two populations are updated using a GA, the third uses conventional training algorithms.
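The online selection step described above, in which the NN whose E-Net output neuron fires the strongest is applied, can be sketched as below. The names `select_network`, `enc_predict`, `toy_classifier`, and `toy_networks` are hypothetical, and the classifier here is a hand-written stand-in rather than an evolved E-Net; only the winner-takes-all choice is taken from the text.

```python
def select_network(enet_outputs):
    """Return the index of the strongest E-Net output (winner takes all)."""
    return max(range(len(enet_outputs)), key=lambda i: enet_outputs[i])


def enc_predict(flight_condition, classifier, networks):
    """Classify the flight condition, then predict with the chosen NN only."""
    chosen = select_network(classifier(flight_condition))
    return chosen, networks[chosen](flight_condition)


def toy_classifier(fc):
    """Hypothetical stand-in for the E-Net: one output per NN in the set."""
    q = fc["pitch_rate"]
    return [q, -q, 0.05 - abs(q)]


# Hypothetical stand-ins for the trained predictive NNs.
toy_networks = [
    lambda fc: "prediction from NN 0",
    lambda fc: "prediction from NN 1",
    lambda fc: "prediction from NN 2",
]

chosen, prediction = enc_predict({"pitch_rate": 0.2}, toy_classifier, toy_networks)
```

Only the chosen NN is evaluated at each step, which is also the NN that would receive the adaptive feedback.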
[Figure: ENC evolution diagram. Lines of communication: new detectors (detector generator to PRS generator); subnets from used detectors and detector training data (PRS generator to detector generator); PRS output data (PRS generator to set of NNs); NN performance data (set of NNs to PRS generator)]

Communication between Populations

As shown in the figure, there are four lines of communication between the three populations. For the purposes of illustration, assume that there is an input dataset $P$ that consists of the flight conditions over which the ENC is to be utilized. There is also a desired output dataset $T$, obtained from the aircraft model. The development of these datasets and their specific details, such as time delays and jitter training, are explored later.

The set of NNs must let the PRS generator know which NNs perform well under which conditions. Given the input/output data $P$ and $T$, the error of each NN in the set is calculated. A blunt approach would record the NN that produced the least error for each input provided, and pass this information on to the PRS generator. Unfortunately, this tends to lead to a small number of NNs becoming dominant over the rest, thus failing to exploit the fact that there are multiple NNs and essentially eliminating the need for the E-Net. To prevent this from happening when passing data to the PRS generator, each NN is only allowed to be considered the best performer for an equal share of the inputs. Thus, if there are 1000 inputs and 10 NNs, each NN is only considered to be the best for 100 of those inputs. Let $T_{PRS}$ represent this new output dataset, which is created by the set of NNs and passed to the PRS generator every iteration.

In the PRS generator, the GA selects a set of detectors to be used by the PRS, the aim being to create a PRS that is capable of choosing the NN under the correct flight conditions to control the aircraft.
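The equal-share rule for building the dataset passed to the PRS generator is stated above only as a constraint, without a procedure. One possible way to satisfy it is the greedy sketch below, which is an assumption and not necessarily the method used in this work: all (error, input, NN) pairs are visited from smallest error to largest, and an input is given to a NN only while that NN is still under its quota. The function name `equal_share_labels` and the error values are hypothetical.

```python
def equal_share_labels(errors):
    """errors[i][k] is the error of NN k on input i; returns one NN index per input."""
    n_inputs, n_nets = len(errors), len(errors[0])
    quota = -(-n_inputs // n_nets)  # ceiling division: most inputs any NN may win
    pairs = sorted(
        (errors[i][k], i, k) for i in range(n_inputs) for k in range(n_nets)
    )
    labels = [None] * n_inputs
    counts = [0] * n_nets
    for _, i, k in pairs:
        # Assign the input only if it is still unlabelled and NN k has room.
        if labels[i] is None and counts[k] < quota:
            labels[i] = k
            counts[k] += 1
    return labels


# NN 0 has the lowest error on every input, but may only win half of them.
errors = [[0.1, 0.9], [0.2, 0.8], [0.3, 0.7], [0.4, 0.6]]
labels = equal_share_labels(errors)
```

In this toy case the blunt approach would label every input with NN 0; under the quota, NN 0 keeps the two inputs on which it is most accurate and the remaining inputs go to NN 1.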
The input data $P$ is fed through the detectors selected for the PRS to form dataset $\tilde{P}_{PRS}$. An LNC, whose inputs are the detectors that make up the PRS and whose strongest output determines which NN is to be used, is trained to provide the output $T_{PRS}$ given input $\tilde{P}_{PRS}$. A new PRS has now been created, which is bound to perform well with certain inputs and poorly with others; in order to train the detectors, a new output dataset, $T_D$, is created that records when the PRS correctly dealt with the given input and when the PRS had difficulty with it. $T_D$, along with all the detectors that were used in the PRS, is then passed to the detector generator.

[Figure: Pattern Recognition System. The input data P (vector input data) is fed through detectors D1, D2, …, DN to produce the extracted features P̃_PRS, which feed a linear neural classifier (LNC, winner takes all) whose output is ideally T_PRS]

In the detector generator, the GA selects a set of subnets to be used in a detector, the aim being to create a detector that will fire for inputs with which the PRS had difficulty. The input data $P$ is fed through the subnets to form dataset $\tilde{P}_D$, which is essentially a set of sub-features. A temporary linear neural classifier, whose inputs are the subnets of the given detector, is trained to provide the output $T_D$ given input $\tilde{P}_D$. A hyperbolic tangent neuron is created and trained to fire when the temporary linear neural classifier makes a mistake; the output of the given detector is the output of the new neuron.

[Figure: Detector. The input data P (vector input data) is fed through subnets S1, S2, …, SM to produce the sub-features P̃_D, which feed an LNC and a new neuron N; the new neuron fires when the LNC can’t]

Once the detector generator has created a new set of detectors, they are passed to the PRS generator. Given the new detectors, the PRS generator then makes its own judgment as to the flight conditions under which each NN from the set of NNs should be utilized. The NNs are then trained to try to match these flight conditions, and the cycle begins again.
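As a small illustration of the dataset that records where the PRS had difficulty, the sketch below marks each input according to whether the winner-takes-all output of the PRS matches the NN named in the target data. The +1/-1 encoding (chosen here to suit a hyperbolic tangent neuron), the function name `difficulty_targets`, and the example values are assumptions, not details given in the text.

```python
def difficulty_targets(prs_outputs, t_prs):
    """Return +1 where the PRS winner differs from the target NN, else -1."""
    targets = []
    for outputs, wanted in zip(prs_outputs, t_prs):
        # Winner takes all: the strongest LNC output names the chosen NN.
        winner = max(range(len(outputs)), key=lambda k: outputs[k])
        targets.append(1 if winner != wanted else -1)
    return targets


# Hypothetical LNC outputs for three inputs and two NNs, with target NN indices.
prs_outputs = [[0.9, 0.1], [0.4, 0.6], [0.7, 0.3]]
t_prs = [0, 0, 1]
t_d = difficulty_targets(prs_outputs, t_prs)
```

Here the PRS handles the first input correctly and has difficulty with the other two, so a detector trained on these targets would be encouraged to fire for the second and third inputs.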
Although the communication between the different populations has been laid out here in a deceptively linear manner, it in fact occurs simultaneously at each iteration. This leads to a somewhat obscure relationship in terms of evolutionary interactions between the different populations.

Evolutionary Interactions between Populations

As the three populations evolve and pass their information among each other, their interaction must be well coordinated in order to avoid having one of the populations become overly evolved. The most important interaction is between the PRS generator and the set of NNs. Should the PRS population not evolve fast enough in comparison to the NNs, the NNs end up simply being trained to the flight conditions chosen by the initially random PRS population. This is evident in the most extreme case, when the PRS population isn’t evolving at all; regardless of the information that the set of NNs passes to the PRS generator, the PRS generator will continue to pass the same information to the set of NNs, and the NNs will slowly be trained to obey the PRS population.

A similar matter that affects the performance of the ENC is the communication lag between the three populations. In the E-Net paper [1], it is demonstrated that from the time that a PRS has difficulty in classifying a certain input, it takes one iteration for a new detector to be created and another iteration for that detector to be introduced into the PRS generator’s mutation pool; thus it takes two iterations to correct the problem. In the case of the ENC, it may take twice as long to correct a given problem, as the information must be passed that much further.

The Question of Complexity

Conventional complexity is concerned with the extent to which a NN has organized itself into parallel processes. In the case of the ENC, this has essentially been algorithmically imposed. Further reading on this topic will be pursued later in the research.
Preliminary Results

A simple trial run is created in which the aircraft, starting from steady state, is commanded to climb, level off, descend, and level off. Commands are specified with a constant climb angle and speed. The figure provides an example of an ENC’s output; the different colours represent the use of different NNs by the ENC. In this particular case, the ENC has chosen to use one NN when the pitch rate is positive (yellow), another for a negative pitch rate (burgundy), and a third when the pitch rate is close to zero (blue). Please note that explaining this figure in terms of pitch rate is merely for convenience.

One problem with this example is that only three of the nine neural networks were used, a potential problem mentioned earlier. As well, it should be noted that the results are not yet sufficient to control an aircraft, though with the use of jitter training [2], this should not prove difficult.

Timetable

Date                        Goals
May 7, 2004                 Complete extended abstract, and submit to the 43rd AIAA Aerospace Sciences Meeting and Exhibit
Summer 2004                 Write complete paper and extend results using jitter training
Fall 2004 – Spring 2005     Explore other related algorithms and investigate their potential to enhance the ENC:
                              Ensembling
                              Bootstrapping
                              Bagging
                              Learning ENC (rather than adaptive)
Spring 2005                 Complete Thesis

Overview

Over the past decade, there has been increasing interest in Unmanned Aerial Vehicles (UAVs), aided primarily by the fact that the computing power required has become much more readily available, though many technical challenges still remain. In many cases, single Neural Networks (NNs) have proven to be effective at controlling aircraft in certain flight regimes (e.g. take-off, climb, cruise). This paper aims to extend this by using multiple NNs to control an aircraft, while using an E-Net to choose which NN should be used at a given point in time based on the state of the aircraft.
The E-Net uses a pair of genetic algorithms to evolve a neural classifier, and is adapted in this paper to also evolve the set of NNs used to control the aircraft. This proposed algorithm is called the E-Net Neuro Controller (ENC). Preliminary results indicate that the ENC appears to work well.

Bibliography

[1] Wicker, D.W., Rizki, M.M., and Tamburino, L.A., “E-Net: Evolutionary neural network synthesis”, Neurocomputing 42 (2002) 171–196
[2] Thomson, J., Jha, R., and Pradeep, S., “Neurocontroller Design for Nonlinear Control of Takeoff of Unmanned Aerospace Vehicles”, Proceedings of the 42nd AIAA Aerospace Sciences Meeting and Exhibit, Reno, NV, January 2004
[3] Haykin, S., 1999, Neural networks: a comprehensive foundation, Upper Saddle River: Prentice-Hall
[4] Michalewicz, Z., 1992, Genetic algorithms + data structures = evolution programs, Berlin & London: Springer-Verlag
[5] Koza, J.R., 1992, Genetic programming: on the programming of computers by means of natural selection, Massachusetts Institute of Technology
[6] Davies, M., 2003, The Standard Handbook for Aeronautical and Astronautical Engineers, McGraw Hill