
The New Internet

Jeffrey R. Ellis, Adam P. Uccello, Richard C. Gronback

University of Connecticut

Computer Science and Engineering

CSE 245 – Computer Networks

April 12, 2020

Abstract

The Gartner Group has predicted that a large minority of the more than 4,500 Internet Service Providers (ISPs) in the United States “will be forced out of business in the next five years” (Gar98, 1). Additionally, “[b]etween one-third and one-half of U.S. households will not be able to afford … the $50 to $60 monthly cost of cable modem access by century’s end” (Gar98, 2).

This is due to the inevitable spillover of new Internet technologies into the commercial market as the government and academic community invest in future networking technologies. Currently, there are two major initiatives aimed at relieving the bandwidth-constrained research and academic communities, which now share with commercial markets what was once their exclusive network: Internet2 (I2) and the Next Generation Internet (NGI). Although these are two separate programs, they have much in common, not just in concept but in physical hardware. This paper explores each individually and also examines the features they share, giving a comprehensive overview of what lies ahead in the new Internet and of why the Gartner Group may be correct in its assessment of the new Internet’s impact on commercial Internet activity.

CSE245 – Computer Networks J. Ellis, A. Uccello, R. Gronback

1. Introduction

As anyone living outside a cave in the last 4 to 5 years can attest, the Internet has revolutionized many facets of the way we live, work and do business. Before 1995, many of those same people had no idea that a cross-country computer network existed and was being used daily by researchers in both government and academic environments. Until the National Science Foundation’s (NSF) NSFnet went public in 1995, there was little reason for anyone outside those communities to know about it, or to care. Now, however, as the personal computer and its Internet browser(s) are found in almost as many homes as the television, we have come to expect this network not only to exist, but also to perform up to our ever-rising standards. Of course, as our “commercial” use increases, the institutions that formerly had exclusive access to this network have seen their available bandwidth squeezed to unacceptable levels. And so the cycle continues: these institutions are planning a new network (much of which is actually implemented on top of existing infrastructure) to regain their “private” access.

The original NSFnet provided relatively fast T3 (45 Mbps) connections between many university and government research facilities spanning most of the U.S. The NSF was the key player in developing this backbone and regional IP networks. Later, to encourage further development of the Internet and networking technologies, the Foundation partnered with MCI to develop the very-high-performance Backbone Network Service (vBNS), which opened for “business” in 1995. The particulars of the vBNS and its role in the new Internet are discussed below. For now, it is enough to note that the vBNS is important to both the I2 and NGI initiatives.

Both I2 and NGI address the problem of increased congestion on the present Internet. The difference between the two lies mainly in their approach to a solution. Internet2 is a bottom-up initiative and a project of the University Corporation for Advanced Internet Development (UCAID). It is composed of some 120 universities and 25 corporate sponsors, all of which pay “an annual fee of between $10,000 and $25,000 and must demonstrate that they are making a definitive, substantial, and continuing commitment to the development, evolution and use of networking facilities and applications in the conduct of research and education” (Fin98, 2).

On the other hand, Next Generation Internet is a top-down initiative, originating in the White House, “involving federal agencies, that reaches down to academia and the user community. Essentially, its mandate is to remove roadblocks to continued American dominance in technological innovation” (Fin98, 4). While I2 is an off-the-shelf approach using existing technologies, NGI is interested in the research and development of new networking technologies.

The New Internet Page 2 of 29 04/12/20

Both programs will initially serve as test-beds, as did the original NSFnet, and will probably be released for commercial use, as was the current Internet.

Given all of the hype over the present Internet, it is not surprising to find ourselves subjected to even more hype over the next generation Internet. There is often confusion about how this new Internet is coming into being, and this paper aims to clear up some common misconceptions. First of all, no new cabling is currently being laid across the country.

Both I2 and NGI will utilize existing backbones. Additionally, to connect these backbones efficiently, both initiatives will need to use GigaPOPs. A GigaPOP is a gigabit-capacity Point of Presence that provides discrete points of access to the newer high-capacity backbones. An example of an existing GigaPOP in North Carolina is detailed below. Also, as IPv4 has nearly reached the limits of its expandability, the new Internet will incorporate the newer IPv6, of which a brief overview is also given below. Sections 2 and 3 examine the specifics of I2 and NGI, respectively; section 4 then gives an overview of the vBNS, GigaPOPs and IPv6.
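The main difference between IPv4 and IPv6 is address size: IPv4 addresses are 32 bits long and IPv6 addresses are 128 bits long. A short sketch using Python’s standard-library `ipaddress` module makes the scale concrete. The helper name `address_space` is our own, and the two sample addresses come from the ranges reserved for documentation, so they are purely illustrative.

```python
import ipaddress


def address_space(version: int) -> int:
    """Total number of addresses in the entire IPv4 (version 4) or IPv6 (version 6) space."""
    whole = ipaddress.ip_network("0.0.0.0/0" if version == 4 else "::/0")
    return whole.num_addresses


# Documentation-range addresses (192.0.2.0/24 and 2001:db8::/32), used only for illustration.
v4_bits = ipaddress.IPv4Address("192.0.2.1").max_prefixlen
v6_bits = ipaddress.IPv6Address("2001:db8::1").max_prefixlen

v4_total = address_space(4)
v6_total = address_space(6)

print(f"IPv4: {v4_bits}-bit addresses, {v4_total:,} in total")
print(f"IPv6: {v6_bits}-bit addresses, about {v6_total:.3e} in total")
print(f"IPv6 offers 2**{v6_bits - v4_bits} times as many addresses as IPv4")
```

This gives roughly 4.3 billion IPv4 addresses against about 3.4 × 10^38 IPv6 addresses. These are raw counts, and parts of both spaces are reserved, so fewer addresses are actually usable.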

2. Internet2 (I2)

Ironically, the need for the Internet2 project has grown out of the commercial success of the original Internet. Whereas the Internet once provided ample opportunity for universities and research organizations to study, experiment, download and share information, the rush of private companies and corporations to use the network for commercial activity has clogged the information expressway and forced researchers to evaluate alternative methods for experimentation and research. And while capitalism and business usually drive advances in technology, the problems associated with the existing Internet’s architecture are too massive for any company or consortium of companies to combat successfully.

Therefore, in late 1996, thirty-four universities met to examine the current Internet situation and plan how to develop new networking technologies and applications. The result of this meeting was the plan for Internet2, whose mission is to:


Facilitate and coordinate the development, deployment, operation and technology transfer of advanced, network-based applications and network services to further U.S. leadership in research and higher education and accelerate the availability of new services and applications on the Internet.

(In2M98)

The universities pledged to set up this “second” Internet as a way to further research and development of networking issues and technologies. Running on high-performance network backbones, separate from the mainstream Internet, and utilizing new Point-of-Presence access junctions of exceedingly high data-rate capacity, this new internet would serve as a test-bed for experimental technologies and application programming. And, as it would be a private network accessible only by cooperating universities, it would suffer from none of the bandwidth overloads of the original Internet.

Today, Internet2 boasts over 130 member universities and institutions of learning. Overseen by the University Corporation for Advanced Internet Development (UCAID), Internet2 has also drawn support from corporate sponsors, who have pledged $30 million to the project. Member universities are providing over $60 million each year in equipment, personnel, and funding. Since the announcement of Internet2 in October 1996, these institutions and this funding have transformed the idea of a next-generation Internet into a reality, through two enormous network backbones and a dozen GigaPOP access nodes.

UCAID has identified three major goals for the Internet2 project. First and foremost is the development of a cutting-edge research network. As universities have often pioneered advanced networks, and contributed to the success of the original Internet, UCAID believes it is the responsibility of academia to create and maintain an advanced network. Identifiable aspects of a cutting-edge network include large-bandwidth backbones, high-capacity PoPs, experimental technologies for data transfer, adaptability, reliability, security, and groundbreaking hardware devices. Many of these areas are expected to advance as a direct result of I2 development: university researchers will use current technologies until newer technologies stabilize, and the flexible network will then be “upgraded” to take advantage of the newly offered services. In this sense, I2 is designed to remain in a constant state of flux.


A second goal set forth for Internet2 is the development of revolutionary networking applications. Although the current Internet does support client/server application programming, mostly through applets and CGI scripts on the World Wide Web, full-fledged network applications are few and far between on today’s Internet. I2 hopes to remedy this by providing a development environment in which computer scientists and researchers can create applications that use all the resources and services developed in this advanced physical network. This focus of the project signals a shift away from client/server applications toward a fully distributed programming paradigm, a shift that is hoped to lead to more efficient programming practices and, in general, better use of available resources.

The final goal set forth by UCAID involves the community at large: the transfer of all network advances to the commercial Internet. UCAID recognizes that although research for the sake of increasing knowledge is important, practical application of that knowledge to mainstream society is also beneficial. All research results of Internet2 are therefore placed in the public domain, and companies in private industry are encouraged to adopt the gains of I2 for commercial advancement. Advances in network design resulting from the first goal are expected to be implemented by mainstream Internet service providers and by the companies responsible for creating and maintaining the actual physical network connections.

Likewise, standards organizations are expected to adopt any new standards and technologies that prove successful on I2. At the same time, all web-based industries and organizations are encouraged to incorporate the new application media into their existing online applications. Only if the technologies born of the I2 project are adopted and fostered by the community at large will the research yield practical value.

Although these are the main aspects of the Internet2 project, UCAID has outlined several other objectives that it would like to meet. As I2 is a nonprofit, research-based network, members of the project are expected to cooperate, not compete, on various portions of research. It is hoped that I2 will facilitate the coordination of standards and practices among the members. In addition to cooperating on standards issues, researchers are expected to create and use new applications that facilitate the sharing of research and experimental data, so as to cut down on data duplication and redundant experimental overhead and to foster a spirit of coordination between members. Universities will also partner with government and private-sector organizations to develop software beneficial to all.

I2 is primarily an educational tool, and advances in education are strongly encouraged. Virtual proximity, the concept of high-quality, real-time video communication among individuals separated by physical distance, has become one of the mainstays of I2 development. In a recent demonstration of I2 technology, a handful of doctors across the U.S. assisted remotely in gallbladder surgery. I2 will allow for further enhancement of virtual proximity in educational services, and students and other researchers will be encouraged to experiment with communication technologies. The impact of new networking paradigms and research will also be studied at educational institutions, with the results analyzed to prepare for adoption on the regular Internet.

Quality of Service (QoS) is a hot topic in today’s Internet industry, and I2 has a special task force assigned to develop network services that give stronger assurances that data will be delivered properly to the correct client. QoS infrastructures will be developed and deployed on I2 to address this common problem. Advanced application programming will be encouraged by the development of middleware and other tools that facilitate design and coding. And, of course, the migration of all I2 technological advances to the regular Internet will be actively encouraged.

These additional objectives, nine in all, when combined with the goals already explored, set forth a complete picture of the development of I2, and enumerating them gives a quantitative measure for the project’s continued success. In short, I2 will develop the Internet of the future, one piece at a time.

The hardware that supports Internet2 must, by the project’s definition, provide the most advanced physical network available. First under consideration is the network’s backbone, the major pipe through which non-local data is transferred. Existing Internet backbones do not provide the bandwidth necessary for the goals of I2, so UCAID had to find other backbone resources. The two alternatives were to find an existing backbone and upgrade it as necessary, or to create one from scratch. The first option would be faster and cheaper, but it would give UCAID neither total control over data transmission nor sole discretion over upgrades. In the end, both strategies were implemented: the Internet2 project began to use the existing vBNS backbone and to pioneer the Abilene backbone.


The vBNS, or very high performance Backbone Network Service, was launched by MCI and the National Science Foundation in April 1995 to provide a backbone for advanced research and application development. The vBNS was designed to carry standard IP over ATM, a cell-switching technology, running on the Synchronous Optical Network (SONET) standard. These technologies allow the vBNS to operate at a capacity of 622 Mbps (OC12), approximately 403 times today’s standard T1 link (1.544 Mbps). Upgrades planned for the turn of the century will increase the bandwidth fourfold, to OC48 (2.488 Gbps).
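The OC12 and OC48 figures, and the comparison with a T1, follow from one rule: a SONET OC-n link runs at n times the OC-1 base rate of 51.84 Mbps. The sketch below is a minimal illustration of that arithmetic. The constant and function names are our own.

```python
# SONET optical carrier levels: OC-n runs at n times the OC-1 base rate.
OC1_MBPS = 51.84   # OC-1 / STS-1 line rate
T1_MBPS = 1.544    # DS1 (T1) rate, the yardstick used in the text


def oc_rate_mbps(n: int) -> float:
    """Gross line rate of an OC-n link in Mbps (includes SONET overhead)."""
    return n * OC1_MBPS


for n in (3, 12, 48, 192):
    rate = oc_rate_mbps(n)
    print(f"OC-{n:<3} {rate:>9,.2f} Mbps  ~{rate / T1_MBPS:,.0f} x T1")
```

OC-12 works out to 622.08 Mbps, about 403 T1 links, and OC-48 is exactly four times that, at 2,488.32 Mbps. These are gross line rates that include SONET framing overhead. The usable payload is somewhat lower, which is why rounder figures such as 2.4 Gbps for OC48 and 9.6 Gbps for OC192 also appear in this paper.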

In addition to its high bandwidth, several other aspects of the vBNS proved enticing to Internet2 partners. The backbone had already demonstrated low latency, high throughput, stability, wide coverage, and consistently low congestion. MCI provided both a production network and a test-bed network for experimentation. Mechanisms were already in place for measuring network traffic and performance, and monthly reports were distributed to all interested parties. A public archive detailed all previous performance activities, network tests, and engineering experiments. Important work in multicasting, quality of service, and the next-generation IP standard (IPv6) was already underway. The vBNS was a natural fit for the ideals of I2, and over 50 universities have connected to it as part of the Internet2 initiative.

However, the vBNS was only a partial solution to Internet2’s need for backbone services. Because MCI and the NSF led the vBNS project, UCAID could not force those organizations to upgrade their network bandwidth to OC48 (2.4 Gbps) to meet the demands of I2. Further, the agreement between MCI and the NSF was only for five years, expiring in April 2000. Although a continuation of the project is likely, UCAID did not want I2’s continued existence to depend on a further agreement between the two organizations. Also, because the backbone was sponsored by the NSF, research institutions not directly related to Internet2 were also using the network and taking advantage of its bandwidth. Therefore, in April 1998, Vice President Gore announced that UCAID would be developing a new high-performance backbone called Abilene. Less than a year later, on February 24, 1999, Abilene was activated.

Partnering with Qwest Communications, Nortel Networks, Cisco Systems, and Indiana University, UCAID has created an OC48 (2.4 Gbps) network in support of Internet2 research and experimentation. UCAID outlined three goals for the new Abilene network: it would support the demands of up-and-coming advanced research applications, enable the testing of new network capabilities, and provide opportunities for conducting network research. Abilene is not meant to compete with the vBNS for subscribers. Rather, it addresses the concerns that UCAID members had with the vBNS. The contract between Cisco, Nortel, Qwest, and Indiana University outlasts the NSF-MCI partnership. The network is being built from the start at the OC48 rate that the vBNS will reach only after its planned upgrade, and Abilene’s developers are planning a migration to OC192 links (9.6 Gbps). Also, UCAID would have strict control over who could access Abilene. And as Abilene would use different topologies from the vBNS, member universities would have more choice in technology development by choosing either network.

The major difference in technology between Abilene and the vBNS is that in UCAID’s project, IP is carried directly over SONET, eliminating the overhead of ATM switching. Advances in routing technology since the creation of the vBNS enabled this change, and the routers provided by Cisco are state-of-the-art. Abilene will nevertheless provide links to the vBNS to ensure maximum productivity for member organizations. Abilene’s launch was entirely successful and was marked by the demonstration of the virtual proximity gallbladder surgery.
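The ATM overhead that Abilene avoids can be estimated with a short calculation. This minimal sketch assumes that IP packets travel over ATM Adaptation Layer 5 (AAL5) with VC-multiplexed encapsulation, which adds no LLC/SNAP header. Under that assumption, AAL5 appends an 8-byte trailer, pads the result to a multiple of 48 bytes, and sends each 48-byte chunk in a 53-byte cell. The sketch ignores SONET framing, which both backbones share. The packet sizes and the function name are illustrative.

```python
import math

CELL_BYTES = 53      # ATM cell: 5-byte header + 48-byte payload
CELL_PAYLOAD = 48    # payload bytes carried per cell
AAL5_TRAILER = 8     # AAL5 trailer appended to each packet before segmentation


def atm_bytes_on_wire(ip_packet_bytes: int) -> int:
    """Bytes sent on an ATM link for one IP packet carried over AAL5.

    Assumes VC-multiplexed encapsulation (no LLC/SNAP header).
    """
    cells = math.ceil((ip_packet_bytes + AAL5_TRAILER) / CELL_PAYLOAD)
    return cells * CELL_BYTES


for size in (40, 576, 1500):
    wire = atm_bytes_on_wire(size)
    print(f"{size:5d}-byte IP packet -> {wire:5d} bytes on the wire, "
          f"{100 * (1 - size / wire):.1f}% overhead")
```

A 1,500-byte packet needs 32 cells (1,696 bytes), which is roughly 11.6% overhead. Small packets fare worse: a 40-byte packet loses about a quarter of its cell to overhead. Packet over SONET, by contrast, adds only a few bytes of PPP/HDLC framing per packet.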

Both backbones provide high-performance network traffic, but backbones alone do not solve the bandwidth woes of the common Internet. Equally important is the Point-of-Presence (PoP) that connects a local network to the backbone. Points-of-Presence can be thought of as the on-ramps to the so-called information highway. And of course, to take advantage of the high performance of the backbone, the PoP must have sufficiently high data capacity as well. Thus the concept of the GigaPOP was born.

The term GigaPOP simply means that any connection to the backbone must run at a rate high enough to handle gigabit transactions. The connection rate does not have to be 1 Gbps or more, but it must be higher than that of a standard Internet PoP. One of Internet2’s defining characteristics is that each member university must connect to a backbone through a GigaPOP. The cost of developing and deploying a GigaPOP is what keeps some universities from getting actively involved in Internet2. Strawn and Luker predict that the ongoing cost of GigaPOP and Internet service may reach $500,000 for some universities. GigaPOP technology is therefore currently the major bottleneck for Internet2 participation. The backbones exist and are ready for users, but universities have to fund GigaPOP access junctions before they can take advantage of, and contribute to, the services of the vBNS and Abilene.

Of course, Internet2 is about more than just the underlying hardware. Because developing groundbreaking hardware is already very profitable for industry, I2 research focuses more on software, communication methods, protocols, and the like. UCAID and member universities have identified nine Internet2 working groups, in which ongoing research in particular areas is collected and concentrated. Universities are encouraged to pursue their own research and development projects, but an experiment related to one of the working groups would gain better facilities and more data by joining that working group. Each of these working groups publishes regular updates for public consumption (In2W99):

IPv6: The University of Nebraska is working toward full production of the next IP standard, which uses 128-bit addresses instead of IPv4’s 32-bit addresses. Working in tandem with the IPv6 Research and Education Network, the IPv6 group is attempting to secure the deployment of a production-quality IPv6 system on one of the backbones.

QoS: The Quality of Service group is coordinating and developing the QBone Initiative (explained later in this paper). Quality of Service refers to a packet-delivery paradigm that is more reliable than the current Internet’s best-effort delivery. Ensuring data delivery is one of the key aspects of Internet2, as advanced applications cannot afford to lose data in transit across the network. The QoS group is involved in QBone development, testing, and standards-setting.

Measurements: The University of California is leading the Measurement working group, studying network performance. The group is working on developing measurement standards for GigaPOPs. Currently, the Measurements group is working with the QoS group to study QBone test results and develop performance measurements.

Network Storage: The University of Tennessee, partnering with IBM, is studying network storage. The Distributed Storage Infrastructure (DSI) initiative (discussed further in this paper) is a result of this working group.


Multicast: Multicasting is the ability of one host to transmit data to several clients at the same time. Currently, the Internet supports this only by clients forwarding the packets of information onto other clients, essentially becoming hosts themselves. This group strives to develop true ways of multicasting, as opposed to the Internet’s read-and-forward approach.

Topology: This group is focused on communicating with the Next Generation Internet initiative. As many of the goals of the NGI effort are synonymous with those of Internet2, the Topology group discusses where and when various networks can be joined to provide better services to members.

Routing: Based at the University of Washington, the Routing group examines the latest routing issues and technologies, including routing registries for different host locations and explicit forwarding of data packets.

Network Management: The Network Management group focuses on the Abilene backbone. This group is taking long-term responsibility for Abilene’s continued operation, as well as architecting future management tools. It is currently working with the Routing group to develop common routing registries.

Security: Based at the Pittsburgh Supercomputing Center, the Security group is working on a test authentication scheme with the Corporation for Research and Education Networking. Security, like multicasting and QoS, is often bandied about as a major focus of I2 research.
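The bandwidth argument behind the Multicast group’s work can be made concrete with a toy model. The sketch below compares two approaches: sending a separate unicast copy to each receiver, and network-layer multicast, in which routers replicate a packet only where the distribution tree branches. The tree, its node names (such as `gigapop_east`), and the link-counting model are hypothetical illustrations, not a description of any real I2 topology.

```python
from typing import Dict, List, Optional

# Hypothetical distribution tree: each node maps to its parent (None = the source).
Tree = Dict[str, Optional[str]]


def path_to_source(tree: Tree, node: str) -> List[str]:
    """Links on the path from `node` up to the source, each named by its downstream end."""
    links = []
    while tree[node] is not None:
        links.append(node)
        node = tree[node]
    return links


def unicast_link_copies(tree: Tree, receivers: List[str]) -> int:
    # One separate copy per receiver, each crossing every link on its own path.
    return sum(len(path_to_source(tree, r)) for r in receivers)


def multicast_link_copies(tree: Tree, receivers: List[str]) -> int:
    # Routers replicate only at branch points, so each link used carries one copy.
    links = set()
    for r in receivers:
        links.update(path_to_source(tree, r))
    return len(links)


tree: Tree = {
    "source": None,
    "backbone": "source",
    "gigapop_east": "backbone",
    "gigapop_west": "backbone",
    "campus_a": "gigapop_east",
    "campus_b": "gigapop_east",
    "campus_c": "gigapop_west",
    "campus_d": "gigapop_west",
}
receivers = ["campus_a", "campus_b", "campus_c", "campus_d"]
print("unicast link copies:", unicast_link_copies(tree, receivers))
print("multicast link copies:", multicast_link_copies(tree, receivers))
```

With four receiving campuses behind two GigaPOPs, unicast puts 12 packet copies on the links, while multicast needs only 7. The gap widens as receivers are added.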

These working groups represent a good cross-section of the technologies currently being studied by I2 researchers. They turn lofty, general goals into concrete action items, and they are a great starting point for future research. Such research has already led to three I2 initiatives, each an outgrowth of ideas presented by the working groups. These initiatives, QBone, the Digital Video Network, and the Distributed Storage Infrastructure, are already putting I2-developed technologies to practical use.

QBone is the name of a test-bed for Quality of Service testing on Internet2. Developed by the QoS working group, the QBone uses Differentiated Services (DiffServ) to separate high-impact computing from general network traffic. Basically, DiffServ works as follows: high-importance data and application code can be marked with a special high priority, whereas common network services (HTML pages, e-mail, non-real-time data) are not marked. When routers forward packets, they hold the common-service data in their queues in favor of forwarding the high-priority data. This keeps continuous data streams from being lost or slowed down by volumes of less important data. Such a QoS implementation is necessary to Internet2’s success. Early implementations at the backbone and GigaPOP level ensured QoS by allocating exorbitant amounts of bandwidth to deserving high-priority data, a policy that will not scale to the Internet, whereas the DiffServ approach is readily applicable to the commercial Internet. The QBone provides not only DiffServ, but also methods for tracking and measuring the effects of the implementation.
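The marking-and-queueing behavior described above can be sketched as a toy router output port. This is a minimal sketch under simplifying assumptions. The DSCP value 46 for Expedited Forwarding is a real standard code point. However, serving it with strict priority over a single best-effort queue is our own illustrative choice, not QBone’s documented configuration, and the class and packet names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional

EF = 46          # Expedited Forwarding DSCP (RFC 3246)
BEST_EFFORT = 0  # default DSCP carried by unmarked traffic


@dataclass
class Packet:
    name: str
    dscp: int = BEST_EFFORT


class StrictPriorityPort:
    """Toy router output port: EF-marked packets always leave before unmarked ones."""

    def __init__(self) -> None:
        self.priority: Deque[Packet] = deque()
        self.best_effort: Deque[Packet] = deque()

    def enqueue(self, pkt: Packet) -> None:
        # Classify on the DSCP mark alone, as a DiffServ core router would.
        if pkt.dscp == EF:
            self.priority.append(pkt)
        else:
            self.best_effort.append(pkt)

    def dequeue(self) -> Optional[Packet]:
        # Best-effort packets wait until the priority queue is empty.
        if self.priority:
            return self.priority.popleft()
        if self.best_effort:
            return self.best_effort.popleft()
        return None


port = StrictPriorityPort()
arrivals = [Packet("email"), Packet("video-1", EF), Packet("web"), Packet("video-2", EF)]
for pkt in arrivals:
    port.enqueue(pkt)

sent: List[str] = []
while (pkt := port.dequeue()) is not None:
    sent.append(pkt.name)
print(sent)  # ['video-1', 'video-2', 'email', 'web']
```

If marked traffic is not limited, strict priority lets it starve everything else. For this reason, DiffServ deployments police or rate-limit high-priority traffic at the network edge. This sketch omits that step.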

The Digital Video Network (DVN) initiative is one service that expects to take advantage of progress made on the QBone. Although real-time video exists on the Internet, it suffers from performance problems, chiefly the lack of Quality of Service guarantees. The DVN initiative exists to provide more advanced video networking. In addition to supporting application development, the DVN will become a production system that supports member universities’ needs for teleconferencing, courses, and other shared information. The aforementioned gallbladder surgery was made possible through the advances of the DVN service.

A third initiative, the Distributed Storage Infrastructure (DSI) initiative, is exploring how to incorporate storage resources directly into the network. Recognizing that data transfer will continue to burden network traffic, DSI researchers are using intelligent replication of data to alleviate the problem. DSI is also studying the concept of I2 channels to provide consistent data links from server to user. These channels would extend the power of Internet channels by allowing non-web services to be passed through the data stream. The DSI’s advances are intended for general use, of course, but its main goals are directed at producing the next century’s education network.

The goals, topology, and ongoing projects of Internet2 have all been discussed on a national level. But individual universities are as important to the success of I2 as any of the working groups or initiatives already mentioned. The University of Connecticut is a working member of the Internet2 community, and although it is not at the forefront of I2 development, it will be taken as an example of what a single university can accomplish through I2 membership.

Led by Dr. Peter Luh and the UConn Computer Center (UCC), the UConn I2 program consists of six different projects (Luh98). Each requires the advances inherent in the I2 paradigm, and each will provide useful research and data in its associated field.

First, the School of Education proposes to study the effects of distance learning on students in grades K-12. Implementing the Virtual Proximity ideas, project leader Michael Young believes that only two-way interactive distance learning actually promotes learning, whereas one-way video teaching promotes only boredom. Young argues that distance learning could affect the career choices young people make, and that science and technology occupations could be promoted to children via Virtual Proximity. His proposal includes the study of Virtual Proximity effects on teachers and students alike. The proposal meets the tenets of the Internet2 program: a justifiable use of network bandwidth, educational content, and relevance to the community at large.

Krishna Pattipati and Peter Willett from the Electrical Engineering department plan to use the features of I2 to work on network-based monitoring and fault diagnosis. The idea is that in large production systems, it is nearly impossible to diagnose a fault with a minimum of downtime when one occurs. The engineers suggest that advances in network science may revolutionize system monitoring and remote diagnosis by collecting system state data from widespread data collectors. They will be testing new monitoring algorithms and reporting tools as part of a joint project with NASA.

Dr. Luh and L. Thakur from the Booth Research Center intend to study network-based scheduling and supply chain coordination. Understanding that competition in today’s marketplace depends more on time considerations than on cost or quality, Luh and Thakur have developed methods for comparing different aspects of project development and identifying which aspects are responsible for timing issues. They propose to develop a tool implementing an “integrated planning, scheduling, and supply chain management method” that uses I2 services for communication, and to test a prototype at various I2 member universities. If it proves successful there, a later version of the tool may be deployed to corporate sponsors.

Distributed Services Telemedicine is the topic of study for Dr. Ian Greenshields and Dr. G. Ramsby. Greenshields and Ramsby suggest that simple video communication between doctors at remote sites is no longer good enough. In their view, images should also be quantitatively analyzed, digitally enhanced, and diagnosed. Further, the scientists believe a fully distributed environment to be more advantageous than a client/server methodology. A Java/CORBA tool is being developed, and the researchers expect to test the product on I2.

Dong-Guk Shin and Peter Gogarten are cooperating on research for the Human Genome Project. Explaining that the Genome Project has produced an enormous amount of data, Shin and Gogarten state that only a network with many available resources would have enough power to analyze it. They propose the creation of a virtual genome center, through which participating researchers can share important data analyses.

UCC and Robert Vietzke plan to create a multimedia routing network. Vietzke proposes a network that combines analog and digital signals in a single multimedia network device. The I2 advances he plans to take advantage of include the improved bandwidth capabilities of the backbone and the Quality of Service option, which does not exist on the standard Internet. This project, like all the UConn-sponsored I2 projects, fits the ideals of I2 perfectly.

As the preceding discussion shows, Internet2 is a forward-thinking project that is already making huge strides. Its goals are to advance the science of networking and to apply these advances to the common Internet. The hardware contributions of companies like Cisco and MCI, the studies of the national working groups and initiatives, and the individual University of Connecticut I2 projects all show that the goals of UCAID are truly being realized through I2. Internet2 is certainly helping develop the next generation’s Internet.

3. Next Generation Internet (NGI)

History suggests that technology advances most effectively when it is fueled by large amounts of capital. A precedent has thus been established for the government to take on, and fund, large next-generation research and development projects. Just as the original ‘Internet’ was born as a DARPA project, the Next Generation Internet (NGI) project is beginning its life as a large government-funded R&D project. As for taxpayer justification, the NGI program is said to be “essential to sustain U.S. technological leadership in computing and communications and [to] enhance U.S. economic competitiveness.” (LSN98, 1)


Developing new networking technologies is no small task. Recognition of this fact has led to a coalition of many government, commercial, and academic institutions. On the government side, these include the Defense Advanced Research Projects Agency (DARPA), the National Science Foundation (NSF), the National Aeronautics and Space Administration (NASA), the National Institute of Standards and Technology (NIST), the National Library of Medicine (NLM), and the Department of Energy (DoE). The NGI thus has some very large institutions working toward its success, and it is also backed by a very large sum of money.

The NGI’s budget falls under the Large Scale Networking (LSN) Working Group of the Subcommittee on Computing, Information, and Communications (CIC) R&D. For fiscal year (FY) 1998, the NGI budget was $100 million. For FYs 1999 and 2000, the current proposal calls for $110 million per year. With the farthest deliverable currently projected for 2002, the NGI project will evolve over a number of years, most likely receiving increased funding as network superiority becomes an increasingly important part of the goal of U.S. technical dominance.

In a sentence,

The goal of the NGI initiative is to conduct R&D in advanced networking technologies, to demonstrate those technologies in testbeds that are 100 to 1,000 times faster than today’s Internet, and to develop and demonstra[te] on those testbeds revolutionary applications that meet important national needs and that cannot be achieved with today’s Internet. (LSN98, 1)

More formally, there are three goals that have been defined and under which research is currently being conducted:

1. To advance research, development, and experimentation in the next generation of networking technologies to add functionality and improve performance.

2. To develop a Next Generation Internet testbed, emphasizing end-to-end performance, to support networking research and demonstrate new networking technologies. This testbed will connect at least 100 NGI sites – universities, Federal research institutions, and other research partners – at speeds 100 times faster than today’s Internet, and will connect on the order of 10 sites at speeds 1,000 times faster than the current Internet.

3. To develop and demonstrate revolutionary applications that meet important national goals and missions and that rely on the advances made in goals 1 and 2. These applications are not possible on today’s Internet.

(LSN98, 2)

These goals are fairly broad, which allows the NGI initiative to encompass many different aspects of the future of the Internet and of communications technologies as a whole.

3.1 Goal I: Experimental Research for Advanced Network Technologies

The thrust of Goal 1 is to design, develop, and deploy advanced networking technologies. It is touted as the ‘pathway’ to terabit-per-second speeds over wide area advanced networks. This will be accomplished through partnerships with industry that allow for the construction of an infrastructure that new advanced applications can use profitably. Advancements made in Goal 1 will be continually inserted into the Goal 2 testbed, so that new technologies can be experimented with ‘on the fly’, shortening the time between successive iterations of an emerging technology. The goal is broken down into three major sub-goals:

1. Network Growth Engineering

2. End-to-end Quality of Service (QoS)

3. Security

3.1.1 Network Growth Engineering

This sub-goal is concerned with the evolution of the new network topologies. It seeks to implement a scalable architecture with the mechanisms necessary for growth built in.

This task is in turn broken down into three major areas:

1. Create and deploy tools and algorithms for planning and operations that guarantee predictable end-to-end performance at scales and complexities of 100 times those of the current Internet.

2. Facilitate management of large-scale internetworks operating at gigabit to terabit speeds supporting a range of traffic classes on a shared infrastructure.

3. Create an infrastructure partnership through which lead users (government and research) share facilities with the general public thereby accelerating the development and penetration of novel network applications.

(LSN98, 9)

In support of this task are the following areas of concentration:

Planning and Simulation: This concentration seeks to formalize and possibly automate the planning necessary for network growth and maintenance. It proposes to define a ‘network planning description language’ that can be used for this purpose.

Monitoring, Control, Analysis, and Display: This concentration seeks to provide next-generation analysis tools for viewing network traffic in real time at very high data rates. The ultimate goal is to provide real-time summaries of traffic and communication patterns that can be used to configure network topologies better suited to a particular network’s behavior. These tools will also be invaluable in the ‘debugging’ and testing of the new technologies.

Integration: This concentration is concerned with the seamless integration of these new technologies. It will compile requirements from users and be responsible for cleanly incorporating them into the NGI testbed.

Data Delivery: This concentration is concerned with the methods and protocols by which information is delivered. It plans to provide tools that will allow network engineers to choose the ‘strategy tradeoffs’ that best meet their needs. These tradeoffs range from routing and switching methods to priority traffic support, virtual circuit support, and flat-rate versus variable costing options.

Managing Lead User Infrastructure: This concentration seeks to incorporate into the infrastructure the ability to support certain ‘lead users’ of a given communications medium. In this way, big industries such as telecommunications companies could use a large portion of the bandwidth of a given line while seamlessly allowing other traffic to use the remainder of the available bandwidth.
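The lead-user idea can be illustrated with a simple allocation rule. The sketch below is our own illustration under stated assumptions, not a mechanism taken from the NGI plans: a single link of fixed capacity, a hypothetical lead user holding a bandwidth reservation, and whatever capacity the lead user does not consume divided among the other traffic by max-min fairness (each flow gets an equal share, and any share a small flow cannot use is redistributed to the rest). All function and flow names are hypothetical.

```python
from typing import Dict, Tuple


def max_min_share(capacity: float, demands: Dict[str, float]) -> Dict[str, float]:
    """Divide `capacity` among flows using max-min fairness."""
    alloc = {name: 0.0 for name in demands}
    unsatisfied = {name for name, d in demands.items() if d > 0}
    remaining = capacity
    while unsatisfied and remaining > 1e-9:
        fair = remaining / len(unsatisfied)  # equal share of what is still free
        for name in sorted(unsatisfied):  # iterate over a copy so we can discard
            give = min(fair, demands[name] - alloc[name])
            alloc[name] += give
            remaining -= give
            if demands[name] - alloc[name] <= 1e-9:
                unsatisfied.discard(name)  # fully served; leftover goes to others
    return alloc


def share_link(capacity: float, lead_demand: float, lead_reservation: float,
               other_demands: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Serve the lead user up to its reservation; other traffic shares the rest."""
    lead = min(lead_demand, lead_reservation, capacity)
    return lead, max_min_share(capacity - lead, other_demands)


# Hypothetical example: a 622 Mbps line with a 400 Mbps lead-user reservation.
lead, others = share_link(622.0, lead_demand=300.0, lead_reservation=400.0,
                          other_demands={"a": 50.0, "b": 200.0, "c": 200.0})
print(lead, {name: round(rate, 2) for name, rate in others.items()})
```

Because the lead user here needs only 300 of its 400 reserved Mbps, the idle reservation is not wasted: flow a receives its full 50 Mbps and flows b and c split the remaining 272 Mbps evenly, 136 Mbps each.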

3.1.2 End-to-end Quality of Service

This sub-goal seeks to provide effective end-to-end QoS support to the new networking technologies. It plans to provide the framework of models, languages and protocols necessary to permit the delivery of QoS throughout all layers of the network. Built into these protocols will be the necessary ability to negotiate for different confidence levels and bandwidth/latency tradeoffs.

At a higher level, APIs to this framework will be defined so that applications can make immediate use of the developed architecture.
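To make the idea of negotiating bandwidth and latency concrete, here is a minimal sketch of end-to-end admission control. It is an assumption-laden illustration, not an NGI-defined API: the `Link`, `QoSRequest`, and `negotiate` names are hypothetical, path latency is taken as the sum of fixed per-link delays, and a request is admitted only if every link on the path can reserve the requested bandwidth. When bandwidth is short, the sketch returns the bottleneck rate as a counter-offer, one simple form of the bandwidth tradeoff described above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Link:
    capacity_mbps: float
    delay_ms: float
    reserved_mbps: float = 0.0

    @property
    def available_mbps(self) -> float:
        return self.capacity_mbps - self.reserved_mbps


@dataclass
class QoSRequest:
    bandwidth_mbps: float
    max_latency_ms: float


def negotiate(path: List[Link], req: QoSRequest) -> Tuple[bool, float]:
    """Return (admitted, rate). On a bandwidth shortfall, rate is a counter-offer."""
    latency = sum(link.delay_ms for link in path)
    if latency > req.max_latency_ms:
        return False, 0.0  # more bandwidth cannot fix a delay bound the path misses
    bottleneck = min(link.available_mbps for link in path)
    if bottleneck < req.bandwidth_mbps:
        return False, bottleneck  # offer what the narrowest link can still carry
    for link in path:
        link.reserved_mbps += req.bandwidth_mbps  # reserve on every hop
    return True, req.bandwidth_mbps


# Hypothetical two-hop path: an OC-12 link followed by an OC-3 link.
path = [Link(622.08, 5.0), Link(155.52, 12.0)]
print(negotiate(path, QoSRequest(100.0, 30.0)))  # admitted
print(negotiate(path, QoSRequest(100.0, 30.0)))  # counter-offer: leftover OC-3 capacity
print(negotiate(path, QoSRequest(10.0, 10.0)))   # rejected: 17 ms path exceeds 10 ms bound
```

Reserving on every hop reflects the end-to-end emphasis of this sub-goal: a guarantee is only as good as the most constrained link along the path.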

3.1.3 Security

The last of the sub-goals of Goal 1 is to provide comprehensive security in the new technologies.

A major part of this goal centers on cryptography. The plan is to incorporate an extensive Public Key Infrastructure (PKI) that will interface with industry-wide infrastructure to provide the much-needed services of authentication, data integrity, data confidentiality, and nonrepudiation. Along with the PKI, there are plans to develop new protocols that are much more secure than those used today.

It has been recognized that for NGI to truly succeed, there must be a high level of confidence in the services listed above. To this end, the third focus of the security sub-goal is security criteria and thorough testing. Going beyond simple functionality tests, these tests will ensure a one-to-one mapping between what a given function was defined to provide and what it actually does. In this way, unintentional or intentional ‘backdoors’ in a given function can be discovered and removed.
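A full PKI relies on public-key signatures and certificates, which Python's standard library does not provide. As a deliberately simpler stand-in, the sketch below uses a shared-key HMAC (the standard `hmac` and `hashlib` modules) to show the data-integrity and authentication checks described above: the receiver recomputes the tag and rejects any message that was altered in transit. This is not the NGI's PKI design. Because both parties hold the same key, HMAC cannot provide nonrepudiation, which is one reason a public-key infrastructure is called for. The key and message are hypothetical.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


# Hypothetical shared key; a PKI would instead distribute public keys via certificates.
key = secrets.token_bytes(32)
message = b"reserve 100 Mbps from site A to site B"
tag = make_tag(key, message)

print(verify(key, message, tag))                   # message intact
print(verify(key, message + b" and site C", tag))  # message altered in transit
```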

3.2 Goal II: NGI Testbed

The proposed outcome of Goal 2 is twofold. The first and major component is High Performance Connectivity, which plans to connect at least 100 sites at speeds 100 times faster (end-to-end) than today’s Internet. The goal of this component is to provide a “full system, proof-of-concept testbed for hardware, software, protocols, security, and network management that will be required in the future commercial Internet.” (LSN98, 3) The second component, Ultra High Performance Connectivity, is more concerned with advanced R&D. It plans to develop ultrahigh-speed switching and transmission technologies capable of providing end-to-end connectivity at 1+ Gbps. This component hopes to connect approximately 10 sites at these speeds and will lay the groundwork for future terabit-per-second communications.

The NGI will essentially be a large, distributed laboratory where the technologies of Goal 1 and the application requirements of Goal 3 are put to the test. It is expected to be an extremely transient environment that will be constantly changing as the technologies and applications evolve. Goal 2 is concerned with the integration of the different technologies and making sure that everything is able to work together. The goal has been broken down into seven sub-goals, which are detailed below.

Infrastructure: This sub-goal seeks to deal with the transient environment mentioned above. In order to have an effective working environment, this sub-goal seeks to create a “leading edge but stable” environment. This type of environment is difficult to achieve and will require things such as basic backup services that can be used if a higher level service goes down. While occasional inconsistencies (and possible failures) in the network will be inevitable and expected, the infrastructure sub-goal will do its best to provide an environment where these ‘outages’ are limited.

Common Bearer Services: Like the above, this sub-goal is concerned with the stable migration from current technologies to new ones. Initially, for example, IPv4 will be the underpinning of NGI; once IPv6 demonstrates “stable” performance, it will be integrated. Similar actions will be taken with all new technologies coming out of Goal 1.

Interconnection: This sub-goal is concerned with providing the seamless ‘nationwide fabric’ necessary to provide consistent QoS over all NGI sites.


Site Selection: This sub-goal deals primarily with NGI administrative issues. It requires that NGI sites adhere to NGI plans and goals and that the sites have plans to eventually move to commercial funding. The sites must also ensure that a connection to a GigaPOP is available and that all associated costs and the like are in ‘concert’ with the overall NGI plan.

Network Management: This sub-goal takes on the daunting task of managing the network. Key features of this effort include a Distributed Help Desk, Security/Authentication Methods, a Distributed GigaPOP Network Operation Center (NOC), and Network Monitoring and Management Tools.

Information Distribution and Training: The final sub-goal is concerned with keeping interested parties up to date on the current state of affairs in the NGI world, through everything from web sites to training classes and conferences.

3.3 Goal III: Revolutionary Applications

As with many types of technologies, the success of the NGI initiative will be measured not by the maximum bps rate that can be guaranteed end-to-end, but by the ‘neat’ and ‘eye-popping’ applications that can be run on top of it. The NGI community realizes this and is therefore spending considerable time and effort selecting the test applications that will show the world ‘what this thing can do’. In an extensive selection process, an application proposal must prove that it ‘requires’ NGI technologies and that it is of general use to the U.S. as a whole. Applications will be selected through the collaboration of four groups: the NGI Funded Agency Missions, the NGI Affinity Groups, the Federal Information Services Applications Council, and Broader Communities.

NGI Funded Agency Missions will solicit mission-specific application proposals that would directly benefit each of the involved agencies. Since the NGI project spans so many agencies, each would like to see applications relevant to its own needs chosen. To help deal with this and to identify projects that span multiple fields, the NGI has created the NGI Affinity Groups. There are Disciplinary Affinity Groups (Health care, Environment, Education, Manufacturing, Crisis management, Basic science, Federal information services) and Application Technology Affinity Groups (Collaborative technologies, Distributed computing, Digital libraries, Remote operations, Security and Privacy). The Federal Information Services Applications Council will seek to include application proposals from government groups outside of the NGI initiative. Finally, the Broader Communities group will look outside of the government altogether. These groups will work together to select the applications they judge best.

Some examples of current application proposals include:

(From the ngi.gov web site as of 04.19.99)

Distributed Positron Emission Tomography (PET) Imaging: Enhance the ability of biomedical scientists to conduct animal research through the development of high resolution PET scans and ATM transmission of the resulting reconstructed 3-D images.

Real-time Telemedicine: Provide a means of remote medical consultations through the use of real-time analysis of medical diagnostic procedures involving motion.

Medical Image Reference Libraries: Create medical reference libraries where images, movies and sounds are digitized and accessible remotely.

Telerobotic Operation of Scanned Probe Microscopes (SPM): This project, among its technical goals, aims to demonstrate and implement capabilities for the remote operation of scanned probe microscopy (SPM) systems at various levels of control using standard data representations and controller interfaces for collaborative measurement, research, and diagnostics purposes associated with nanometer-scale dimensional artifacts.

Advanced Weather Forecasting: To add the new advanced Doppler weather radars to the suite of observing systems used to initialize and update numerical weather models. This will provide key additional data that is expected to make rapid storm-scale modeling possible, thereby providing additional warning of weather-related hazards and supporting crisis management related to these events.

Chesapeake Bay Virtual Environment: To enable scientists at dispersed sites to study the Chesapeake Bay and other marine environments using real-time control of the simulation and multimodal presentation.

Clearly, the NGI project will push networking technologies to the nth degree and will be a major player in the future of communications as a whole.


4. I2 and NGI Synergy

Although I2 and NGI are distinctly different programs that happen to be geared toward a common goal, they share certain technologies that will be looked at more closely in this section.

First, as was described above, the vBNS is important to the success of both programs, so it will be described in some detail. Second, as the concept of a GigaPOP is imperative to the interconnecting of future high capacity backbones, we will explore the specifics of one such GigaPOP currently in use in North Carolina. Lastly, this section will give an overview of IPv6, as it will be the Internet Protocol version of choice in the future.

4.1 The vBNS

With the growing popularity of the Internet, the NSF wanted to ensure that the country’s research communities could access a private, high-speed network to further develop internetworking technologies that would eventually benefit not only academia but commercial interests as well. The very-high-performance Backbone Network Service (vBNS) was launched in April 1995 as the result of a 5-year cooperative agreement between MCI and the NSF. The vBNS provides the following core services: “a high-speed best-effort IPv4 datagram delivery service[,]… an IPv4 multicast service, an ATM switched virtual circuit logical IP subnet service, and ATM permanent virtual circuits across the vBNS backbone as needed. Among services under development, are a reserved-bandwidth service and a high-speed IPv6 datagram delivery service” (Jam98, 6).

Like the NSFnet that preceded it, the vBNS is a closed network that connects NSF-sponsored supercomputer centers (SCCs) and NSF-specified network access points (Fig. 1).

Originally, only 5 SCCs and 4 network access points were available. This high-speed interconnectivity enabled researchers to link two or more SCCs, forming supercomputing metacenters. In fact, as pointed out in (Jam98), the NSF created the High Performance Connections (HPC) program to sponsor access to the vBNS for R&E institutions in order to reach the “critical mass” of the vBNS. Only then would the number of applications and experiments running on the vBNS be able to exploit its potential and bring more advanced services to the commercial Internet. The vBNS will ultimately host over 100 institutions, including links to other research networks in the U.S. and abroad.


Architecturally, the vBNS is implemented as IP-over-ATM running over more than 25,000 km of an OC-12 (622.08 Mbps) Synchronous Optical Network (Sonet) backbone. A collection of ATM switches and IP routers interconnects 12 vBNS POPs, located at MCI terminal facilities, and four POPs located at the following SCCs: the National Center for Atmospheric Research (NCAR), the National Center for Supercomputing Applications (NCSA), the Pittsburgh Supercomputing Center (PSC), and the San Diego Supercomputer Center (SDSC).

Each of these POPs typically provides access via User-Network Interface (UNI) ports on a Fore ASX-1000 ATM switch. In addition to this ATM connectivity, “[f]rame-based connections to the Cisco 7507 router are also available[,] as are ports which support Packet-over-Sonet” (Jam98, 2). To give supercomputers access via the High-Performance Parallel Interface (HIPPI), the SCC POPs also have Ascend GRF 400 routers. To measure the network’s performance, each POP has a Sun Microsystems Ultra-2 workstation with an OC-12 ATM NIC that runs nightly tests on each backbone link of the vBNS. Interested readers can find plots of these test results on the vBNS web site at http://www.vbns.net/stats.
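The line rates quoted throughout this section follow from the Sonet hierarchy, in which an OC-n circuit runs at n times the 51.84 Mbps OC-1 base rate. The short sketch below (the function name is ours) reproduces the figures used in this paper: OC-3 at 155.52 Mbps, OC-12 at 622.08 Mbps, and OC-48 at 2,488.32 Mbps. These are line rates; the payload available to ATM cells or IP packets is somewhat lower because of Sonet framing overhead.

```python
OC1_MBPS = 51.84  # Sonet OC-1 (STS-1) line rate in Mbps


def oc_rate_mbps(n: int) -> float:
    """Line rate of an OC-n Sonet circuit, rounded to 0.01 Mbps."""
    if n < 1:
        raise ValueError("OC level must be a positive integer")
    return round(n * OC1_MBPS, 2)


for level in (3, 12, 48):
    print(f"OC-{level}: {oc_rate_mbps(level)} Mbps")
```

By this arithmetic, an OC-12 link just meets the 622 Mbps minimum capacity that a GigaPOP is required to have.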

The ATM layer actually rides on top of MCI’s Hyperstream network, which is also used for commercial applications. “The vBNS was the first ‘customer’ on MCI’s Hyperstream network, is the only customer with an OC-12 access rate, and will continue to be the first recipient of advanced capabilities” (Jam98, 3). A set of Permanent Virtual Paths (PVPs) forms a full-mesh topology over the vBNS, connecting every node to every other node. Each PVP carries a number of Permanent Virtual Circuits (PVCs), which connect some 23 IP routers running the Border Gateway Protocol (BGP), internal BGP (iBGP), and the Open Shortest Path First (OSPF) protocol.

In addition to the directly attached institutions, the vBNS supplies high-speed connections to many other federally funded research networks and to R&E networks in Canada, Germany, and Singapore. “This high-speed (mostly 155.52Mb/s) IPv4 connectivity between the vBNS and other large Federal Networks provides a valuable broadening of the vBNS community, and is an integral part of the vBNS’ participation in Internet2 and the Next Generation Internet” (Jam98, 5).

Because their goals overlap, the vBNS, NGI, and I2 are commonly confused; all three aim to provide the R&E community with a top-of-the-line network so that it may further networking technologies. Not only do the goals of these projects overlap, but so do the participants, adding to the confusion. “The NSF is part of the multi-agency Next Generation Internet initiative as well as the sponsor of the vBNS and the High Performance Connections program. MCI is party to the vBNS cooperative agreement as well as an Internet2 corporate partner. Most universities with NSF grants to connect to the vBNS are also members of Internet2. Some of these universities are also involved in NGI research” (Jam98, 2). Goals and partnerships aside, because the physical vBNS is a year and a half older than either I2 or NGI, it will in many ways serve as a prototype for both of them. The vBNS is already running some of the types of applications that NGI and I2 aspire to support. As put in (Jam98):

One of the goals of the vBNS project is to accelerate the pace of the deployment of advanced services into the commercial Internet in order to advance the capabilities of all Internet users. The vBNS is an environment in which new Internet technologies and services can be introduced and evaluated prior to deployment on the large-scale, heavily-loaded commercial backbones. Examples…include native IP multicasting, a reserved bandwidth service, and the latest version of IP, IPv6.

With respect to NGI’s goal of delivering 100 times the performance of the current Internet to over 100 participating entities, the “vBNS represents the NSF’s efforts to meet that goal” (Jam98, 2). And with respect to I2’s goal of giving academic institutions the ability to develop advanced network technologies, I2 will utilize “existing networks, such as vBNS, to connect members to each other and to other research institutions” (Jam98, 2).

4.2 The GigaPOP

Another collaborative effort between I2 and NGI is the GigaPOP. Just as “a chain is only as strong as its weakest link,” a network path can only be as fast as the narrowest bottleneck its traffic must pass through. According to (Col99) and Internet2, in order for a GigaPOP to provide the desired interconnectivity, it must:

have at least 622 Mbps capacity;

provide high reliability and availability;

use the Internet Protocol (IP) as a bearer service;

also be able to support emerging protocols and applications;


be capable of serving simultaneously as a workaday environment and as a test bed;

allow for traffic measurement and data gathering;

permit migration to differentiated services and application-aware networking.

Having outlined some general features of GigaPOPs and their requirements with respect to I2 (and NGI), we now expand on them with a specific implementation of a GigaPOP: the NC GigaPOP.

Four institutions (Duke University, North Carolina (NC) State, the University of North Carolina (UNC) at Chapel Hill, and MCNC) teamed up with Cisco Systems, IBM, Nortel (later Nortel Networks), and Time Warner Communications to form the North Carolina Networking Initiative (NCNI). Their goal was to create a new regional “independent network in the Research Triangle, because such a network would let the universities and MCNC conduct computing and networking research in an environment unconstrained by congestion, undifferentiated services, and other limitations of the commercial Internet” (Col99, 2). The NCNI was formed in May 1996 and forwarded its first packets on the NC GigaPOP in February 1997, becoming one of the first implementations of a GigaPOP.

The NC GigaPOP has four primary nodes, at NC State, Duke, UNC Chapel Hill, and MCNC (Fig. 2). These primary nodes will serve as connection points to the vBNS and the upcoming Abilene network, while secondary nodes connect other NCNI partners. In deciding on the exact architecture of the GigaPOP, the NCNI had to consider two issues carefully: topology and fiber-optic infrastructure.

With only four primary nodes, the GigaPOP could easily have been configured in a full mesh topology, which would have provided robustness in the event of a link failure. The problem with mesh topologies is that they do not scale well, since every new node needs a link to every existing node. A ring topology was implemented instead because of its scalability and its resemblance to what phone and cable companies call a metropolitan area network (MAN), which allows the use of hardware and software that has been optimized for this configuration.
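The scalability argument can be quantified by counting links. A full mesh of n nodes needs n(n-1)/2 links, so adding one node means adding n new links; a ring needs only n links, so each new node adds one net link (two new spans replace one old span). The sketch below, whose function names are our own, compares the two. For the four NC GigaPOP primary nodes the difference is small (6 links versus 4), but it grows quickly.

```python
def full_mesh_links(n: int) -> int:
    """Number of point-to-point links needed to connect n nodes pairwise."""
    return n * (n - 1) // 2


def ring_links(n: int) -> int:
    """Number of links in a ring of n nodes (a ring needs at least 3 nodes)."""
    if n < 3:
        raise ValueError("a ring needs at least 3 nodes")
    return n


for n in (4, 8, 16, 32):
    print(f"{n:>2} nodes: mesh {full_mesh_links(n):>3} links, ring {ring_links(n):>2} links")
```

A ring still tolerates any single link failure, because traffic can travel the other way around it, so it gives up relatively little of the mesh's robustness.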

The issue of fiber-optic infrastructure came down to cost. Leasing the four OC-12 links required to form the ring would have cost $276,000 a month ($3.3 million per year). Moreover, the local exchange carriers did not at the time have either the fiber or the switching capabilities required for the GigaPOP. NCNI therefore made a deal with Time Warner Communications to provide a private four-fiber ring infrastructure (two in, two out).


Having settled on a topology and cabling infrastructure, the NCNI next had to choose networking technologies. It decided on the same setup as the vBNS: IP atop ATM over Sonet. Nortel provided Sonet add/drop multiplexers (ADMs) for each of the nodes, providing a total of 2.488 Gbps (OC-48) in both directions around the ring. To connect IP routers to the Sonet ADMs, there were two possibilities: a 155 Mbps Cisco router that mapped IP packets directly into Sonet (Packet-over-Sonet, or POS), or a 155 Mbps Cisco router that mapped IP packets into ATM before mapping them to Sonet. As alluded to earlier, IP over ATM over Sonet was selected; POS would have required a second router “at each node to logically connect each of the four routers and take advantage of the distributed architecture. Since ATM is virtual-circuit technology, however, three virtual circuits could be spread across two 155-Mb/s adapters to create a fully connected IP network, and only a single router would be needed at each node on the ring. The virtual-circuit nature of ATM also mapped well to the circuit orientation of the Sonet multiplexers” (Col99, 4). Additional details of the connections can be found in (Col99) and at http://www.internet2.edu/.

The NCNI submitted a proposal to the NSF to have the NC GigaPOP connected to the vBNS and was awarded $1.4 million to do so. To provide this connectivity, the first link in the Southern Crossroads (SoX) GigaPOP-to-GigaPOP network was built, connecting the NC GigaPOP to the developing GigaPOP at the Georgia Institute of Technology. This link, a 45 Mbps (DS-3) dedicated line, enabled the NCNI to share an OC-3 ATM connection to the vBNS. The remaining problem with the GigaPOP was how to restrict commercial network traffic, since the NCNI, Abilene, and vBNS networks are designed as research networks that avoid commercial Internet congestion.

Since current IP routers can implement only one routing policy, NCNI provided a separate router for each policy. Although not a scalable solution, this will have to do until explicit routing technology based on source-destination pairs becomes available. For now, all network traffic originating on the NCNI systems is directed to a single router, which contains a list of routes to vBNS destinations. If a destination has no route by way of the NCNI and vBNS, the traffic is forwarded to the commodity Internet. This problem of policy enforcement has led I2 (In297, 2) to expect two general types of GigaPOPs:


Type I gigapops, which are relatively simple, serve only I2 members, route their traffic through one or two connections to other gigapops, and therefore have little need for complex internal routing and firewalling; and

Type II gigapops, which are relatively complex, serve both I2 members and other networks to which I2 members need access, have a rich set of connections to other gigapops, and therefore must provide mechanisms to route traffic correctly and prevent unauthorized or improper use of I2 connectivity.
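The single-router workaround NCNI adopted amounts to a route lookup with a default: traffic to a destination with a known research route goes to the research network, and everything else falls through to the commodity Internet. The sketch below shows that rule using Python's standard `ipaddress` module and longest-prefix matching. It is a hypothetical illustration, not NCNI's configuration: the prefixes are private-use examples, the next-hop labels are made up, and real routers learn such routes through protocols such as BGP rather than from a hand-written list.

```python
import ipaddress
from typing import List, Tuple

COMMODITY = "commodity-internet"


class ResearchPolicyRouter:
    """Forward via a research network if a route exists; otherwise use the commodity Internet."""

    def __init__(self, research_routes: List[Tuple[str, str]]) -> None:
        self.routes = [(ipaddress.ip_network(prefix), next_hop)
                       for prefix, next_hop in research_routes]

    def next_hop(self, destination: str) -> str:
        addr = ipaddress.ip_address(destination)
        matches = [(net, hop) for net, hop in self.routes if addr in net]
        if not matches:
            return COMMODITY  # no research route: default to the commodity Internet
        # Longest-prefix match: the most specific route wins.
        _, hop = max(matches, key=lambda route: route[0].prefixlen)
        return hop


# Hypothetical route list; prefixes and labels are illustrative only.
router = ResearchPolicyRouter([("10.0.0.0/8", "vbns"), ("10.1.0.0/16", "abilene")])
for dst in ("10.1.2.3", "10.9.9.9", "203.0.113.5"):
    print(dst, "->", router.next_hop(dst))
```

Because the lookup is keyed only on the destination, it cannot express "research traffic from this source, commodity traffic from that one", which is exactly the limitation that forced NCNI to dedicate a router to each policy.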

Although there is no exact blueprint for the construction of a GigaPOP, the NCNI implementation appears to fulfill the requirements of I2. Each GigaPOP needed to construct the new Internet will probably require its own solution. The key lies in careful planning and in using the highest-speed equipment available, so as to prevent the congestion problems that plague the commercial Internet. The collaboration on GigaPOPs shows clearly how the I2 and NGI initiatives complement each other.

4.3 IPv6 Overview

Although subnetting and Classless Interdomain Routing (CIDR) have helped limit Internet address space depletion and routing table size, it is clear that an improvement to IPv4 is needed to overcome the scaling problems associated with the Internet’s rapid growth. IPv6 hopes to provide a solution to the current problems while anticipating the need for future adaptability.

IPv6 provides a 128-bit address space, which will allow it to address $2^{128} \approx 3.4 \times 10^{38}$ distinct nodes. “Based on the most pessimistic estimates of efficiency…, the IPv6 address space is predicted to provide over 1500 addresses per square foot of the earth’s surface, which certainly seems like it should serve us well even when toasters on Venus have IP addresses” (Pet96, 254).
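The size of the address space is easy to check with exact integer arithmetic. A quick sketch comparing the 128-bit IPv6 space with the 32-bit IPv4 space:

```python
ipv4_space = 2 ** 32
ipv6_space = 2 ** 128

print(f"IPv4: {ipv4_space:,} addresses")
print(f"IPv6: {ipv6_space:,} addresses (about {ipv6_space:.2e})")
# IPv6 has 2**96 times as many addresses as IPv4.
print(ipv6_space // ipv4_space == 2 ** 96)
```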

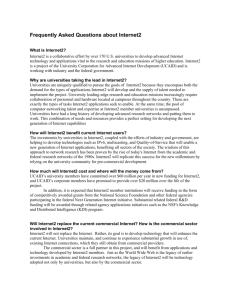

Figure 3 shows the IPv6 header. In addition to the expanded address space, other features planned for IPv6 as outlined in (Hin95) are:

Expanded Routing and Addressing Capabilities: along with the increase from 32-bit to 128-bit addresses, IPv6 will provide more levels of addressing hierarchy and simpler auto-configuration of addresses. An additional “scope” field will improve the scalability of multicast routing.


Anycast Addresses: this new type of address identifies a set of nodes; a packet sent to an anycast address is delivered to one of those nodes. Used in IPv6 source routes, anycast addresses allow nodes to control the path along which their traffic flows.

Header Format Simplification: some IPv4 header fields have been dropped or made optional. As a result, even though an IPv6 address is four times as long as an IPv4 address, the IPv6 header (40 bytes) is only twice as long as the minimal IPv4 header (20 bytes).

Improved Option Support: IPv6 header options are encoded in a way that allows more efficient forwarding, less stringent limits on the length of options, and greater flexibility for adding new options in the future.

QoS Capabilities: packets can be labeled as belonging to a particular traffic “flow” for which the sender requests special handling, such as non-default QoS or “real-time” service.

Authentication and Privacy Capabilities: IPv6 includes the definition of extensions which provide for authentication, data integrity, and confidentiality.
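The anycast behavior described above (one address, a set of nodes, delivery to just one of them) can be illustrated with a toy model. This is a sketch under stated assumptions, not a routing implementation: the node names and metrics are hypothetical, and it follows the rule in later IPv6 addressing specifications that an anycast packet goes to the "nearest" member as measured by the routing protocol's distance metric, here assumed to be a simple hop count.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    node: str    # hypothetical member of the anycast set
    metric: int  # assumed routing distance, e.g. hop count

def deliver_anycast(routes):
    """Pick the single anycast member a packet is delivered to.

    Chooses the member with the lowest routing metric; ties are broken
    by node name so the choice is deterministic.
    """
    if not routes:
        raise ValueError("anycast set has no reachable members")
    return min(routes, key=lambda r: (r.metric, r.node)).node

# Hypothetical anycast set: three servers sharing one anycast address.
servers = [Route("server-east", 5), Route("server-central", 3), Route("server-west", 7)]
print(deliver_anycast(servers))  # server-central
```

If the nearest member fails and its route is withdrawn, the same address transparently reaches the next-closest member, which is what makes anycast attractive for replicated services.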

Figure 3 (IPv6 Header): a 32-bit-wide diagram of the Version, Priority, FlowLabel, PayloadLen, NextHeader and HopLimit fields, followed by the SourceAddress and Destination Address and then the next header or data.
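The layout in Figure 3 can be made concrete by packing a fixed header byte by byte. This sketch assumes the field widths of the original IPv6 specification (RFC 1883), which Figure 3 appears to follow: 4-bit Version, 4-bit Priority, 24-bit FlowLabel, 16-bit PayloadLen, 8-bit NextHeader, 8-bit HopLimit and two 128-bit addresses. Later revisions (RFC 2460, RFC 8200) replaced Priority with an 8-bit Traffic Class and shortened the Flow Label to 20 bits, so this is not a modern header encoder. The function name and example addresses (from the 2001:db8::/32 documentation range) are our own.

```python
import ipaddress
import struct

def pack_ipv6_header(priority, flow_label, payload_len, next_header,
                     hop_limit, src, dst):
    """Pack the fixed IPv6 header using the RFC 1883-era widths of Figure 3."""
    if not 0 <= priority < 2 ** 4:
        raise ValueError("Priority is a 4-bit field")
    if not 0 <= flow_label < 2 ** 24:
        raise ValueError("FlowLabel is a 24-bit field")
    # First 32-bit word: Version (always 6) | Priority | FlowLabel.
    first_word = (6 << 28) | (priority << 24) | flow_label
    # Network byte order: 32-bit word, 16-bit PayloadLen, 8-bit NextHeader, 8-bit HopLimit.
    header = struct.pack("!IHBB", first_word, payload_len, next_header, hop_limit)
    # Two 128-bit addresses follow the first 8 bytes.
    header += ipaddress.IPv6Address(src).packed
    header += ipaddress.IPv6Address(dst).packed
    return header

hdr = pack_ipv6_header(priority=0, flow_label=0x00ABCD, payload_len=20,
                       next_header=6,  # 6 = TCP follows
                       hop_limit=64, src="2001:db8::1", dst="2001:db8::2")
print(len(hdr))  # 40
```

The result is always 40 bytes with no variable-length options in the fixed header, which is the "Header Format Simplification" described above: options move into extension headers chained through NextHeader.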

With all of its improved capabilities, some of which challenge the features that make ATM an attractive alternative to IP, IPv6 is clearly needed in any future Internet endeavor. For this reason, both I2 and NGI have embraced IPv6. The principal challenge facing IPv6 is a smooth transition from IPv4. Although several transition plans have been proposed, with tunneling as a popular technique, the problem is of a scale and nature that many have likened to an Internet version of Y2K.
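Tunneling, the popular transition technique mentioned above, carries an IPv6 packet across IPv4-only routers by wrapping it in an IPv4 header whose protocol field is 41, the value assigned to encapsulated IPv6. The following is a minimal sketch of the encapsulation step only, assuming a configured point-to-point tunnel: the endpoint addresses are hypothetical documentation addresses, the IPv6 packet is a placeholder, and real tunnels must also handle MTU, fragmentation and decapsulation, all omitted here.

```python
import ipaddress
import struct

IPPROTO_IPV6 = 41  # IPv4 protocol number for an encapsulated IPv6 packet

def ipv4_checksum(header: bytes) -> int:
    """Internet checksum: one's-complement sum of 16-bit words, complemented."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def encapsulate_6in4(ipv6_packet: bytes, tunnel_src: str, tunnel_dst: str,
                     ttl: int = 64) -> bytes:
    """Prepend a minimal 20-byte IPv4 header addressed to the tunnel endpoint."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,             # Version 4, IHL = 5 words (no options)
        0,                        # type of service
        20 + len(ipv6_packet),    # total length
        0, 0,                     # identification, flags/fragment offset
        ttl,
        IPPROTO_IPV6,
        0,                        # checksum placeholder
        ipaddress.IPv4Address(tunnel_src).packed,
        ipaddress.IPv4Address(tunnel_dst).packed,
    )
    checksum = ipv4_checksum(header)
    header = header[:10] + struct.pack("!H", checksum) + header[12:]
    return header + ipv6_packet

# Placeholder 40-byte IPv6 packet (Version nibble = 6, rest zeroed).
ipv6_packet = b"\x60" + bytes(39)
frame = encapsulate_6in4(ipv6_packet, "192.0.2.1", "198.51.100.1")
print(len(frame))  # 60
```

The IPv4 routers along the path see only an ordinary IPv4 packet; only the tunnel endpoints need to understand IPv6, which is why tunneling lets islands of IPv6 grow before the whole Internet is converted.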

5. Conclusion

Although the two main initiatives, I2 and NGI, are distinct, they both aim to increase networking bandwidth for educational, research and government interests. It will be interesting to see how the future of the Internet unfolds as these “new” networks are constructed and later made available to an increasingly computer-centric society. If the success of the last unveiling, NSFnet’s opening to the public in 1995, is any indication, the Gartner Group may be right in predicting the decline of many Internet service providers (although we’re not sure the shift from our ISPs to our local cable company for service is necessarily a good thing). As newer networking technologies are proven and introduced into the commercial market, the promised “information superhighway” may very well resemble a highway, and not San Francisco’s winding Lombard Street.

This should make many of us happy, as we are ultimately financing their creation.

Works Cited

(Col99) Collins, John C., et al., “Data Express: Gigabit Junction with the Next-Generation Internet,” IEEE Spectrum, February 1999: http://www.spectrum.ieee.org/spectrum/feb99/ngi.html

(Fin98) Finley, Amy, "Untangling the Next Internet," SunWorld, April 1998: http://www.sunworld.com/sunworldonline/swol-04-1998/swol-04-internet2.html

(Gar98) "GigaPOP - Lynchpin of Future Networks - Will Add Scalability; Wide Range of Price/Performance Choices," Gartner Group, 19 Aug. 1998: http://www.techmall.com/techdocs/TS970819-8.html

(Hin95) Hinden, Robert M., “IP Next Generation Overview,” IETF, 14 May 1995: http://playground.sun.com/pub/ipng/html/INET-IPng-Paper.html

(In297) "Preliminary Engineering Report," Internet2, 22 Jan. 1997: http://www.internet2.edu/html/engineering.html

(In2M98) “Internet2 Mission,” Internet2, 1998: http://www.internet2.edu/html/mission.html

(In2W99) “Internet2 Working Group Reports: February 1999,” February 1999: http://www.internet2.edu/html/wgreport-9902.html

(Jam98) Jamison, John, et al., "vBNS: Not Your Father's Internet," IEEE Spectrum, July 1998: http://www.vbns.net/presentations/papers/NotYourFathers/notyourf.htm

(LSN98) Large Scale Networking, Next Generation Internet Implementation Team, NGI Implementation Plan, February 1998: http://www.ngi.gov/implementation

(Luh98) Luh, Peter B. and Vietzke, Robert, “UConn & Internet2 – Project Summary,” University of Connecticut, 1998: http://abraham.ucc.uconn.edu/internet2

(NGI99) The Official NGI Web Site: http://www.ngi.gov

(Pet96) Peterson, Larry L., Davie, Bruce S., Computer Networks: A Systems Approach. San Francisco: Morgan Kaufmann, 1996: http://www.mkp.com/books_catalog/1-55860-368-9.asp

(Sch98) Von Schweber, Erick, "Projects Promise IS Plenty," PC WEEK, 09 Feb. 1998: http://www.zdnet.com/pcweek/reviews/0209/09ngi.html
