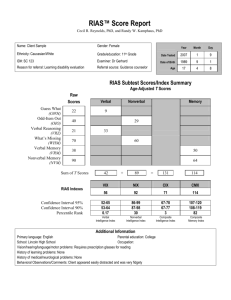

Development and Application of the Reynolds Intellectual
