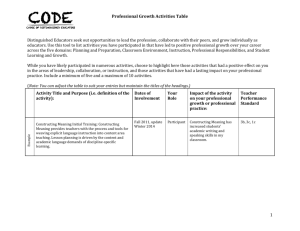

Constructing Measures

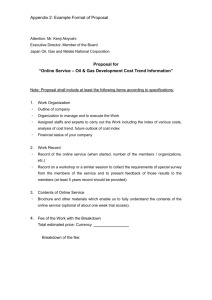
