
1. Introduction

Operating

Systems

J. Stockton Gaines

Editor

On the External Storage Fragmentation Produced by First-Fit and Best-Fit Allocation Strategies

John E. Shore

Naval Research Laboratory

Published comparisons of the external fragmentation

produced by first-fit and best-fit memory allocation have

not been consistent. Through simulation, a series of experiments was performed in order to obtain better data

on the relative performance of first-fit and best-fit and a

better understanding of the reasons underlying observed

differences. The time-memory-product efficiencies of

first-fit and best-fit were generally within 1 to 3 percent

of each other. Except for small populations, the size of

the request population had little effect on allocation

efficiency. For exponential and hyperexponential distributions of requests, first-fit outperformed best-fit; but

for normal and uniform distributions, and for exponential distributions distorted in various ways, best-fit outperformed first-fit. It is hypothesized that when first-fit

outperforms best-fit, it does so because first-fit, by preferentially allocating toward one end of memory, encourages large blocks to grow at the other end. Sufficient contiguous space is thereby more likely to be

available for relatively large requests. Results of simulation experiments supported this hypothesis and showed

that the relative performance of first-fit and best-fit

depends on the frequency of requests that are large compared to the average request. When the coefficient of

variation of the request distribution is greater than or

approximately equal to unity, first-fit outperformed

best-fit.

Key Words and Phrases: storage fragmentation,

dynamic memory allocation, first-fit, best-fit

CR Categories: 3.73, 4.32, 4.35

Copyright © 1975, Association for Computing Machinery, Inc.

General permission to republish, but not for profit, all or part

of this material is granted provided that ACM's copyright notice

is given and that reference is made to the publication, to its date

of issue, and to the fact that reprinting privileges were granted

by permission of the Association for Computing Machinery.

Author's address: Naval Research Laboratory, Washington,

DC 20375.


In this paper we report on some experiments whose

results have helped us to understand differences in the

performance of two well-known storage-allocation strategies, first-fit and best-fit. In first-fit allocation, available blocks of storage are examined in the order of their

starting addresses, and the pending storage request is

allocated in the first encountered block in which it fits.

In best-fit allocation, the pending storage request is

allocated in the smallest available block in which it fits.
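The two selection rules just defined can be sketched as follows. This is a minimal illustration, not the author's simulator: the representation of a free block as a `(start, size)` tuple and the function names `first_fit` and `best_fit` are assumptions made for the example.

```python
from typing import List, Optional, Tuple

# Assumed representation: a free block is (start_address, size),
# and the free list is kept sorted by start address.
FreeBlock = Tuple[int, int]


def first_fit(free: List[FreeBlock], request: int) -> Optional[int]:
    """Index of the lowest-addressed free block that can hold the request."""
    for i, (_, size) in enumerate(free):
        if size >= request:
            return i
    return None


def best_fit(free: List[FreeBlock], request: int) -> Optional[int]:
    """Index of the smallest free block that can hold the request.

    Ties are broken in favor of the lower address (an assumption)."""
    best = None
    for i, (_, size) in enumerate(free):
        if size >= request and (best is None or size < free[best][1]):
            best = i
    return best


free_list = [(0, 300), (1000, 120), (5000, 100)]
print(first_fit(free_list, 100))  # chooses the block at address 0
print(best_fit(free_list, 100))   # chooses the 100-word block at address 5000
```

For the same request, first-fit leaves a 200-word remainder at the low end of memory, whereas best-fit leaves no remainder at all.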

For each allocation, the work required to choose

among available blocks of memory, or to make available

a block of sufficient size, is generally termed the allocation overhead. The choice of an allocation technique for

a particular application and machine is necessarily a

compromise between the conflicting desires for efficient

use of memory and low allocation overhead. Memory

compaction, a process that rearranges memory contents

so that all free space is contiguous, is a brute force way

of making the best use of available space. At one extreme of memory allocation techniques, one might

compact memory after every release of space. Allocation

decisions are then trivial, the use of memory is maximal,

but the overhead is high. At another extreme, one might

have a sophisticated allocator that obviates compaction

by making decisions that are in some sense optimal [1].

Such allocators might take into account knowledge of

the statistics of the distributions of request sizes and

memory residence times; they might even conduct their

own look-ahead simulations. Memory is well used, but

the overhead is again high.

First-fit and best-fit are examples of strategies that

perform well with low overhead, hence their popularity.

In studying their performance, as measured by their

efficiency in using memory, we ignore allocation overhead. This restriction enables us to advance and test

hypotheses concerning the reasons for observed performance differences without the ambiguities that would

be injected if overhead were included. Such ambiguities

could not be avoided because the overhead is as much a

function of how an allocation technique is implemented,

and on what machine, as it is a function of the principles

of the technique.

2. Motivation

The original motivation of our work was the somewhat puzzling success [2] of best-fit allocation in the

Automatic Operating and Scheduling Program (AOSP)

[3] of the Burroughs D-825, a modular, multiprogrammed multiprocessor first delivered in 1962 [4].

Best-fit had been chosen, in the absence of any literature

advising to the contrary, because it was both simple and

intuitively efficient [5]. Debilitating fragmentation,

which had been anticipated with plans for periodic

memory compaction, never occurred.

Communications

of

the ACM

August 1975

Volume 18

Number 8

Literature published since the AOSP design choices

were made has been inconclusive, both about the relative performance of first-fit and best-fit and about

the reasons underlying any differences. Collins [6] reported that best-fit performs slightly better than first-fit,

although he gave no details. In a stimulating discussion,

Knuth [7] reported on a series of simulations that

showed first-fit to be superior, and he offered an explanation for these results*. He did not, however, give

many details about the actual performance.

Against this background, we decided to study best-fit

and first-fit allocation. Because best-fit and first-fit

seem to embody distinctly different systematic effects

and because we wanted to restrict the number of variables, we chose not to include in our study such intermediate strategies as modified first-fit [7].

3. Simulation Description

We simulated the allocation of an infinite proffered

workload of storage requests in a fixed-size memory.

Storage is allocated in units of one word so that no more

than the requested amount is ever allocated. Resulting

fragmentation is therefore purely external [8]. The event-driven

simulator maintains a list of available-storage

blocks. When an allocated block is released, the simulator determines whether it is located adjacent to one or

two blocks already on the available-storage list. If so,

the appropriate blocks are combined by modifying the

available-storage list. If not, the newly released block is

added to the list. The simulator then attempts to allocate

the pending storage request. If there is space for it, the

request is allocated at the low address end of whichever

available block is selected by a prespecified allocation

strategy. The available-storage list is then modified, and

the newly allocated space is scheduled for release at a

time computed from a prespecified distribution or

algorithm. Following a successful allocation, the simulator continues to generate and allocate new requests until

an attempted allocation fails. Whenever failure occurs,

the simulator advances to the next scheduled storage

release and continues as described in the foregoing until

a prespecified time limit is reached. Summary statistics

are then stored, and the simulator begins anew after

reinitializing everything but the random number generator. During one run, the simulation is repeated for a

prespecified number N of such iterations, after which the

statistics for each iteration and the averages over the

ensemble of iterations are printed.
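The bookkeeping on the available-storage list described above (combining a released block with adjacent free blocks, and allocating at the low-address end of the selected block) might be sketched as below. The tuple representation and the names `release` and `allocate` are hypothetical; the original simulator's data structures are not given in the text.

```python
from typing import List, Tuple

FreeBlock = Tuple[int, int]  # assumed: (start_address, size)


def release(free: List[FreeBlock], start: int, size: int) -> List[FreeBlock]:
    """Return a new address-ordered free list that includes the released
    block [start, start + size), merged with any adjacent free blocks."""
    merged: List[FreeBlock] = []
    for s, n in sorted(free + [(start, size)]):
        if merged and merged[-1][0] + merged[-1][1] == s:
            prev_s, prev_n = merged[-1]
            merged[-1] = (prev_s, prev_n + n)  # adjacent blocks: combine
        else:
            merged.append((s, n))
    return merged


def allocate(free: List[FreeBlock], index: int, request: int):
    """Allocate `request` words at the low-address end of free[index].

    Returns (address, new_free_list); a block that is used up entirely
    is dropped from the list."""
    s, n = free[index]
    rest = [(s + request, n - request)] if n > request else []
    return s, free[:index] + rest + free[index + 1:]


print(release([(0, 10), (20, 5)], 10, 10))   # the three blocks merge into one
print(allocate([(0, 10), (50, 5)], 0, 4))    # address 0, remainder (4, 6)
```

The choice of `index` is left to whichever allocation strategy is under study, which keeps the list maintenance independent of the strategy.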

In all of the simulations reported herein, the

memory-residence time of requests was continuously

and uniformly distributed between 5.0 and 15.0 time

units. Memory contents were therefore replenished on

the average once every 10 time units. Preliminary studies

showed that major transients in the various performance statistics subsided by about 5 to 10 memory replenishments, so that in production runs no statistics


were gathered until at least 50 time units had elapsed.

Iteration time limits for production runs were typically

500 to 3000 units.

In referring to distributions of storage requests we

shall use the following notation:

$\bar{r}$ -- mean of request generating distribution.

$\sigma$ -- standard deviation of request generating distribution.

$M$ -- memory size ($M$ = 32K for all results reported herein).

$\lambda$ -- $1/\bar{r}$.

$\alpha$ -- coefficient of variation ($\sigma/\bar{r}$).

Exponential, hyperexponential, normal, and uniform

distributions are available for generating requests. Requests outside of the interval [0,M] are discarded, and

all requests are truncated to the nearest whole word.

These actions will not produce significant distortions of

the generating distribution p(x) provided that the

following conditions hold:

$$1 \ll \bar{r} \ll M, \tag{1A}$$

$$\int_0^M p(x)\,dx \approx 1. \tag{1B}$$

If the exponential distribution $p(x) = \lambda \exp(-\lambda x)$, for example, is used, the probability density function of requests that satisfy $0 \le x \le M$ and are truncated to the nearest integer $k$ is

$$p(k) = \frac{1 - \exp(-\lambda)}{1 - \exp(-\lambda M)} \exp(-\lambda k), \tag{2}$$

where $k = 0, 1, \ldots, M - 1$. The condition (1A) implies $\lambda M \gg 1$ and $\lambda \ll 1$, so that eq. (2) becomes

$$p(k) \approx \lambda \exp(-\lambda k).$$
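As a numerical check of the truncated exponential form and its limiting approximation, the following sketch evaluates both for a mean request of 1024 words in a 32K memory. The function names `p_exact` and `p_approx` are introduced here for illustration only.

```python
import math


def p_exact(k: int, lam: float, M: int) -> float:
    """Probability of integer request k (0 <= k <= M - 1) for an
    exponential distribution restricted to [0, M] and truncated to integers."""
    return (1 - math.exp(-lam)) / (1 - math.exp(-lam * M)) * math.exp(-lam * k)


def p_approx(k: int, lam: float) -> float:
    """Limiting form lam * exp(-lam * k), expected to hold when 1 << mean << M."""
    return lam * math.exp(-lam * k)


M = 32 * 1024
lam = 1 / 1024  # mean request of 1024 words

# The exact probabilities should sum to one over k = 0 .. M - 1.
total = sum(p_exact(k, lam, M) for k in range(M))

# Relative difference between the exact and approximate forms at one point.
rel_err = abs(p_exact(100, lam, M) - p_approx(100, lam)) / p_exact(100, lam, M)

print(total, rel_err)
```

With these parameters the relative difference is of order $\lambda/2$, which is why the approximation is adequate whenever the mean request is many words long.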

Two options are available concerning the size of the

population of possible requests. When what we call the

full population is used, storage requests are obtained

directly from the appropriate generating distribution

each time a new request is needed. When what we call a

partial population is used, P requests are obtained from

the appropriate generating distribution during the initialization phase of each iteration of a simulation run.

These P requests are retained for the duration of the

iteration, and storage requests are generated by choosing randomly from among them. It is important to note

that the full population is not equivalent to a partial

population with P = M, since, unless the generating

distribution is uniform, it is most unlikely that all of the

M different requests that can be generated by the full

population will be included in the partial population.
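The distinction between the full and the partial population can be illustrated with two request generators. This is a sketch under stated assumptions: requests are exponential, out-of-range draws are redrawn, and the names `draw_request`, `full_population`, and `partial_population` are hypothetical.

```python
import random


def draw_request(rng: random.Random, mean: float, M: int) -> int:
    """One exponential request truncated to a whole word; draws outside
    [0, M] are discarded and redrawn."""
    while True:
        x = rng.expovariate(1.0 / mean)
        if 0 <= x <= M:
            return int(x)


def full_population(rng: random.Random, mean: float, M: int):
    """Full population: every request comes directly from the distribution."""
    while True:
        yield draw_request(rng, mean, M)


def partial_population(rng: random.Random, mean: float, M: int, P: int):
    """Partial population: P requests are fixed at initialization and
    later requests are chosen at random from among them."""
    pool = [draw_request(rng, mean, M) for _ in range(P)]
    while True:
        yield rng.choice(pool)


rng = random.Random(1)
gen = partial_population(rng, mean=1024, M=32 * 1024, P=4)
sample = [next(gen) for _ in range(200)]
print(len(set(sample)))  # never more than P distinct request sizes
```

Because the pool is drawn once per iteration, a partial population with large P still samples the distribution rather than enumerating every possible request size.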

The assumption of an infinite proffered workload is

an aspect of the simulation that merits further discussion. As an alternative, we could have assumed a finite

workload in which requests are scheduled in accordance

with some distribution of arrival times. To see that this

would have introduced an unnecessary degree of

freedom, suppose that two different allocation strategies

are successful in accommodating a given finite workload


in a given sized memory, where by successful we mean

that no allocation attempt ever fails. The most significant measurements are then those that quantify the

degree of storage fragmentation. Such measurements

are of interest only insofar as they may predict which

of the two strategies would accommodate the largest

workload, or which would successfully accommodate

the given workload in the smallest memory. One might

study these questions empirically by increasing the

workload or decreasing the memory size until one of the

strategies saturates, that is, until one of them results in a

constant or growing queue of storage requests--a condition equivalent to an infinite proffered workload.

Clearly, one could gain the same information simply

by fixing the memory size, subjecting both strategies to

an infinite workload, and measuring the efficiencies.

3.1 Performance Measurement

Although the simulator gathers a wide variety of

statistics, all of our results will be presented in terms of

one figure of merit, the time-memory-product efficiency

$E$. If $n$ requests $r_i$, $i = 1, 2, \ldots, n$, are allocated for times $t_i$ in a memory of size $M$ during a total elapsed time $T$, then the efficiency is defined as

$$E = \frac{1}{MT} \sum_{i=1}^{n} r_i t_i. \tag{3}$$

E is a direct measure of how well the memory has been

used and seems to us to be the best single figure of merit

with which to compare the performance of different

allocation strategies.
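The time-memory-product efficiency is straightforward to compute from a log of allocations. The sketch below assumes each allocation is recorded as a `(size, residence_time)` pair; the function name `efficiency` and the sample numbers are illustrative, not data from the paper.

```python
from typing import Iterable, Tuple


def efficiency(allocations: Iterable[Tuple[float, float]], M: float, T: float) -> float:
    """Time-memory-product efficiency: sum of size * residence time,
    divided by memory size M times total elapsed time T."""
    return sum(r * t for r, t in allocations) / (M * T)


# Three hypothetical allocations in a 1000-word memory observed for 20 time units.
log = [(400, 10.0), (500, 20.0), (100, 5.0)]
print(efficiency(log, M=1000, T=20.0))
```

Here the occupied space-time area is 14,500 word-units out of an available 20,000, so about 72 percent of the memory's capacity was used.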

One alternative performance measure is the storage

utilization fraction $U$, defined as an average of a quantity $U_t$, which in turn is a function of the memory contents at any time $t$. If $\{r_{it}\}$ is the set of requests that happen to be resident in memory at time $t$, then

$$U_t = \frac{1}{M} \sum_i r_{it}.$$

The measures E and U are not generally equivalent.

Among other things, the difference depends upon how

samples Ut are selected for inclusion in the average U.

If the memory is sampled periodically, with an intersample time that is small with respect to the average

residence time of requests, then E and U are equivalent.

If samples Ut are taken only at times of allocation activity, then E and U will differ to the extent that residence times are a function of request sizes. To be more

specific, consider an extreme case in which we have a

partial population of only two requests, $r_1$ and $r_2$. Furthermore, let these requests satisfy the constraint $r_1 + r_2 > M$; i.e., only one request at a time fits in memory. If $r_1$ and $r_2$ have fixed residence times of $t_1$ and $t_2$, respectively, then the allocation efficiency is given, on the average, by $E = (r_1 t_1 + r_2 t_2)/[M(t_1 + t_2)]$, whereas the storage utilization is, on the average, $U = (r_1 + r_2)/(2M)$. If $r_1 \ll r_2$ and $r_2 \approx M$, then $U \approx 1/2$. But if it also happens to be true that $t_1 \ll t_2$, then the performance figure $U \approx 1/2$ is most misleading (consider the space-time diagram). On the other hand, the efficiency $E$

approaches unity, as is appropriate for a measure of

how well memory has been used. The insensitivity of U

to memory residence times makes it a poor performance

measure.
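The two-request example can be worked numerically. The values below are hypothetical and chosen only to satisfy the stated conditions (one request much smaller than the other, the larger nearly filling memory, and the smaller having a much shorter residence time); the function names are likewise assumptions.

```python
def two_request_E(r1: float, r2: float, t1: float, t2: float, M: float) -> float:
    """Efficiency when the two requests alternate, one at a time, in memory."""
    return (r1 * t1 + r2 * t2) / (M * (t1 + t2))


def two_request_U(r1: float, r2: float, M: float) -> float:
    """Utilization when sampled only at times of allocation activity."""
    return (r1 + r2) / (2 * M)


M = 1000
r1, r2 = 10, 995      # small request and a request that nearly fills memory
t1, t2 = 1.0, 100.0   # the small request is resident only briefly

E = two_request_E(r1, r2, t1, t2, M)
U = two_request_U(r1, r2, M)
print(E, U)
```

With these numbers the utilization figure is close to one half although memory is almost full nearly all of the time, which the efficiency figure reflects.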

As mentioned previously, in all of the simulations

reported herein, the memory residence times were uniformly distributed and independent of request size. In

the foregoing example, U and E become equivalent if

the memory residence times are independent of the

request sizes. That such an equivalence holds for the

general allocation problem as well can be seen from the

following argument: Given sufficient samples, one can

write the storage utilization in terms of average values

as $U = \bar{n}\bar{r}/M$. Here, $\bar{n}$ is defined as the average number of requests that are resident in memory when a sample $U_t$ is taken. If the residence-time distribution is independent of the request-size distribution, then the efficiency is, on the average,

$$E = N \bar{t}\,\bar{r} / MT, \tag{4}$$

where $N$ is the (ensemble) average number of requests allocated during some long period of length $T$ and $\bar{t}$ is the average memory residence time. But $N\bar{t}/T$ is the

average number of requests resident at any time, hence

E and U are equivalent. The simulation results were

spot-checked to verify eq. (4) and the equivalence,

given request-size independent residence times, of E

and U. Both comparisons checked to two decimal

places.

Another alternative performance measure is

throughput, which might be defined as the average

number of requests allocated per unit time or as the

average amount of memory allocated per unit time.

But, given long time averages and stationary request

statistics, the throughput will be proportional, in both

cases, to E.

In general, results will be plotted as functions of

various parameters of the original request generating

distribution, rather than of the distorted distribution

that may result from restricting requests to integers on

the interval [0,M].

Owing to the stochastic nature of the simulation,

the allocation efficiency varies slightly from iteration to

iteration, even though the conditions being simulated

are the same for all iterations of a run. This point is

not important if one is comparing situations for which

the variances of the apparently normal distributions of

efficiencies are small compared to the distances between

their expected values. In most of our simulations,

however, the distribution of efficiencies obtained with

first-fit allocation overlapped that obtained with best-fit

allocation when all conditions, other than the allocation

strategy, were the same. It was, therefore, important to

sample the distributions sufficiently often to estimate

their expected values with enough accuracy to resolve

them. The expected values are estimated by averaging the efficiencies from all iterations run under the same conditions. In plotting these estimates we also show their 95 percent confidence limits, which are given by $\pm 1.96\,\sigma/\sqrt{N}$, where $\sigma$ is the standard deviation, which we estimate from the set of samples, and $N$ is the number of iterations averaged. In general, the observed distributions of efficiencies appeared to be symmetrical about a single peak. In discussions that follow, we assume that the mean efficiency coincides with the peak of the distribution.

Legend for the figures. In the figures the memory size was 32K. First-fit, best-fit, and worst-fit points are plotted with separate symbols, and in Fig. 1 the best-fit points are offset slightly from the first-fit points. The measured coefficient of variation $\alpha$ is plotted as ×. For allocation efficiencies, the error bars show the 95 percent confidence limits of the estimates of mean efficiency.

Fig. 1. Allocation efficiency $E$ of first-fit and best-fit versus size $P$ of the request population. The generating distribution was exponential with $\bar{r} = 1024$. For $P = 4$, 100 iterations were averaged. All other points are the result of averaging ten iterations.

Fig. 2. Allocation efficiency $E$ of first-fit, best-fit, and worst-fit versus mean request $\bar{r}$ for exponentially distributed requests. Each point is the result of averaging ten iterations.

Fig. 3. Fraction of allocations in which requests could have fit in more than one available block versus mean request $\bar{r}$. Data was measured during the best-fit runs plotted in Figure 2.

Fig. 4. Allocation efficiency $E$ of best-then-first-fit versus the breakpoint $B$ between the best-fit and first-fit regions. The request distribution was exponential with $\bar{r} = 256$. Each point is the result of averaging 70 iterations.

Fig. 5. Allocation efficiency $E$ of first-fit and best-fit, and measured value of the coefficient of variation $\alpha$, versus maximum request $r_{\max}$. Requests were generated from an exponential distribution with $\bar{r} = 1024$ and ignored unless they satisfied $0 < r < r_{\max}$. Each point is the result of averaging 25 iterations.

Fig. 6. Allocation efficiency $E$ of first-fit and best-fit, and measured value of the coefficient of variation $\alpha$, versus minimum request $r_{\min}$. Requests were generated from an exponential distribution with $\bar{r} = 256$ and ignored unless they satisfied $r_{\min} < r < 32\mathrm{K}$. Each point is the result of averaging 25 iterations.

Fig. 7. Allocation efficiency $E$ of first-fit and best-fit, and measured value of the coefficient of variation $\alpha$, versus mean $\bar{r}$ of the request distribution. Request distribution was uniform with $\sigma = 256$. Each point is the result of averaging 25 iterations.

Fig. 9. Allocation efficiency $E$ of first-fit and best-fit versus the coefficient of variation $\alpha$ of the request distribution. The request distribution was hyperexponential with mean request $\bar{r} = 512$. Each point is the result of averaging 10 iterations.

Fig. 8. Allocation efficiency $E$ of first-fit and best-fit, and measured value of the coefficient of variation $\alpha$, versus standard deviation $\sigma$ of request distribution. Request distribution was uniform with $\bar{r} = 1024$. Each point is the result of averaging 25 iterations.

3.2 Some Hesitations

It is important to emphasize that the results of simulations like ours must be interpreted with caution. For example, much of our work is based on exponential distributions of request sizes, and although some published data shows exponential-like distributions [9], other data does not [10]. Moreover, such simplifying assumptions as well-behaved distributions, independence of successive requests, and independence of request size and duration are questionable [11]. The assumption of a sequential set of requests in which no new request is made until the previous one is satisfied is realistic for certain situations only.

4. Results for Partial Populations

Initially, we investigated the relationship between

the size of the request population and the p e r f o r m a n c e

o f best-fit and first-fit. O u r motivation was W a l d ' s

hypothesis that the absence o f debilitating fragmentation in the AOSP'S use of best-fit was due to the discrete

population o f storage requests that a c c o m p a n i e d the

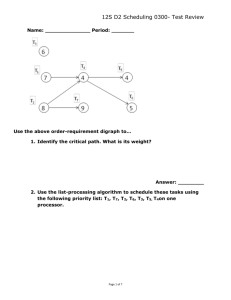

real time application [2]. Figure 1 shows the results o f

a series o f experiments in which a partial P-request

population was generated at the start of each iteration

Communications

of

the ACM

August 1975

Volume 18

Number 8

of the simulation by an exponential distribution with a

mean of 1024. As one should expect, the allocation

efficiency increased as P was reduced, but most of the

increase was confined to the region P < 16. For the

full-population case, first-fit outperformed best-fit by

about 1 percent. As P was reduced, the variance of the

measured efficiency increased to the point where we

could no longer resolve the relative performance, although the results suggest mildly that, for small P,

best-fit outperformed first-fit. For P = 4, we tried to

resolve the relative performance by running 100 iterations, compared to 10 for all other points, but were

unsuccessful.

Because there were several hundred possible requests in the real time application of the D-825 [12],

Figure 1 implies that the success of best-fit reported by

Wald [2] was due more probably to a nonsaturating

workload, relative to the available storage and the

allocation efficiency of best-fit, than it was to the

discreteness of the request population.

5. Results for Full Populations

5.1 Initial Comparison of Best-Fit and First-Fit

In order to understand better the relative performance of best-fit and first-fit, we measured their

allocation efficiencies as a function of the mean of a

full, exponentially distributed population (see Figure 2).

In both cases the efficiency increased as the mean request decreased. When the mean request was less than

about 4K, first-fit consistently performed about 1 percent better than best-fit. When the mean request was

greater than 8K, the two strategies performed equally

well. These results of ours are consistent with those of

Knuth [7].

5.2 Knuth's Hypothesis and Worst-Fit

In trying to understand the reasons underlying the

observed performance differences, we began with a

hypothesis implied by Knuth [7]. This hypothesis

notes that, statistically speaking, the storage fragment

left after a best-fit allocation is smaller than that left

after a first-fit allocation. Because the smaller fragment

seems less likely to be useful in a subsequent allocation,

it follows that best-fit, by proliferating smaller blocks

than does first-fit, should result in more fragmentation

and lower efficiency than first-fit.

Because the foregoing hypothesis is difficult to test

directly, we considered a worst-case generalization.

That is, if the hypothesis is correct, it might be that

the best performance would result from a strategy that

systematically leaves the largest possible fragment.

Thus, allocation in the largest available block, or

worst-fit, should result in better performance than

either best-fit or first-fit. But it does not (see Figure 2).

Indeed, worst-fit is worse than both best-fit and first-fit. Furthermore, the superiority of best-fit over worst-fit

shows that, under some circumstances, it is better to

allocate a request in the available block that results in

the smallest remaining fragment.
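For comparison with first-fit and best-fit, worst-fit selection can be sketched in the same style. The `(start, size)` free-block representation and the name `worst_fit` are assumptions made for this illustration.

```python
from typing import List, Optional, Tuple


def worst_fit(free: List[Tuple[int, int]], request: int) -> Optional[int]:
    """Index of the largest free block, or None if even the largest
    block cannot hold the request. Ties go to the lower address."""
    if not free:
        return None
    i = max(range(len(free)), key=lambda j: free[j][1])
    return i if free[i][1] >= request else None


free_list = [(0, 300), (1000, 120), (5000, 100)]
print(worst_fit(free_list, 100))  # the 300-word block, leaving a 200-word fragment
```

Every allocation under this rule carves up the largest block available, which suggests one reason it may run short of space for large requests.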

Several aspects of Figure 2 merit further comment.

At first glance it might suggest that, in general, allocation efficiency depends most strongly on the ratio of the

mean request to the memory size. But such a conclusion

is unfounded. Since the mean and the standard deviation of exponential distributions are equal, Figure 2

equally supports the hypothesis that allocation efficiency

depends most strongly on the ratio of the standard

deviation of the request distribution to the memory size.

It is reasonable to expect that at least first and second

order statistics of the request distribution are important. For example, if $M/\bar{r}$ is a large integer, and if we vary $\sigma$ while measuring $E$, then we expect $E$ to approach 1 as $\sigma$ becomes small with respect to $\bar{r}$. If, for $\sigma \ll \bar{r}$, we vary $\bar{r}$ and measure $E$, then we expect $E$ to be a nonmonotonic function of $\bar{r}$ that has a local maximum whenever $M/\bar{r}$ is integral, and is less than 1 between these points.

The near-equal performance of all three strategies

for $\bar{r} \gtrsim 8\mathrm{K}$ is an intriguing result which we finally

traced to the simple principle that, whenever the average

request is a significant fraction of the memory size,

there is rarely more than one available block in which

a pending storage request can fit (see Figure 3). This

being the case, allocation efficiency cannot be affected

by allocation strategy.

The apparent decrease in the performance of worst-fit as $\bar{r}$ is reduced from $\bar{r} \approx 1024$ is in marked contrast to the increase in the performance of both best-fit and first-fit. As the limit $\bar{r} \to 1$ is approached, the efficiency of any reasonable strategy should approach 1. Some single-iteration runs indicated that the performance of worst-fit begins increasing again at about $\bar{r} = 32$. The shallow peak in the performance of worst-fit between $\bar{r} \approx 4096$ and $\bar{r} \approx 128$ and the tiny mean request

required before a performance increase is again observed are results for which analytic explanations

should be extremely illuminating.

5.3 A Mixed Strategy To Test a Hypothesis for the

Superiority of First-Fit

It is reasonable to attribute the superior performance

of best-fit over worst-fit to the beneficial effect of allocating a request in the available block that results in the

smallest remaining fragment. Since first-fit outperforms

best-fit, and since first-fit does not choose the available

block that results in the least remainder, it must embody

a beneficial effect that competes with the beneficial

effect of best-fit and, for the circumstances we have

considered so far, dominates it. It seems reasonable

that the key to such a beneficial effect should lie in the

one systematic effect produced by first-fit: preferential

allocation toward one end of memory. With this in

mind, we examined detailed outputs of the simulation

and noted that, as one might expect, the biggest decreases in efficiency occurred when the memory could

not accommodate a relatively large request, with no

allocation taking place until a sufficient amount of

contiguous space accumulated. This observation led to

the hypothesis that, by preferentially allocating toward

one end of memory, first-fit encourages large blocks to

grow at the other end. Sufficient contiguous space is

thereby more likely to be available for relatively large

requests. Stated differently, allocation toward one end

of memory is a beneficial effect of first-fit that competes

with the small-remainder beneficial effect of best-fit.

To test this hypothesis, we devised a mixed allocation strategy that can be varied parametrically between

first-fit and best-fit in such a way to expose directly the

competition between beneficial effects. Memory is divided by a pointer $B$ into two regions. The lower region, words 0 through $B$, is termed the best-fit region. The upper region, words $B + 1$ through $M$, is termed the first-fit region. The allocator first tries to allocate requests in the lower region using best-fit. If unsuccessful, it then tries to allocate requests in the upper region

using first-fit. Thus, when B = 0, pure first-fit results

and, when B = M, pure best-fit results. If the efficiency

of this best-then-first-fit strategy is measured as B is

varied from 0 to M, the efficiency should start at that

of pure first-fit and, as B approaches M, eventually drop

to that of pure best-fit. For small B, requests will still

be allocated preferentially toward the low end of

memory, but there will be some best-fit allocation

mixed in at the low end. Our hypothesis suggests that

under these circumstances we might enjoy some of the

beneficial effects of both first-fit and best-fit, in which

case, as B increases, the allocation efficiency would

increase before it drops to the value for pure best-fit.

The results of such an experiment supported our

hypothesis and are shown in Figure 4.
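A minimal sketch of the best-then-first-fit selection rule is given below. The text does not say how a free block that straddles the breakpoint is classified, so this sketch assumes a block belongs to the lower region when its starting address is at most B; the function name and block representation are likewise assumptions.

```python
from typing import List, Optional, Tuple


def best_then_first_fit(free: List[Tuple[int, int]], request: int, B: int) -> Optional[int]:
    """Select a free block index for the request.

    free is an address-ordered list of (start, size). Blocks starting at
    or below B are treated as the best-fit region (an assumption); the
    remaining blocks form the first-fit region."""
    lower = [i for i, (s, n) in enumerate(free) if s <= B and n >= request]
    if lower:
        # Best-fit within the lower region: smallest block that fits.
        return min(lower, key=lambda i: free[i][1])
    for i, (s, n) in enumerate(free):
        if s > B and n >= request:
            return i  # first-fit within the upper region
    return None


free_list = [(0, 300), (1000, 120), (5000, 100), (9000, 400)]
for B in (0, 2000, 10000):
    print(B, best_then_first_fit(free_list, 100, B))
```

With the breakpoint at 0 the choice coincides with first-fit, and with the breakpoint at or beyond the last block it coincides with best-fit, matching the limiting cases described in the text.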

The foregoing best-then-first-fit experiment illustrated the danger of not paying sufficient attention to

estimation theory in such simulations. For B = 0, the

distribution of efficiencies coincides with the first-fit

distribution. As B varies from 0 to M, the distribution

changes shape and moves until, at B = M, it coincides

with the best-fit distribution. The experiment consisted

of estimating, as a function of B, the position of the

distribution's peak. The results (Figure 4) show that

the peak moves in the direction of higher efficiency

before moving down to coincide with the peak of the

distribution for pure best-fit. We had initially reached

a negative conclusion until we realized that the standard

error $(\sigma/\sqrt{N})$ resulted in insufficient resolution to

detect the motion that was taking place for the number

of iterations that were considered originally.
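The resolution argument can be made concrete with a short calculation of the 95 percent confidence half-width and of the number of iterations needed to resolve a given difference. This is a sketch only: the use of the sample (N - 1) standard deviation and the function names are assumptions, and the numbers are illustrative rather than taken from the experiments.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_with_ci(samples: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """Return (mean, half_width), where half_width = z * s / sqrt(N)
    and s is the sample standard deviation (an assumed estimator)."""
    n = len(samples)
    s = statistics.stdev(samples)
    return statistics.mean(samples), z * s / math.sqrt(n)


def iterations_needed(sigma: float, resolution: float, z: float = 1.96) -> int:
    """Smallest N for which z * sigma / sqrt(N) does not exceed resolution."""
    return math.ceil((z * sigma / resolution) ** 2)


mean, half = mean_with_ci([0.82, 0.83, 0.84, 0.83])
print(mean, half)
print(iterations_needed(sigma=0.01, resolution=0.002))
```

Because the half-width shrinks only as the square root of N, halving it requires four times as many iterations.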

5.4 Conditions Under Which Best-Fit Should Outperform

First-Fit

If, as was suggested, first-fit was more efficient than

best-fit because first-fit encourages the growth of

relatively large free blocks which are thereby available

more often for the allocation of relatively large requests, then the absence of such large requests should eliminate the beneficial effect of first-fit. One way to test this prediction is to generate requests from an exponential distribution that has been truncated at $r_{\max} < M$. When $r_{\max} = M$, we have the same situation as before; typical results were shown in Figure 2. As $r_{\max}$ is reduced from $M$, fewer large requests are made, the hypothesized beneficial effect of first-fit is depreciated, and best-fit should outperform first-fit. Results,

shown in Figure 5, confirm the prediction.

These results imply that the statistical advantage of

first-fit over best-fit depends on the frequency of requests that are large with respect to the mean request.

In order to make a more precise statement, we introduce

the coefficient of variation $\alpha$, given by the ratio of the

request distribution's standard deviation to its mean,

$\alpha = \sigma/\bar{r}$. The smaller $\alpha$ is, the smaller is the frequency

of requests that are large compared to $\bar{r}$. For an exponential distribution truncated at $r_{\max}$, we have $\alpha \approx 1$

as long as $1 \ll \bar{r} \ll r_{\max}$. When $r_{\max}$ and $\bar{r}$ are of the

same order of magnitude, $\alpha < 1$. In Figure 5 we have also

plotted the measured value of $\alpha$ as a function of $r_{\max}$.
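
The dependence of the coefficient of variation on the truncation point can be sketched in closed form. The sketch below assumes a continuous exponential distribution with untruncated mean r0 that is conditioned on x <= r_max (requests above the truncation point are simply discarded); the paper does not spell out its truncation procedure, and the function name is hypothetical.

```python
import math


def truncated_exp_cv(r0: float, r_max: float) -> float:
    """Coefficient of variation of an exponential (mean r0) conditioned on x <= r_max."""
    if r0 <= 0 or r_max <= 0:
        raise ValueError("r0 and r_max must be positive")
    tail = math.expm1(r_max / r0)  # e^(r_max/r0) - 1; overflows for ratios above ~709
    mean = r0 - r_max / tail  # first moment of the truncated distribution
    second = 2 * r0**2 - (r_max**2 + 2 * r0 * r_max) / tail  # second raw moment
    return math.sqrt(second - mean**2) / mean
```

With r0 fixed, the value approaches 1 when r_max is many times r0 and falls toward 1/sqrt(3), about 0.577 (the uniform limit), as r_max shrinks.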

From a statistical point of view it is more appropriate to think of the crossover in Figure 5 in terms of

the parameter $\alpha$ than in terms of the truncation point

$r_{\max}$. Thus, if $\alpha$ is reduced by distorting the exponential

distribution in some other way than by decreasing the

truncation point, then a similar crossover should occur.

For example, in Figure 6 we show the results of an

experiment in which the lower end of an exponential

distribution was cut off at $r_{\min}$. The results clearly

show a crossover in which best-fit becomes better than

first-fit as $\alpha$ drops below about 0.8.
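
A worked case shows how a lower cutoff reduces $\alpha$. Assume, as an idealization that may differ from the experiment, that requests below $r_{\min}$ are discarded and that the upper truncation at M is negligible; $r_0$ denotes the mean of the uncut exponential (a symbol introduced here for illustration). The memoryless property then makes the cut distribution a shifted exponential:

```latex
\begin{align*}
p(x) &= \frac{1}{r_0}\exp\!\left(-\frac{x - r_{\min}}{r_0}\right), \qquad x \ge r_{\min},\\
\bar{r} &= r_{\min} + r_0, \qquad \sigma = r_0,\\
\alpha &= \frac{\sigma}{\bar{r}} = \frac{r_0}{r_{\min} + r_0}.
\end{align*}
```

Under these assumptions $\alpha$ falls below 0.8 once $r_{\min} > r_0/4$.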

In comparing these results with those shown in

Figure 2, it is important to note that, in Figure 6, the

absolute performance of both strategies increases with

$r_{\min}$, even though the actual mean request also increases with $r_{\min}$, a result that underlines our previous

caution in interpreting Figure 2. Indeed, on the basis

of all of the results presented so far, one might now be

tempted to conclude that allocation efficiency is most

strongly dependent on $\sigma$, and only weakly dependent

on $\bar{r}$. But such a conclusion would be premature without

data from experiments with distributions for which

$\bar{r}$ and $\sigma$ can be specified independently.

The present results may also explain the mild

implication, in Figure 1, that best-fit outperforms

first-fit for small request populations. Let $s^2$ be the

variance of n samples taken from a distribution whose

variance is $\sigma^2$. Then the expectation value of $s^2$ is given

[13] by $\langle s^2 \rangle = \sigma^2 (n - 1)/n$. Since the square root is a

concave function, we have the following estimate for

the expectation value of $s$ itself [14]:

$\langle s \rangle = \langle (s^2)^{1/2} \rangle < \langle s^2 \rangle^{1/2}$.

Thus, since $\bar{r} = \sigma$ for exponential distributions, the

expectation value of the coefficient of variation may be

estimated, as a function of n, by $\langle \alpha \rangle \approx \langle s \rangle / \bar{r} < ((n - 1)/n)^{1/2}$. Thus, $\alpha$ is significantly less than 1 for small n.
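
The downward bias for small n can be checked numerically. The following Monte Carlo sketch assumes exponentially distributed requests with a known mean and uses the divide-by-n sample variance, matching the expectation quoted from [13]; the function names, trial count, and seed are illustrative choices.

```python
import math
import random


def mean_s_over_rbar(n: int, trials: int = 5000, rbar: float = 1.0, seed: int = 0) -> float:
    """Monte Carlo estimate of <s>/rbar for n exponential samples of mean rbar."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        sample = [rng.expovariate(1.0 / rbar) for _ in range(n)]
        m = sum(sample) / n
        # Sample standard deviation with the divide-by-n convention.
        total += math.sqrt(sum((x - m) ** 2 for x in sample) / n)
    return total / (trials * rbar)


def jensen_bound(n: int) -> float:
    """Upper bound ((n - 1)/n)^(1/2) on <s>/sigma given in the text."""
    return math.sqrt((n - 1) / n)
```

Comparing `mean_s_over_rbar(n)` with `jensen_bound(n)` for a few small values of n shows the estimate sitting below the bound and rising toward 1 as n grows.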

5.5 Results for Uniform and Normal Distributions

In Figure 7 we show the results of an experiment in

which we varied the mean of a uniform distribution with

a constant standard deviation and measured the efficiency of first-fit and best-fit. In Figure 8 we show the

results obtained when the mean is held constant while

the standard deviation is varied. In both experiments

the efficiency of first-fit allocation never exceeded that

of best-fit. The ratio $\alpha$ was always quite small; we note

that, if $w$ is the width of a uniform distribution, then

$\alpha = w/(\bar{r}\,(12)^{1/2})$, and is maximal when $\bar{r} = w/2$. Thus,

$\alpha_{\max} = 2/(12)^{1/2} = 0.577$.
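
The bound can be reproduced with a few lines of code. This is a minimal sketch assuming a continuous uniform distribution that does not extend below zero; the function name is hypothetical.

```python
import math


def uniform_cv(mean: float, width: float) -> float:
    """Coefficient of variation sigma/mean for a uniform distribution of given width."""
    if width <= 0 or mean < width / 2:
        raise ValueError("need width > 0 and mean >= width/2 (no negative requests)")
    sigma = width / math.sqrt(12.0)  # standard deviation of a uniform distribution
    return sigma / mean
```

The largest value occurs at the smallest admissible mean, `uniform_cv(w / 2, w)`, which equals 2/sqrt(12) regardless of w.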

Similar experiments were performed using normal

distributions. The results were qualitatively the same

as those shown for uniform distributions [15]. Among

other things, the combined results show that the absolute performance of an allocation strategy can depend

strongly on both the first moment and the second central

moment of the request distribution. The results also

lend further support for the hypothesis of Section 5.3

concerning the relative performance of best-fit and

first-fit.

5.6 Results for Hyperexponential Distributions

Results presented in previous sections suggest that

the superior performance of first-fit, seen when the

request distribution was exponential, should be accentuated for request distributions with $\alpha > 1$. Such a

distribution is the hyperexponential distribution

$p(x) = s \exp(-2s\lambda x) + (1 - s)\exp(-2(1 - s)\lambda x)$

$(0 < s < 1/2)$

which has the coefficient of variation

$\alpha = [(1 - 2s + 2s^2)/(2s(1 - s))]^{1/2}$.

When s = ½, the hyperexponential distribution becomes

exponential. In Figure 9, we show the results of an

experiment with hyperexponentials in which we increased $\alpha$ from $\alpha = 1$ while holding constant the

mean request. As $\alpha$ increased, so did the advantage of

first-fit over best-fit.
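
A request generator of this kind can be sketched as follows. The sketch assumes the usual two-branch construction: with probability s a request is drawn from an exponential of rate 2s/mean, otherwise from one of rate 2(1 - s)/mean, which keeps the mean fixed and reproduces the coefficient of variation quoted above. The function names are hypothetical, and the paper does not describe its own generator.

```python
import math
import random


def hyperexp_cv(s: float) -> float:
    """Coefficient of variation [(1 - 2s + 2s^2) / (2s(1 - s))]^(1/2)."""
    if not 0.0 < s <= 0.5:
        raise ValueError("s must satisfy 0 < s <= 1/2")
    return math.sqrt((1 - 2 * s + 2 * s * s) / (2 * s * (1 - s)))


def hyperexp_sample(s: float, mean_request: float, rng: random.Random) -> float:
    """Draw one request size from the assumed two-branch hyperexponential."""
    if rng.random() < s:
        rate = 2 * s / mean_request  # slow branch: rare but large requests
    else:
        rate = 2 * (1 - s) / mean_request  # fast branch: frequent small requests
    return rng.expovariate(rate)
```

Decreasing s from 1/2 raises the coefficient of variation above 1 while the mean request stays at `mean_request`, which is the condition varied in the experiment.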

6. Summary

Through simulation, we have investigated the

performance of first-fit and best-fit allocation using,

as a figure of merit, the time-memory-product efficiency.

Under the conditions we observed, the efficiencies of

first-fit and best-fit were generally within 1 to 3 percent

of each other. Except for small populations, the size

of the request population had little effect on allocation

efficiency, although this result was inferred from experiments with exponential distributions only.

The absolute performance of first-fit and best-fit can

depend strongly on at least the first moment and the

second central moment of the request distribution.

There is strong evidence that the relative performance

of the two strategies depends on the frequency of requests that are large compared to the average request.

When this frequency is high, first-fit outperforms best-fit because, by preferentially allocating toward one end

of memory, first-fit encourages large blocks to grow at

the other end. In terms of the coefficient of variation

$\alpha = \sigma/\bar{r}$, first-fit outperformed best-fit when $\alpha$ was close

to or greater than 1.
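
The difference between the two placement rules can be made concrete with a minimal sketch. It assumes a free list of (start address, size) holes kept in address order and omits the release and coalescing of blocks; the names and data layout are illustrative and are not taken from the simulation program.

```python
from typing import Callable, List, Optional, Tuple

Hole = Tuple[int, int]  # (start address, size)


def first_fit(holes: List[Hole], request: int) -> Optional[int]:
    """Index of the lowest-address hole that is large enough, or None."""
    for i, (_, size) in enumerate(holes):
        if size >= request:
            return i
    return None


def best_fit(holes: List[Hole], request: int) -> Optional[int]:
    """Index of the smallest adequate hole (ties go to the lowest address), or None."""
    fits = [(size, start, i) for i, (start, size) in enumerate(holes) if size >= request]
    return min(fits)[2] if fits else None


def allocate(holes: List[Hole], request: int,
             choose: Callable[[List[Hole], int], Optional[int]]) -> Optional[int]:
    """Carve the request from the low end of the chosen hole; return its address."""
    i = choose(holes, request)
    if i is None:
        return None
    start, size = holes[i]
    if size == request:
        del holes[i]  # hole consumed exactly
    else:
        holes[i] = (start + request, size - request)  # remainder stays free
    return start
```

On the free list `[(0, 50), (100, 20), (200, 500)]`, a request of 20 is placed at address 0 by first-fit and at address 100 by best-fit. First-fit keeps working from the low-address end, which is the behavior the hypothesis credits with leaving large blocks intact at the other end.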

Acknowledgments. The author thanks H. Elovitz,

J. Kullback, and B. Wald for numerous helpful discussions. He is particularly grateful to J. Kullback for

illuminating various points in statistics and estimation

theory. He also thanks P. Denning and D. Parnas for

their constructive reviews of the manuscript. The

experiment with hyperexponentials was performed at

the suggestion of P. Denning, S. Fuller, and one of the

referees.

Note added in proof. Recently, Fenton and Payne

[16] have reported on some similar simulations. They

also concluded that best-fit performs better than first-fit for various nonexponential distributions.

Received January 1974, revised December 1974

References

1. Campbell, J.A. A note on an optimal-fit method for dynamic

allocation of storage. The Computer J. 14, 1 (Jan. 1971), 7-9.

2. Wald, B. Utilization of a multiprocessor in command

control. Proc. IEEE 54, 12 (Dec. 1966), 1885-88.

3. Thompson, R., and Wilkinson, J. The D825 automatic

operating and scheduling program. Proc. AFIPS 1963 SJCC, pp.

139-146, May 1963; reprinted in S. Rosen, Programming Systems

and Language, McGraw-Hill, New York, 1967.

4. Anderson, J.P., et al. D825--A multiple-computer system

for command and control. Proc. AFIPS 1962 FJCC, pp. 86-96,

Dec. 1962; reprinted in C.G. Bell and A. Newell, Computer

Structures-Readings and Examples, McGraw-Hill, New York,

1971.

5. Wald, B. Private communication.

6. Collins, G.O. Experience in automatic storage allocation.

Comm. ACM 4, 10 (Oct. 1961), 436-440.

7. Knuth, D.E. The Art of Computer Programming, Vol. 1:

Fundamental Algorithms. Addison-Wesley, Reading, Mass.,

1968, pp. 435-452.

8. Randell, B. A note on storage fragmentation and program

segmentation. Comm. ACM 12, 7 (July 1969), 365-372.

9. Batson, A., Ju, S., and Wood, D.C. Measurements of segment

size. Comm. ACM 13, 3 (Mar. 1970), 155-159.

10. Totschek, R.A. An empirical investigation into the behavior

of the SDC timesharing system. Rep. SP2191, AD 622003,

System Development Corp., Santa Monica, Calif., 1965.

11. Margolin, B.H., Parmelee, R.P., and Schatzoff, M. Analysis

of free-storage algorithms. IBM Syst. J. 4, (1971).

12. Wilson, S. Private communication.

13. Fisz, M. Probability Theory and Mathematical Statistics.

Wiley, New York, 1963, Chap. 13.

14. Loeve, M. Probability Theory. Van Nostrand, New York,

1955, p. 159.

15. Shore, J.E. On the external storage fragmentation produced

by first-fit and best-fit allocation strategies. Naval Research

Lab. Memo. Rep. 2848, Washington, D.C., July 1974.

16. Fenton, J.S., and Payne, D.W. Dynamic storage allocation of

arbitrary sized segments. Proc. IFIP 74, North-Holland Pub. Co.,

Amsterdam, 1974, pp. 344-348.

Communications

of

the ACM

August 1975

Volume 18

Number 8