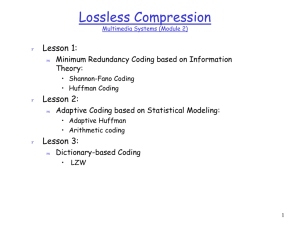

Asymmetric numeral systems

advertisement

ASYMMETRIC NUMERAL SYSTEMS

AS ACCURATE RELACEMENT FOR HUFFMAN CODING

Huffman coding

– fast, but operates on integer number of bits:

(or any prefix codes,

approximates probabilities with powers of ½,

Golomb, Elias, unary) getting suboptimal compression ratio

Arithmetic coding

(or Range coding)

– accurate probabilities, but many times slower

(needs more computation, battery drain)

Asymmetric Numeral Systems (ANS) – accurate and faster than Huffman:

we construct low state automaton optimized for given probability distribution

(also allows for simultaneous encryption)

Jarosław Duda

Warszawa, 31-01-2015

1

Huffman vs ANS in compressors (LZ + entropy coder):

from Matt Mahoney benchmarks http://mattmahoney.net/dc/text.html

Enwiki8

𝟏𝟎𝟖 bytes

26,915,461

27,111,239

27,835,431

29,758,785

30,979,376

34,907,478

36,445,248

36,556,552

40,024,854

40,565,268

encode time decode time

[ns/byte]

[ns/byte]

582

2.8

378

10

1004

31

228

30

19

2.7

24

3.5

101

17

171

50

7.7

3.8

54

31

lzturbo 1.2 –39

LZA 0.70b –mx9 –b7 –h7

WinRAR 5.00 –ma5 –m5

WinRAR 5.00 –ma5 –m2

lzturbo 1.2 –32

zhuff 0.97 –c2

gzip 1.3.5 –9

pkzip 2.0.4 –ex

ZSTD 0.0.1

WinRAR 5.00 –ma5 –m1

zhuff, ZSTD (Yann Collet): LZ4 + tANS (switched from Huffman)

lzturbo (Hamid Bouzidi): LZ + tANS (switched from Huffman)

LZA (Nania Francesco): LZ + rANS

(switched from range coding)

e.g. lzturbo vs gzip: better compression, 5x faster encoding, 6x faster decoding

saving time and energy in very frequent task

2

We need 𝒏 bits of information to choose one of 𝟐𝒏 possibilities.

For length 𝑛 0/1 sequences with 𝑝𝑛 of “1”, how many bits we need to choose one?

Entropy coder: encodes sequence with (𝑝𝑠 )𝑠=0..𝑚−1 probability distribution

using asymptotically at least 𝑯 = ∑𝒔 𝒑𝒔 𝐥𝐠(𝟏/𝒑𝒔 ) bits/symbol (𝐻 ≤ lg(𝑚))

Seen as weighted average: symbol of probability 𝒑 contains 𝐥𝐠(𝟏/𝒑) bits.

Encoding (𝑝𝑠 ) distribution with entropy coder optimal for (𝑞𝑠 ) distribution costs

Δ𝐻 = ∑𝑠 𝑝𝑠 lg(1/𝑞𝑠 ) − ∑𝑠 𝑝𝑠 lg(1/𝑝𝑠 ) =

𝑝𝑠

∑𝑠 𝑝𝑠 lg ( )

𝑞𝑠

≈

1

ln(4)

∑𝑠

(𝑝𝑠 −𝑞𝑠 )2

𝑝𝑠

more bits/symbol - so called Kullback-Leibler “distance” (not symmetric).

3

Huffman coding (HC), prefix codes:

most of everyday compression,

e.g. zip, gzip, cab, jpeg, gif, png, tiff, pdf, mp3…

example of Huffman penalty

for truncated 𝜌(1 − 𝜌)𝑛 distribution

(can’t use less than 1 bits/symbol)

Zlibh (the fastest generally available implementation):

Encoding ~ 320 MB/s

Decoding ~ 300-350 MB/s

(/core, 3GHz)

Range coding (RC): large alphabet arithmetic

coding, needs multiplication, e.g. 7-Zip, VP Google

video codecs (e.g. YouTube, Hangouts).

Encoding ~ 100-150 MB/s

tradeoffs

Decoding ~ 80 MB/s

(binary) Arithmetic coding (AC):

H.264, H.265 video, ultracompressors e.g. PAQ

Encoding/decoding ~ 20-30MB/s

ratio →

𝜌 = 0.5

𝜌 = 0.6

𝜌 = 0.7

𝜌 = 0.8

𝜌 = 0.9

𝜌 = 0.95

𝜌 = 0.99

8/H

4.001

4.935

6.344

8.851

15.31

26.41

96.95

zlibh

3.99

4.78

5.58

6.38

7.18

7.58

7.90

FSE

4.00

4.93

6.33

8.84

15.29

26.38

96.43

Huffman: 1byte → at least 1 bit

ratio ≤ 8 here

Asymmetric Numeral Systems (ANS)

tabled (tANS) - without multiplication

FSE implementation of tANS: Encoding ~ 350 MB/s Decoding ~ 500 MB/s

RC → ANS: ~7x decoding speedup, no multiplication (switched e.g. in LZA compressor)

HC → ANS means better compression and ~ 1.5x decoding speedup (e.g. zhuff, lzturbo)

4

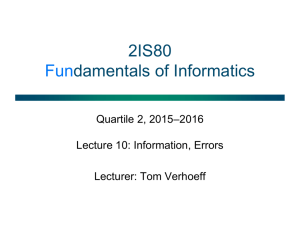

Operating on fractional number of bits

restricting range 𝑁 to length 𝐿 subrange

contains 𝐥𝐠(𝑵/𝑳) bits

number 𝑥

contains 𝐥𝐠(𝒙) bits

adding symbol of probability 𝑝 - containing 𝐥𝐠(𝟏/𝒑) bits

lg(𝑁/𝐿) + lg(1/𝑝) ≈ lg(𝐿/𝐿′ ) for 𝑳′ ≈ 𝒑 ⋅ 𝑳 lg(𝑥) + lg(1/𝑝) = lg(𝑥′) for 𝒙′ ≈ 𝒙/𝒑

5

uABS - uniform binary variant (𝒜 = {0,1}) - extremely accurate

Assume binary alphabet, 𝒑 ≔ 𝐏𝐫(𝟏), denote 𝒙𝒔 = {𝒚 < 𝒙: 𝒔(𝒚) = 𝒔} ≈ 𝒙𝒑𝒔

For uniform symbol distribution we can choose:

𝒙𝟏 = ⌈𝒙𝒑⌉

𝒙𝟎 = 𝒙 − 𝒙𝟏 = 𝒙 − ⌈𝒙𝒑⌉

𝑠(𝑥) = 1 if there is jump on next position: 𝑠 = 𝑠(𝑥) = ⌈(𝑥 + 1)𝑝⌉ − ⌈𝑥𝑝⌉

decoding function: 𝐷(𝑥) = (𝑠, 𝑥𝑠 )

its inverse – coding function:

𝑥+1

𝐶 (0, 𝑥) = ⌈1−𝑝⌉ − 1

𝑥

𝐶 (1, 𝑥) = ⌊ ⌋

𝑝

For 𝑝 = Pr(1) = 1 − Pr(0) = 0.3:

6

uABS

xp = (uint64_t)x * p0;

out[i]=((xp & mask) >= p0);

xp >>= 16;

x = out[i] ? xp : x - xp;

rABS

arithmetic coding

xf = x & mask;

//32bit split = low + ((uint64_t)

(hi - low) * p0 >> 16);

xn = p0 * (x >> 16);

out[i] = (x > split);

if (xfrac < p0)

{ x = xn + xf; out[i] = 0 } if (out[i]) {low = split + 1}

else {hi = split;}

else {x-=xn+p0;out[i] = 1 }

if (x < 0x10000) { x = (x << 16) | *ptr++;

//32bit x, 16bit renormalization, mask = 0xffff

}

if ((low ^ hi) < 0x10000)

{ x = (x << 16) | *ptr++;

low <<= 16;

hi = (hi << 16) | mask }

7

RENORMALIZATION to prevent 𝑥 → ∞

Enforce 𝑥 ∈ 𝐼 = {𝐿, … ,2𝐿 − 1} by

transmitting lowest bits to bitstream

“buffer” 𝒙 contains 𝐥𝐠(𝒙) bits of information

– produce bits when accumulated

Symbol of 𝑃𝑟 = 𝑝 contains lg(1/𝑝) bits:

lg(𝑥) → lg(𝑥) + lg(1/𝑝)

modulo 1

8

9

tABS

test all possible

symbol distributions

for binary alphabet

store tables for

quantized probabilities (p)

e.g. 16 ⋅ 16 = 256 bytes

← for 16 state, Δ𝐻 ≈ 0.001

H.264 “M decoder” (arith. coding)

tABS

𝑡 = 𝑑𝑒𝑐𝑜𝑑𝑖𝑛𝑔𝑇𝑎𝑏𝑙𝑒[𝑝][𝑋];

𝑋 = 𝑡. 𝑛𝑒𝑤𝑋 + readBits(𝑡. 𝑛𝑏𝐵𝑖𝑡𝑠);

useSymbol(𝑡. 𝑠𝑦𝑚𝑏𝑜𝑙);

no branches,

no bit-by-bit renormalization

state is single number (e.g. SIMD)

10

Additional tANS advantage – simultaneous encryption

we can use huge freedom while initialization: choosing symbol distribution –

slightly disturb 𝒔(𝒙) using PRNG initialized with cryptographic key

ADVANTAGES comparing to standard (symmetric) cryptography:

standard, e.g. DES, AES ANS based cryptography (initialized)

based on

XOR, permutations

highly nonlinear operations

bit blocks

fixed length

pseudorandomly varying lengths

“brute force”

just start decoding

perform initialization first for new

or QC attacks

to test cryptokey

cryptokey, fixed to need e.g. 0.1s

speed

online calculation

most calculations while initialization

entropy

operates on bits

operates on any input distribution

11

Entropy coder is usually the bottleneck of compression

ANS offers fast accurate processing of large alphabet without multiplication

accurate and faster replacement for Huffman coding,

- many (~7) times faster replacement for Range coding,

- faster decoding in adaptive binary case,

- can include checksum for free (if final decoding state is right),

- can simultaneously encrypt the message.

Perfect for: DCT/wavelet coefficients, lossless image compression

Perfect with: Lempel-Ziv, Burrows-Wheeler Transform

Further research:

- applications – ANS improves both speed and compression ratio,

- finding symbol distribution 𝑠(𝑥): with minimal Δ𝐻 (and quickly),

- optimal compression of used probability distribution to reduce headers,

- maybe finding other low state entropy coding family, like forward decoded,

- applying cryptographic capabilities (also without entropy coding):

cheap compression+encryption: HDD, wearables, sensors, IoT, RFIDs.

- ... ?

12