Abdullah

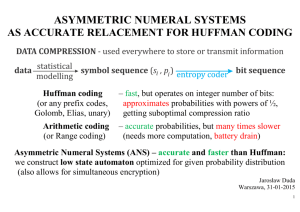

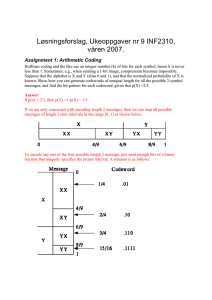

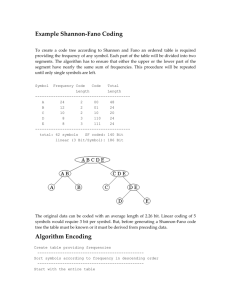

Abdullah Aldahami (11074595), April 6, 2010

Huffman coding is a simple algorithm that generates a set of variable-length codes with the minimum average length. Huffman codes are part of several data formats, such as ZIP, MPEG and JPEG. The code is generated from the estimated probability of occurrence of each symbol. Huffman coding works by building an optimal binary tree of nodes, which can be stored in a regular array.

The method starts by building a list of all the alphabet symbols in descending order of their probabilities (frequencies of appearance). It then constructs a tree from the bottom up. Step by step, the two symbols with the smallest probabilities are selected and joined under a new parent node, whose weight is the sum of the two, at the top of the partial tree. When the tree is complete, the codes of the symbols are assigned.

Example: circuit elements in digital computations

| Character | Frequency |
|---|---|
| i | 6 |
| t | 5 |
| space | 4 |
| c | 3 |
| e | 3 |
| n | 3 |
| u | 2 |
| l | 2 |
| m | 2 |
| s | 2 |
| a | 2 |
| o | 2 |
| r | 1 |
| d | 1 |
| g | 1 |
| p | 1 |

The sum of the frequencies (the number of events) is 40.

[Figure: Huffman tree for "circuit elements in digital computations", with branches labelled 0 and 1 and a root weight of 40.]

So the code is generated as follows:

| Character | Frequency | Code | Code length | Total length |
|---|---|---|---|---|
| i | 6 | 010 | 3 | 18 |
| t | 5 | 000 | 3 | 15 |
| space | 4 | 110 | 3 | 12 |
| c | 3 | 0010 | 4 | 12 |
| e | 3 | 0110 | 4 | 12 |
| n | 3 | 101 | 3 | 9 |
| u | 2 | 00110 | 5 | 10 |
| l | 2 | 01110 | 5 | 10 |
| m | 2 | 01111 | 5 | 10 |
| s | 2 | 1001 | 4 | 8 |
| a | 2 | 1110 | 4 | 8 |
| o | 2 | 1111 | 4 | 8 |
| r | 1 | 001110 | 6 | 6 |
| d | 1 | 001111 | 6 | 6 |
| g | 1 | 10000 | 5 | 5 |
| p | 1 | 10001 | 5 | 5 |

The total is 154 bits with Huffman coding, compared to 240 bits with no compression.

Input Output Optimality

| Symbol | Probability $P(x)$ | Code | Code length $L_i$ (bits) | Weighted path length $L_i \times P(x)$ | Probability budget $2^{-L_i}$ | Information of a message $I(x) = -\log_2 P(x)$ | Entropy term $-P(x) \log_2 P(x)$ |
|---|---|---|---|---|---|---|---|
| i | 0.15 | 010 | 3 | 0.45 | 1/8 | 2.74 | 0.411 |
| t | 0.125 | 000 | 3 | 0.375 | 1/8 | 3.00 | 0.375 |
| space | 0.1 | 110 | 3 | 0.3 | 1/8 | 3.32 | 0.332 |
| c | 0.075 | 0010 | 4 | 0.3 | 1/16 | 3.74 | 0.280 |
| e | 0.075 | 0110 | 4 | 0.3 | 1/16 | 3.74 | 0.280 |
| n | 0.075 | 101 | 3 | 0.225 | 1/8 | 3.74 | 0.280 |
| u | 0.05 | 00110 | 5 | 0.25 | 1/32 | 4.32 | 0.216 |
| l | 0.05 | 01110 | 5 | 0.25 | 1/32 | 4.32 | 0.216 |
| m | 0.05 | 01111 | 5 | 0.25 | 1/32 | 4.32 | 0.216 |
| s | 0.05 | 1001 | 4 | 0.2 | 1/16 | 4.32 | 0.216 |
| a | 0.05 | 1110 | 4 | 0.2 | 1/16 | 4.32 | 0.216 |
| o | 0.05 | 1111 | 4 | 0.2 | 1/16 | 4.32 | 0.216 |
| r | 0.025 | 001110 | 6 | 0.15 | 1/64 | 5.32 | 0.133 |
| d | 0.025 | 001111 | 6 | 0.15 | 1/64 | 5.32 | 0.133 |
| g | 0.025 | 10000 | 5 | 0.125 | 1/32 | 5.32 | 0.133 |
| p | 0.025 | 10001 | 5 | 0.125 | 1/32 | 5.32 | 0.133 |
| Sum | 1 | | | 3.85 | 1 | | 3.787 bit/sym |

• Entropy is a measure defined in information theory that quantifies the information content of an information source.

• Entropy indicates how successful a data compression process is: it is the lower bound on the average number of bits per symbol.

• The sum of the probability budgets across all symbols is always less than or equal to one. In this example the sum is equal to one; as a result, the code is termed a complete code.

• Here the Huffman code reaches 98.36% of the optimum: (3.787 / 3.85) × 100.

Static probability distribution (Static Huffman Coding)

Coding procedures with static Huffman codes operate with a predefined code tree, which is fixed in advance for a given type of data and is independent of the particular contents. The primary problem of a static, predefined code tree arises if the real probability distribution differs strongly from the assumed one. In that case the compression rate decreases drastically.

Adaptive probability distribution (Adaptive Huffman Coding)

The adaptive coding procedure uses a code tree that is continuously adapted to the previously encoded or decoded data, starting from an empty tree or a standard distribution. This variant needs very little header data, but the attainable compression rate is unfavourable at the beginning of the coding and for small files.
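The bottom-up construction and the optimality figures above can be checked with a short script. The following is a minimal sketch, not the author's implementation: the function names `huffman_code` and `entropy` are hypothetical, and a binary heap stands in for the sorted symbol list. Because ties between equal weights can be broken in several ways, the individual codes it produces need not match the hand-built table. On these frequencies the greedy merge should total 153 bits, one fewer than the table's 154, since the optimal total does not depend on how ties are broken.

```python
import heapq
from collections import Counter
from math import log2


def huffman_code(text):
    """Return a {symbol: bitstring} prefix code built by greedy merging."""
    freq = Counter(text)
    if len(freq) == 1:  # degenerate case: only one distinct symbol
        return {sym: "0" for sym in freq}
    # Heap entries are (weight, tie_breaker, {symbol: partial_code}).
    # The integer tie_breaker keeps heapq from ever comparing the dicts.
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w0, _, left = heapq.heappop(heap)   # smallest weight
        w1, _, right = heapq.heappop(heap)  # second smallest weight
        # Joining two subtrees prepends one bit to every code below them.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w0 + w1, next_id, merged))
        next_id += 1
    return heap[0][2]


def entropy(text):
    """Shannon entropy of the symbol distribution, in bits per symbol."""
    n = len(text)
    return -sum(w / n * log2(w / n) for w in Counter(text).values())


message = "circuit elements in digital computations"
code = huffman_code(message)
encoded = "".join(code[ch] for ch in message)
# Kraft sum of the "probability budgets" 2^(-L_i); 1.0 means a complete code.
kraft = sum(2 ** -len(c) for c in code.values())

print(len(message), "symbols encoded in", len(encoded), "bits")
print("average code length:", len(encoded) / len(message))
print("entropy:", round(entropy(message), 3), "bit/sym")
print("Kraft sum:", kraft)
```

Dividing the entropy by the average code length gives the same kind of efficiency figure as the 98.36% quoted above.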