Context - Philosophy's Unsolved Problem - IS

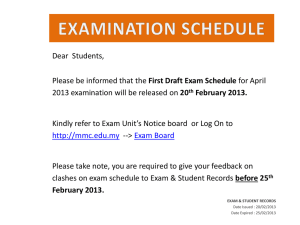
