Detection Algorithm for Night


Algorithm Overview

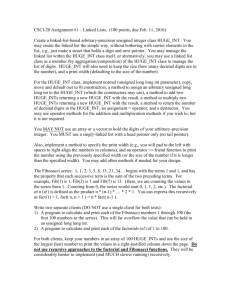

A PVC pipe with a vertical stripe of LEDs is installed. The LEDs point directly at the camera. Because the signal-to-noise ratio is high, the nighttime water level detection algorithm is quite simple. We define a region of interest (ROI) to speed up processing and avoid false positives. Figure 1 is the flow diagram of the algorithm. The output contains a processed image, the length of the ON pixels (which is converted to a normalized water level in the driving Perl script), and a confidence value, which is the line fitting error. Figure 2 shows a sample image, taken at dawn, being processed.

Figure 1: Block diagram for nighttime water level detection. Steps: Image + ROI -> binarize the image -> image cleaning (dilation and erosion) -> compute the length of ON pixels -> fit a line to the ON pixels -> compute the line fitting error -> output (processed image + raw LED length + confidence value).

Figure 2: A sample image being processed. Panels: input image with ROI superimposed; ROI being processed.

Implementation Details

We use an image I/O utility (OpenIL) to load and save images. It is freely available on the web at http://www.openil.org. The nighttime detection module is a standalone C program. Its source consists of three files: filter.C, filter.h, and night.C. night.C is the driver program; it calls the image processing functions implemented in filter.C. The following image processing functions are implemented in filter.C.

void dilation(int w, int h, unsigned char * src, unsigned char * des);

Description: Perform image dilation using a 3x3 kernel.

Prerequisite: a) the image must be a binary image; each pixel can only have two valid values, either zero or one. b) The src and des pointers cannot be the same.

Parameters: w – image width

h – image height

src – pointer to the source image

des – pointer to the destination image
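To make the dilation step concrete, here is a minimal sketch of a 3x3 binary dilation in C. It is not the code from filter.C: the name dilation_sketch and the border handling (pixels outside the image are treated as OFF) are assumptions made for this example.

```c
#include <assert.h>

/* Sketch of a 3x3 binary dilation: an output pixel is ON (1) if any pixel
 * in its 3x3 neighbourhood is ON. Pixels outside the image count as OFF
 * (an assumption; the original border handling is not documented). */
void dilation_sketch(int w, int h, unsigned char *src, unsigned char *des)
{
    assert(src != des); /* prerequisite b): no in-place operation */
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            unsigned char on = 0;
            for (int dy = -1; dy <= 1 && !on; dy++) {
                for (int dx = -1; dx <= 1 && !on; dx++) {
                    int yy = y + dy, xx = x + dx;
                    if (yy >= 0 && yy < h && xx >= 0 && xx < w &&
                        src[yy * w + xx])
                        on = 1;
                }
            }
            des[y * w + x] = on;
        }
    }
}
```

A single ON pixel in the middle of a 3x3 image grows to fill the whole image, which is why dilation closes small gaps between neighbouring LED blobs.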

void erosion(int w, int h, unsigned char * src, unsigned char * des);

Description: Perform image erosion using a 3x3 kernel.

Prerequisite: a) the image must be a binary image; each pixel can only have two valid values, either zero or one. b) The src and des pointers cannot be the same.

Parameters: w – image width

h – image height

src – pointer to the source image

des – pointer to the destination image
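The erosion step can be sketched in the same way. The flow diagram lists "dilation and erosion" as the cleaning step; applying a dilation followed by an erosion is the standard morphological closing. The code below is only an illustration: erosion_sketch is a hypothetical name, and treating pixels outside the image as OFF is an assumption.

```c
#include <assert.h>

/* Sketch of a 3x3 binary erosion: an output pixel stays ON (1) only if
 * every pixel in its 3x3 neighbourhood is ON. Pixels outside the image
 * count as OFF (an assumption), so ON pixels on the border are removed. */
void erosion_sketch(int w, int h, unsigned char *src, unsigned char *des)
{
    assert(src != des); /* prerequisite b): no in-place operation */
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            unsigned char keep = 1;
            for (int dy = -1; dy <= 1 && keep; dy++) {
                for (int dx = -1; dx <= 1 && keep; dx++) {
                    int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= h || xx < 0 || xx >= w ||
                        !src[yy * w + xx])
                        keep = 0;
                }
            }
            des[y * w + x] = keep;
        }
    }
}
```

Eroding a 3x3 block of ON pixels leaves only its centre pixel, so isolated noise pixels smaller than the kernel disappear entirely.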

void binarize(int w, int h, unsigned char * src, unsigned char * des, float threshold);

Description: Convert a gray scale image to a binary image.

Prerequisite: a) the source image must be a gray scale image.

Parameters: w – image width

h – image height

src – pointer to the source image

des – pointer to the destination image

threshold – the threshold value, normalized between 0 and 1
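The following sketch shows one way a normalized threshold could be applied. The document only says the threshold is relative; interpreting it as a fraction of the brightest pixel in the image is an assumption of this example, as is the name binarize_sketch.

```c
#include <assert.h>

/* Sketch of a relative-threshold binarization. ASSUMPTION: "relative"
 * means relative to the brightest pixel in the image; the original
 * filter.C may define it differently. */
void binarize_sketch(int w, int h, unsigned char *src, unsigned char *des,
                     float threshold)
{
    assert(threshold >= 0.0f && threshold <= 1.0f);
    int n = w * h;
    unsigned char maxv = 0;
    for (int i = 0; i < n; i++)
        if (src[i] > maxv)
            maxv = src[i];

    float cutoff = threshold * (float)maxv;
    for (int i = 0; i < n; i++) {
        /* An all-black image produces an all-OFF result. */
        des[i] = (maxv > 0 && (float)src[i] >= cutoff) ? 1 : 0;
    }
}
```

With this interpretation the cutoff scales with overall brightness, which matches the stated goal of adapting to different lighting conditions without retuning.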

void imfeature(int w, int h, unsigned char * src, FEATURES *fea);

Description: Compute some image features. Currently, only the length of the feature and the feature size (in pixels) are computed.

Prerequisite: NONE

Parameters: w – image width

h – image height

src – pointer to the source image

fea – pointer to the FEATURES structure (defined below). Only m_top, m_bottom and m_pixCnt are filled in by this function.

struct FEATURES{

int m_top, m_bottom;

int m_pixCnt;

float m_line[4];

float m_fitError;

float m_lineDir;

// to be expanded as needed;

};
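As a rough illustration of how the three filled-in fields might be computed, the sketch below scans a binary image row by row. It assumes that m_top and m_bottom are the first and last image rows containing ON pixels and that -1 marks an empty image; neither detail is stated in the original. The typedef is added so the structure can be used as FEATURES in plain C, and imfeature_sketch and feature_length are hypothetical names.

```c
#include <assert.h>

typedef struct FEATURES {
    int m_top, m_bottom; /* assumed: first/last row containing ON pixels */
    int m_pixCnt;        /* number of ON pixels */
    float m_line[4];     /* not touched by this sketch */
    float m_fitError;    /* not touched by this sketch */
    float m_lineDir;     /* not touched by this sketch */
} FEATURES;

/* Fill m_top, m_bottom and m_pixCnt from a binary (0/1) image. */
void imfeature_sketch(int w, int h, unsigned char *src, FEATURES *fea)
{
    assert(fea != 0);
    fea->m_top = -1;    /* -1 means "no ON pixel found" (assumption) */
    fea->m_bottom = -1;
    fea->m_pixCnt = 0;
    for (int y = 0; y < h; y++) {
        int rowCnt = 0;
        for (int x = 0; x < w; x++)
            if (src[y * w + x])
                rowCnt++;
        if (rowCnt > 0) {
            if (fea->m_top < 0)
                fea->m_top = y;
            fea->m_bottom = y;
            fea->m_pixCnt += rowCnt;
        }
    }
}

/* Length of the lit region in rows; 0 if nothing is lit. */
int feature_length(const FEATURES *fea)
{
    return fea->m_pixCnt > 0 ? fea->m_bottom - fea->m_top + 1 : 0;
}
```

The row span is what a driver script would convert into a water level, while the pixel count gives a quick sanity check on how much of the LED stripe is visible.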

void crop(int w, int h, unsigned char * src, int startx, int starty, int newW, int newH, unsigned char * des);

Description: Crop the image.

Prerequisite: a) 0 < startx < w, 0 < starty < h; b) the image must be a single channel image, i.e. one byte per pixel.

Parameters: w – image width

h – image height

src – pointer to the source image

startx, starty – the upper left corner of the cropped image

newW, newH – the dimensions of the cropped image

des – pointer to the cropped image
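A crop of a single channel image is a row-by-row copy. The sketch below is an illustration only; crop_sketch is a hypothetical name, and the bounds checks are additions that are slightly looser than the documented prerequisite (they accept a start coordinate of 0).

```c
#include <assert.h>
#include <string.h>

/* Copy a newW x newH window starting at (startx, starty) from a
 * one-byte-per-pixel image into des, which must hold newW * newH bytes. */
void crop_sketch(int w, int h, unsigned char *src,
                 int startx, int starty, int newW, int newH,
                 unsigned char *des)
{
    assert(startx >= 0 && starty >= 0 && newW > 0 && newH > 0);
    assert(startx + newW <= w && starty + newH <= h);
    for (int y = 0; y < newH; y++) {
        memcpy(des + y * newW,
               src + (starty + y) * w + startx,
               (size_t)newW);
    }
}
```

This is how an ROI can be extracted once, so that the later steps only touch the small cropped buffer.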

void fill(int w, int h, unsigned char * src, int startx, int starty, int newW, int newH, unsigned char * des);

Description: Update a portion of the image with the supplied pixel array.

Prerequisite: a) 0 < startx < w, 0 < starty < h; b) the image must be a single channel image, i.e. one byte per pixel.

Parameters: w – original image width

h – original image height

src – pointer to the image that will be updated

startx, starty – the upper left corner of the update region

newW, newH – the dimensions of the update region

des – pointer to the pixel array that will replace the region in the original image
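The fill operation is the inverse of a crop: it writes a small pixel array back into a region of the larger image. The sketch below keeps the parameter naming of the description (src is the image being updated, des is the replacement patch); fill_sketch is a hypothetical name and the bounds checks are additions for this example.

```c
#include <assert.h>
#include <string.h>

/* Overwrite the newW x newH region of src starting at (startx, starty)
 * with the pixels in des. Note the naming from the description: src is
 * the image being modified, des is the replacement pixel array. */
void fill_sketch(int w, int h, unsigned char *src,
                 int startx, int starty, int newW, int newH,
                 unsigned char *des)
{
    assert(startx >= 0 && starty >= 0 && newW > 0 && newH > 0);
    assert(startx + newW <= w && starty + newH <= h);
    for (int y = 0; y < newH; y++) {
        memcpy(src + (starty + y) * w + startx,
               des + y * newW,
               (size_t)newW);
    }
}
```

Used together with a crop, this lets the processed ROI be pasted back into the full frame to produce the output image.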

void MNCC(int w, int h, unsigned char * src, int pw, int ph, unsigned char * pattern, float * result);

Description: Perform normalized correlation using a custom-provided template.

Prerequisite: a) the template must have odd sizes in both dimensions; b) the image must be a single channel image, i.e. one byte per pixel.

Parameters: w – original image width

h – original image height

src – pointer to the image

pw, ph – the size of the template

pattern – pointer to the template’s array of pixels

result – pointer to a float array where the correlation score will be stored

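Algorithm:

The correlation score is computed as

$$ r^2 = \frac{\left[ n \sum I M - \left(\sum I\right)\left(\sum M\right) \right]^2}{\left[ n \sum I^2 - \left(\sum I\right)^2 \right]\left[ n \sum M^2 - \left(\sum M\right)^2 \right]} $$

where n is the number of pixels in the template, I is the image, and M is the template (model).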
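To show how the score could be evaluated, the sketch below computes the squared normalized correlation for a single template position, using the standard formula with the sums taken over the template window. It is an illustration, not the MNCC code itself: ncc_at is a hypothetical helper, it handles one centre position (cx, cy) rather than filling a whole result array, and returning 0 for a flat window or template is an assumption.

```c
#include <assert.h>

/* Squared normalized correlation between the template and the image
 * window centred at (cx, cy). Returns a value in [0, 1]; returns 0 when
 * the window or the template has no variation (assumption). */
float ncc_at(int w, int h, const unsigned char *src,
             int pw, int ph, const unsigned char *pattern,
             int cx, int cy)
{
    assert(pw % 2 == 1 && ph % 2 == 1); /* odd template size */
    int x0 = cx - pw / 2, y0 = cy - ph / 2;
    assert(x0 >= 0 && y0 >= 0 && x0 + pw <= w && y0 + ph <= h);

    double n = (double)pw * (double)ph;
    double sI = 0, sM = 0, sII = 0, sMM = 0, sIM = 0;
    for (int py = 0; py < ph; py++) {
        for (int px = 0; px < pw; px++) {
            double I = src[(y0 + py) * w + (x0 + px)];
            double M = pattern[py * pw + px];
            sI += I;
            sM += M;
            sII += I * I;
            sMM += M * M;
            sIM += I * M;
        }
    }
    double num = n * sIM - sI * sM;
    double den = (n * sII - sI * sI) * (n * sMM - sM * sM);
    if (den <= 0.0)
        return 0.0f;
    return (float)(num * num / den);
}
```

Because the score is normalized by the variance of both the window and the template, a window that is a brighter or higher-contrast copy of the template still scores 1, which is what makes the measure tolerant of lighting changes.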

float * fitLine(int w, int h, unsigned char * src, float * line);

Description: Fit the ON pixels to a line.

Prerequisite: a) the image must be a binary image; each pixel can only have two valid values, either zero or one. b) line must be an array that can hold at least four numbers.

Parameters: w – image width

h – image height

src – pointer to the source image

line – pointer to the array that will hold the line parameters. The line is expressed in a parametric form,

$$ x = t \cdot \mathrm{line}[0] + \mathrm{line}[2], \qquad y = t \cdot \mathrm{line}[1] + \mathrm{line}[3], $$

where t is a parameter.
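The original fitting method is not documented, so the sketch below swaps in a simple, clearly labelled alternative: ordinary least squares of x as a function of y, which suits a nearly vertical LED stripe. It writes the result in the parametric form described above, with direction (a, 1) and point (b, 0). The name fitLine_sketch, the unnormalized direction vector, and returning a null pointer when no line can be fitted are all assumptions of this example.

```c
#include <assert.h>

/* Fit x = a*y + b to the ON pixels by ordinary least squares and store
 * it as line = {a, 1, b, 0}, i.e. x = t*a + b, y = t*1 + 0.
 * Returns line on success, or a null pointer if the ON pixels do not
 * span at least two rows (assumption: the original may behave
 * differently). */
float *fitLine_sketch(int w, int h, unsigned char *src, float *line)
{
    assert(line != 0);
    double n = 0, sx = 0, sy = 0, syy = 0, sxy = 0;
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            if (src[y * w + x]) {
                n += 1.0;
                sx += x;
                sy += y;
                syy += (double)y * y;
                sxy += (double)x * y;
            }
        }
    }
    double den = n * syy - sy * sy;
    if (n < 2.0 || den == 0.0)
        return 0; /* slope is undefined */

    double a = (n * sxy - sx * sy) / den;
    double b = (sx - a * sy) / n;
    line[0] = (float)a;
    line[1] = 1.0f;
    line[2] = (float)b;
    line[3] = 0.0f;
    return line;
}
```

Regressing x on y rather than y on x avoids an infinite slope for a vertical stripe; a total least squares fit would be the more general choice if the stripe orientation were unknown.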

float calDist(int w, int h, unsigned char * src, float * line);

Description: Compute the distance between the ON pixels and the given line (the line fitting error).

Prerequisite: a) the image must be a binary image; each pixel can only have two valid values, either zero or one.

Parameters: w – image width

h – image height

src – pointer to the source image

line – pointer to the array that holds the line parameters, in the same format as in function fitLine.
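One plausible way to turn a fitted line into a fitting error is the mean squared perpendicular distance of the ON pixels from the line. The exact metric used by calDist is not documented, so the choice of a mean squared distance, the name calDist_sketch, and the -1 return value for degenerate input are assumptions of this sketch.

```c
#include <assert.h>

/* Mean squared perpendicular distance from the ON pixels to the line
 * x = t*line[0] + line[2], y = t*line[1] + line[3].
 * Returns -1 if there are no ON pixels or the direction is zero
 * (assumption; the original metric may differ). */
float calDist_sketch(int w, int h, unsigned char *src, float *line)
{
    assert(src != 0 && line != 0);
    double dx = line[0], dy = line[1];
    double x0 = line[2], y0 = line[3];
    double len2 = dx * dx + dy * dy;
    if (len2 == 0.0)
        return -1.0f;

    double sum = 0.0;
    long cnt = 0;
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            if (src[y * w + x]) {
                /* The 2D cross product gives the signed distance
                 * scaled by the length of the direction vector. */
                double cross = (x - x0) * dy - (y - y0) * dx;
                sum += cross * cross / len2;
                cnt++;
            }
        }
    }
    return cnt > 0 ? (float)(sum / (double)cnt) : -1.0f;
}
```

A small value means the ON pixels form a clean, straight stripe, while a large value suggests stray blobs inside the ROI, which is why such an error can serve as a confidence indicator.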

Algorithm Tuning

The only user-defined values are the ROI and the threshold used to binarize the image. The ROI is supplied as a command-line parameter, whereas the threshold can only be changed at compile time; it is defined as a constant in night.C. The default value (0.72) usually works well. Because the binarization algorithm treats the threshold as a relative value, it adapts to various lighting conditions, so changing the default is not recommended.

COMP 145 – Project #6

Flood Warning System

Team Report

April 26, 2001


Kevin Berry

Ashes Ganguly

Alex Giouzenis

Ruigang Yang

Introduction

The goal of this project is to develop a Flood Warning System that will be deployed near

Little Creek, Chapel Hill. The upstream tributaries of Little Creek flood regularly and

frequently damage Eastgate Shopping Center and Camelot Village apartments. Our client

is Stephen Tell, who already has a camera set up at Little Creek near his house. A picture

from that camera is shown below.

We developed image analysis software to detect the water level, both day and night. A web-based archive and report system is also implemented. The system administrator can customize various parameters of the detection algorithms, as well as the warning actions taken when a dangerous water level is detected. We believe we have reached our primary goal: providing a working system that is robust, easy to use, and highly customizable.

Technical Lessons Learned

Image Analysis

The core of the system is the water level detection algorithm. Our client is responsible for

the hardware setup; we have to come up with our own detection algorithm. It contains

two separate modules for daytime and nighttime respectively. Alex and Ruigang are

responsible for the design and implementation of these modules.

Stephen installed a piece of plywood, painted with alternating black and white stripes, as you can see highlighted on the right in the picture above. The stripes are slanted to prevent ambiguity from reflections (a great idea!). Basically, we have to solve a pattern-matching problem: detecting the slanted stripes in the image. We tested two methods. The first is based on statistics of the stripes, such as the slant angle and blob size. The second is based on template matching. The first method turned out to be quite susceptible to noise and variations in lighting. The template matching method, on the other hand, is quite robust. It can detect the stripes even when more than 80% of the plywood is under water. It also behaved better than expected when we rotated the image to simulate rotation of the camera: it tolerates rotations of up to +/-10 degrees. While template matching is a great method, care must be taken in selecting the template. Our experiments show that a template cropped from real images performs much better than a hand-drawn one. In the production code, we use the 13x21 template shown on the right. Reflection was one of our major concerns during the design phase. The stream flooded once, so we have some real pictures in which the fiducials were actually submerged. It turned out that reflections rarely occur.

The nighttime detection module is simpler than the daytime one. Stephen installed a vertical stripe of LEDs on a PVC pipe (highlighted on the left in the creek picture). The LEDs point directly at the camera and are the only things visible at night. We convert the image into a binary image and count the number of ON pixels. Our only concern is light refracted under water. This has not been tested because the water level has remained below the LEDs since the pipe was installed. However, we noticed that the water is very muddy even under normal conditions, so we expect it to be even less transparent when a flood occurs. (Can someone generate a ray-traced, physically based picture of muddy water for testing?)

In both modules, we use a user-defined region of interest (ROI) to speed up the

computation and avoid false positives. The ROI can be quite relaxed to reduce the

frequency of “calibration”.

The biggest shortcoming of the detection algorithm is its limited accuracy, especially in the daytime. The current code sometimes misses the lowest block on the plywood, which results in a 5-10 cm fluctuation in the reported water level. It is quite difficult to detect every block accurately, given that each block appears in the camera image as a blob no bigger than 10 x 10 pixels. Prof. Welch suggested that we use a zoom camera to get larger targets. According to our client, it is quite difficult to find a weather-resistant camera with a zoom lens, so we have to make the best use of the existing hardware. We hope the 5-10 cm resolution is good enough for a warning system. The system’s accuracy could certainly be improved by using a bigger target pattern, but this is difficult because the force of the flood water is considerable. During the semester, the target pattern had to be salvaged several times. A bigger pattern would have even more difficulty withstanding the water.

Our client wants a confidence measure for the reported water level. We spent quite some time thinking about and discussing what kind of confidence metrics we should use. The metrics we came up with are based on heuristics. We wish we had more rigorous measures.

System Integration

System integration was quite tedious. Ashes did a great job binding every piece together into a working system. Using Perl scripts was a smart choice. System configuration is all in human-readable plain text. We believe our client will like this entirely console-based interface.

One of the challenges for system integration is to make the output from daytime and

nighttime modules consistent. Right now, it is still up to the user to decide a bias and a

scale to normalize both outputs. If we had time, we could make it automatic by

examining the two outputs in more detail.
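The bias-and-scale normalization mentioned above amounts to a linear mapping per module. The sketch below illustrates the idea in C for consistency with the rest of the code, although the report states that normalization happens in the Perl driver. The Calibration type, the function name, and the example numbers in the usage note are hypothetical.

```c
#include <assert.h>

/* Hypothetical per-module calibration: level = scale * raw + bias. */
typedef struct {
    float scale;
    float bias;
} Calibration;

/* Map a raw module output (for example an LED length in pixels) to a
 * common normalized water level. */
float normalize_level(float raw, Calibration c)
{
    assert(c.scale != 0.0f); /* a zero scale would discard the reading */
    return c.scale * raw + c.bias;
}
```

For example, a night calibration of scale 0.01 and bias 0 maps a raw length of 50 pixels to a level of about 0.5; the daytime module would use its own pair of values chosen so that both modules report the same level for the same water height.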

Lessons Learned from Teamwork

We enjoy a very high level of “democracy” in our group. Typically, we divide a task into several subtasks, and everyone picks his own based on preference and capabilities. Thus, everyone on the team contributes to the task and learns something from it. This usually worked quite well. Our final product is truly a group product. Because everyone stuck to a relatively simple and well-defined interface, our system actually worked the first time we put all the pieces together. The downside is that the finished product or documents sometimes lack consistency. Our presentation in class was less than perfect primarily because of that. We assigned roles to everyone, but they were not carried out very efficiently. If we were to do this again, we would probably encourage everyone to pursue his management role more aggressively.

It also seems inevitable that a project takes as long as the deadline allows. Fortunately, our project reached its goal on time, though everyone will remember the last few hectic days.

Conclusion

Overall, we believe we have a successful project and a friendly and united team. Everyone learned something from it, from both a technical and a teamwork standpoint. We enjoyed a high level of “democracy” and discussed outstanding issues openly and frankly. We believe our final product will be used in a real environment. Of course, there is still room for improvement; a number of possible improvements are mentioned in previous sections. For the project in general, there are two things we would do differently next time. First, we would probably use a quick prototyping tool such as MATLAB to experiment with different detection algorithms first, instead of starting from scratch in C. Second, we wish the final hardware setup could have been in place earlier, to give us more real pictures to test with. It is hard to simulate refractions and reflections in pictures.