Thinking the unthinkable


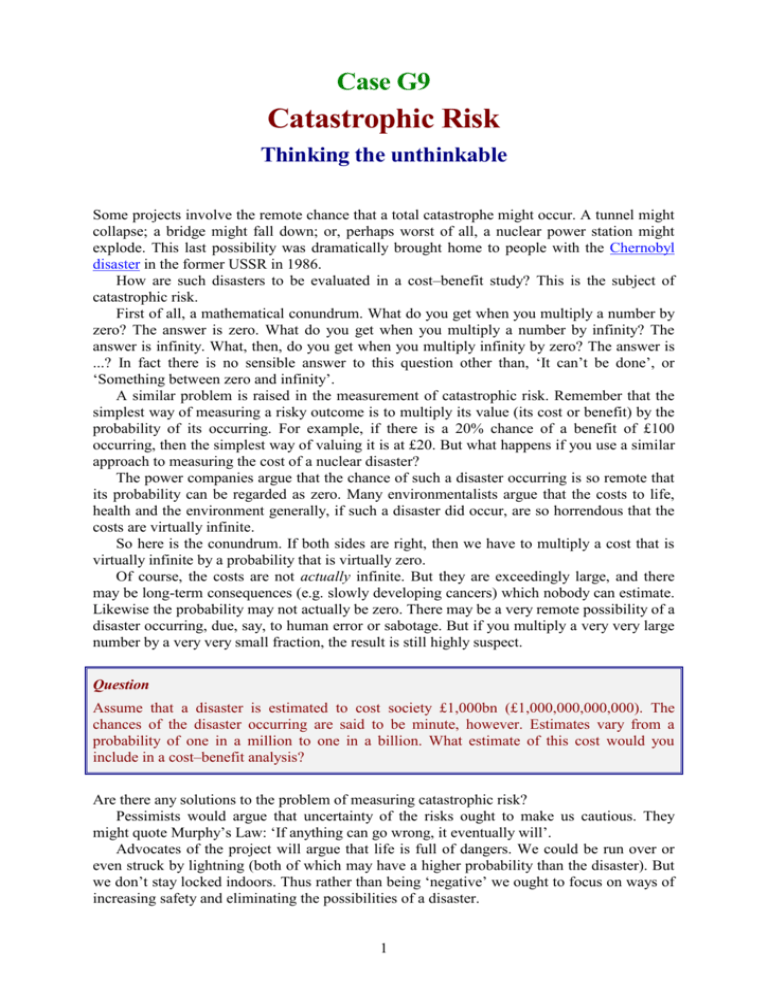

Case G9 Catastrophic Risk

Some projects involve the remote chance that a total catastrophe might occur. A tunnel might collapse; a bridge might fall down; or, perhaps worst of all, a nuclear power station might explode. This last possibility was dramatically brought home to people by the Chernobyl disaster in the former USSR in 1986. How are such disasters to be evaluated in a cost–benefit study? This is the subject of catastrophic risk.

First of all, a mathematical conundrum. What do you get when you multiply a number by zero? The answer is zero. What do you get when you multiply a positive number by infinity? The answer is infinity. What, then, do you get when you multiply infinity by zero? The answer is ...? In fact there is no sensible answer to this question other than ‘It can’t be done’ or ‘Something between zero and infinity’. Mathematicians treat zero times infinity as undefined.

A similar problem is raised in the measurement of catastrophic risk. Remember that the simplest way of measuring a risky outcome is to multiply its value (its cost or benefit) by the probability of its occurring. For example, if there is a 20% chance of a benefit of £100 occurring, then the simplest way of valuing it is at £20. But what happens if you use a similar approach to measuring the cost of a nuclear disaster? The power companies argue that the chance of such a disaster occurring is so remote that its probability can be regarded as zero. Many environmentalists argue that, if such a disaster did occur, the costs to life, health and the environment generally would be so horrendous that they are virtually infinite.

So here is the conundrum. If both sides are right, then we have to multiply a cost that is virtually infinite by a probability that is virtually zero. Of course, the costs are not actually infinite. But they are exceedingly large, and there may be long-term consequences (e.g. slowly developing cancers) which nobody can estimate. Likewise the probability may not actually be zero.
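The conundrum can be made concrete with a minimal Python sketch of the simple valuation rule (probability × value). The function name `expected_value` is a hypothetical label. The probability and cost pairs are illustrative assumptions, not figures from the case. The sketch reproduces the £20 example. It then shows what floating-point arithmetic does with a literal zero times infinity. Finally, it shows that three different "virtually zero times virtually infinite" pairs give expected costs a million-fold apart.

```python
import math


def expected_value(probability: float, value: float) -> float:
    """Simplest valuation of a risky outcome: its value weighted by its probability."""
    return probability * value


# The example in the text: a 20% chance of a £100 benefit is valued at £20.
print(expected_value(0.20, 100))  # 20.0

# Taken literally, "zero probability" times "infinite cost" has no answer.
# Python floats follow IEEE 754, which defines 0 * infinity as NaN ("not a number").
print(expected_value(0.0, math.inf))  # nan

# "Virtually zero" times "virtually infinite" can be almost anything.
# Illustrative pairs only: each combines a one-in-a-trillion probability with
# an enormous cost in pounds, yet the products differ by a factor of a million.
pairs = [(1e-12, 1e9), (1e-12, 1e12), (1e-12, 1e15)]
for p, cost in pairs:
    print(f"p = {p:g}, cost = £{cost:g} -> expected cost = £{expected_value(p, cost):g}")
```

The rule itself is trivial. The difficulty lies entirely in the inputs: small changes in how "remote" or how "horrendous" the estimates are move the answer by orders of magnitude.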
There may be a very remote possibility of a disaster occurring, due, say, to human error or sabotage. But if you multiply a very, very large number by a very, very small fraction, the result is still highly suspect.

Question

Assume that a disaster is estimated to cost society £1,000bn (£1,000,000,000,000). The chances of the disaster occurring are said to be minute, however. Estimates vary from a probability of one in a million to one in a billion. What estimate of this cost would you include in a cost–benefit analysis?

Are there any solutions to the problem of measuring catastrophic risk? Pessimists would argue that the uncertainty of the risks ought to make us cautious. They might quote Murphy’s Law: ‘If anything can go wrong, it eventually will’. Advocates of the project will argue that life is full of dangers. We could be run over or even struck by lightning (both of which may have a higher probability than the disaster). But we don’t stay locked indoors. Thus, rather than being ‘negative’, we ought to focus on ways of increasing safety and eliminating the possibility of a disaster.

But what all this says is that there is no real solution to the conundrum. There are also other questions raised by the possibility of disaster:

- What discount rate should be used for measuring the long-term effects of, say, radiation?
- Which is worse, a thousand small disasters or one disaster a thousand times bigger? (How is this relevant to the issue?)
- Should the effects on the rest of the world be included in a cost–benefit study, or just the national effects?

Question

Why are many developing countries apparently prepared to accept riskier projects than are industrialised countries?
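The arithmetic behind the £1,000bn question and the discount-rate question can be combined into one expected present value, p × C / (1 + r)^t. Here p is the probability, C the cost, r the discount rate and t the number of years before the damage is felt. This is a minimal sketch under stated assumptions. The function `expected_present_value`, the 50-year delay and the three discount rates are hypothetical choices for illustration. The case itself supplies only the £1,000bn cost and the one-in-a-million to one-in-a-billion range.

```python
def expected_present_value(cost: float, probability: float,
                           years: int, rate: float) -> float:
    """Probability-weighted cost, discounted back `years` years at annual `rate`."""
    return probability * cost / (1 + rate) ** years


COST = 1e12  # £1,000bn, the disaster cost assumed in the question

# Probability range quoted in the question: one in a million to one in a billion.
# No discounting here (damage assumed immediate).
for probability in (1e-6, 1e-9):
    ev = expected_present_value(COST, probability, years=0, rate=0.0)
    print(f"probability {probability:g}: expected cost £{ev:,.0f}")

# Hypothetical: suppose the damage (e.g. slowly developing cancers) is felt
# 50 years after the disaster. The chosen discount rate now matters a great deal.
for rate in (0.01, 0.035, 0.10):
    ev = expected_present_value(COST, 1e-6, years=50, rate=rate)
    print(f"discount rate {rate:.1%}: expected present cost £{ev:,.0f}")
```

On these assumptions the probability range alone spans £1,000 to £1m. Discounting a cost felt 50 years later at 10% shrinks it by a further factor of more than 100. The arithmetic therefore sharpens the question rather than settling which figure to include.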